So, I am using a CD player direct into a tube amp that does not have DC blocking caps at the input. My meter reads about 5.7 mV DC at the player's output. I'm wondering if this is safe for the amp and speakers, as I know the goal is no DC here at all. Is this small enough not to be a concern? It's a low-powered 15 to 20 watt amp, if that makes any difference. Hoping someone with more tech know-how than I have could enlighten me on this. Thanks so much for any comments.

5.7mV suggests that possibly a ground leak resistor is missing or open circuit. Or maybe the CD player uses a DC servo instead of an output coupling cap. In either case I would be concerned. It may be OK now, but what exactly is there to stop that 5.7mV turning into something bigger in the future?

........ but what exactly is there to stop that 5.7mV turning into something bigger in the future?

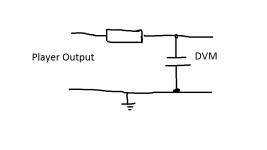

You'll often find that the low DC offset measured at the player output is in fact the meter responding to low-level HF hash/noise. If a scope isn't available as a check, then making a simple RC filter (say 47k and 1 µF) gives a more accurate DVM result.
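To put rough numbers on that filter, here is a minimal Python sketch. It assumes the 47k resistor is in series with the signal and the 1 µF cap sits across the meter (a first-order low-pass), and it assumes a 10 MΩ DVM input resistance, which is typical but not a given, so check your own meter's spec. The function names are just illustrative.

```python
import math

R = 47e3         # series resistor in ohms (value suggested in the post)
C = 1e-6         # capacitor across the meter in farads (value suggested in the post)
R_METER = 10e6   # ASSUMED DVM input resistance in ohms; check your meter

def cutoff_hz(r, c):
    """-3 dB corner frequency of a first-order RC low-pass."""
    return 1.0 / (2.0 * math.pi * r * c)

def attenuation(f_hz, r, c):
    """Fraction of an AC component at f_hz that survives the filter."""
    return 1.0 / math.sqrt(1.0 + (f_hz / cutoff_hz(r, c)) ** 2)

def dc_fraction(r, r_meter):
    """Fraction of the true DC that the meter sees (resistive divider)."""
    return r_meter / (r + r_meter)

print(f"corner frequency: {cutoff_hz(R, C):.2f} Hz")
for f in (50.0, 1e3, 20e3):
    print(f"{f:>8.0f} Hz passes {attenuation(f, R, C):.5f} of its amplitude")
print(f"DC reading is {dc_fraction(R, R_METER) * 100:.2f}% of the true offset")
```

Under those assumptions the corner sits at a few hertz, so audio-band and higher hash is heavily attenuated while the DC reading is reduced by well under one percent.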

Mooly and DF96, thanks for chiming in. Not sure what to do, as you both make good points with differing opinions. The amps (Fisher 30A's) were designed with a cap at the input, but I eliminated it during restoration. It sounds better that way, and I have been using another pair of 30A's this way for more than a decade with various sources or preamps and no problems. I've just never used this CD player before, so I thought I should test for DC first. It's an older cheap Sony from a garage sale. The "meat" of my question was whether we should only accept 0 mV DC, or whether 5.7 mV is so small as to not really matter. Good point, though, that if something was wrong in the CD circuitry, that 5.7 mV could increase in time. Thanks for your input so far.

There's no real reason to suspect the 5.7 mV would increase over time... and it's a non-issue as far as altering the grid bias is concerned.

If the Sony is all original then it's almost certainly AC coupled anyway, which brings us back to the 5.7 mV actually being an effect of measuring low-level hash/noise with a DVM. Try making a simple filter as I outlined and measure the offset again.

Good advice on the filter, thanks, will do. Am I correct that this would be the + going through the cap, with the resistor across the - and +? You did mean 1 µF, right? That might be hard to find in my "collection" of parts.

Please pardon my ignorance, I know just enough to be dangerous. But, wouldn't the cap in the test filter block the DC just like the input cap on an amp?

You test the player in isolation, i.e. not connected to anything else. It's easiest to tag the parts onto a phono plug to make up a test plug.

The cap can even be an electrolytic, 10 µF or 47 µF. Polarity doesn't matter because the offset is so low anyway.
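One practical side effect of a bigger cap is that the meter reading takes longer to settle. The sketch below assumes the 47k resistor from earlier in the thread is kept and uses the common rule of thumb that an RC network is within about 1% of its final value after five time constants. It ignores electrolytic leakage and tolerance, which is an idealisation.

```python
R = 47e3  # series resistor in ohms, ASSUMED unchanged from the earlier suggestion

# Capacitor values mentioned in the thread, in farads
CAPS = {"1 uF": 1e-6, "10 uF": 10e-6, "47 uF": 47e-6}

def settle_time_s(r, c, n_tau=5.0):
    """Time for the capacitor voltage to get within ~1% of final (n_tau * R * C)."""
    return n_tau * r * c

for label, c in CAPS.items():
    tau = R * c
    print(f"{label:>6}: tau = {tau:.3f} s, wait about {settle_time_s(R, c):.1f} s")
```

So with the larger electrolytics it is worth holding the probes in place for several seconds before trusting the number.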

Transducers are more hardy than you might be thinking.

5.7mV at amp in, say the amp gain is 10x, means 57mV at amp out. Many amps by themselves without input will already give 20mV. The cheap amps 100mV. Yet speakers still don't die.

Worry about earphones more instead. They have 100 to 1000 times less power dissipation capability, yet they survive fine on those preamp-cum-headphone outputs. One would think they would be fried by a few hundred microvolts.

tl;dr 5.7mV is no big concern. Although it does reflect on the quality of the product. The good amps should do nearly 0mV with a load (<-keyword).
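To see why offsets of this size are harmless to a speaker, the arithmetic in the post above can be extended to power. This is only a sketch: the gain of 10 is the poster's illustrative figure, the 8 ohm load is an assumption, the voice coil is treated as a pure resistance at its nominal impedance (the real DC resistance is usually somewhat lower), and it applies to a direct-coupled amp where the DC actually reaches the speaker.

```python
def output_offset_v(input_offset_v, dc_gain):
    """DC at the amp output for a given input offset and DC gain."""
    return input_offset_v * dc_gain

def dc_power_w(offset_v, load_ohms):
    """DC power dissipated in a resistive load: P = V^2 / R."""
    return offset_v ** 2 / load_ohms

LOAD_OHMS = 8.0   # ASSUMED nominal speaker impedance
GAIN = 10.0       # illustrative gain figure used in the post

v_out = output_offset_v(5.7e-3, GAIN)
print(f"5.7 mV in -> {v_out * 1e3:.0f} mV out -> {dc_power_w(v_out, LOAD_OHMS) * 1e3:.3f} mW")

# The other output offsets quoted in the post
for mv in (20.0, 100.0):
    p_mw = dc_power_w(mv * 1e-3, LOAD_OHMS) * 1e3
    print(f"{mv:.0f} mV at the output -> {p_mw:.3f} mW")
```

Even the 100 mV case works out to only about a milliwatt in the voice coil under these assumptions.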

I'm soaking it all in. Thanks, Mooly, for the mini schematic. FWIW, with a 40k load, the DC was 2.4 mV, assuming, that is, that I set up the load properly. It was 40k across the + and - of the player's output. To clarify, I was concerned about both the amps and the speakers. I will try to set up that filter after work today and test again. Thanks to all.

Feel the need to straighten out the facts on this thread.

1)Less than 50mV dc or so is completely normal & negligible offset on the (pre-blocking cap) output of an opamp or other split-supplied gain stage.

2)Your 5.7mV, as has been pointed out, is within "noise anomaly" range of a 0mV reading on any DMM. You'd have to have very short meter leads & measure from the nearest ground point to the point under test in order to get closer to a 0mV reading. If you measure from a point on your chassis to another point on the chassis at other end of unit, you'll very likely read 5mV or so of dc, just from noise, rf, etc. Remember that a DMM input is VERY high impedance, and subject to noise pickup.

3)The majority of power amps have a DC blocking cap at the input. Those that don't either have an output transformer that will block DC from the speakers, or have an LF corner cap at the terminus of the feedback loop that reduces the amp's gain at DC to unity. Only a wildly incompetent direct-coupled power amp design has gain at DC, unless it's designed to be a DC motor driver. So, even if you had several HUNDRED mV of DC on your DAC or preamp output, it would not upset a darn thing in the system.

4)If you were reading a distinct negative voltage offset after the dc blocking caps in unmuted, playing mode, or a distinct positive offset in mute mode, I would expect to find defective muting transistors leaking it to the line. Very common with the ubiquitous 2SC2878 used by lots of makers for muting.
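Point 3's feedback-loop cap can be illustrated with an idealised model. The sketch below assumes a non-inverting stage with very high open-loop gain, a feedback resistor `r_f`, and a shunt leg made of `r_g` in series with the cap `c` to ground. The component values are hypothetical and chosen only for illustration; they are not taken from any amp in this thread. In this topology the closed-loop gain falls to 1 at DC while staying at 1 + r_f/r_g in the audio band.

```python
import math

def closed_loop_gain(f_hz, r_f, r_g, c):
    """|gain| of an ideal non-inverting stage whose shunt leg is r_g in series with c."""
    if f_hz == 0:
        return 1.0  # the cap is open at DC, so the stage is a unity-gain follower
    z_shunt = complex(r_g, -1.0 / (2.0 * math.pi * f_hz * c))
    return abs(1.0 + r_f / z_shunt)

# HYPOTHETICAL values, for illustration only
R_F = 22e3    # feedback resistor, ohms
R_G = 1e3     # shunt resistor, ohms
C_FB = 100e-6 # feedback-leg capacitor, farads

for f in (0.0, 1.0, 20.0, 1e3):
    print(f"{f:>7.1f} Hz: gain = {closed_loop_gain(f, R_F, R_G, C_FB):.2f}")
```

With these made-up values the midband gain is 23, but a DC offset at the input would appear at the output unamplified.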

- How much DC can be at a CD player output without harm to amp/speakers, etc.