Without qualitative analysis of the data, no judgement of audibility/transparency can be made. Simple ripples in frequency response of tiny fractions of a dB due, for instance, to the anti-aliasing/imaging filters in the different chip sets could cause -60dB errors.

Really? Can you give us an example of such tiny dB filter ripple in the audio band giving rise to -60dB correlation difference?

Any real speaker/headphone is orders of magnitude worse in this regard. If you put a dummy head at your favorite spot, played just about any piece of music and fed the original and the recording to diffmaker, you would essentially get garbage.

But this isn't how the tests on Gearslutz were created - the analogue output was taken directly from the DAC's output - no headphones/speakers or any transducers involved.

What I believe you're saying here is that room responses & reflections will deviate greatly from the input signal - no disagreement here but relevance?

It looks like you are describing a theoretical point of view about how some of human cognition works. As a theory, it seems significantly at odds with much of cognitive psychology and neuroscience research, perhaps fatally so. In particular, human cognition is not nearly as linear as your theory seems to suppose and require.

Beyond that, there are other problems with some of the claims in the above quote. For one example, even if you were in the same room with the producer when he printed it, you almost certainly wouldn't hear the exact same way the producer does. Maybe he has perfect pitch, and other ways of hearing that you don't hear in the same way. That is, being exposed to the same sound waves and hearing the same thing as someone else are two different things.

Also, it's not clear what distortion less than -40dB means. THD? Any kind of THD? What kind of source material? Any kind of source material?

In addition, it's not clear that ABX is reliable for finding low level limits. It's not the only way to do blind testing, and I haven't seen any research showing it to be the most sensitive or no less sensitive than any of the others. That being the case, nobody really knows how sensitive it is. Of course, that doesn't stop people from thinking up reasons in support of the proposition. But, that type of reasoning is often a poor substitute for careful application of the scientific method, particularly so in the area of medical research, which hearing research is.

In short, there are multiple problems with the theory as stated. Probably more than one of the problems is fatal to the overall point being expressed, IMHO.

Mark, it gives a ballpark, and no one really cares (nor is there really any motivation *to* care) just *exactly* where that limitation is. Taking Matt to task for all your frustration about ABX testing is a bit unfair, for what was a pretty off-the-cuff comment and not a fully fleshed-out proposal. -40 dB and not worrying about -100 dB gives him a giant fudge factor of 1000x to work with; let's not lose sight of the forest for the trees.

This is a good question & helps to delve into the workings of auditory perception. Maybe you can tell us how you subsequently learned to view images without the anomaly being the foremost focus of your attention?

But before you do, why would you train yourself to spot visual anomalies that 99% of people cannot see? When you say it helped to complete the job in hand, do you mean that there was a need for spotting this & presumably eliminating it because without so doing it would interfere with most people's perception of the overall image even though they couldn't spot the exact anomaly you can?

As I said, it was for a job... I was working on compression algorithms... Too much close study of the materials looking for artefacts I guess. Sure, probably anyone could train themselves to see these things - you can train yourself to see the frame rate at the cinema* - but why would you do it?! It ruins the enjoyment.

I was helped to learn to ignore the acquired "hypersensitivity" as it were by learning to allow conscious processes to take a sort of back seat. This was some years ago, and was a point of academic interest to a neuroscientist in my extended family who was happy to play with a guinea pig... Probably helps that his missus is a clinical psychologist!

*There's an interesting sideline here, in that some people find high frame rate (48fps) cinema unpleasant to watch. There are some interesting papers on this; I'll try and find them...

Can you give us an example of such tiny dB filter ripple in the audio band giving rise to -60dB correlation difference?

One could just as well ask if you could give an example of two data converters, each measuring at less than -100dB total distortion (maybe less than -120dB) by itself, giving rise to -60dB nonlinear distortion in one pass?

Again, something isn't right here and we don't know what it is. Asking leading questions like a lawyer or politician is more suited to debate than scientific inquiry.

Also, you may know from experience that different data-conversion anti-alias filters are often audibly different. No surprise there.

Thank you for the reply & the bit I highlighted is exactly what I'm saying is the difference between conscious, analytical listening mostly used in ABX testing & the everyday listening normally engaged in, as was stated on page 1 of this thread.

Most people can switch between these two forms of listening & do so in the normal course of auditioning equipment, but being required to listen purely analytically, as in ABX testing, is difficult without training.

Mark, it gives a ballpark, and no one really cares (nor is there really any motivation *to* care) just *exactly* where that limitation is. Taking Matt to task for all your frustration about ABX testing is a bit unfair, for what was a pretty off-the-cuff comment and not a fully fleshed-out proposal. -40 dB and not worrying about -100 dB gives him a giant fudge factor of 1000x to work with; let's not lose sight of the forest for the trees.

Understood. I would invite Matt to rephrase the point he would like to make if he wants. He might have use for making more or less the same point some time again, and maybe this would be a good time to test it out.

That being said, I am not aware of trying to take out frustration in that particular instance. Not impossible though.

Really? Can you give us an example of such tiny dB filter ripple in the audio band giving rise to -60dB correlation difference?

What I believe you're saying here is that room responses & reflections will deviate greatly from the input signal - no disagreement here but relevance?

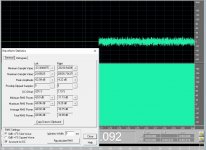

First one is easy: I took two identical broadband noise signals (~-10dB, L and R in the picture). I amplified half of the top one by only 0.01dB and replaced the top channel (L) with the difference. The R channel is drawn off scale here, but the waveform statistics show the difference is identically 0 in the first half, and the 0.01dB difference in the second half corresponds to a -59dB null. So a ±0.01dB simple amplitude variance can account for a ~-60dB null.
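For anyone who wants to check the arithmetic without an audio editor, here is a minimal Python sketch (standard library only). It assumes white Gaussian noise in place of the original broadband signal and applies the 0.01dB gain to the whole copy rather than to half of it; `rms` and `null_depth_db` are made-up helper names, not DiffMaker functions.

```python
import math
import random

def rms(samples):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def null_depth_db(reference, test):
    """Level of the difference (test - reference) relative to reference, in dB.
    Returns -inf for a perfect null."""
    residual = [t - r for r, t in zip(reference, test)]
    level = rms(residual)
    if level == 0.0:
        return float("-inf")
    return 20 * math.log10(level / rms(reference))

random.seed(1)
# White Gaussian noise at roughly -10 dBFS RMS (assumed test level).
noise = [random.gauss(0.0, 10 ** (-10 / 20)) for _ in range(48000)]

gain = 10 ** (0.01 / 20)             # +0.01 dB level change
louder = [gain * s for s in noise]

print(null_depth_db(noise, noise))             # -inf: identical copies null perfectly
print(round(null_depth_db(noise, louder), 1))  # -58.8
```

The null depth here depends only on the gain, 20*log10(10^(0.01/20) - 1), not on the signal, which is why any broadband source gives the same ~-59dB.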

My second point is if the difference could be accounted for by benign .01dB or so frequency response differences how would you resolve that from the several dB ripples in any real listening environment?

I hope my picture is clear: the waveform statistics are on the second half of the signal; the first half is just there for completeness, and there the subtraction yields exactly 0 (a perfect null). It's just math, BTW. Rule of thumb: 10% ≈ 1dB, so -60dB = 0.1% ≈ 0.01dB.
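The rule of thumb can be made exact. A sketch, assuming the only difference is a pure broadband level mismatch; the function names are illustrative. Going the other way, a -60dB null corresponds to a level mismatch of about 0.0087dB.

```python
import math

def null_db_from_gain_error(gain_error_db):
    """Null depth (dB) left by a pure broadband level mismatch of gain_error_db."""
    return 20 * math.log10(abs(10 ** (gain_error_db / 20) - 1))

def gain_error_from_null_db(null_db):
    """Upward level mismatch (dB) that, acting alone, leaves a null of null_db."""
    return 20 * math.log10(1 + 10 ** (null_db / 20))

print(round(null_db_from_gain_error(0.01), 1))  # -58.8
print(round(gain_error_from_null_db(-60), 4))   # 0.0087
print(f"{10 ** (-60 / 20):.1%}")                # 0.1%
```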

mmerrill99 said: I'm not saying Diffmaker is perfect but those who live by measurements have never explained what the problem is that leads to such reported differences between input & output of <-60dB for supposedly transparent devices.

I am unclear what it is that puzzles you. Unless devices are perfect there will be differences. These differences may be measurable without being audible. Minor wiggles in frequency response are a likely explanation. -60dB difference could mean a 0.009dB response peak; unlikely to be audible?

Does learning to hear, what are at best tiny and very hard to measure differences, result in an improved listening experience?

In my experience, yes.

dave

OK, it seems the weakness of such null testing is that it only shows the worst performance figure, irrespective of where that arises from, & thus it can mask audible distortions which are below this headline result. So if the digital output filter is where the largest difference is being recorded, it will define the headline result & every other difference below it is hidden.
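One way to put numbers on the masking argument, assuming the residual components are uncorrelated so their powers simply add (a simplification; real residual components need not be independent). `combined_null_db` is a hypothetical helper name.

```python
import math

def combined_null_db(*component_dbs):
    """Overall null depth when the residual components are mutually
    uncorrelated, so that their powers simply add."""
    return 10 * math.log10(sum(10 ** (c / 10) for c in component_dbs))

print(round(combined_null_db(-60), 2))       # -60.0
print(round(combined_null_db(-60, -80), 2))  # -59.96: a -80 dB component barely moves it
```

A component 20dB below the largest one shifts the headline figure by only about 0.04dB, so the single number says almost nothing about it.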

Probably best illustrated by Archimago's example of his use of Diffmaker, where both 192kbps MP3 & NOS outputs give the same correlation depth of about 50dB but sound very different from one another: Archimago's Musings: PROTOCOL: [UPDATED] The DiffMaker Audio Composite (DMAC) Test.

Probably best illustrated by Archimago's example of his use of Diffmaker, where both 192kbps MP3 & NOS outputs give the same correlation depth of about 50dB but sound very different from one another

Another vote to try multitone tests: they inherently reject "linear" (still hate this term) distortion, which always lies at the frequencies in the stimulus, and isolate the non-linear distortions, which are probably more objectionable when present at the same levels.

For this test you might try the standard 32-tone multitone waveform with all clocks synchronously tied to the waveform; then linear and nonlinear distortions are almost completely separable by removing the 32 stimulus FFT bins from the output. This is equivalent in some ways to a diffmaker null, but with no crosstalk between the linear and nonlinear effects.
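A rough sketch of the multitone idea in Python (standard library only, so slow but self-contained). The 32 tone bins and Newman-style phases below are my own hypothetical choices, not the "standard" waveform mentioned above, and everything is generated in one synthetic clock domain, i.e. perfectly synchronous. A 0.01dB level change, which would null at about -59dB, leaves essentially nothing outside the stimulus bins, while a mild cubic term does. With this evenly spaced grid, difference products such as f1+f2-f3 fall back onto tone bins and are counted as "linear", so the figure understates the total distortion.

```python
import math

def dft_bin(x, k):
    """Complex DFT coefficient X[k] of the real sequence x."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
    im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
    return complex(re, im)

def multitone(n, bins):
    """Sum of equal-amplitude cosines, each an integer number of cycles per
    n-sample record. Newman-style phases keep the crest factor moderate."""
    m = len(bins)
    phases = [math.pi * j * j / m for j in range(m)]
    return [sum(math.cos(2 * math.pi * k * i / n + p) for k, p in zip(bins, phases)) / m
            for i in range(n)]

def residual_db(y, tone_bins):
    """Energy of y outside the stimulus bins, relative to its total energy (dB)."""
    n = len(y)
    total = sum(v * v for v in y)
    # Parseval: a real tone in bin k (0 < k < n/2) also occupies bin n-k,
    # so its time-domain energy is 2*|Y[k]|^2 / n.
    in_tones = sum(2 * abs(dft_bin(y, k)) ** 2 / n for k in tone_bins)
    return 10 * math.log10(max(total - in_tones, 1e-30 * total) / total)

N = 1024
TONE_BINS = [3 + 13 * j for j in range(32)]  # hypothetical 32-tone set, all < N/2

x = multitone(N, TONE_BINS)
level_error = [10 ** (0.01 / 20) * v for v in x]  # "linear": 0.01 dB gain change
cubic = [v + 0.01 * v ** 3 for v in x]            # non-linear: mild cubic term

print(residual_db(level_error, TONE_BINS))  # far below -100 dB: invisible here
print(residual_db(cubic, TONE_BINS))        # distortion products off the tone bins
```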

Another vote to try multitone tests: they inherently reject "linear" (still hate this term) distortion, which always lies at the frequencies in the stimulus, and isolate the non-linear distortions, which are probably more objectionable when present at the same levels.

Sure, can you give us the typical range of IMD levels you found with your multitone tests on DACs without naming any - just a typical range ?

Edit: I see you edited after I posted. Still interested in the typical range of IMD you found

When you say all clocks synchronously tied to the waveform, can you explain what "tied to the waveform" means?

Sorry I didn't compile the results in terms of total rms noise floor so right now I would be speculating wildly. I'm willing to give a Scarlett 2i2 a try for at least one data point.

By synchronous I mean the exact same clock for the DAC and A/D. When you use, for instance, two different USB sound devices with separate clocks, the tones (usually) no longer fall exactly in one FFT bin, and in gross cases they can be way off. For instance, I have a sound card that recorded a 1kHz sine from a lab-standard generator as 1006Hz, but only at the 44.1kHz sampling rate; the clock divider was flawed. Just another point that, as Mark says, you have to question results when on the face of it they make no sense. Remember, the data sheets for DACs and A/Ds stress only the non-linear distortions, so when both reproduce output to input at -100dB levels, the -60dB null makes no sense. It's entirely possible that the GS tests are of no practical use; this must be on the table.

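EDIT - By tied to the waveform I mean you pick frequencies that are an exact multiple of the FFT bin spacing (the sampling frequency divided by the FFT length), so every tone completes a whole number of cycles in the record. I simply prefer to use 96K-point FFTs so every bin falls on a whole number of Hz (1Hz spacing at a 96kHz sample rate).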
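A small sketch of both points, under my own assumptions (a 4096-point record, a tone in bin 100, a single rectangular-window DFT bin). `coherent_frequency` snaps a test frequency onto a bin; `leakage_db` shows how much energy escapes the nominal bin when the A/D clock differs from the DAC clock by 50 ppm or by 0.6% (the size of the 1006Hz error above). Function names are illustrative only.

```python
import math

def coherent_frequency(target_hz, fs, n):
    """Nearest frequency to target_hz that completes a whole number of cycles
    in an n-point record at sample rate fs, i.e. sits exactly on an FFT bin."""
    return round(target_hz * n / fs) * fs / n

def leakage_db(k, n, clock_ratio):
    """Energy outside bin k, relative to total (dB), when the DAC plays a tone
    exactly on bin k but the A/D clock runs at clock_ratio times the DAC's rate."""
    x = [math.sin(2 * math.pi * k * i / (n * clock_ratio)) for i in range(n)]
    # Single-bin DFT (magnitude only, so the sign convention does not matter).
    re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
    in_bin = 2 * (re * re + im * im) / n   # bins k and n-k together (Parseval)
    total = sum(v * v for v in x)
    return 10 * math.log10(max(total - in_bin, 1e-30 * total) / total)

print(coherent_frequency(1000.3, 96000, 96000))  # 1000.0
for ratio in (1.0, 1 + 50e-6, 1.006):            # shared clock, 50 ppm, 0.6 %
    print(ratio, round(leakage_db(100, 4096, ratio), 1))
```

With a shared clock the leakage is down at rounding-error level; even a 50 ppm mismatch spills on the order of -40dB of the tone's energy into neighbouring bins with this window.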

Thanks

Yea, I got the synchronous bit, I just didn't know if "tied to waveform" was significant?

Thank you for the reply & the bit I highlighted is exactly what I'm saying is the difference between conscious, analytical listening mostly used in ABX testing & the everyday listening normally engaged in as was stated on page 1 of this thread.

Most people can switch between these two forms of listening & do so in the normal course of auditioning equipment, but being required to listen purely analytically, as in ABX testing, is difficult without training.

Ok, I see where you are going, but no, what I'm saying does not support your argument. Rather different cases.

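EDIT - By tied to the waveform I mean you pick frequencies that are an exact multiple of the FFT bin spacing (the sampling frequency divided by the FFT length), so every tone completes a whole number of cycles in the record. I simply prefer to use 96K-point FFTs so every bin falls on a whole number of Hz (1Hz spacing at a 96kHz sample rate).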

OK, but this gets complicated - don't the tone frequencies need to be arranged so that their IMD products don't fall on other tones & therefore get hidden? Sorry if this is OT.
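Whether products land on stimulus bins can be checked mechanically. A toy sketch with hypothetical three-tone sets, aliasing ignored, listing which stimulus bins are hit by second- or third-order products (harmonics included). An evenly spaced set collides; an irregular one can avoid it.

```python
from itertools import combinations_with_replacement, product

def collisions(tone_bins, order):
    """Stimulus bins hit by harmonic/intermodulation products of the given order
    (sums of `order` tones with +/- signs). Aliasing is ignored."""
    tones = sorted(set(tone_bins))
    hits = set()
    for combo in combinations_with_replacement(tones, order):
        for signs in product((1, -1), repeat=order):
            terms = [s * t for s, t in zip(signs, combo)]
            if any(-t in terms for t in terms):
                continue  # e.g. a + b - b is just tone a again, not a new product
            f = abs(sum(terms))
            if f in tones:
                hits.add(f)
    return hits

print(sorted(collisions([10, 20, 30], 2)))                        # [10, 20, 30]
print(collisions([10, 23, 41], 2) | collisions([10, 23, 41], 3))  # set()
```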

In other words, if the files differ mostly by a small timing offset, that's not the same as if they differ mostly due to distortion.

DiffMaker does time-align the two files under test to minimize differences.

Jan

It time-aligns as best it can, but is this based on some sample period of analysis between the two tracks? If the tracks drift in time during the sample being analyzed, does it dynamically align them? That would be wrong IMO, as it could also hide other timing differences that are not due to clock drift.
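For reference, the simplest form of time alignment picks the integer lag that maximises the cross-correlation over the whole file. The sketch below does only that; it is not a description of DiffMaker's actual method, and a single global lag like this cannot follow clock drift within the file. `best_lag` is a hypothetical name.

```python
import random

def best_lag(reference, test, max_lag):
    """Integer lag L (in samples) maximising sum(reference[i] * test[i + L])."""
    def xcorr(lag):
        return sum(r * test[i + lag]
                   for i, r in enumerate(reference)
                   if 0 <= i + lag < len(test))
    return max(range(-max_lag, max_lag + 1), key=xcorr)

random.seed(2)
ref = [random.gauss(0.0, 1.0) for _ in range(2000)]
delayed = [0.0] * 5 + ref[:-5]      # the same signal arriving 5 samples late
lag = best_lag(ref, delayed, 20)
aligned = delayed[lag:]             # drop the leading offset
print(lag)                                            # 5
print(max(abs(a - r) for a, r in zip(aligned, ref)))  # 0.0
```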

I reckon it's probably best to use synchronous clocking as Scott says & turn off timing compensation in Diffmaker.

Minor wiggles in frequency response are a likely explanation. -60dB difference could mean a 0.009dB response peak; unlikely to be audible?

The blurb on the Diffmaker download page leads me to believe it compensates for frequency response anomalies.

Under the heading 'What can Audio Diffmaker do?' it says, inter alia :

Measure the frequency response of the equipment being tested and apply it so the effects of linear frequency response can be removed from the testing.
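As a minimal illustration of removing a linear difference before subtracting, here is only the simplest, broadband case: a single least-squares gain. This is an assumption-laden sketch, not DiffMaker's algorithm, which per the blurb measures a full frequency response rather than one gain value; the helper names are made up.

```python
import math
import random

def rms(samples):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def ls_gain(reference, test):
    """Scalar g minimising sum((test - g * reference) ** 2)."""
    return sum(t * r for t, r in zip(test, reference)) / sum(r * r for r in reference)

def null_db(reference, test):
    """Residual (test - reference) relative to reference, in dB, floored at -300 dB."""
    residual = [t - r for r, t in zip(reference, test)]
    return 20 * math.log10(max(rms(residual), 1e-15 * rms(reference)) / rms(reference))

random.seed(3)
ref = [random.gauss(0.0, 0.3) for _ in range(48000)]
test = [10 ** (0.01 / 20) * v for v in ref]   # 0.01 dB louder copy

g = ls_gain(ref, test)
print(round(null_db(ref, test), 1))                 # -58.8 with no level matching
print(null_db([g * v for v in ref], test) < -100)   # True once the gain is matched
```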
