I still don't understand why DAWs like Cubase can do that, though.

They will almost certainly use the ASIO driver.

I couldn't find which DSP system to select in VituixCAD when using it with CamillaDSP, but it should be "Generic", right?

https://kimmosaunisto.net/Software/VituixCAD/VituixCAD_help_20.html#Options_Frequency_responses


Generic:

- Adjustable sample rate with list box.
- BW of Peak/notch filter is between dBgain/2.
- Frequency parameter of Shelving LP/HP filter is dBgain/2.
- Bessel LP/HP is phase-normalized: phase@fc = pi/4*order; the -3 dB point depends on order.
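The "dBgain/2" wording matches the definitions in Robert Bristow-Johnson's Audio EQ Cookbook. There, a peaking filter's bandwidth is measured between the points where the gain (in dB) is half the peak gain.

The Python sketch below assumes the cookbook peaking formula; whether VituixCAD's "Generic" mode and CamillaDSP use exactly these formulas should be checked in their own docs. It computes the biquad coefficients for a boost and finds those half-gain points numerically. `peaking_biquad`, `gain_db_at` and `half_gain_freq` are hypothetical helper names.

```python
import cmath
import math


def peaking_biquad(f0, gain_db, q, fs):
    """Peaking EQ biquad per the RBJ Audio EQ Cookbook, normalized to a0 = 1."""
    amp = 10 ** (gain_db / 40)  # cookbook "A": square root of the linear gain
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def gain_db_at(b, a, f, fs):
    """Magnitude response in dB at frequency f (Hz)."""
    zi = cmath.exp(-2j * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    num = b[0] + b[1] * zi + b[2] * zi * zi
    den = a[0] + a[1] * zi + a[2] * zi * zi
    return 20 * math.log10(abs(num) / abs(den))


def half_gain_freq(b, a, fs, f_peak, f_edge, target_db):
    """Bisect between the peak and f_edge (0 Hz or Nyquist); assumes a boost."""
    inside, outside = f_peak, f_edge
    for _ in range(60):
        mid = (inside + outside) / 2
        if gain_db_at(b, a, mid, fs) > target_db:
            inside = mid  # still within the bell
        else:
            outside = mid
    return (inside + outside) / 2


fs = 48000
b, a = peaking_biquad(1000, 6.0, 2.0, fs)
lower = half_gain_freq(b, a, fs, 1000, 0.0, 3.0)
upper = half_gain_freq(b, a, fs, 1000, fs / 2, 3.0)
print(round(gain_db_at(b, a, 1000, fs), 6))  # 6.0
print(f"+3 dB points: {lower:.1f} Hz and {upper:.1f} Hz")
```

With Q = 2 and a +6 dB boost at 1 kHz, the +3 dB points land at roughly 780 Hz and 1280 Hz. These are the edges of the bandwidth under the "between dBgain/2" convention.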

Hi, maybe I can help you by linking my post on Audio Science Review. I managed to display all the separate channels of my device as one multichannel interface.

Thanks @phofman!

I'm planning to use my UltraLite's loopback, so software loopback won't be a problem.

I found that it actually works if I type in the channel name that the audio interface provides like S/PDIF OUT 1-2(Ultralite-mk5). That's a good start.

However, this method only lets me use two channels (one stereo pair) at a time, for example Line Out 1-2, Line Out 3-4, Line Out 5-6, etc. It also doesn't seem to let me specify multiple playback devices.

What I was hoping for was to address the entire device at once and use all 16 inputs and 18 outputs. Apparently the tutorials using the Raspberry Pi do this with a device name like hw:Ultralitemk5. Your link also shows a 2-channel application, so I'm stuck again 😢

It requires a specific setup in Windows.

https://audiosciencereview.com/forum/index.php?threads/camilladsp-and-rme-interfaces.35838/

Gr Marcel

I have a parametric EQ running in CamillaDSP on moOde. My DAC won't accept anything above 96 kHz, so I want to add a resampler so that everything comes out at 48 kHz. I looked but couldn't find how to do this. Can someone help me with this? Here is my existing filter:


Resampling is defined under the Device tab, i.e. it's not a "filter"...

My poor phrasing. That was where I was looking, but I couldn't work out the proper settings. Will they still show in the yml file?

At the top of the Device page there is a pulldown menu where you set the output you want. Then, in the Resampling section below it, you define the properties of the resampler. Read about them in the manual:

https://github.com/HEnquist/camilladsp?tab=readme-ov-file#resampling


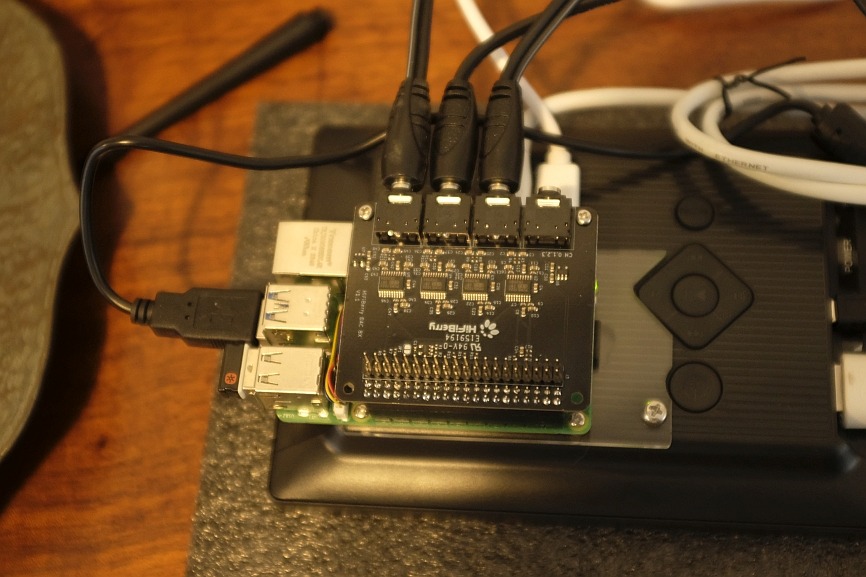

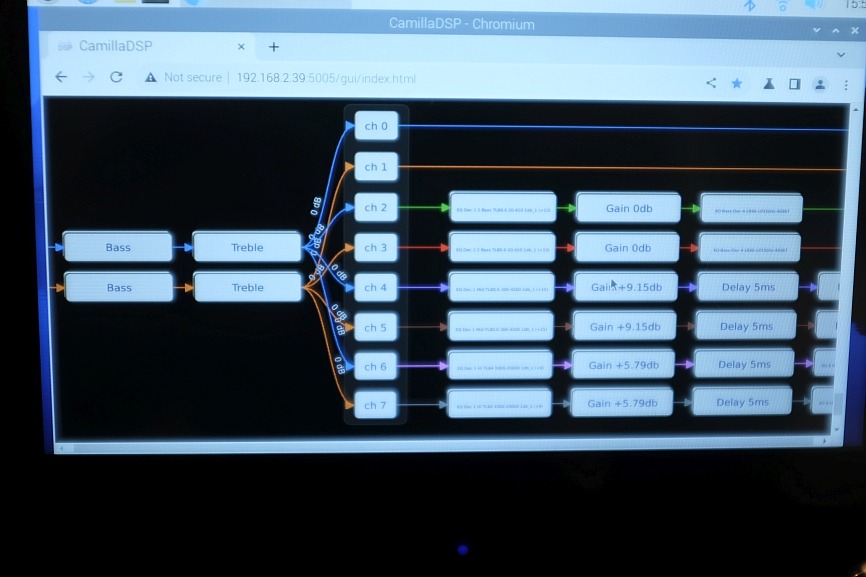

HiFiBerry DAC8X on Raspberry Pi 5 with CamillaDSP and touchscreen.

I put this together as an experiment to show that a full DSP could be homebuilt with a Raspberry Pi mounted to a touchscreen for control.

This shows the DAC8X mounted on the RPi5. The screen is an Elecrow 7" (I need to invert the RPi so the cables come out of the top; I will hunt down some right-angled 3.5 mm plugs). The screen works like a phone screen: pinch out to zoom in, pinch in to shrink, drag with a finger, etc.

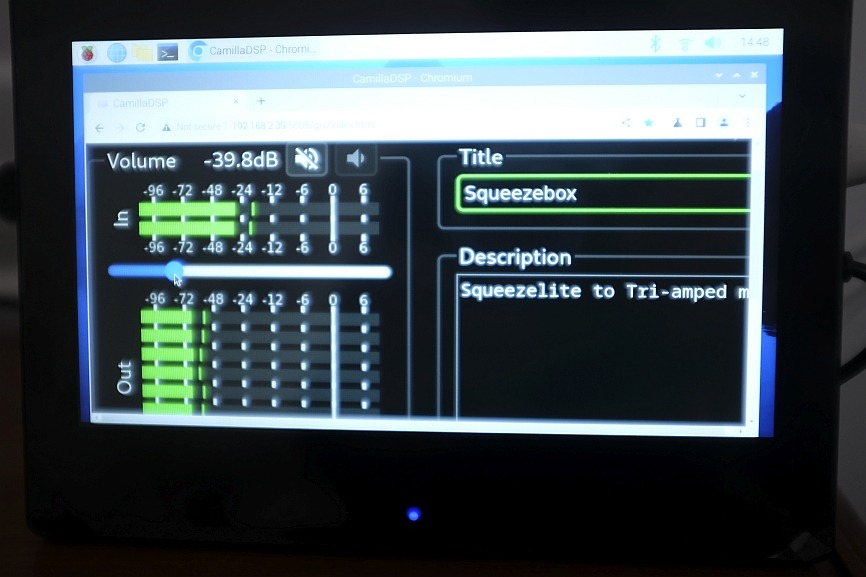

Screen pinched out to show volume control slider.

The zoom controls make the slider easy to control. Selecting other configs is also easy after opening the Files tab and zooming.

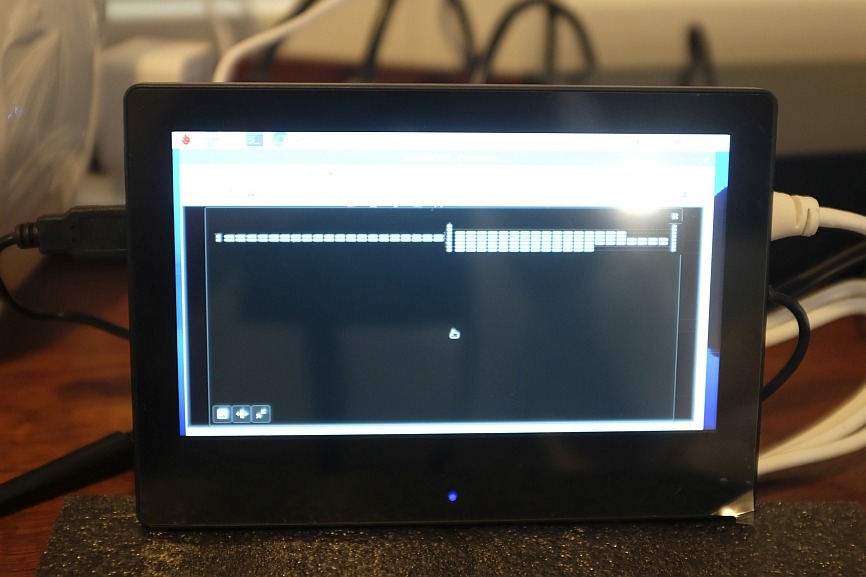

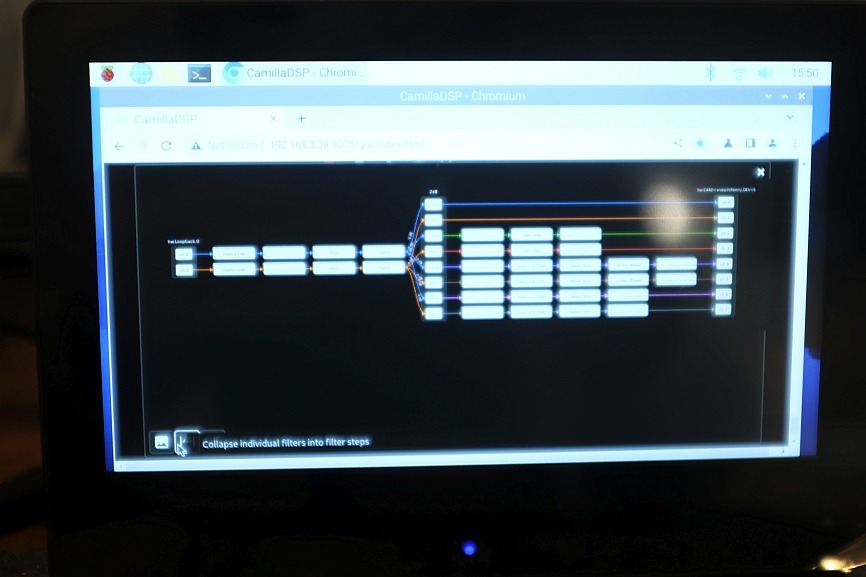

These pics show the pipeline, the pipeline collapsed, and the pipeline pinched out.

For setup I used a Logitech K400r keyboard, and bookmarked the web addresses I use frequently in the browser. I have not bothered with a "virtual" keyboard yet.

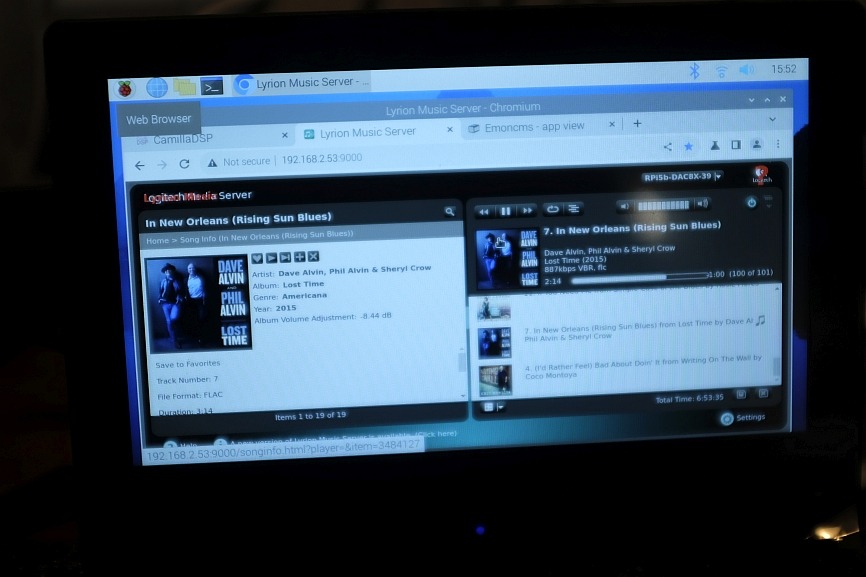

This demo was using Squeezelite as a streamer. A USB audio interface such as a Motu M4 could be added to provide analog input.

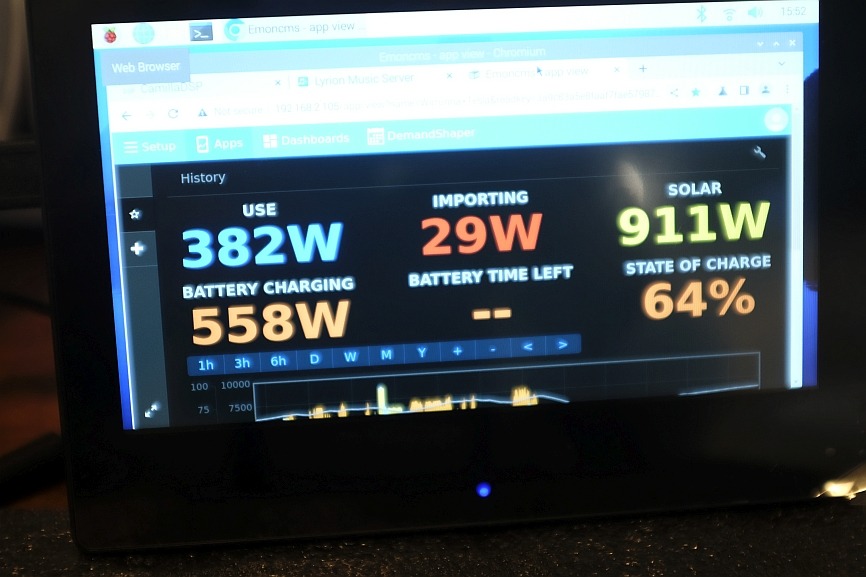

The Pi is running Raspberry Pi OS Lite 64bit and the Chromium browser, so with a couple of bookmarks I can run the Squeezebox server or check the state of my Solar PV system.

The K-Horn speakers are very sensitive (110 dB/W for mid and high), but I could not hear any hiss even with the volume right up and my ear in the mid horn mouth, so the DAC8X does not add any audible noise. The first pic shows the amps: an N-Core from Audiophonics for bass and a pair of SMSL SP200 THX headphone amps for the mid and high.

https://www.elecrow.com/rc070p-7-in...-ips-display-with-built-in-speaker-stand.html


Great result and really nice to see the outputs on screen etc.

This is whetting my appetite for something like this.

I'm standing on the precipice of doing something to combine a 4/5-way hi-fi system with another 3/4/5-way system for HT.

Or if my 8-output DSP dies...

I noted that HT is not there, or at least requires lots of software and hardware work; at present that would put me off.


That's exactly the difference. The reasoning is that the real-world capture/playback ratio does not deviate much (it is basically constant), and the adjustment should strive to approach that value instead of jumping around with some average. You can play with the adjustment constants here: https://github.com/HEnquist/camilladsp/blob/eagain/src/alsadevice_utils.rs#L221

The algorithm and the parameter values were taken from alsaloop, IIRC.

Maybe the algorithm constants could dynamically reflect the chunksize: a larger chunksize could mean slower adjustment with fewer fluctuations, and smaller chunks a more agile one.

Keeping a small chunksize and latency (i.e. small buffers) works against feedback rate adjustment. This is especially true if processing times vary due to varying load on the DSP CPUs caused by other processes.

If you look at the chart at https://www.diyaudio.com/community/...rce-to-playback-hw-device.408077/post-7574371 - IMO the optimum target level should be slightly above chunksize, not at chunksize.

Revisiting this post as I've been experimenting with a HifiBerry DAC8x and as a result I've been dealing with an asynchronous system that requires rate adjust.

I know that it would seem that in the real-world the capture/playback ratio would be constant, but this does not seem to always be the case.

Using an ALSA loopback as capture device and the DAC8x with rate adjust but no resampling seems pretty constant. I have the rate adjust interval set to 2 seconds and the buffer level usually only changes ~10 samples each time and stays within +/- ~100 samples of the target level. On occasion I get a bit more variation but usually not.

However, when I use a SPDIF to USB card (like the hifime S2 digi) as capture device with rate adjust and async resampling, I sometimes get jumps of hundreds of samples each interval. With the 2 second rate adjust interval I am able to avoid buffer underruns, but sometimes it gets close to using up the buffer.

Any idea what would cause this behavior? I've tried a few different SPDIF sources, and I see it on all of them.

This is only anecdotal, but what usually seems to happen is this. The buffer level exceeds my target, and the rate adjust keeps adjusting down (usually only going down to 0.9997), but the buffer level keeps increasing. Eventually there will be a sequence of large drops in buffer level until I am hundreds of samples below my target level. It is almost like the feedback is delayed.

Michael

@mdsimon2 : Lately I have been stress-testing the weak RK3308 and its capabilities. I used CDSP as a bridge between the UAC2 gadget and the SoC I2S interface(s), optionally with async resampling. That SoC has a driver which supports merging two I2S interfaces to produce 16ch out + 10ch in, or any combination up to 26 channels.

The I2S hardware has fixed maximum buffers (512 kB per I2S interface, IIRC), so at 16ch out at 384 kHz the maximum buffer size in frames ends up quite small. I used CDSP 2 with 4 chunks per buffer and 8 periods per buffer. The target level was set at 75% of the buffer size, i.e. 3 chunksizes. I increased the base adjustment step tenfold to 1e-4 https://github.com/HEnquist/camilla...45df8bfba6e22cc1/src/alsadevice_utils.rs#L233 and reduced the adjustment period to 2 seconds. That makes the regulation kick in faster and with steeper steps.
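To make the sizing arithmetic concrete, here is a small Python sketch. It assumes, purely for illustration, that all 16 output channels use 4-byte samples from a single 512 kB budget; the real RK3308 split per I2S interface may differ.

It derives how many frames fit, the 4-chunk / 8-period split, and a target level at 3 chunks (75%), as described above. `max_buffer_frames` and `split_buffer` are hypothetical names.

```python
def max_buffer_frames(buffer_bytes, channels, bytes_per_sample):
    """Largest whole number of frames that fits into a fixed hardware buffer."""
    return buffer_bytes // (channels * bytes_per_sample)


def split_buffer(buffer_frames, chunks=4, periods=8, target_chunks=3):
    """Chunksize, period size and target level (all in frames) for a buffer."""
    chunksize = buffer_frames // chunks
    period = buffer_frames // periods
    return chunksize, period, target_chunks * chunksize


frames = max_buffer_frames(512 * 1024, channels=16, bytes_per_sample=4)
chunksize, period, target = split_buffer(frames)
print(frames, chunksize, period, target)  # 8192 2048 1024 6144
print(f"{1000 * frames / 384000:.2f} ms of buffer at 384 kHz")  # 21.33 ms of buffer at 384 kHz
```

The real driver may round these values to its own constraints, so the numbers that actually apply are the ones it reports.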

With this setup the system was able to cleanly play back and capture. The chain was, IIRC:

USB host -> gadget -> CDSP1 (compiled with float32 format, sync resampling 16ch from 48 kHz to 384 kHz) -> 16ch I2S -> 10ch I2S -> CDSP2 (rate adjust to playback, using 5 channels only due to the isochronous packet limit) -> gadget -> host.

There were no xruns and no issues on the sox spectrogram for 20 minutes. Amazingly stable performance from CDSP (and from that SoC too). All four cores were quite loaded, yet there were no timing issues. CDSP1, doing the resampling, was reniced to -19. I did not play with any RT priorities or core assignments.

Of course the buffer level deviates, but I never got any wild fluctuations. Also, if your processing thread takes time and your CPU load varies (other processing running in the background), the processed chunks get delivered to playback with varying delay. That makes the buffer level fluctuate more.

What was your buffer size and target level? Hundreds of samples seem safe if your buffer is several thousand frames and the target level is above half of it. Using the whole buffer is no problem; in fact you want the buffer as full as possible, for a larger safety margin. There can be no buffer overflow on playback, since writing the samples will simply wait. Underflow is the problem on playback, unlike capture, where overflow is the problem.

The buffer level keeps increasing if the capture speed is still too fast. But it slows down every cycle and eventually will get too slow, causing adjustment to the other side.

It takes some cycles to reach and settle around the real capture speed, so it may be useful to decrease the cycle period (adjustment time). The exact target level will never be reached stably, because there are too many timing factors in the chain (look at the linked chart to see how complicated the timing is).

As long as the deviations do not pull the buffer level below some reasonable level, IMO there is no need to worry. For example, I would not be OK with some 20% of the buffer, hence my target at 75%.

EDIT: corrected async -> sync resampling in my setup description (no need for async).


Look at the algorithm (https://github.com/HEnquist/camilla...45df8bfba6e22cc1/src/alsadevice_utils.rs#L221), it's quite simple. At the start it does not know the sample rate ratio (the capture_speed).

- If the delay difference (in samples) is outside of some "equality" range, the capture speed is increased (or decreased) by 3 speed_delta steps.
- If the delay difference is inside this equality range, the adjustment drops to one speed_delta step in the respective direction.
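Below is a Python sketch of one possible reading of that description, in the spirit of alsaloop. It assumes the "equality range" is applied to the change of the delay difference between two cycles, and that nothing is changed while the level is already moving toward the target. That is an assumption; the linked alsadevice_utils.rs is the authority.

`adjust_speed`, `SPEED_DELTA`, `simulate` and the target/100 equality range are hypothetical. The toy simulation uses a linearized buffer model in which the level drifts by fs * period * (speed - equilibrium) per cycle.

```python
SPEED_DELTA = 1e-5  # hypothetical base step per adjustment cycle


def adjust_speed(speed, diff, prev_diff, equality_range, delta=SPEED_DELTA):
    """One rate-adjust cycle. diff = buffer level - target level, in frames."""
    if diff > 0:
        if diff > prev_diff + equality_range:
            return speed - 3 * delta  # too full and still filling: big step down
        if abs(diff - prev_diff) <= equality_range:
            return speed - delta  # too full, roughly steady: one step down
    elif diff < 0:
        if diff < prev_diff - equality_range:
            return speed + 3 * delta  # too empty and still draining: big step up
        if abs(diff - prev_diff) <= equality_range:
            return speed + delta  # too empty, roughly steady: one step up
    return speed  # on target, or already moving toward it: no change


def simulate(equilibrium, target=1024, fs=48000, period_s=2.0, cycles=60):
    """Toy model: per cycle the level drifts by fs*period_s*(speed - equilibrium)."""
    speed, level, prev_diff = 1.0, float(target), 0.0
    history = []
    for _ in range(cycles):
        level += fs * period_s * (speed - equilibrium)
        diff = level - target
        speed = adjust_speed(speed, diff, prev_diff, equality_range=target / 100)
        prev_diff = diff
        history.append((level, speed))
    return history


for cycle, (level, speed) in enumerate(simulate(equilibrium=0.9999)):
    if cycle % 10 == 0:
        print(f"cycle {cycle:2d}: level {level:7.1f}  speed {speed:.5f}")
```

With these made-up numbers, the level keeps rising for several cycles even though the speed is stepped down every cycle. Only after the speed has passed the equilibrium does the level turn around, which is the lag described in the question above.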


At 44.1 kHz sample rate I was using a chunk size of 1024 and a target level of 1024.

A noob question: I thought the CamillaDSP buffer size was 2x chunk size, is this correct? If so, is my target of 1024 actually half the buffer? And do I actually want it to be something like 75% of the buffer, i.e. 1536? The buffer level reported by the GUI is typically in the 800-1300 range, but I've seen it below 400 a few times.

I know I can increase the buffer size but I am trying to minimize latency. I've been spoiled by my normal synchronous systems which I run at 128 chunk size and no rate adjust (48 kHz sample rate). Sounds like the answer is decrease the rate adjust interval and increase the speed delta.

Michael

It was changed to 4x chunksize in v2: https://github.com/HEnquist/camilladsp/blame/v2.0.3/src/alsadevice_buffermanager.rs#L22

So if you set the target level equal to chunksize, you are setting it at 25% of the buffer, which is too low IMO.
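A quick Python check of these percentages follows. It assumes the nominal buffer is exactly chunks-per-buffer x chunksize, with 2 chunks in v1 and 4 in v2 as stated above. The driver may round the real buffer, so hw_params remains the reference. `CHUNKS_PER_BUFFER`, `target_fraction` and `target_for_fraction` are hypothetical names.

```python
CHUNKS_PER_BUFFER = {"v1": 2, "v2": 4}  # nominal buffer size in chunks


def target_fraction(target_level, chunksize, version="v2"):
    """Target level as a fraction of the nominal playback buffer."""
    return target_level / (CHUNKS_PER_BUFFER[version] * chunksize)


def target_for_fraction(fraction, chunksize, version="v2"):
    """Target level (frames) sitting at the given fraction of the buffer."""
    return round(fraction * CHUNKS_PER_BUFFER[version] * chunksize)


print(target_fraction(1024, 1024))            # 0.25
print(target_fraction(1024, 1024, "v1"))      # 0.5
print(target_for_fraction(0.75, 1024))        # 3072
print(target_for_fraction(0.75, 1024, "v1"))  # 1536
```

So the 1536 figure is 75% only under the old 2-chunk layout. In v2 the same 75% is 3072 frames, i.e. 3 chunks.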

For lower latencies it's always better to have a smaller granularity of buffer updates; that's why Henrik's change from 2 to 4 chunks is very good. I would try using smaller chunks and still keep the target level at 3 chunks (see https://github.com/HEnquist/camilladsp/issues/335 to modify your code if needed). Smaller chunks will take a bit more CPU, but more chunks give more buffer safety.

Of course you can play with the rate adjustment parameters too:

- more frequent updates (adjustment period);
- a larger single step (maybe): https://github.com/HEnquist/camilla...45df8bfba6e22cc1/src/alsadevice_utils.rs#L233
- a larger step when too far from the target level: https://github.com/HEnquist/camilla...45df8bfba6e22cc1/src/alsadevice_utils.rs#L237 + https://github.com/HEnquist/camilla...45df8bfba6e22cc1/src/alsadevice_utils.rs#L245

As for the buffer size, it's always good to check the actual device params in /proc/..../hw_params. CDSP also reports the requested device params in its logs with -v (debug), which is very useful to check on the initial runs with a new configuration. The timing is designed for buffer = 4 x chunksize = 8 x period.

In some cases I hit device limits. For example, the buffer would end up equal to chunksize (getting xruns immediately), or the period could not be of the requested size. CDSP asks ALSA to set some values, but the driver actually sets values within its constraints. It's really good to check the reality.
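A minimal Python sketch for checking the reality described above follows. It parses the `key: value` lines of an ALSA hw_params file and compares them against the intended buffer = 4 x chunksize = 8 x period. ALSA prints `closed` in that file when the stream is not open, so CamillaDSP has to be running while you read it. `parse_hw_params`, `read_hw_params` and `check_layout` are hypothetical names. The sample values are the MOTU hw_params posted later in this thread.

```python
def parse_hw_params(text):
    """Parse the 'key: value' lines of an ALSA /proc hw_params file into a dict."""
    if text.strip() == "closed":
        raise ValueError("device is not open; start CamillaDSP first")
    params = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            params[key.strip()] = value.strip()
    return params


def read_hw_params(path):
    """E.g. /proc/asound/UltraLitemk5/pcm0p/sub0/hw_params"""
    with open(path) as f:
        return parse_hw_params(f.read())


def check_layout(params, chunksize):
    """Compare actual sizes with the intended buffer = 4 x chunksize = 8 x period."""
    buffer_size = int(params["buffer_size"])
    period_size = int(params["period_size"])
    problems = []
    if buffer_size <= chunksize:
        problems.append("buffer is not larger than one chunk: expect xruns")
    if buffer_size != 4 * chunksize:
        problems.append(f"buffer_size {buffer_size} != 4 x chunksize ({4 * chunksize})")
    if buffer_size != 8 * period_size:
        problems.append(f"buffer_size {buffer_size} != 8 x period_size ({8 * period_size})")
    return problems


sample = """access: RW_INTERLEAVED
format: S24_3LE
subformat: STD
channels: 22
rate: 48000 (48000/1)
period_size: 64
buffer_size: 512"""
print(check_layout(parse_hw_params(sample), chunksize=128))  # []
```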


Thinking about chunk size / target level in regard to a system where capture and playback device are synchronous, target level = chunk size should be fine for such a setup, correct?

Buffer level stays constant due to the synchronous system and this still provides you enough room to avoid buffer underruns. This has been my experience so far and increasing target level will increase latency for no gain, because again, buffer level is constant in such a system.

Michael


Yes, that should work well.

I have started looking at improving the rate adjust controller. It was a bit too sensitive in v1, and now in v2 it's too slow. I'm simulating various solutions, and a normal pid controller with modest gains looks good. I'll post some simulation results when I get a little further.
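For comparison with step rules, a plain PI controller (derivative term left out for brevity) on a toy buffer model could look like the Python sketch below. It is not Henrik's implementation. The gains are hypothetical and expressed as the fraction of the level error corrected per cycle: 0.5 proportional and 0.1 integral. In this linearized model those gains give closed-loop poles at 0.7 ± 0.1j, i.e. a well-damped settle. `PIRateController` and `simulate_pi` are hypothetical names.

```python
class PIRateController:
    """Hypothetical PI controller: speed = base - kp*error - ki*sum(errors)."""

    def __init__(self, kp, ki, base=1.0):
        self.kp, self.ki, self.base = kp, ki, base
        self.integral = 0.0

    def update(self, error):
        self.integral += error
        return self.base - self.kp * error - self.ki * self.integral


def simulate_pi(equilibrium, target=1024, fs=48000, period_s=2.0, cycles=60,
                p_frac=0.5, i_frac=0.1):
    """Toy model: per cycle the level drifts by fs*period_s*(speed - equilibrium)."""
    frames_per_cycle = fs * period_s
    ctrl = PIRateController(p_frac / frames_per_cycle, i_frac / frames_per_cycle)
    level, speed = float(target), 1.0
    peak_error = 0.0
    for _ in range(cycles):
        level += frames_per_cycle * (speed - equilibrium)
        peak_error = max(peak_error, abs(level - target))
        speed = ctrl.update(level - target)
    return level, speed, peak_error


level, speed, peak = simulate_pi(equilibrium=0.9999)
print(f"final level {level:.3f}, speed {speed:.6f}, peak error {peak:.1f} frames")
```

The integral term is what lets the controller settle on a constant speed offset (here 100 ppm) with zero level error. A pure proportional controller would leave a standing offset from the target level.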


I've compiled CamillaDSP to allow for larger target levels. I've been using a S2 Digi (TOSLINK input capture device) and a HifiBerry DAC8x (analog output playback device) with rate adjust and async resampling. At 48 kHz I've been using 128 chunk size, 384 target level and 2 second adjust period. This works very well and the buffer level is about +/- 50 of target. Latency is around 15 ms which is not bad at all and fine for an audio/video application.

For comparison a MOTU Ultralite Mk5 in a TOSLINK input / analog out synchronous configuration at 48 kHz with 128 chunk size and 128 target level is about 10 ms. An Okto dac8 pro with the same settings as the MOTU is about 6 ms.

Michael

@mdsimon2 : Good to hear about your good results. Maybe RPi5 with its fast RAM would handle even chunk size 64.

The large latency difference between MOTU and Okto is interesting. Do I understand correctly that the alsa/SW buffers were identical? 4ms is 192 frames, that would suggest the MOTU internal buffers are quite long.
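The 4 ms = 192 frames conversion can be done with a pair of tiny Python helpers (hypothetical names).

They also show two other figures. The 384-frame target level in the S2 Digi/DAC8x setup above corresponds to 8 ms, roughly half of the ~15 ms measured, assuming the average buffer level adds its full duration to the latency. The 26 ms vs 16 ms Okto pause difference reported further down is 480 frames.

```python
def frames_to_ms(frames, rate):
    """Duration of a number of frames in milliseconds."""
    return 1000.0 * frames / rate


def ms_to_frames(ms, rate):
    """Nearest whole number of frames for a duration in milliseconds."""
    return round(ms * rate / 1000.0)


print(ms_to_frames(4, 48000))        # 192
print(frames_to_ms(384, 48000))      # 8.0
print(ms_to_frames(26 - 16, 48000))  # 480
```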


As far as I can tell the ALSA reported buffers are the same.


However, the behavior with respect to CamillaDSP is a bit different.

MOTU

1) Buffer level always slightly above target. For example, at 128 chunk size / 128 target level, typical buffer levels are 140 to 180.

2) When coming out of paused state to running, log always reports a buffer underrun.

Okto

1) Initially buffer level slightly above target level. However, after going in / out of paused state one time buffer level decreases to 50-100. This happens regardless of chunk size / buffer level. Low buffer level remains unless CamillaDSP is restarted, or a new configuration is applied.

2) Log never reports buffer underruns when going from paused to running state.

3) Although buffer level is constant, larger chunk sizes result in more latency.

Latency difference between the two seems related to reduced buffer level in Okto after coming out of paused state. I did some comparisons a while back at 512 chunk size / target, with and without pausing.

Okto w/ pause: 26 ms

Okto w/o Pause: 16 ms

MOTU w/o pause: 26 ms

Michael

MOTU

michael5@raspberrypi5:/proc/asound/UltraLitemk5/pcm0p/sub0$ cat hw_params

access: RW_INTERLEAVED

format: S24_3LE

subformat: STD

channels: 22

rate: 48000 (48000/1)

period_size: 64

buffer_size: 512

michael5@raspberrypi5:/proc/asound/UltraLitemk5/pcm0p/sub0$ cat sw_params

tstamp_mode: NONE

period_step: 1

avail_min: 128

start_threshold: 1

stop_threshold: 512

silence_threshold: 0

silence_size: 0

boundary: 4611686018427387904

Okto

michael4@raspberrypi4:/proc/asound/DAC8PRO/pcm0p/sub0$ cat hw_params

access: RW_INTERLEAVED

format: S32_LE

subformat: STD

channels: 8

rate: 48000 (48000/1)

period_size: 64

buffer_size: 512

michael4@raspberrypi4:/proc/asound/DAC8PRO/pcm0p/sub0$ cat sw_params

tstamp_mode: NONE

period_step: 1

avail_min: 128

start_threshold: 1

stop_threshold: 512

silence_threshold: 0

silence_size: 0

boundary: 4611686018427387904
