The NFS still requires some skill to operate; it’s not like pressing a button and getting a soda from a vending machine. So yes, the data from the apparatus depends on human factors.

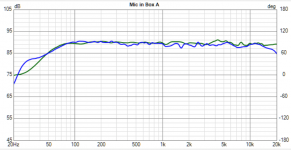

In the Klippel software the user can choose between various forms of scaling and representation of the data, which is obviously the case here, as these measurements were also taken at about a 30 dB difference in output level.


I made an effort, of course, to match the x and y axes. Rest assured that they are comparable in this context. My case is that it shows how very hard it is to repeat the same measurement in two different locations and obtain the same results.

//

//

OK - the level difference is not desirable - I'll see if I can find some at the same SPL...

//

//

I would say it rather shows two people operating the machine and they choose to present the data in a different way since there’s no established protocol.

//

The measurements also seem to be taken at different levels as I already pointed out.

I run Klippel software on my computer and the data is available through the cloud, so conceivably I could check any file’s exact content from any machine.

Edit: The software is available on request from Klippel, so people having their DUTs tested can look at all the data any way they please. Also, Erin and Amir are two people who have privately acquired their Klippel NFS. Both units are, as far as I know, and with all due respect to both, literally operated in garages by very committed amateurs. I don’t think it’s fair to draw conclusions on the validity of the entire Klippel range of equipment based on how they present their data. The environment of the scanner does play a part in the measurements: ambient temperature, vibrations, noise intrusion etc. are all factors. They do however provide a very valuable service to the public, and for this I for one am extremely grateful.


Drive voltage data, sensitivity settings on the Klippel and other variables have to be accounted for.

If, for instance, Amir uses the 1 V default drive setting and Erin uses 2.83 V, you can’t do these types of comparisons.

Isn’t one of the above curves for the 208Be version, btw?

This is absolutely pointless unless you supply some info along with your GIFs.
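To put a rough number on the drive-voltage point: a minimal sketch, assuming the speaker behaves linearly so that the level offset follows directly from the voltage ratio. The function name `level_offset_db` is just an illustrative helper, and the 1 V and 2.83 V values are the hypothetical settings mentioned above, not confirmed settings from either reviewer.

```python
import math

def level_offset_db(v_a: float, v_b: float) -> float:
    """Level difference in dB between two drive voltages.

    Assumes the same speaker operating linearly, so SPL scales with voltage.
    """
    return 20.0 * math.log10(v_a / v_b)

# Hypothetical settings from the discussion: 2.83 V versus a 1 V default.
offset = level_offset_db(2.83, 1.0)
print(f"2.83 V vs 1 V: {offset:.2f} dB")
```

That is roughly a 9 dB offset before any difference in axis scaling is considered, so curves taken at different drive settings need to be level-matched before they are overlaid.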


The LSR305 measurement from Amir was an early one where there were teething troubles.

Here is a better comparison of the KEF R3; it looks very close to me given potential production tolerances.

Agree, in general the match is very good. But there are still local differences in the odd dB territory which I think is still what a user hoped to gain... for 500USD...

Look at 1k-4k... a 1 dB shelf... that's absolutely a different tuning of a speaker... and for those who don't think 1 dB matters - do you need an NFS?

//


Then they are kidding themselves and will never be happy with acoustic measurements. Was the mic a mm in a different place, a different mic with a slightly different calibration, was it the left or the right speaker, how many measurements did the NFS take, what was the temperature, humidity etc. etc.

Considering all of those factors the differences you see there show just how consistent those speakers are.

If you use manufacturer data, simulations and your own measurements, then depending on your skills, which include designing and in most cases building the cabinet, you will be able to get quite a lot out of a given driver.

I really appreciate Klippel, but don't see it as an essential tool for me and my hobby. When you measure commercial speakers, I doubt you will always find a Klippel-straight response. There is quite a lot of taste involved in designing speakers. So the 10% difference to a Klippel-assisted build may boil down to taste more than right or wrong.

When I got my first measuring system in the 80s, I started to measure all the speakers I could find, commercial ones and my own builds. The system could do near-field measurements down to 200 Hz in a large living room. To my surprise my ear-tuned builds were very linear; they did not show any peaks, just dips. Commercial speakers were usually much more peaky. What surprised me was how far off my own designs' crossover frequencies often were, as these were basic calculations from impedance plots.

Today, with my measuring equipment, the time needed to build a comparably good-sounding speaker is a fraction of the time I used to spend on listening tests and changing hundreds of crossover parts. Also, manufacturers' data has improved; it was mostly flat-out lies in the 80s.


I think you're missing the point.

Yes, one dB can sometimes matter, but it's not much more than a very slight nuance.

Even a nuance that is not much more than personal taste.

It most certainly doesn't make or break an entire speaker.

Looking at these kinds of details is nothing more than pixel peeping.

But it doesn't have much to do with understanding how to make a better speaker.

You're also missing the context of tolerances/errors in the speakers themselves, such as the tolerances in passive crossovers especially.

With average tolerances of around ±5-10%, that one dB difference is easily made.

So if you want to do it right, you should at least measure a bunch of the same speakers of the same brand, ideally over multiple batches, if you really want to be scientifically correct and discuss those tiny little details.

Otherwise it's being nitpicky about details you have no clue why they are there to begin with.

That could just as well be a statistical error, or rather the lack of any statistical information and data.
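As a rough sanity check on the ±5-10% figure, here is a small sketch that converts a relative amplitude error into dB. It assumes, purely for illustration, that a part's tolerance shows up one-to-one as an amplitude error at some frequency; a real crossover does not map component values to level that simply, so treat the numbers as an order-of-magnitude guide only. `ratio_to_db` is a made-up helper name.

```python
import math

def ratio_to_db(ratio: float) -> float:
    """Convert an amplitude ratio to a level change in dB."""
    return 20.0 * math.log10(ratio)

for pct in (5, 10):
    up = ratio_to_db(1 + pct / 100)      # unit at the top of the tolerance band
    down = ratio_to_db(1 - pct / 100)    # unit at the bottom of the band
    spread = up - down                   # worst-case gap between two units
    print(f"±{pct}%: {up:+.2f} dB / {down:+.2f} dB, spread {spread:.2f} dB")
```

Under that assumption, two units at opposite ends of a ±5% band already differ by a bit under 1 dB, and at ±10% by roughly 1.7 dB, which is the same order as the shelf being discussed.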


It's very hard to compare two graphs with different scales, or, more often, graphs that have a different size.

So here are the two in one graph, traced in VituixCAD; there are some errors due to the tracing.
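For anyone who wants to do the same overlay numerically rather than by eye, here is a minimal sketch: normalise both traced curves at a common reference frequency and subtract them. The traces below are made-up numbers for illustration (one curve about 30 dB lower than the other, with a 1 dB shelf at 4 kHz), not data from either reviewer, and the helper names are hypothetical.

```python
import math
from bisect import bisect_left

def level_at(curve, freq_hz):
    """Interpolate a level (dB) at freq_hz, linear in dB over log-frequency.

    curve is a list of (frequency_hz, level_db) pairs sorted by frequency.
    """
    freqs = [f for f, _ in curve]
    if not freqs[0] <= freq_hz <= freqs[-1]:
        raise ValueError("frequency outside the traced range")
    i = bisect_left(freqs, freq_hz)
    if freqs[i] == freq_hz:
        return curve[i][1]
    (f0, d0), (f1, d1) = curve[i - 1], curve[i]
    t = math.log(freq_hz / f0) / math.log(f1 / f0)
    return d0 + t * (d1 - d0)

def normalise(curve, ref_hz):
    """Shift a curve so that it reads 0 dB at the reference frequency."""
    ref_db = level_at(curve, ref_hz)
    return [(f, d - ref_db) for f, d in curve]

def difference(curve_a, curve_b, freqs_hz, ref_hz=100.0):
    """Difference (A minus B) in dB after normalising both curves at ref_hz."""
    a = normalise(curve_a, ref_hz)
    b = normalise(curve_b, ref_hz)
    return [(f, level_at(a, f) - level_at(b, f)) for f in freqs_hz]

# Made-up traces, for illustration only.
trace_a = [(100.0, 85.0), (1000.0, 86.0), (4000.0, 87.0), (10000.0, 85.0)]
trace_b = [(100.0, 55.0), (1000.0, 56.0), (4000.0, 56.0), (10000.0, 55.0)]

diffs = difference(trace_a, trace_b, [100.0, 1000.0, 4000.0, 10000.0])
for f, d in diffs:
    print(f"{f:>7.0f} Hz: {d:+.1f} dB")
```

The choice of reference frequency matters: normalising at 100 Hz versus 1 kHz moves the whole difference curve up or down, so it should be stated alongside any comparison.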


Here is the same for the KEF R3.

It really looks like the measurements from ASR have quite a bit more interference and other acoustic artifacts in them.

They also both look quite smoothed, probably 1/12 smoothing or so.

On top of that, VituixCAD always has a bit of smoothing, even in "no-smoothing" mode.

Most more modern programs seem to "like" this smooth look.

I personally hate it.


Two different NFS users, Erin and Amir, measuring the same product. I think it proves my case that even using such refined equipment, the differences are about the same as an insightful home DIYer using a mic and sound card could obtain... the ref point ended up at 100 Hz...

//

Are these of the same speaker sample?

I doubt they measured the same individual unit. Yet another argument against hoping for too high an accuracy.

//

Did you read my comment above?


1/20th octave, per CEA-2034.

The ASR mic setup is different. Amir uses the mic adapter which hangs off the boom and then puts a big towel around it to help. Pretty janky. I went the extra mile and put the mic at the end of a boom and used clay to smooth the transition, so there will be no effect from the mic holder/boom. I made a video about it over a year ago.

Amir also does not use a mic calibration file. I do.

There have been a few instances where results from Amir's measurements are different, and I have taken the time to perform ground plane measurements to verify the LF and, in other cases, outdoor gated measurements to confirm the HF. In all instances those quasi-anechoic measurements supported my NFS measurements in a quick sanity check and left the ball in Amir's court to verify his own findings. I make all of this publicly available in each of my reviews where an instance like this occurs. So far I have not had any issues. It's even caused a few manufacturers to find faults in their own measurement setups. More likely than not, we are finding differences in manufacturing tolerances of parts. Though, there have been a couple of cases where Amir did not properly measure a speaker. For instance, the JBL 708P was measured asymmetrically because he did not take the felt pads off the bottom - which the owner had been using to keep the speaker from scratching his desk - and therefore the speaker was tilted when he measured it. You can verify this easily by looking at his vertical responses and seeing that the microphone was not lined up with the on-axis tweeter response. When I measured it, I took the felt pads off with permission from the owner. This was the exact same speaker.

Not everyone is perfect. I'm just explaining why you might see differences between our measurements from time to time. Sometimes they are tolerance-based and sometimes they are due to incorrect setup. I go out of my way to verify that my measurements are correct whenever I have a case where I am suspicious of them. And again, I have documented these cases many times on my website, on my YouTube page, and even in my Facebook group.


Yes. NFS offers accuracy. No doubt. But my take is still that it is not necessary for making a world-class product. The use of the product and the intended deployment environment simply don't necessitate that high accuracy. And it won't ensure that you create a completely neutral speaker. I see it as a way to perform a series of comparable measurements of products - like bikinpunk and Amir do - if you don't change your procedure. Or for product development of home appliances, where one needs to declare standardised measurements of noise levels.

It's like it's too accurate in one sense (music in room) but not precise enough in another (reproducibility between users)...

//

And that is exactly the point!

It's not about who is better than who.

It's about collecting data and learning from it.

The most important factor in any (scientific) experiment, is being honest about it.

Which very often doesn't mean the whole dataset is wrong, as long as you take it into consideration.

What's the reason Amir doesn't use calibration files though?

Even mics have a small baffle step, so data is always different that way.

Btw, 1/20th octave is the stated smoothing.

However, on top of that, there is a certain amount of line smoothing as well.

This is the interpolation between data points (besides the octave smoothing).

REW does something very similar.

If you measure the same thing with REW vs ARTA and show the data with the same smoothing, REW measurements look a lot "smoother" than ARTA.

As an example, way WAY back, this used to be a thing in Excel as well.

Ever since Excel 2007, there has been graph smoothing applied to data, to make it "less jittery".

Programs seem to use the same kind of code for graphs.
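To separate the two effects mentioned above, it helps to see what the octave smoothing alone does. The following is a minimal sketch of fractional-octave smoothing, assuming a plain rectangular window and a straight average of dB values. It is not the algorithm used by Klippel, REW, ARTA or VituixCAD (those may use other window shapes or average power instead of dB), and `fractional_octave_smooth` is a hypothetical helper name. Any extra curve interpolation done by the plotting code would come on top of this.

```python
def fractional_octave_smooth(freqs_hz, levels_db, fraction=20):
    """Smooth a response with a rectangular 1/fraction-octave window.

    For each frequency, average the dB values of all points lying within
    half a window (in octaves) on either side of it.
    """
    half_window = 2.0 ** (1.0 / (2.0 * fraction))
    smoothed = []
    for fc in freqs_hz:
        lo, hi = fc / half_window, fc * half_window
        window = [d for f, d in zip(freqs_hz, levels_db) if lo <= f <= hi]
        smoothed.append(sum(window) / len(window))  # window always contains fc
    return smoothed

# Synthetic test signal: 96 points per octave with a +/-1 dB point-to-point ripple.
freqs = [20.0 * 2.0 ** (i / 96) for i in range(96 * 3)]
ripple = [1.0 if i % 2 == 0 else -1.0 for i in range(len(freqs))]

smoothed_20 = fractional_octave_smooth(freqs, ripple, fraction=20)
print(f"raw ripple: {max(abs(x) for x in ripple):.2f} dB, "
      f"after 1/20 octave: {max(abs(x) for x in smoothed_20):.2f} dB")
```

Running the same curve through `fraction=12` and `fraction=20` is a quick way to see how much detail each setting removes before comparing graphs from different programs.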


I am a little confused, since you were first saying that even 1dB matters?

1dB falls kind of within measurement tolerances, user error tolerances, production tolerances etc., either alone or combined.
