Has anyone interested in Entropy ever considered the Monty Hall problem?

https://en.wikipedia.org/wiki/Monty_Hall_problem

It makes no sense superficially. The contestant is convinced he/she has a 1 in 3 chance of winning a Car, whichever door is chosen.

Otherwise it is a 2 in 3 chance of winning a goat. 😡

The answer is to switch to the other closed door after Monty opens one to reveal a goat. That makes it 2 out of 3! A better bet. Thing is, Monty knows where the Car is, so he never opens the door hiding it.

Weird but True. I say this as a Mathematician.
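
For anyone who doubts the 2 out of 3, here is a rough simulation sketch in Python. It assumes the standard rules: three doors, Monty always opens a goat door other than the contestant's pick, and he chooses at random when he has two goat doors to pick from. The function names `play` and `win_rate` are just made up for this post.

```python
import random


def play(switch: bool, rng: random.Random) -> bool:
    """Play one round; return True if the contestant wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # Monty knows where the car is, so he only ever opens a goat door
    # that is not the contestant's pick.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the one door that is neither picked nor opened.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, rounds: int = 100_000, seed: int = 0) -> float:
    """Estimate the winning probability over many simulated rounds."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(rounds)) / rounds


if __name__ == "__main__":
    print(f"stay:   {win_rate(False):.3f}")
    print(f"switch: {win_rate(True):.3f}")
```

Staying should come out near 1/3 and switching near 2/3. If Monty opened a door at random instead (and sometimes revealed the car), the result would be different, which is why his knowledge matters.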

Ta TNT!

There are 17 different instrument "modes" to be checked out before the JWST can start its scientific studies of the universe - as detailed in your link.

Amongst the Pure Mathematics Community, it is considered important what your Erdős Number is. Paul Erdős has previously been considered our Leading Light.

https://en.wikipedia.org/wiki/Paul_Erdős

Thus if you published a paper jointly with Paul, say a Proof about Prime Numbers, you get an "Erdős Number" of 1!

I am reconsidering this proposition. Paul Erdős simply didn't get the Monty Hall problem.

I am Happy with my "Fermi Number" of 2. A student of a student. How it is.

Your IQ must be other-worldly.

Do you know that “erdős” means ‘of the forest’?

I amaze myself. I appear also to have an "Erdős Number" of 2!

My Mother would often summon me to the Living Room. She would say things like "Steve, (or Stephen, when she would show noticeable disappointment in me) notice most of your friends are oddballs."

"Mother," I would say, "Without the Oddballs, I would have NO FRIENDS at all."

Apropos my putative Erdős Number of 2: my Nephew in Law is called Purdy!

https://en.wikipedia.org/wiki/George_B._Purdy

No, I can't justify it either.

Thus if you published a Proof about Prime Numbers jointly with Paul, You get an "Erdos Number" of 1!

This is sooooo fascinating! 🤓

If Alice collaborates with Paul Erdős on one paper, and with Bob on another, but Bob never collaborates with Erdős himself, then Alice is given an Erdős number of 1 and Bob is given an Erdős number of 2, as he is two steps from Erdős.
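
The Alice and Bob example can be sketched as a shortest-path search over a co-authorship graph. This is only an illustration: the `papers` list is a made-up toy graph containing just the two collaborations described above, and `erdos_numbers` is a hypothetical helper name, not part of any library.

```python
from collections import deque


def erdos_numbers(coauthors, source="Erdős"):
    """Breadth-first search: fewest co-authorship steps from `source`."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        person = queue.popleft()
        for other in coauthors.get(person, ()):
            if other not in dist:
                # First time we reach `other`, so this is the shortest path.
                dist[other] = dist[person] + 1
                queue.append(other)
    return dist


# Toy data (assumption): one joint paper per pair.
papers = [("Erdős", "Alice"), ("Alice", "Bob")]

# Build an undirected graph: co-authorship works in both directions.
coauthors = {}
for a, b in papers:
    coauthors.setdefault(a, set()).add(b)
    coauthors.setdefault(b, set()).add(a)

numbers = erdos_numbers(coauthors)
print(numbers["Alice"], numbers["Bob"])  # 1 2
```

Anyone with no chain of co-authors leading back to Erdős simply never shows up in the result, which corresponds to an undefined (or infinite) Erdős number.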

https://en.wikipedia.org/wiki/Erdős_number#Most_frequent_Erdős_collaborators

Interestingly, Srinivasa Ramanujan has an Erdős number of only 3 (through G.H. Hardy, Erdős number 2), even though Paul Erdős was only 7 years old when Ramanujan died.

So, are you saying that information entropy decreases as thermodynamic entropy increases?

Do we have two kinds of entropy?

I do not think so, but who knows; if we did, there should be two names.

I think there is one and only one entropy, and there are two aspects to it.

First of all, entropy does not hang from nowhere. We deal with the entropy of a system, and the system had better be well defined, including whether it is open or closed.

I am puzzled by the double face of entropy, and by how one is derived from the other.

I think I should look at Shannon about this.

And Brillouin's work.

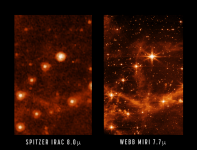

TNT's link includes Webb's MIRI image of part of the Large Magellanic Cloud, which is a satellite galaxy of the Milky Way.

The increase in observable detail compared to the retired Spitzer Space Telescope's image of the same region is quite remarkable.

In particular, Webb’s MIRI image shows the interstellar gas in unprecedented detail.

Do we have two kinds of entropy?

Quoting from Wikipedia, slightly revised:

"At an everyday practical level, the links between information entropy and thermodynamic entropy are not evident.

In classical thermodynamics, entropy is defined in terms of macroscopic measurements and makes no reference to any probability distribution, which is central to the definition of information entropy.

Maxwell's demon can (hypothetically) reduce the thermodynamic entropy of a system by using information about the states of individual molecules. However, in order to function, the demon himself must increase thermodynamic entropy in the process, by at least the amount of Shannon information he proposes to first acquire and store - and so the total thermodynamic entropy does not, in fact, decrease."

https://en.wikipedia.org/wiki/Entropy_(information_theory)#Relationship_to_thermodynamic_entropy
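
To make the "probability distribution" part concrete, here is a small sketch of Shannon's formula, H = sum of p * log2(1/p), measured in bits. The function name `shannon_entropy` and the example distributions are my own choices for illustration. The Gibbs entropy of statistical mechanics has the same mathematical form, with natural logarithms and a factor of Boltzmann's constant, which is one way to see the two as aspects of the same quantity.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy in bits of a discrete probability distribution."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p == 0 contribute nothing, so they are skipped.
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


print(shannon_entropy([0.5, 0.5]))  # fair coin: 1.0 bit
print(shannon_entropy([0.9, 0.1]))  # biased coin: about 0.469 bits
print(shannon_entropy([1.0, 0.0]))  # fully predictable toss: 0.0 bits
```

A toss whose outcome is known in advance carries no information at all, while a fair coin carries the maximum of one bit per toss.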

We deal with the entropy of a system and it better be well defined with the point whether it is open or closed.

Real-life versions of Maxwellian demons occur, but all such "real demons" or molecular demons have their entropy-lowering effects duly balanced by increase of entropy elsewhere.

The proof that Shannon is full of baloney lies in his corroborative coin toss. Here he’s theorizing with machines and verifying with a coin toss. Ridiculous! A coin toss is 100% consistently predictable, with a preordained outcome, when a machine is used to toss it. Also, even a well-practiced person can consistently get the same outcome.

I can only tell you what I know:

"According to legend, Greek Heracles descended to Hades from Cape Tenaro. Hecataeus the inquiring Physicist visited (maybe on a bus trip) Cape Tenaro and determined there was no subterranean passage or access to Hades there."

Sort of thing I did on holiday when I was trying to determine if Norwegian Trolls really exist! It's all Bunk! Just Childish stories.

This marks the dawn of a new era. I happen to think that Planet Earth is reducing its Entropy. This is because we do it at the expense of the Sun increasing its Entropy. How it works.

Sort of thing I do on holiday when I was trying to determine if Norwegian Trolls really exist!

And don't we know it!

P.S. "Trollheim" means "Trollhome".

I guess the Trolls were not at home when you visited, Steve!

No, I saw them. Just that my Norwegian companion was not as alert as me!

She was sleepwalking. Anyway, good work. I have fallen out with Bill Gates recently. Thus no images.

How far has inflation driven up the toll?
