i have a thread on here about designing a system that flip flops between 5 and 6 way and one of the questions i struggle with every day for the past couple weeks, maybe months is how much of the budget is reasonable to allocate to a particular frequency band ...

so i been doing some googling and i think maybe we can have a thread where we can pile on all the various bits of information we have that can help people get an idea of relative importance of various frequencies to overall experience of listening ...

here is something i just found a few minutes ago for example:

https://www.dpamicrophones.com/mic-university/facts-about-speech-intelligibility

specifically this chart:

https://cdn.dpamicrophones.com/media/images/mic-university/facts-about-speech-fig04_1.jpg

it shows relative importance of various frequencies for speech intelligibility ... now you may ask who cares ? we listen to music ! well ... because there is a good reason to suspect that our brain has evolved to process certain frequencies more efficiently BECAUSE of language ... in fact our brains have pretty insane performance in terms of being able to pick out what somebody is saying in a room with 20 other people talking ... it probably makes sense to match the resolution of your sound system to the resolution of your ear-brain mechanism in terms of which frequencies are handled most clearly ...

and here is something i found a few days ago:

https://www.axiomaudio.com/blog/dis...ortion at levels,the masking effect of music.

specifically this chart:

https://www.axiomaudio.com/pub/media/wysiwyg/distortion_figure02.gif

it shows how loud distortion needs to be for people to hear it versus frequency ... not surprisingly it closely mirrors the speech intelligibility curves with stuff below 120 hz being essentially irrelevant with distortion up to almost 100% being almost inaudible at lowest frequencies ... and then as frequencies increase so does our ability to hear distortion ... but unlike with speech intelligibility there doesn't seem to be any corner at 3 khz and the trend simply continues ...

probably because of this ( from the same DPA blog )

https://cdn.dpamicrophones.com/media/images/mic-university/facts-about-speech-fig03.jpg

namely speech begins to roll off around 3 khz about the same area where ears are most sensitive

so our ears, brain and speech have all evolved in parallel around the same frequencies ... ( no surprise there ! )

our ability to detect distortion is basically zero at 20 hz and rises steadily all the way up to the very high frequencies ...

and our speech spectrum is from about 200 hz to about 5 khz with a peak around 1.5 khz or so ...

speech intelligibility is simply a product of the two curves - the frequencies where we can detect distortion AND where speech audio has high energy are the ones most critical to speech intelligibility ...

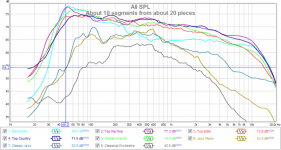

going to conclude by attaching a chart a member of another forum made where he analyzed his own music collection using REW ... this information is consistent with my own measurements and with what i have recently read in an AES paper on hearing damage from loud music ...

when you put all these curves together you begin to better understand where to allocate budget in the system to achieve the best results ...

i hope others can contribute their insight as well ...

EDIT: here is the AES paper on hearing damage from music that i'm reading:

https://www.aes.org/technical/documents/AESTD1007_1_20_05.pdf

among other things they ask questions like why do people like loud music and why do people like loud bass ...

so i been doing some googling and i think maybe we can have a thread where we can pile on all the various bits of information we have that can help people get an idea of relative importance of various frequencies to overall experience of listening ...

here is something i just found a few minutes ago for example:

https://www.dpamicrophones.com/mic-university/facts-about-speech-intelligibility

specifically this chart:

https://cdn.dpamicrophones.com/media/images/mic-university/facts-about-speech-fig04_1.jpg

it shows relative importance of various frequencies for speech intelligibility ... now you may ask who cares ? we listen to music ! well ... because there is a good reason to suspect that our brain has evolved to process certain frequencies more efficiently BECAUSE of language ... in fact our brains have pretty insane performance in terms of being able to pick out what somebody is saying in a room with 20 other people talking ... it probably makes sense to match the resolution of your sound system to the resolution of your ear-brain mechanism in terms of which frequencies are handled most clearly ...

and here is something i found a few days ago:

https://www.axiomaudio.com/blog/dis...ortion at levels,the masking effect of music.

specifically this chart:

https://www.axiomaudio.com/pub/media/wysiwyg/distortion_figure02.gif

it shows how loud distortion needs to be for people to hear it versus frequency ... not surprisingly it closely mirrors the speech intelligibility curves with stuff below 120 hz being essentially irrelevant with distortion up to almost 100% being almost inaudible at lowest frequencies ... and then as frequencies increase so does our ability to hear distortion ... but unlike with speech intelligibility there doesn't seem to be any corner at 3 khz and the trend simply continues ...

probably because of this ( from the same DPA blog )

https://cdn.dpamicrophones.com/media/images/mic-university/facts-about-speech-fig03.jpg

namely speech begins to roll off around 3 khz about the same area where ears are most sensitive

so our ears, brain and speech have all evolved in parallel around the same frequencies ... ( no surprise there ! )

our ability to detect distortion is basically zero at 20 hz and rises steadily all the way up to the very high frequencies ...

and our speech spectrum is from about 200 hz to about 5 khz with a peak around 1.5 khz or so ...

speech intelligibility is simply a product of the two curves - the frequencies where we can detect distortion AND where speech audio has high energy are the ones most critical to speech intelligibility ...

going to conclude by attaching a chart a member of another forum made where he analyzed his own music collection using REW ... this information is consistent with my own measurements and with what i have recently read in an AES paper on hearing damage from loud music ...

when you put all these curves together you begin to better understand where to allocate budget in the system to achieve the best results ...

i hope others can contribute their insight as well ...

EDIT: here is the AES paper on hearing damage from music that i'm reading:

https://www.aes.org/technical/documents/AESTD1007_1_20_05.pdf

among other things they ask questions like why do people like loud music and why do people like loud bass ...

Attachments

Last edited:

A traditional approach is to make system choices so the quality of the components' sound match. So any logical person would go to the local Salvation Army store and buy a second-hand 1975 amp* and that would be an equal-quality match with the best electrostatic speaker in the world you could find.

The interesting links you post are meaningful in defining the parameters of "matching". Audiophiles take disproportional delight in being able to produce "fire-works" of great sub-low bass an sparkling treble. But non-enthusiasts would simply see the heart of music is in the mid-range and look for Quad electrostatics or just a great 6-inch cone driver. So the mid-range deserves the most attention with highs and lows just "icing on the cake".

But since different pieces have wildly different costs, you can't figure on a dollar basis, as you inquire. A 6-inch covering 120-2kHz (4 octaves) might cost $50, likewise a dome tweeter for $25 (3 8aves), and the woofer matching sound quality would need motional feedback and cost hundreds of dollars or more (for 2 8aves).

B.

* or a 1965 Dynamo Stereo-70

The interesting links you post are meaningful in defining the parameters of "matching". Audiophiles take disproportional delight in being able to produce "fire-works" of great sub-low bass an sparkling treble. But non-enthusiasts would simply see the heart of music is in the mid-range and look for Quad electrostatics or just a great 6-inch cone driver. So the mid-range deserves the most attention with highs and lows just "icing on the cake".

But since different pieces have wildly different costs, you can't figure on a dollar basis, as you inquire. A 6-inch covering 120-2kHz (4 octaves) might cost $50, likewise a dome tweeter for $25 (3 8aves), and the woofer matching sound quality would need motional feedback and cost hundreds of dollars or more (for 2 8aves).

B.

* or a 1965 Dynamo Stereo-70

Last edited:

Isn't this basically the inverse of the fletcher munson curve? To solve this we increase bass and treble to achieve a perceived flat response depending on the listening level at the listening positionspecifically this chart:

https://cdn.dpamicrophones.com/media/images/mic-university/facts-about-speech-fig04_1.jpg

it shows relative importance of various frequencies for speech intelligibility ... now you may ask who cares ? we listen to music ! well ... because there is a good reason to suspect that our brain has evolved to process certain frequencies more efficiently BECAUSE of language ... in fact our brains have pretty insane performance in terms of being able to pick out what somebody is saying in a room with 20 other people talking ... it probably makes sense to match the resolution of your sound system to the resolution of your ear-brain mechanism in terms of which frequencies are handled most clearly ...

That link in the first post to a study of detectable distortion levels is good example a poor science. Its something that sort of looks like science, but actually isn't good science. Possibly helpful to look up "Auditory Scene Analysis' for better understanding of modern research into what people can and can't hear.

Also, an assumption that frequency response is for the most part all that matters would be wrong. For one thing, speakers tend to be non-time-invariant enough that time-domain transient response can vary significantly from steady-state test tone response.

Also, an assumption that frequency response is for the most part all that matters would be wrong. For one thing, speakers tend to be non-time-invariant enough that time-domain transient response can vary significantly from steady-state test tone response.

Lots of ways to be sloppy in such a study (esp. when "published" on your own website). But just what do you think are the big flaws that make it totally useless? If you know a study using music that assesses distortion, please provide a link. Maybe in Toole???That link in the first post to a study of detectable distortion levels is good example a poor science. Its something that sort of looks like science, but actually isn't good science

B.

To the best of my understanding, historically thresholds of audibility of distortion have been done with steady-state test tones, usually sine waves. With a few hundred test subjects its possible to produce an estimate of the average threshold for a population (threshold being the level at which half the population can't hear the distortion, and the other half of the population can still hear it). With enough test subjects the data pool can be randomly sampled into smaller sets. Analysis of the random smaller sample sets can then be used to estimate variance (the sort of height to width ratio of the bell curve). Again IIUC that was about the best they could do. The assumption was apparently that hearing is probably linear and time-invariant enough that continuous test tone threshold data could be reasonably applied to more complex signals such as music. That is to say, it was assumed that the time domain view and the frequency domain view could be taken as equivalently valid ways of looking at the same thing. However that assumption doesn't necessarily hold when the system being measured is strongly nonlinear and non-time invariant (and probably nonstationary too). So, the bottom line for me is that we have the existing data for whatever its worth, but we probably shouldn't expect it to hold under all conditions, especially at very low distortion levels. It seems like what has happened is that we can fairly easily measure -120dB distortion, so we have assumed that our existing models of hearing are also valid down to such levels including with complex signals like music. Once more IIUC, its possible to show that people can't hear a brass band buried at -60dB below some music, that some people can hear quantizing distortion at -93dBFS, and that some can even hear lower levels of distortion under some conditions.

Regarding the present state of published research, most of the action today seems to be in the field of 'auditory scene analysis.' I don't know of any recent work in that area on distortion thresholds for complex signals like music. Probably not too surprising since the field is fairly new. For a long time there was not much academic interest in hearing research except maybe for medical purposes such as hearing aid design. Things are finally starting change though, so I am willing to be patient and see how it goes.

Regarding the present state of published research, most of the action today seems to be in the field of 'auditory scene analysis.' I don't know of any recent work in that area on distortion thresholds for complex signals like music. Probably not too surprising since the field is fairly new. For a long time there was not much academic interest in hearing research except maybe for medical purposes such as hearing aid design. Things are finally starting change though, so I am willing to be patient and see how it goes.

Last edited:

Digging deep into your engineering geek talk, I find you could find no particular problems with the report you previously dismissed.

Well, I have lots of problems with it (really dumb to raise your hand in a group setting - actually mimics a famous psychology experiment in group-think). But then the topic of distortion masking with music playing is real important and real soft. So this kind of amateur test has to be considered a bit.

There is a wonderful but little-known study by Geddes and Lee you would like. They re-processed pure tone distortion sensitivity in light of good old Fletcher-Munson. So woofer harmonics are far more audible than the fundamental but nobody should sweat distortion at 10kHz, eh, since you can't hear it. It is a fine example combining acoustics and psychology/perception (as are the authors too).

B.

Well, I have lots of problems with it (really dumb to raise your hand in a group setting - actually mimics a famous psychology experiment in group-think). But then the topic of distortion masking with music playing is real important and real soft. So this kind of amateur test has to be considered a bit.

There is a wonderful but little-known study by Geddes and Lee you would like. They re-processed pure tone distortion sensitivity in light of good old Fletcher-Munson. So woofer harmonics are far more audible than the fundamental but nobody should sweat distortion at 10kHz, eh, since you can't hear it. It is a fine example combining acoustics and psychology/perception (as are the authors too).

B.

...you could find no particular problems with the report you previously dismissed.

I thought it was self-explanatory that it didn't meet the requirements of good research. Certainly it was not replicable from the information given.

that's what i used to think ... that distortion is more important in woofers because they throw harmonics into vocal range while tweeters throw harmonics into ultrasound ...Digging deep into your engineering geek talk, I find you could find no particular problems with the report you previously dismissed.

Well, I have lots of problems with it (really dumb to raise your hand in a group setting - actually mimics a famous psychology experiment in group-think). But then the topic of distortion masking with music playing is real important and real soft. So this kind of amateur test has to be considered a bit.

There is a wonderful but little-known study by Geddes and Lee you would like. They re-processed pure tone distortion sensitivity in light of good old Fletcher-Munson. So woofer harmonics are far more audible than the fundamental but nobody should sweat distortion at 10kHz, eh, since you can't hear it. It is a fine example combining acoustics and psychology/perception (as are the authors too).

B.

the problem is that harmonics don't ruin music ... intermodulation distortion does

and IMD products are at roughly the same frequencies as stimulus - not several octaves above ...

people are also now starting to look into subharmonic distortion ... that's when speakers have resonances that get excited by signal that is higher in frequency than that resonance ... but this seems to be very new area ...

intermodulation distortion on other hand is well understood ... i googled why people measure THD instead of IMD and the answer is it is simply easier to measure ...

so we measure what is completely irrelevant and don't measure the only thing that matters ...

this is the danger of relying on measurements ... you need to be measuring the right things and 90% of the time that is not the case ...

axiperiodic -

Great points. Thanks.

A peculiarity for us is the difference between research and practical application. Your wise points would have be distilled into some kind of standard which would be consensually shared, used readily by manufacturers, and intuitively meaningful to users.

Taking all your good points together, I think of the way Consumer Reports test dishwashers. They invent a horrible gooey sticky mess and apply a precise amount to a set of dishes of precise size. Without drawing any moral comparison to audio measurements and a sticky mess, some standardized AES mess of distortion bits might be helpful.

In the meantime, THD+N is simple to assess (just filter out the fundamental) and reasonably intuitive to customers as a yardstick for quality. So we're stuck with it today.

B.

Great points. Thanks.

A peculiarity for us is the difference between research and practical application. Your wise points would have be distilled into some kind of standard which would be consensually shared, used readily by manufacturers, and intuitively meaningful to users.

Taking all your good points together, I think of the way Consumer Reports test dishwashers. They invent a horrible gooey sticky mess and apply a precise amount to a set of dishes of precise size. Without drawing any moral comparison to audio measurements and a sticky mess, some standardized AES mess of distortion bits might be helpful.

In the meantime, THD+N is simple to assess (just filter out the fundamental) and reasonably intuitive to customers as a yardstick for quality. So we're stuck with it today.

B.

One of my fave subjects, but no time ATM, though these interactive charts can be quite helpful to some:

https://www.audio-issues.com/wp-content/uploads/2011/07/main_chart-610x677.jpg

https://alexiy.nl/eq_chart/ear_sensitivity.htm

https://www.audio-issues.com/wp-content/uploads/2011/07/main_chart-610x677.jpg

https://alexiy.nl/eq_chart/ear_sensitivity.htm

The top octave is the least important, followed by the lowest. A system that does 50 Hz to 10kHz gives you 99% of the experience for most musical genres. For video, you would also want the bottom octave.

Even in my wildest fantasies, I never felt the need to go beyond a three way.

Even in my wildest fantasies, I never felt the need to go beyond a three way.

Last edited:

I will play a bit of Devil's advocate here...

Regarding harmonic distortion: Yes it is easy to measure and easy to display, much easier than intermodulation distortion. A single value of THD is worthless, but when HD is displayed as a frequency response plot, 2nd through 5th, it is easy to understand and adds value. IMD on the other hand, has to be measured with a pair of test signal tones. All the extraneous noise that results (other than the two test tones) is identified as either HD or IMD components. However, there is an infinite combination of test tone pairs that can be used. HiFiCompass uses 30 Hz + 255 Hz for woofers and mid woofers, but if different values were used would the results be different? For example, if 53 Hz and 330 Hz were used ? Would the results be very similar, or different? I know of no standard for this kind of testing (anyone know?)

Another thing to consider: Most of the time, with typical cone/dome drivers, those things which cause IMD also cause HD. So we measure and compare HD among drivers, and we make the assumption that a low HD driver is also a low IMD driver. Usually this is a good assumption. Purifi is showing us that the link between HD and IMD is not a strict one to one correlation, and with careful, clever, advanced engineering, it is possible to drive both IMD and HD to very low levels.

So I agree that IMD is more objectionable than HD. 2nd HD is fairly benign, 3rd and 5th HD is much more audible and fatiguing.

j.

Regarding harmonic distortion: Yes it is easy to measure and easy to display, much easier than intermodulation distortion. A single value of THD is worthless, but when HD is displayed as a frequency response plot, 2nd through 5th, it is easy to understand and adds value. IMD on the other hand, has to be measured with a pair of test signal tones. All the extraneous noise that results (other than the two test tones) is identified as either HD or IMD components. However, there is an infinite combination of test tone pairs that can be used. HiFiCompass uses 30 Hz + 255 Hz for woofers and mid woofers, but if different values were used would the results be different? For example, if 53 Hz and 330 Hz were used ? Would the results be very similar, or different? I know of no standard for this kind of testing (anyone know?)

Another thing to consider: Most of the time, with typical cone/dome drivers, those things which cause IMD also cause HD. So we measure and compare HD among drivers, and we make the assumption that a low HD driver is also a low IMD driver. Usually this is a good assumption. Purifi is showing us that the link between HD and IMD is not a strict one to one correlation, and with careful, clever, advanced engineering, it is possible to drive both IMD and HD to very low levels.

So I agree that IMD is more objectionable than HD. 2nd HD is fairly benign, 3rd and 5th HD is much more audible and fatiguing.

j.

Even in my wildest fantasies, I never felt the need to go beyond a three way.

Yeah, I mostly agree... There are a wide variety of tweeters that can be crossed as low as 1.5 kHz, and this opens up a lot of possibilities that did not exist 20 years ago.

Well it used to be. These days with software like ARTA and REW, measureing IMD is just as easy as HD. @hifijim points out that IMD offers many frequnecy choices, but there are some standards to follow. The simple answer may be "Because we've always done it that way."i googled why people measure THD instead of IMD and the answer is it is simply easier to measure ...

Agreed, shouldn't be arbitrary at all.The number of ways shouldn't be arbitrary, the design process should determine it.

The best designs very foundation is, "how many ways does it take to do what i want."

fullrange works in headphonesThe number of ways shouldn't be arbitrary, the design process should determine it.

2-way + sub works for nearfield monitors

3-way + sub works for mid-field monitors

beyond that we generally stick with 3-way plus sub and simply use horns and arrays to increase output

in headphones there is bass gain from the headphone sealing the ear

in horns there is gain from horn loading

in arrays there is gain from woofers coupling to each other

it's a question of what kind of space you want to cover at what SPL and what distortion level

although not always the case you generally get higher distortion as you get closer to resonance frequency ( FS ) and also higher distortion as you enter breakup ... also usually the range where breakup happens is also where beaming happens ...

furthermore more efficient drivers have higher FS and EBP so if you want to only use the cleanest portion of response between FS and breakup it gets narrower the louder your drivers are ... forcing you to use more frequency bands OR accept distortion, beaming etc ...

if we look at some 8" prosound midranges that boast over 100 decibel efficiency their usable range is maybe 2 octaves from about 500 hz to 2 khz of clean and flat response without excessive beaming ... of course if you dropped that same driver from 100 db to 80 db efficiency it could go flat to 20 hz instead of 500 hz ... and you could also have 10 mm xmax instead of 1 millimeter ... you would now be covering 6 octaves instead of 2 enabling the use of a 2-way instead of 5-way but your output would only be 1% of the efficient system ...

is doubling the number of frequency bands worth it if it gives you 100 times the output ? i think so.

of course i'm exaggerating - it's just to make a point.

the reason prosound systems stick with 3-way or so despite hitting very high SPLs is because they accept compromises to sound quality by driving both cones and compression drivers into breakup as well as by driving past xmax. low cost and small size is more important to them than sound quality.

you have three things fundamentally at odds with each other:

1 - sound quality

2 - SPL

3 - size, cost and complexity

if you want more of one you will have less of others.

home audio completely ignores SPL to enable good sound quality from small, simple speakers.

pro sound reinforcement focuses on the SPL and is willing to sacrifice the bottom octave and accept very harsh treble.

main studio monitors balance all 3 considerations and as a result are big and expensive as they try to chase both sound quality an dynamics at the same time.

are main studio monitors 5-ways ? no. but they are mostly just using prosound recipe with a layer of polish added to it. instead of compact and lightweight plywood boxes they use huge and heavy MDF boxes tuned much lower, perhaps beryllium compression drivers instead of titanium, but otherwise they stick with the same formula. why ? i'm guessing because it's a small market that can't justify the cost of developing an entirely new design philosophy - just tweaking the one used in mainstream prosound.

then you have to consider that with steeper crossover slopes the "danger zone" so to speak is narrower ... with 12 db / oct the crossover region is wide and you probably don't want too many of them ... with 48 db / oct the crossover region is 1/4 as wide and you could have more of them and still do less damage ( except to phase ) ...

i'm falling asleep ( slept for 3 hours then woke up for some reason ) ... hope whatever i wrote was coherent ...

I don't see a question in there, but I don't agree with too much of it. Do you follow pro-sound or have a pro-sound background? There is much that is done differently in a domestic environment because it has a very different set of problems.

home audio completely ignores SPL to enable good sound quality from small, simple speakers.

That is over generalizing just a bit. A good pair of commercial home-hi-fi speakers can generate sufficient SPL for the environment they are intended to operate in. 95 dB SPL average, with 110 dB peaks, this is absolutely achievable in an average room (16' x 25'), at a reasonable distance (10'). It's not that SPL capability is ignored, it's just that by the time a good well rounded high performance home-hi-fi speaker meets all the other requirements of deep bass, low distortion, smooth frequency response through the entire range of on/off axis, it can usually meet the needed SPL capability for its intended use. in other words, SPL capability does not drive the design.

- Home

- Loudspeakers

- Multi-Way

- relative importance of different sound frequencies