First, I would suggest testing the multichannel device alone, without ecasound, to troubleshoot in small steps.

Can you store the ecasound result into a 4-channel wav file and play that using aplay into your multichannel device? You can pick/play only selected channels using e.g. sox, just to check that the individual channels of the wav file sound OK.
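If sox is not at hand, the per-channel check can also be scripted. Below is a minimal sketch, assuming a plain PCM wav file; the function name `split_channels` and the output naming scheme are made up for illustration. It writes each channel of a multichannel wav to its own mono file, which can then be played or inspected one at a time.

```python
import wave


def split_channels(path, prefix):
    """Write each channel of a PCM wav file to its own mono wav file."""
    with wave.open(path, "rb") as src:
        n_ch = src.getnchannels()
        width = src.getsampwidth()
        rate = src.getframerate()
        data = src.readframes(src.getnframes())

    frame = n_ch * width  # bytes per interleaved frame
    outputs = []
    for ch in range(n_ch):
        # Pick this channel's bytes out of every interleaved frame.
        mono = b"".join(
            data[i + ch * width : i + (ch + 1) * width]
            for i in range(0, len(data), frame)
        )
        name = f"{prefix}_ch{ch + 1}.wav"
        with wave.open(name, "wb") as dst:
            dst.setnchannels(1)
            dst.setsampwidth(width)
            dst.setframerate(rate)
            dst.writeframes(mono)
        outputs.append(name)
    return outputs
```

For example, `split_channels("MaleVoice.wav", "MaleVoice")` would produce `MaleVoice_ch1.wav` to `MaleVoice_ch4.wav` for a 4-channel file.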

I did the test. I exported my song to a file using

Code:

ecasound -d -z:mixmode,sum -B:rt -a:pre -i:"50_male_english.flac" -f:32,2,44100 -pf:/home/jmf/ecasound/ACDf2PreRef.ecp -o:loop,1 -a:woofer,tweeter -i:loop,1 -a:woofer -pf:/home/jmf/ecasound/ACDf2WooferRef.ecp -chorder:1,0,2,0 -a:tweeter -pf:/home/jmf/ecasound/ACDf2TweeterRef.ecp -chorder:0,1,0,2 -a:woofer,tweeter -f:32,4,44100 -o:loop,2 -a:delay -i:loop,2 -el:mTAP,0,0.06,0,0.06 -o:loop,3 -a:DAC -i:loop,3 -f:16,4,44100 -o:MaleVoice.wav

Then I play the file to my virtual device with aplay:

Code:

aplay -f S16_LE -D multi MaleVoice.wav

The file is played correctly without distortion, which is good. But the stereo image is pulled to one side. I confirmed that it was not related to my initial song, and generated a file with the same input for L and R... Same stereo image on one side. And it is the same side every time I play the song...

JMF

Well, then the ecasound chain puts delay to channels of one side. Is the channel order of the delay part correct?

I double-checked my syntax for delays vs channels and everything looks OK. I also tried removing the mTAP filter to make things simpler... with the same effect.

I will try to do additional tests during the week reducing the number of parameters: only one driver, no ecasound filters... to better see what happens.

Thank you for your support and the troubleshooting ideas.

JMF

Could you recommend a tool to compare the tracks of a song? I want to check that the file I generated is perfectly "mono" (no difference between L and R, no delay added to a channel by ecasound).

I would also like to visualize/quantify the delay I get between the 2 amps. My best idea is to measure the speaker with HolmImpulse, with the woofer on one amp and the tweeter on the other, and to look at the notch at the crossover frequency with the tweeter polarity inverted. Would there be better ideas?

Best regards,

JMF

Time diff between channels is easily visible e.g. in audacity - just zoom in to individual samples, they should be aligned vertically.
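The same check can be done numerically rather than by eye. Below is a rough sketch, assuming a 16-bit PCM wav file; the helper names `read_channels` and `best_lag` are hypothetical. It reads the channels and searches for the sample lag that maximises the correlation between two of them. A brute-force search like this is slow on long files, so it is best run on a short excerpt.

```python
import struct
import wave


def read_channels(path):
    """Return a list of per-channel sample lists from a 16-bit PCM wav."""
    with wave.open(path, "rb") as w:
        n_ch, n = w.getnchannels(), w.getnframes()
        if w.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        flat = struct.unpack(f"<{n * n_ch}h", w.readframes(n))
    # Samples are interleaved, so every n_ch-th value belongs to one channel.
    return [list(flat[c::n_ch]) for c in range(n_ch)]


def best_lag(a, b, max_lag=100):
    """Lag (in samples) of b relative to a that maximises their correlation.

    A positive result means b is delayed with respect to a.
    """
    def corr(lag):
        if lag >= 0:
            pairs = zip(a, b[lag:])
        else:
            pairs = zip(a[-lag:], b)
        return sum(x * y for x, y in pairs)

    return max(range(-max_lag, max_lag + 1), key=corr)
```

With `chans = read_channels("MaleVoice.wav")`, the comparison `chans[0] == chans[1]` tells whether two channels are bit-identical, and `best_lag(chans[0], chans[1])` gives their offset in samples (divide by the sample rate to get seconds). This only inspects the file; it says nothing about what happens later between the two USB devices.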

My question may be a dumb one, but it is not clear to me. Do you mean feeding Audacity with the file and comparing the channels?

Or is it about comparing the outputs of the amps? In that case, how is Audacity fed:

- recording with a MIC (but then I don't see how to separate the 2 channels)?

- with electrical wiring from the amp to the line input (in that case I don't know which type of adaptation is needed),

- some other means?

Best regards,

JMF

Last tests:

I created a 4 channels wav file, with the same track (=4 mono tracks). I checked in audacity that all tracks were identical (no delay, no difference in levels...)
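For anyone wanting to reproduce this kind of test file, here is one possible way to build it, sketched under the assumption that the source is a mono PCM wav. The function name `mono_to_multichannel` is invented for this example and is not the tool used in the post above.

```python
import wave


def mono_to_multichannel(src_path, dst_path, channels=4):
    """Copy a mono PCM wav into `channels` identical interleaved channels."""
    with wave.open(src_path, "rb") as src:
        if src.getnchannels() != 1:
            raise ValueError("source must be mono")
        width = src.getsampwidth()
        rate = src.getframerate()
        data = src.readframes(src.getnframes())

    # Repeat every sample `channels` times to form each interleaved frame.
    frames = b"".join(
        data[i : i + width] * channels for i in range(0, len(data), width)
    )

    with wave.open(dst_path, "wb") as dst:
        dst.setnchannels(channels)
        dst.setsampwidth(width)
        dst.setframerate(rate)
        dst.writeframes(frames)
```

Because every frame holds the same sample four times, any image shift heard on playback cannot come from the file itself.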

I play the file with the simplest command:

Code:

aplay -f S16_LE -c 4 -D multi MaleVoiceMono4chanNoFilter.wav

And depending on the order that I use to declare the virtual device, the stereo image is pulled to one side or the other:

Code:

pcm.multi {
    type multi;
    slaves.a.pcm "hw:2,0";
    slaves.a.channels 2;
    slaves.b.pcm "hw:3,0";
    slaves.b.channels 2;
    bindings.0.slave a;
    bindings.0.channel 0;
    bindings.1.slave a;
    bindings.1.channel 1;
    bindings.2.slave b;
    bindings.2.channel 0;
    bindings.3.slave b;
    bindings.3.channel 1;
}

gives a different side than

Code:

pcm.multi {
    type multi;
    slaves.b.pcm "hw:3,0";
    slaves.b.channels 2;
    slaves.a.pcm "hw:2,0";
    slaves.a.channels 2;
    bindings.0.slave a;
    bindings.0.channel 0;
    bindings.1.slave a;
    bindings.1.channel 1;
    bindings.2.slave b;
    bindings.2.channel 0;
    bindings.3.slave b;
    bindings.3.channel 1;
}

It is difficult to improve the situation if it happens so low in the system.

It may be specific to the OrangePi PC, the H3 SoC and its USB architecture, which seems to have 4 "independent" USB controllers.

Code:

jmf@orangepipc:~/ecasound$ lsusb -t
/: Bus 08.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
    |__ Port 1: Dev 2, If 0, Class=Audio, Driver=snd-usb-audio, 12M
    |__ Port 1: Dev 2, If 1, Class=Audio, Driver=snd-usb-audio, 12M
    |__ Port 1: Dev 2, If 2, Class=Human Interface Device, Driver=usbhid, 12M
/: Bus 07.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
/: Bus 06.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
    |__ Port 1: Dev 2, If 0, Class=Audio, Driver=snd-usb-audio, 12M
    |__ Port 1: Dev 2, If 1, Class=Audio, Driver=snd-usb-audio, 12M
    |__ Port 1: Dev 2, If 2, Class=Human Interface Device, Driver=usbhid, 12M
/: Bus 05.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
/: Bus 04.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M
/: Bus 03.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M
/: Bus 02.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M
/: Bus 01.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M

JMF

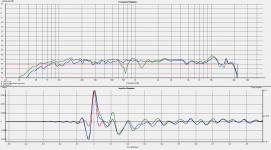

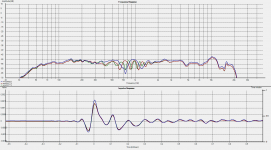

Below are some measurements taken with both tweeters on one amp and both woofers on the other amp.

The first is a comparison: the measurement signal, the expected response (W+T on the same amp), and the disaster caused by W and T being on different amps, with the strange delay.

The second shows 3 measurements of the same configuration, with both tweeters on one amp and both woofers on the other. It seems that the delay is not constant...

Starting transfer by two USB controllers at exactly the same time is probably unfeasible. I would try hooking both soundcards to the same controller via a hub. IMO you need to have same-time samples for both the cards in one usb frame, together. Just my 2 cents.

Code:

ecasound -d -z:mixmode,sum -B:rt -tl \
-a:pre -i:"HeCanOnlyHoldHer.flac" -eadb:-2 -pf:/home/jmf/ecasound/ACDf2$
-a:woofer,tweeter -i:loop,1 \
-a:woofer -pf:/home/jmf/ecasound/ACDf2WooferRef.ecp -chorder:1,0,2,0 \
-a:tweeter -pf:/home/jmf/ecasound/ACDf2TweeterRef.ecp -chorder:0,1,0,2 \
-a:woofer,tweeter -f:32,4,44100 -o:loop,2 \
-a:delay -i:loop,2 -el:mTAP,0,0.06,0,0.06 -o:loop,3 \
-a:DAC1,DAC2 -i:loop,3 \
-a:DAC2 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802_1 \
-a:DAC1 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802

I noticed that you made a small mistake in the last two lines (depending on exactly what you want to do with the inputs and outputs). You might try the corrections below, using the two DACs separately. I use multiple DACs on my system and I don't recall having this problem... Are you SURE your DACs are running in USB adaptive mode? See this thread for how to check that:

http://www.diyaudio.com/forums/pc-based/291056-how-tell-if-usb-dac-async-adaptive.html

In your ecasound command string, loop,2 and loop,3 each have FOUR channels. The channel assignments were defined on these two lines:

Code:

-a:woofer -pf:/home/jmf/ecasound/ACDf2WooferRef.ecp -chorder:1,0,2,0 \
-a:tweeter -pf:/home/jmf/ecasound/ACDf2TweeterRef.ecp -chorder:0,1,0,2 \

CH 1 = input channel 1 processed by the woofer filtering

CH 2 = input channel 1 processed by the tweeter filtering

CH 3 = input channel 2 processed by the woofer filtering

CH 4 = input channel 2 processed by the tweeter filtering

where input channel 1 is the LEFT channel and input channel 2 is the RIGHT channel for a stereo input.

Using this line:

Code:

-a:delay -i:loop,2 -el:mTAP,0,0.06,0,0.06 -o:loop,3 \

loop,3 CH 1 delay = 0.00 milliseconds (no delay)

loop,3 CH 2 delay = 0.06 milliseconds

loop,3 CH 3 delay = 0.00 milliseconds (no delay)

loop,3 CH 4 delay = 0.06 milliseconds

The four channels of delayed audio are then sent out to loop,3.

The last lines send two of the available channels from loop,3 out to the two STEREO DACs in your system. The problem is that you are sending channels 1 and 2 from loop,3 to BOTH DACs. This is like playing the left channel on both speakers.

I think you probably mean to do this:

Code:

-a:DAC2 -chorder:1,2 -f:16,2,44100 -o:alsa,front:FXAUDIOD802_1 \
-a:DAC1 -chorder:3,4 -f:16,2,44100 -o:alsa,front:FXAUDIOD802

The above would send the input LEFT channel, split into woofer and tweeter channels, to the DAC called "FXAUDIOD802_1" and would send the input RIGHT channel, split into woofer and tweeter channels, to the DAC called "FXAUDIOD802".

There are other combinations of how you output each of the FOUR loop,3 channels to "FXAUDIOD802" and "FXAUDIOD802_1" that will determine how the input channels get routed.

It's a good idea to keep close track of the routing in this way.
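One way to keep track of the routing is to model it with labels instead of audio. The sketch below is only a bookkeeping aid, not ecasound itself: the functions `chorder` and `mix` are hypothetical helpers that mimic the behaviour described above, where each `-chorder` entry names the source channel (counted from 1) for that output channel and 0 leaves it silent.

```python
def chorder(labels, order):
    """Model of the -chorder routing: output i takes source channel order[i].

    Channels are numbered from 1; 0 means the output channel is silent.
    """
    return [labels[src - 1] if src else None for src in order]


def mix(*chains):
    """Model of summing chains: list what ends up on each output channel."""
    return [[lab for lab in chan if lab is not None] for chan in zip(*chains)]


# Woofer and tweeter chains as written in the command string.
woofer = chorder(["woofer(L)", "woofer(R)"], [1, 0, 2, 0])
tweeter = chorder(["tweeter(L)", "tweeter(R)"], [0, 1, 0, 2])
loop3 = mix(woofer, tweeter)  # the delay stage does not change the routing

# Suggested output routing: channels 1,2 to one DAC and 3,4 to the other.
for dac, order in (("FXAUDIOD802_1", [1, 2]), ("FXAUDIOD802", [3, 4])):
    print(dac, chorder(loop3, order))
```

Changing the second `order` to `[1, 2]` reproduces the original command, where both DACs receive the left-channel woofer and tweeter signals.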

Thank you Charlie for checking. I'm sorry not to have been more clear in my post. This was on purpose, during those specific tests, to reduce the number of variables.

To get a centered image, I decided to send exactly the same signal to both channels. And my issue is that even in those conditions, the stereo image is pulled on one side.

JMF

I have to try this, but I don't have a USB hub at the moment. I have to borrow or buy one.

Question: could upsampling help? If I go to 96 kHz, will there be more frequent frames, reducing the potential delay?

Best regards,

JMF

Probably not. These frames are not audio samples but USB frames, transmitted every 1ms (USB fullspeed 12M) by the USB controller. I would try to make sure same-time data for each card are packed into the same USB frame. That would result in the best time diff attainable.

The packet-capturing analyzer wireshark can capture USB data. It captures whole URBs (frame groups) but IIRC these can be unwrapped down to individual frames. That way you may be able to analyze timing of each soundcard stream.
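To put numbers on the 1 ms frame period mentioned above, here is a small back-of-the-envelope calculation. The function names are made up, and the 2 kHz crossover frequency is an assumed value for illustration only, not one taken from this thread. It shows that a higher sample rate packs more samples into each frame without making the frames more frequent, and what a whole-frame offset between the two cards would mean in phase terms.

```python
USB_FS_FRAME_S = 0.001  # full-speed USB frame period: 1 ms


def samples_per_frame(sample_rate_hz):
    """Audio samples (per channel) carried on average in one 1 ms USB frame."""
    return sample_rate_hz * USB_FS_FRAME_S


def phase_shift_deg(delay_s, freq_hz):
    """Phase shift that a time offset produces at a given frequency."""
    return 360.0 * freq_hz * delay_s


for rate in (44100, 96000):
    print(rate, "Hz ->", samples_per_frame(rate), "samples per frame")

# Hypothetical case: the two cards are offset by one whole frame and the
# crossover sits at 2 kHz (an assumed value, not taken from the thread).
print(phase_shift_deg(USB_FS_FRAME_S, 2000.0), "degrees at 2 kHz")
```

Even a fraction of a frame is therefore a large error around a typical crossover frequency, which is consistent with the measurements posted earlier.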

OK, I understand that the basis of my design is not so good.

I will test the influence of the external USB hub as soon as I get my hands on one. Decoding with the network analyser is clearly beyond my abilities, and goes against my idea of a simple design that would rely on sound design and adequate underlying mechanisms. This proves not to be the case.

I have an STM32F4 Discovery board at home. I would like to have a look at its capabilities for sound reproduction. It has a DSP instruction set and 2 I2S interfaces, and it can take an additional oscillator... It needs coding, but it seems to have the basic features needed. I don't know if I will go through the process of developing such an application.

Last (maybe best) option is Thin client with Delta 1010 🙂

JMF

I definitely prefer that solution 🙂 There are loads of PCI multichannel cards available everywhere.

Yes, I know. The issue for me is that, as part of my project, I want to use my D803 amps, which are digital up to the amp chip (no D/A or A/D on the way). This amp has only USB and SPDIF inputs (I may investigate the possibility of tapping an I2S signal somewhere on the digital input path). So I would need multichannel cards with multiple digital outputs (SPDIF, for plug and play with my amp).

This narrows the choice I think...

JMF

That certainly does. Yet there are soundcards with multichannel SPDIF; see for example "How to change a Soundblaster Live! card to Live! 5.1".

I think that giving the USB hub a try is definitely worth it. You should be able to get a USB 2.0 hub for about Euro 20 or less.

Not lucky this time... I have found a small USB hub. Plugged the 2 amps on it. I can play each one individually. But not together.

When I launch the music to the second one, it fails with

Code:

ERROR: Connecting chainsetup failed: "Enabling chainsetup: AUDIOIO-ALSA:
... Error when setting up hwparams: Broken pipe"

The USB structure, with the USB hub is:

Code:

jmf@orangepipc:~/ecasound$ lsusb -t
/: Bus 08.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
/: Bus 07.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
/: Bus 06.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
/: Bus 05.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ohci/1p, 12M
/: Bus 04.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M
    |__ Port 1: Dev 3, If 0, Class=Hub, Driver=hub/4p, 480M
        |__ Port 2: Dev 5, If 0, Class=Audio, Driver=snd-usb-audio, 12M
        |__ Port 2: Dev 5, If 1, Class=Audio, Driver=snd-usb-audio, 12M
        |__ Port 2: Dev 5, If 2, Class=Human Interface Device, Driver=usbhid, 12M
        |__ Port 3: Dev 4, If 0, Class=Audio, Driver=snd-usb-audio, 12M
        |__ Port 3: Dev 4, If 1, Class=Audio, Driver=snd-usb-audio, 12M
        |__ Port 3: Dev 4, If 2, Class=Human Interface Device, Driver=usbhid, 12M
/: Bus 03.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M
/: Bus 02.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M
/: Bus 01.Port 1: Dev 1, Class=root_hub, Driver=sunxi-ehci/1p, 480M

The hub is a 2.0 one, not powered (but the amps should not pull too much current)...

I feel that there is no need to continue to insist in that implementation. It is not a robust design.

JMF
