Gents,

Can someone explain to me how dithering the output reduces quantization error?

The only dither I learned was the use of a triangular waveform of 2 LSB total amplitude added to the analog input, then averaging the resulting PWM stream of the ADC LSBs to get the between-levels average. It seems to me that dither at the output of a DAC would require knowledge of prior and subsequent levels, information that would be used by a deep FIR or a very fast and strong math package.

So, I'm confused..

ps..fear not, that state is familiar territory to me.😱

jn

actually, you can have an arbitrary MSB error and still be monotonic (monotonic == single-signed slope)

you may be thinking of <1/2 lsb differential linearity

My questions ignore sign. If sign is used, the question only shifts by one bit.. Pardon my lack of digital experience; it causes miscommunication..

jn

Can the dither be white noise as well?

I would assume so.

But several people have mentioned dither during the discussion of D/A conversion at the user end..that is what I do not understand..so I ask.

jn

edit: previously, I mentioned a triangular dither signal of 2 LSB height..I suspect that is inaccurate and it may actually be 1 LSB height..

Can someone explain to me how dithering the output reduces quantization error?

In the same way as it reduces quantization error on the input.

Every time there's a word length reduction in the signal path, the output should be dithered.

Some examples:

24 bit data -> Digital EQ (32 bit) -> 24 bit data

16 bit data -> 8x oversampling filter (22 bit) -> 20 bit DAC

A few links on the subject. (Not for you, jn 😉 )

Stop dithering about dither and just... dither !

When to dither ? Always !

Audio Myth - "24-bit Audio Has More Resolution Than 16-bit Audio" - Benchmark Media Systems, Inc.

In the same way as it reduces quantization error on the input.

It can't. Not to my understanding anyway.

To my way of thinking:

If an analog output value of 4.3 is desired, yet the DAC can only do a 4 or a 5, how can dither produce that .3 increase over the 4? The output-side equivalent would need the addition of .3 via some "smarts". Those "smarts" have to decide whether the value is above 4 or below 5 depending on what the output code is, so they need to calculate the average of the add-on signal to bias the level one way or the other. Simply adding a symmetrical signal to a quantized waveform doesn't give the desired value.

If I were to look ahead to the next value, I could simply add half the LSB value to the stream. But that still needs the value of the difference. That would be a dumb interpolation, but not dither.

So I am still confused..

For A/D, the dither signal is used to force the A/D input to rapidly traverse a transition level. A triangular wave will cause the converter to rapidly switch codes. If the analog input is exactly at the transition level, the converter output will switch with a 50% duty cycle, which can be seen by digital circuits and converted. If the duty cycle strays from 50%, the digital stuff can tell how far between transition levels the true signal is.
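A minimal sketch of that A/D scheme, assuming an ideal round-to-nearest ADC with both the input and the triangle wave expressed in LSB units; `quantize`, `triangle` and `dithered_adc_average` are hypothetical names, not anything from the thread. It averages the codes over whole triangle periods, which is the "duty cycle" reading described above.

```python
import math

def quantize(v: float) -> int:
    """Ideal ADC: round to the nearest code (input in LSB units, halves round up)."""
    return math.floor(v + 0.5)

def triangle(n: int, period: int, pp: float) -> float:
    """Zero-centred triangle wave, pp LSB peak to peak, sampled at index n."""
    phase = (n % period) / period            # 0 .. 1 within one period
    unit = 1.0 - 4.0 * abs(phase - 0.5)      # sweeps -1 .. +1 .. -1
    return unit * pp / 2.0

def dithered_adc_average(x: float, pp: float, period: int = 1000, cycles: int = 10) -> float:
    """Add the triangle dither to a DC input x, convert, and average the codes."""
    total = period * cycles
    codes = [quantize(x + triangle(n, period, pp)) for n in range(total)]
    return sum(codes) / total

for pp in (0.0, 1.0, 1.5, 2.0):
    print(f"{pp} LSB p-p -> {dithered_adc_average(4.3, pp):.3f}")
```

In this idealized sketch, no dither gives 4.0, the 1 LSB and 2 LSB peak-to-peak triangles both average to within a few thousandths of 4.3, and a 1.5 LSB triangle is biased to roughly 4.37. A sweep spanning a whole number of LSBs is what makes each code's share come out right, which may bear on the 1 vs 2 LSB question in the edit note earlier.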

I can't see that same process applied to the analog output of a DAC.

So, what am I missing??😕

jn

ps..If I were to dither the DAC timing, I could probably get closer to the desired waveform, but that still requires knowledge of which way to dither the timing.

dither happens in the creation of the reduced bit depth output stream

starting with, say, typical studio 24 bit digital audio (but only 20-22 bits of effective ADC resolution at best, by various measures), you add the dither as digital random numbers in the lower 8-9 bits of the 24 bit stream before rounding 24 to 16 bits

dither does flip the lsb (or 2) of the 16 bits fed to the DAC with a "duty cycle" determined by the 1st moment (the mean) of the sub-16-bit-lsb part of the 24 bit source in the band under consideration - and it gains this ability by having added total noise power to work with - over the whole (22.05 kHz) bandwidth of the (CD rez) digital audio output
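A minimal sketch of that 24 to 16 bit step, assuming TPDF dither (the difference of two uniform random numbers, spanning ±1 16-bit LSB) and round-to-nearest; the post doesn't specify the dither PDF, and `dither_24_to_16` is a hypothetical helper. A constant 24-bit input whose low 8 bits hold a quarter of a 16-bit LSB shows the "duty cycle" at work.

```python
import math
import random

def dither_24_to_16(sample24: int, rng: random.Random) -> int:
    """Reduce one signed 24-bit sample to 16 bits with TPDF dither and rounding."""
    v = sample24 / 256.0                  # in 16-bit LSBs: the low 8 bits become the fraction
    d = rng.random() - rng.random()       # TPDF dither spanning -1 .. +1 16-bit LSB
    out = math.floor(v + d + 0.5)         # round to the nearest 16-bit code
    return max(-32768, min(32767, out))   # clip to the legal 16-bit range

rng = random.Random(42)
sample24 = 1000 * 256 + 64                # a constant 1000.25 in 16-bit LSBs
outs = [dither_24_to_16(sample24, rng) for _ in range(100_000)]
target = sample24 / 256.0
mean_code = sum(outs) / len(outs)                             # the "duty cycle" average
err_power = sum((o - target) ** 2 for o in outs) / len(outs)  # total error power, LSB^2
plain = math.floor(target + 0.5)                              # undithered rounding
print(mean_code, err_power, plain)
```

Averaged over many samples the 16-bit codes come out near 1000.25, so the quarter LSB carried by the low 8 bits survives as the proportion of 999/1000/1001 codes, while plain rounding outputs 1000 every time. The cost is a total error power of about 0.25 LSB², three times the Δ²/12 usually quoted for undithered rounding of a busy signal (about 4.8 dB more noise).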

for a 1 kHz tone our critical bandwidth is only ~200 Hz; only the noise there and in the closest adjacent critical bands determines whether the 1 kHz tone will be audible, absent much larger amplitude maskers
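Rough arithmetic for that point, assuming the dither noise is flat (white) over the full 22.05 kHz band and taking the ~200 Hz critical bandwidth from the post, the share of the total noise power that falls in the critical band around 1 kHz is:

```latex
10\log_{10}\!\left(\frac{200\ \mathrm{Hz}}{22\,050\ \mathrm{Hz}}\right) \approx 10\log_{10}(0.00907) \approx -20.4\ \mathrm{dB}
```

So only about a hundredth of the added noise power competes directly with the tone; the adjacent bands add somewhat to that.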

the usefulness of dither for low level, below-lsb linearity also depends on the DAC linearity exceeding 16 bits - although the decorrelation of the quantization error is still helpful even if the DAC linearity isn't perfect

but today multibit delta-sigma DACs are the norm for flagship-spec'd audio DACs, and they do have better than 16 bit noise and linearity - we can use some of that even when feeding them only 16 bits, if properly dithered

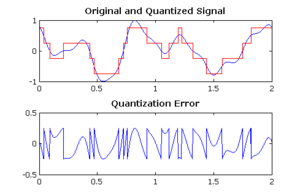

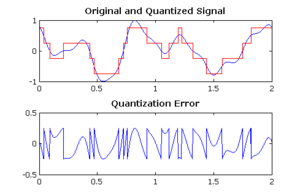

Try to visualize the quantization error for a perfect DAC: it is essentially the difference between the smooth waveform and its staircase version.

It is thus highly correlated to both the signal and the sampling frequency, which is what we want to avoid.
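A small numerical check of that correlation, as a sketch: the amplitude (0.4 LSB), frequency and sample rate are arbitrary assumptions, and TPDF dither stands in for "dither" in general. A sine smaller than half an LSB rounds to zero on every sample, so the staircase is flat and the error is exactly the negated signal.

```python
import math
import random

def corr(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def quant_error(signal, rng=None):
    """Output minus input, in LSBs, for round-to-nearest; adds TPDF dither if rng is given."""
    errors = []
    for x in signal:
        d = (rng.random() - rng.random()) if rng is not None else 0.0
        errors.append(math.floor(x + d + 0.5) - x)
    return errors

FS, F0, AMP = 48000, 1000.0, 0.4          # assumed: 1 kHz sine, 0.4 LSB peak, 1 s
signal = [AMP * math.sin(2 * math.pi * F0 * n / FS) for n in range(FS)]
rho_plain = corr(signal, quant_error(signal))
rho_dither = corr(signal, quant_error(signal, random.Random(7)))
print(rho_plain, rho_dither)
```

Without dither the correlation comes out at -1 (the error is the signal, inverted); with TPDF dither it is close to zero. The error power goes up, but what is left no longer follows the signal.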

Let us concentrate on the disturber only: how can it be eliminated? Simply adding a random or coherent dither will bury it in noise, but it will still be present, and there are limits to the level of noise you can add to mask an unwanted signal: you don't want to degrade the S/N excessively.

The clever answer is to add a dither signal, but not linearly: it will be dependent on the disturber, and will actually jam it, changing it into a variety of pseudo-random noises, more or less colored depending on the type of optimization chosen. The end result will be a quantization error changed into a somewhat higher level of noise, but totally decorrelated from the signal, which is the really important bit.
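The post doesn't name an algorithm, so the following is one common reading of "a dither signal dependent on the disturber", offered only as a sketch: first-order error-feedback noise shaping with TPDF dither inside the loop. `noise_shaped_requantize` is a hypothetical name, and the first-order filter is an assumption; practical shapers often use higher-order filters.

```python
import math
import random

def noise_shaped_requantize(samples, rng):
    """First-order error-feedback requantizer with TPDF dither (all values in LSBs).

    The error committed on each sample is subtracted from the next input,
    so the total output error is e[n] - e[n-1]: a first difference that
    pushes the error power toward high frequencies.
    """
    out = []
    prev_err = 0.0
    for x in samples:
        v = x - prev_err                                        # feed back the last error
        y = math.floor(v + rng.random() - rng.random() + 0.5)   # TPDF-dithered rounding
        prev_err = y - v                                        # error made on this sample
        out.append(y)
    return out

rng = random.Random(11)
x = [4.3] * 20000                          # the DC 4.3 example from earlier in the thread
y = noise_shaped_requantize(x, rng)
s = [yi - xi for yi, xi in zip(y, x)]      # total output error per sample
lag1 = sum(a * b for a, b in zip(s[1:], s[:-1])) / sum(a * a for a in s)
print(sum(y) / len(y), lag1)
```

Because each sample's error is cancelled on the next one, the average of the shaped output tracks 4.3 far more tightly than plain dithered rounding would; the error that remains has a lag-1 autocorrelation near -0.5, meaning its power sits at high frequencies, away from a low-frequency signal. Whether this is exactly what the post means by "dependent on the disturber" is an interpretation.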

Look at the case where the signal is DC. Then, for a value of 4.3, the first conversion may be 4, the next 5, and then 4, 4, 4, 5; the lowpass-filtered value of this would be 4.333. More samples with random dither and no bit-step issues would average to 4.3 in the long term. Now, if you have a sample rate of 48k and a signal at 24k, that does not happen, but at lower frequencies it does get better.
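To put numbers on that DC example, a sketch assuming RPDF (uniform ±0.5 LSB) for the unspecified "random dither" and modelling the reconstruction filter crudely as a block average, where a shorter block loosely stands in for less averaging at higher signal frequencies. The function names are hypothetical.

```python
import math
import random

def dither_requantize(x: float, rng: random.Random) -> int:
    """Round x to an integer code after adding uniform (RPDF) dither of +/-0.5 LSB."""
    return math.floor(x + rng.uniform(-0.5, 0.5) + 0.5)

def block_means(codes, width):
    """Crude stand-in for the reconstruction low-pass: average non-overlapping blocks."""
    return [sum(codes[i:i + width]) / width
            for i in range(0, len(codes) - width + 1, width)]

def mean_abs_dev(values, target):
    """Average distance of the filtered values from the intended level."""
    return sum(abs(v - target) for v in values) / len(values)

rng = random.Random(5)
codes = [dither_requantize(4.3, rng) for _ in range(4096 * 20)]   # only 4s and 5s
short_avg = block_means(codes, 4)       # little averaging: like a high-frequency signal
long_avg = block_means(codes, 4096)     # heavy averaging: like a low-frequency signal
print(mean_abs_dev(short_avg, 4.3), mean_abs_dev(long_avg, 4.3))
```

In this sketch the 4-code averages miss 4.3 by close to 0.2 LSB on average, while the 4096-code averages typically land within about a hundredth of an LSB, which is the "better at lower frequencies" behaviour in miniature.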

dither happens in the creation of the reduced bit depth output stream

Seems reasonable..but it is done prior to putting it on the CD. That explanation does not allow dither applied at the user end, which was why I was confused.

I followed you up to the word "visualize"..😱

You're basically correlating the noise to the quantization error. Doesn't seem correct to me.

Perhaps I'm just dense..😕

I guess I'm lucky in that I never listen to quiet music. Zep turned up to 11..and only Zep 1, 2, and 3..

jn

Look at the case where the signal is DC.

This is the A/D dither I spoke of, and yes I agree, the lower the freq, the better.

Neither my copies of Papoulis, Tretter, nor Schwartz prepared me for diss stuff..now I gots me a headache..

jn

1. The quote function miscued, and it is not the quote I was responding to.

2. The edit function wasn't there either.

3. Papoulis, Tretter, & Schwartz sounds like a law firm!

I wish. No, they are blunt instruments of torture, chosen by professors to inflict the most damage to forming minds...

jn

OK, let's picture it:

No, the final goal is to transform the quantization error into noise, but before you dither, it isn't noise.

When I look at your depiction of quantization noise, I see that it basically won't make it past the brickwall filter.

So what is the dither (or any process, for that matter) actually getting rid of? Spectra that are easily 1/4 LSB in amplitude? Using some kind of FIR or DSP to extrapolate seems to me to be far better than trying to dither.

But what do I know, I'm just a poor country doctor..

jn

ps...BTW, thank you very much for your time and effort, much appreciated.

Seems reasonable..but it is done prior to putting it on the CD. That explanation does not allow dither applied at the user end, which was why I was confused.

jn

jn,

every CD/DVD/SACD playback device, with the exception of a few very early CD players, contains some DSP 'block' that manipulates the original data in one way or another. A digital oversampling filter, either an external IC or built into the latest generation of DAC ICs, is one typical example. It receives 16/24 bit data from the media and performs its calculations (oversampling, low-pass filtering) on that data at a HIGHER resolution than the data itself, i.e. in 24/32/48 or even 64 bits.

Now, the output of such a DSP has to be reduced to the word length that the external DAC IC (or the DAC section integrated into the same IC) can accept. This is a bottleneck of the system: it is 20-24 bits or, very rarely, 32 bits wide. This is where dither 'at the user end' comes into play (one more time), if one doesn't want round-off errors.

Here's the spectrum of the dithered 997 Hz, -110 dB, 16 bit file superimposed on the spectrum of the -110 dB 16 bit file where no dither was used during the 24 to 16 bit conversion. The process was run 'native' in 32 bit float.

Please note that the -110 dB signal is not at the -110 dB mark anymore (!)

This applies equally to any DSP out there.
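A rough re-creation of that -110 dB test, as a sketch only: it generates the 997 Hz tone directly in float (standing in for the 24 bit source), uses TPDF dither and round-to-nearest, and reads the tone level from a single DFT bin instead of a full spectrum plot. All of these are assumptions rather than the poster's actual process, and `tone_level_dbfs` is a hypothetical helper.

```python
import math
import random

FS, F0, N = 44100, 997.0, 44100          # 1 s at 44.1 kHz holds exactly 997 cycles
AMP = 32768 * 10 ** (-110 / 20)           # -110 dBFS sine peak in 16-bit LSBs (about 0.1)
W = 2 * math.pi * F0 / FS                 # radians per sample

def tone_level_dbfs(samples):
    """Level of the 997 Hz component from one DFT bin, in dBFS (full scale = 32768)."""
    re = sum(s * math.cos(W * n) for n, s in enumerate(samples))
    im = sum(s * math.sin(W * n) for n, s in enumerate(samples))
    peak = 2 * math.hypot(re, im) / len(samples)
    return 20 * math.log10(peak / 32768) if peak > 0 else float("-inf")

rng = random.Random(3)
tone = [AMP * math.sin(W * n) for n in range(N)]
plain = [math.floor(x + 0.5) for x in tone]                                   # undithered
dithered = [math.floor(x + rng.random() - rng.random() + 0.5) for x in tone]  # TPDF dither
print(tone_level_dbfs(plain), tone_level_dbfs(dithered))
```

With plain rounding, a tone about 0.1 LSB in amplitude rounds to zero on every sample, so the undithered output in this sketch is digital silence; the dithered output still carries a 997 Hz component close to -110 dBFS, riding on the dither noise. What the undithered file actually shows depends on whether the converter rounds or truncates, which the post doesn't say.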

I figured eventually someone would provide an explanation I could understand. Thank you very much, from this old dog..

jn

I assume that with oversampling you are reading ahead in the digital data in real time so you can perform all of this DSP. How do the non-oversampling implementations work, then? It would seem that even with dither they could (or would) have a much higher chance of data error. Not to make this conversation harder than it already is, but what is going on between the two very different approaches?

Not sure if it's possible to go more off-piste, but I found the following interesting: To Play or Not to Play | Stereophile.com. I fear it will set Frank off. I have the CD referenced and really like it.
