I've been using Linux and Windows 10 for audio processing for many years now, and for DSP and loudspeaker crossovers for the last few years. The Windows Subsystem for Linux (WSL) under Windows 10 has always been interesting, but the deal breaker was always that there was no (native) access to the audio equipment on the computer. Apparently Windows 11 is changing this. It also comes with a refreshed look, which is interesting.

Before installing Win 11, please check the health and system requirements of the PC.

Upgrade Windows 10 to Windows 11

You can also download through ISO file.

Download Windows ISO File Link.

And you can also check Windows updates and security through Windows Insider.

For those who wanted to try Win11.

I installed Win11 yesterday on top of Win10. My machine doesn't have a TPM module and the processor is a 4th generation one. Secure Boot was already enabled. It is working fine so far, and updates are being installed as well. I will do a clean installation next week after making some backups first.

Universal MediaCreationTool wrapper for all MCT Windows 10 versions from 1507 to 21H1 with business (Enterprise) edition support * GitHub

Some folks say it is enough to delete appraiserres.dll from the original ISO so that the installer does not check for TPM and Secure Boot.

Replacing the Install.esd in a win10 install USB with the win11 one should also work.

Plenty of work-arounds...

P.S. It is faster than my old Win10 installation.

I finally got around to installing Windows 11 on a relatively new machine that has built-in TPM 2.0 support. The install went relatively smoothly. My primary reason for installing it was to check out audio under WSL2. I used the default Ubuntu (20.04) install.

At first I could not detect any PulseAudio instance, so as a Hail Mary I installed Firefox and VLC (both interface with the OS audio subsystem). As soon as I pulled up a streaming audio web page, voila, audio came out of the rear jack of the PC. But that was about the only highlight.

Next I installed the PulseAudio volume control tool (pavucontrol) so that I could check levels and change the configuration graphically. This is very convenient under native Ubuntu, for example. When I launched pavucontrol it stayed open for about 10 seconds before crashing, and it took PulseAudio down with it. I tried several times and got the same behavior each time. I had to restart WSL2 to get PulseAudio running again, as pulseaudio --kill and pulseaudio --start did nothing.

Next I installed ALSA and tried to play from e.g. VLC to ALSA's pulse device, which should just direct the audio back to PulseAudio. This also crashed PulseAudio. Then I installed Gstreamer and ran a script that tried to use PulseAudio as a source, sending the audio to a null sink. This crashed PulseAudio as well. The next trial again used Gstreamer: I generated noise internally and sent it to PulseAudio. That worked, but it was about the only success. Any attempt at getting audio FROM PulseAudio was a bust.

This was just me trying random things for a couple of hours. But it looks like there is still some work to be done to get pulse audio under WSL2 working in a more complete way. If I have any breakthroughs I will post here.

Hm, the audio solution for WSL2 in Win11 seems quite ugly https://stackoverflow.com/a/68316880/15717902

Yes, and all in all rather disappointing.

My goal was to use the Linux code that I have developed to run a multichannel DSP crossover whose output could then be routed to Win 11 and on to the audio hardware. It seems there is no chance in (that hot place) this is going to work for the foreseeable future.

Probably the best option now is the g_audio USB mode that you (phofman) have been contributing to, and sending audio to external Linux hardware (e.g. R-Pi) that does the DSP, etc. But that will not be able to use the Win 11 mobo audio, so it is really not a great solution.

Well, the second PA in WSLg should theoretically work, when (if) MS fixes all the bugs. Communicating with PA over localhost should be quite reliable, despite being ugly. Another question is the configuration of that PA instance running in WSLg. Maybe the MS devs would have more detailed information about the options. I would be surprised if they hard-coded that PA config (samplerates etc.). But everything is possible, of course.

The gadget alsa device is quite peculiar. It can stop producing/consuming samples at any time, depending on what the host side does, and there is no way to tell in advance. Standard alsa clients (sox, aplay/arecord/alsaloop) will stall and exit after some rather long timeout.

We have been working with Henrik on proper support of this operation for CamillaDSP https://github.com/HEnquist/camilladsp/pull/179 , it's actually a non-trivial task. I would say CDSP works OK now in the gadget, with the patches applied, together with another control layer which keeps track of the requested samplerate and pcm stream stops and restarts the actual audio software (e.g. https://github.com/pavhofman/gaudio_ctl ). I do not know how other software would handle that, it requires non-blocking alsa access, permanent checking if the device stall has resumed, etc.

If it were just about loopback, e.g. the simple WASAPI loopback example from Henrik's crate could have been used. Compiling a Rust project on Windows is trivial, unlike any code for C/C++ etc. But here two clock domains are merged (USB controller vs. Intel HDA clock) and async resampling should be involved. Again, CDSP is by far the easiest loopback as it includes a well-tested and CPU-optimized ASRC between capture and playback and supports WASAPI very well and openly (no buggy black box which is the standard on Windows).

Actually, an ASIO driver is "just" a COM interface in user-space. There is no reason it could not be implemented in Rust too, it already supports ASIO playback (i.e. talking to the COM interface). Then CDSP could act as an ASIO device, eliminating all the hassle with routing audio to the DSP processes.

I understand you have different software on hand, but Windows is pretty harsh to non-Windows solutions and IMO Rust seems to be currently the best fit for writing cross-platform code with C-level performance.

I've been thinking about a work-around that would let me do routing and DSP processing under WSL2 (Linux) when the source and sink are on the same Windows 11 computer. One way to do this would be to use FFmpeg. I have some experience with this from about 8 years ago, when I started experimenting with streaming audio to remote clients in my home. I used a combination of FFmpeg and VLC at the time, and I remembered some of the capabilities while lying awake at night recently.

FFmpeg is a command line program, available for Windows, that can do many useful audio tasks. One of these is re-streaming audio, as described on this page. It should be possible to obtain audio and send it to a local port on the machine using RTP like this:

Code:

ffmpeg -i INPUT -acodec CODEC -ar RATE -f rtp rtp://host:port

where INPUT is the source of audio and RATE is the sample rate. INPUT could be via DirectShow. The Soxr resampler might be needed to fix the sample rate. This approach would allow all Windows side sounds to be exposed to WSL2.

Under WSL2 I would use my existing Gstreamer based code that does all the DSP stuff. It's a text based (console only) application, so it should work without a problem. The input (source) for Gstreamer would be the RTP stream on the localhost:port that was used by FFmpeg. The output (sink) would be another port on the localhost, e.g. localhost:port2. Here is some good info to read about localhost:

https://devdojo.com/mvnarendrareddy/access-windows-localhost-from-wsl2

The web page explains that you cannot use 127.0.0.1 under WSL2 but have to send to the actual IP address of the local machine.

Back on the Windows side I would need another process to retrieve the RTP stream sent to localhost:port2 by Gstreamer and send it to the DAC. This could be another FFmpeg process, or something else.

This seems a bit roundabout with all the passing back and forth, but it is probably how things need to work for now.

A more modern, full featured and capable replacement for FFmpeg is the software offered by VB-Audio:

https://vb-audio.com/Voicemeeter/vban.htm

I could either install Voicemeeter (the Banana version) or the applications "Receptor" and "Talkie" shown lower down on that same web page. Streams can consist of up to 8 channels, meaning I could use it to implement a stereo 4-way crossover.
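
Since the streams have to be addressed to the real IP of the Windows host rather than 127.0.0.1, it helps to look that address up instead of hard-coding it. The Python sketch below is only an illustration and rests on an assumption not stated in this thread: that WSL2 runs with its default NAT networking, where the nameserver entry in /etc/resolv.conf usually points at the Windows host. The function names, the sample file contents and the port number are hypothetical.

```python
import re


def windows_host_ip(resolv_conf_text):
    """Return the first IPv4 nameserver found in resolv.conf text, or None.

    Assumption: under WSL2's default NAT networking this nameserver is
    normally the Windows host. Verify this on your own machine.
    """
    pattern = re.compile(r"\s*nameserver\s+(\d{1,3}(?:\.\d{1,3}){3})\s*$")
    for line in resolv_conf_text.splitlines():
        match = pattern.match(line)
        if match:
            return match.group(1)
    return None


def rtp_url(host, port):
    """Build the rtp://host:port target used at the end of the ffmpeg command."""
    return f"rtp://{host}:{port}"


# Hypothetical file contents, for illustration only.
SAMPLE_RESOLV_CONF = """# This file was automatically generated by WSL.
nameserver 172.22.192.1
"""

# Prints the URL built from the sample text above.
print(rtp_url(windows_host_ip(SAMPLE_RESOLV_CONF), 5004))
```

Inside WSL2 the text would come from reading /etc/resolv.conf. If the result does not match the Windows-side address, the NIC address of the machine can be entered by hand instead.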

After many months of this thread being dormant, I have an update.

I bought myself a new mini-PC as an early Xmas present, a MINISFORUM HX90. It is perfect for my needs, since my aging ATX tower was running Win8.1, there was no sensible upgrade path to Windows 11, and Win8 will soon no longer be supported. Along with the new machine came Win11 Pro and I knew I had to see what I could do under WSL2.

I have a mature Gstreamer based tool set for implementing DSP crossovers and related stuff. I would like to use these under WSL2 but there is one small problem: WSL2 has only a rudimentary PulseAudio connection to and from Windows and otherwise has no sound capabilities. It cannot directly access the Windows sound system or any of its sources or sinks. Since I use Gstreamer for RTP/UDP streaming around my home I am well versed in how to do this, so I decided to try sending audio between WSL2 and Windows by streaming via ports on the machine. I have been experimenting with this sort of approach for a few days, and after lots of hair pulling I think I am finally getting somewhere.

Overview:

I have installed Gstreamer under both WSL2 (Ubuntu) and Windows. In order to make it possible to send and receive data, you must add rules to the Windows firewall for the ports you will be using. Also, you will need the IP address of the Windows machine. Finally, a loopback on the Windows side is needed - I installed Virtual Cable from VB-Audio. For convenience and to help with debugging I installed the PulseAudio volume control (pavucontrol) under WSL2. I am using VLC as an audio source, playing files.

Here is a description of the initial working setup:

I play an audio file in VLC in Windows. I set VLC's audio device to the Virtual Cable.

On the Windows side I can observe the audio level fluctuating along with the music in Settings>Sound.

On the WSL2 side I can see the audio level fluctuating under Input Devices (the lone device is "RDP source").

NOTE that I did not do anything at all to make this happen. The built-in PulseAudio system is configured to make the default Input/Recording device available to WSL2 right outta the box.

Now that we have audio routed to WSL2 I can use my Gstreamer tools to process it via DSP or whatever. But in order to make use of the processed audio I need to get it back to the Windows side. This is where RTP streaming to a port comes in.

On the WSL side I run this Gstreamer pipeline:

Code:

gst-launch-1.0 -vvv autoaudiosrc ! audioconvert ! audio/x-raw,channels=2,format=S24LE ! audioresample ! audio/x-raw,rate=96000 ! queue ! audioconvert ! rtpL24pay ! udpsink host='192.168.1.XXX' port='PPPPP'

where XXX is the last part of the IP address of the computer (the Win-11 NIC IP address) and PPPPP is the port I chose to use.

On the Windows side I run this Gstreamer pipeline in the command window:

Code:

gst-launch-1.0.exe udpsrc port=PPPPP ! application/x-rtp,media=audio,clock-rate=96000,encoding-name=L24,channels=2,payload=96 ! rtpL24depay ! queue ! audioconvert ! autoaudiosink

These commands take the audio (on the WSL2 side), convert it to a new format and rate, and stream it using RTP to the port PPPPP, then receive it back on the Windows side, depayload it from RTP and send it on to the default audio sink.

This produces audio from the default audio sink (Output device) defined under Settings>Sound in Windows. Woo-hoo!

This is showing some promise, but it also has some limitations. It seems that PulseAudio reformats the audio to 16 bit 44.1k and only that format is available when it comes out on the WSL2 side. I suspect the audio properties can be changed via some configuration of PA but I have not yet looked into that. I might also be able to stream audio from the Windows side to the WSL2 side on another port in the format set for the Virtual Cable under Windows.

Also, it would be nice to be able to specify the Windows audio device to send the processed audio to as part of the Windows side Gstreamer pipeline instead of just the default sink. It turns out that Gstreamer under Windows has a utility called gst-device-monitor-1.0.exe. Running this command in the command window produces a list of all the devices that Gstreamer can access. This list has a separate entry for each mode that each device can operate under, and by looking through the list I found my desired audio sink and mode listed like this:

Code:

Device found:
name : SPDIF Interface (UX1 USB DAC)
class : Audio/Sink
caps : audio/x-raw, format=F32LE, layout=interleaved, rate=96000, channels=2, channel-mask=0x0000000000000003
properties:
device.api = wasapi
device.strid = {0.0.0.00000000}.{f7d66cf4-b5bd-420a-8704-acd3621b959a}
wasapi.device.description = SPDIF Interface (UX1 USB DAC)
gst-launch-1.0 ... ! wasapisink device="\{0.0.0.00000000\}.\{f7d66cf4-b5bd-420a-8704-acd3621b959a\}"

What I need is on the last line, which shows how to use Gstreamer's WASAPI sink element to send the audio to this device in this mode (32 bit float at 96k Hz):

Code:

wasapisink device="\{0.0.0.00000000\}.\{f7d66cf4-b5bd-420a-8704-acd3621b959a\}"

To direct the audio to this device I replace "autoaudiosink" at the end of the Windows Gstreamer command with this text.

So, that is the update for today. A bit of success!
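
The sender and receiver pipelines in this post only line up if the channel count, sample rate and port are the same on both sides. The receiver cannot discover them from the L24 stream and has to be told via the caps on udpsrc. As a rough sketch of one way to keep the two in sync, the Python below builds both argument strings from the same parameters. The function names and the example address and port are made up for illustration. The pipeline elements are the ones used in this post.

```python
def sender_pipeline(host, port, channels=2, rate=96000):
    """Arguments for gst-launch-1.0 on the WSL2 side: capture, convert, RTP out."""
    return (
        f"autoaudiosrc ! audioconvert ! audio/x-raw,channels={channels},format=S24LE "
        f"! audioresample ! audio/x-raw,rate={rate} ! queue ! audioconvert "
        f"! rtpL24pay ! udpsink host={host} port={port}"
    )


def receiver_pipeline(port, channels=2, rate=96000, sink="autoaudiosink"):
    """Arguments for gst-launch-1.0.exe on the Windows side: RTP in, play out."""
    return (
        f"udpsrc port={port} ! application/x-rtp,media=audio,clock-rate={rate},"
        f"encoding-name=L24,channels={channels},payload=96 "
        f"! rtpL24depay ! queue ! audioconvert ! {sink}"
    )


# Hypothetical address and port, for illustration only.
print("gst-launch-1.0 -v " + sender_pipeline("192.168.1.10", 5004))
print("gst-launch-1.0.exe " + receiver_pipeline(5004))
```

Passing a different sink string (for example a wasapisink element with a device id) swaps the output device without touching the rest of the receiver.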

OK, making more progress...

I am now bypassing PulseAudio completely since that allows me to choose the audio format and sample rate that is passing through the chain. I set the Windows default audio device to the Virtual Cable (loopback device) input. That way any audio app will send its output through this chain. Then I use several Gstreamer pipelines:

- stream audio from Windows to WSL2

- in WSL2 receive the stream, process the audio (not actually doing this yet), and stream it back to Windows

- receive the stream back on the Windows side and route it to a physical sink (e.g. DAC).

Using the above I can maintain a fixed 24/32 bit format at 96k Hz throughout. So far it seems to be stable and sounds good. Just listening to various sources using headphones while developing and debugging.

The next step is to plug in a multichannel USB interface to check the ability to send N channels back to Windows from WSL2 and then to the audio device. If that works then I can be confident that all the necessary parts of a local DSP processing setup are functioning properly.

Also, I found this nice volume control app called Ear Trumpet that makes accessing volume controls for apps, sources, and sinks very convenient via a taskbar shortcut icon. Highly recommended.
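
Before moving to N channels it may be worth estimating how much data the uncompressed L24 stream carries in each direction. The arithmetic is channels x sample rate x 24 bits. The Python sketch below does that calculation and also estimates how many audio frames fit in one packet. The 1400 byte payload budget is an assumed figure for illustration, not a value taken from this setup, and RTP/UDP/IP header overhead is ignored.

```python
def l24_payload_bitrate(channels, rate_hz, bits_per_sample=24):
    """Raw audio payload in bits per second (packet headers not included)."""
    return channels * rate_hz * bits_per_sample


def frames_per_packet(channels, max_payload_bytes=1400, bytes_per_sample=3):
    """Whole audio frames that fit in one packet payload of the assumed size."""
    return max_payload_bytes // (channels * bytes_per_sample)


for ch in (2, 10):
    mbit = l24_payload_bitrate(ch, 96000) / 1e6
    print(f"{ch} channels at 96 kHz: {mbit:.3f} Mbit/s, "
          f"{frames_per_packet(ch)} frames per packet")
```

For stereo at 96 kHz this comes to 4.608 Mbit/s and for 10 channels to 23.04 Mbit/s, which is small for traffic that never leaves the machine.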

Update:

I got my Gstreamer app up and running under WSL2 (Ubuntu). I use it to do routing and filtering (what a crossover does, in a nutshell) with the filters implemented via LADSPA plugins. So all good there.

Unfortunately I have run into a major problem, or so it seems. I am receiving the audio back on the Windows side using another Gstreamer pipeline that sends the audio via WASAPI to the endpoint. I decided to try a couple of different multichannel DACs that I own. The first, a MOTU UltraLite mk5, has 10 output channels but Windows audio only presents these as 2-channel pairs, e.g. there is one audio endpoint for channels 1&2, another for channels 3&4, etc. That isn't very helpful or useful. What I want is ONE device that presents ALL of the output channels. So I moved on to the Behringer UMC1820. I can see this as a 10-channel output device from Gstreamer under Windows using the app gst-device-monitor-1.0. The problem is that there is no associated channel-mask for the 10 channel mode. I have faced this problem under Linux, but there I figured out that all the channels must be set with a flag that essentially says "no assignment", which makes the overall channel-mask equal to NULL or something. Then it would work perfectly. But under Windows I cannot seem to get this to happen, and the device driver will not accept a NULL channel mask (it throws an error).

There is likely some workaround for this, but I am not sure what that is at this point in time. I might just need to use a different app on the Windows side to receive the RTP stream and play out the audio to the endpoint.

Alternatively I could try to find a multichannel DAC that exposes a proper channel-mask to Gstreamer, but that sort of limits what audio hardware I can use. I would rather find a solution to the channel-mask problem.

And finally.... SUCCESS!

It turns out I was using the wrong WASAPI device id for the endpoint (the UMC1820) back on the Windows side. I found a different one that uses Gstreamer's wasapi2sink, which is very similar to the wasapisink that I used earlier. I had not seen it before in the very extensive device+mode list (81 different ones!) that gst-device-monitor-1.0 produces. Also, I had to set all of the channel assignments to "0" under Gstreamer, which produced the channel mask 0x0, because the null mask was not accepted by the RTP payloader.

So, I now have a 10-channel crossover up and running at 24 bit / 96k Hz, with sources playing in Windows, the audio routed to WSL2 where my Gstreamer app implements the crossover, and then back to Windows and out through the UMC1820. Nice!

I think I am starting to like WSL2, especially the inter-operability between Linux and Windows commands from the same prompt. That can do some interesting things! For example, I did the following:

Code:

"D:\gstreamer\1.0\msvc_x86_64\bin\gst-device-monitor-1.0.exe" | wsl grep name | wsl wc

The above runs Gstreamer-Windows' device monitor, then uses the Linux commands grep and wc (word count) to count up the number of instances, e.g. count the number of device+mode entries for audio endpoints under Windows.

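
With that many device+mode entries, picking the right one by eye is error prone, as I found out. A small script can split the gst-device-monitor-1.0 listing into records and filter them. The Python below is only a sketch: it assumes the listing has the same "key : value" and "key = value" layout as the entry quoted earlier in this thread, and the function name and the shortened sample text are illustrative.

```python
def parse_devices(monitor_output):
    """Split gst-device-monitor-1.0 text into one dict per 'Device found:' entry."""
    devices = []
    current = None
    for raw in monitor_output.splitlines():
        line = raw.strip()
        if line == "Device found:":
            current = {}
            devices.append(current)
        elif current is not None:
            # Top-level fields use " : ", properties use " = ".
            for sep in (" : ", " = "):
                key, found, value = line.partition(sep)
                if found:
                    current[key.strip()] = value.strip()
                    break
    return devices


# Entry copied from the listing earlier in this thread.
SAMPLE_OUTPUT = """Device found:
name : SPDIF Interface (UX1 USB DAC)
class : Audio/Sink
caps : audio/x-raw, format=F32LE, layout=interleaved, rate=96000, channels=2, channel-mask=0x0000000000000003
properties:
device.api = wasapi
device.strid = {0.0.0.00000000}.{f7d66cf4-b5bd-420a-8704-acd3621b959a}
wasapi.device.description = SPDIF Interface (UX1 USB DAC)
"""

sinks = [d for d in parse_devices(SAMPLE_OUTPUT) if d.get("class") == "Audio/Sink"]
for d in sinks:
    print(d["name"], d.get("device.api"), d.get("device.strid"))
```

Filtering on the caps field (for example on channels=10) or on device.api would narrow the list to the candidates worth trying.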

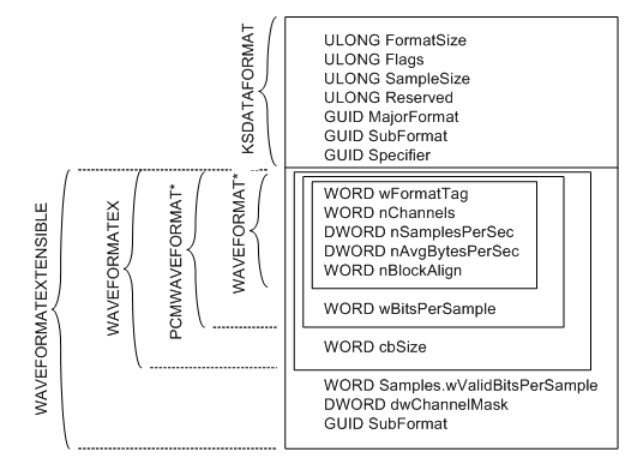

Yeah, WASAPI and channel masks are a never-ending story. In my wasapi-exclusive driver for javasound the development was trial/error while testing a number of interfaces by several people, with every turn discovering new supported-only combinations. I ended up with a series of checks for each device format https://github.com/pavhofman/csjsou...d08bc07a281857d2cf1c6f2f6/src/formats.rs#L106 :

1. First masks defined in PortAudio https://github.com/pavhofman/csjsou...1d08bc07a281857d2cf1c6f2f6/src/formats.rs#L70

2. Then linear bitmasks (e.g. 6ch = 6 LSBs)

3. Then a zero channel mask (some capture devices require that, but many other devices refuse that)

4. Plus checking the legacy WAVEFORMATEX support for 2ch/16b devices as requested in https://learn.microsoft.com/en-us/w...o/device-formats#specifying-the-device-format

No idea why MS "architects" made the channel mask compulsory, without any API method for the driver to give hints of accepted channel combinations.

Plus the confusing names WAVEFORMATEX vs. WAVEFORMATEXTENSIBLE
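
To make the order of checks above concrete, here is a rough Python sketch that generates candidate channel masks for a given channel count: known layout masks first, then the linear bitmask, then zero. It is not the code from the linked formats.rs. The table uses the standard Windows KSAUDIO_SPEAKER_* values as a stand-in for the PortAudio list, and the function name is made up. Step 4 (the legacy WAVEFORMATEX check) is not covered.

```python
# Standard Windows speaker-layout masks (KSAUDIO_SPEAKER_* values).
# Stand-in for step 1; the real list in the linked source may differ.
STANDARD_MASKS = {
    1: [0x4],           # mono (front center)
    2: [0x3],           # stereo
    4: [0x33],          # quad
    6: [0x3F, 0x60F],   # 5.1 and 5.1 surround
    8: [0x63F, 0xFF],   # 7.1 surround and legacy 7.1
}


def candidate_masks(channels):
    """Masks to try in order: known layouts, linear bitmask, then zero."""
    candidates = list(STANDARD_MASKS.get(channels, []))
    candidates.append((1 << channels) - 1)  # e.g. 6ch = 6 LSBs = 0x3F
    candidates.append(0)                    # accepted by some devices only
    ordered = []
    for mask in candidates:
        if mask not in ordered:             # drop duplicates, keep order
            ordered.append(mask)
    return ordered


for ch in (2, 6, 10):
    print(ch, [hex(m) for m in candidate_masks(ch)])
```

For a 10-channel device such as the UMC1820 this leaves only the linear mask 0x3ff and the zero mask to try, since there is no standard 10-channel layout in the table.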

It's possible to probe an endpoint for the correct channel mask and other info using Gstreamer. I developed a script file to do this under ALSA/Linux, and I just did it manually under Windows for the UMC1820 that I was trying to get working.

It is done like this: I create a Gstreamer pipeline that starts with audiotestsrc and the desired number of channels, format, and sample rate. The pipeline ends with the sink in question, and I invoke Gstreamer with the -vm switch to get more debug output. The output includes info on whether the mode is supported and the channel mask required. I am not sure how Gstreamer gets that info under Windows (or Linux for that matter), only that I can parse the output to gain insight.

When I run this for the Behringer UMC1820 under Windows the output shows that the channel-mask is 0x00. Since the sink is a 10 channel one, this means that there is no valid channel mask for it. Under Linux I have to configure each channel as "no mask" under Gstreamer, and it is only the order of channels in the stream that determines which audio channel they emerge from on the physical device.

An example of this approach under Windows is shown below.

Info about the sink is provided in messages 30-38.

Code:

C:\Users\charl>"D:\gstreamer\1.0\msvc_x86_64\bin\gst-launch-1.0.exe" -vm audiotestsrc wave=silence ! audio/x-raw,channels=10,format=F32LE,rate=96000 ! audioconvert ! wasapi2sink device="\\\\\?\\SWD\#MMDEVAPI\#\{0.0.0.00000000\}.\{e3e942ee-10c6-4425-a255-b06b875dc9b3\}\#\{e6327cad-dcec-4949-ae8a-991e976a79d2\}"

Use Windows high-resolution clock, precision: 1 ms

Setting pipeline to PAUSED ...

Pipeline is PREROLLING ...

Got message #12 from element "wasapi2sink0" (state-changed): GstMessageStateChanged, old-state=(GstState)null, new-state=(GstState)ready, pending-state=(GstState)void-pending;

Got message #13 from element "audioconvert0" (state-changed): GstMessageStateChanged, old-state=(GstState)null, new-state=(GstState)ready, pending-state=(GstState)void-pending;

Got message #14 from element "capsfilter0" (state-changed): GstMessageStateChanged, old-state=(GstState)null, new-state=(GstState)ready, pending-state=(GstState)void-pending;

Got message #15 from element "audiotestsrc0" (state-changed): GstMessageStateChanged, old-state=(GstState)null, new-state=(GstState)ready, pending-state=(GstState)void-pending;

Got message #16 from element "pipeline0" (state-changed): GstMessageStateChanged, old-state=(GstState)null, new-state=(GstState)ready, pending-state=(GstState)paused;

Got message #19 from element "audioconvert0" (state-changed): GstMessageStateChanged, old-state=(GstState)ready, new-state=(GstState)paused, pending-state=(GstState)void-pending;

Got message #20 from element "capsfilter0" (state-changed): GstMessageStateChanged, old-state=(GstState)ready, new-state=(GstState)paused, pending-state=(GstState)void-pending;

Got message #23 from pad "audiotestsrc0:src" (stream-status): GstMessageStreamStatus, type=(GstStreamStatusType)create, owner=(GstElement)"\(GstAudioTestSrc\)\ audiotestsrc0", object=(GstTask)"\(GstTask\)\ audiotestsrc0:src";

Got message #24 from element "audiotestsrc0" (state-changed): GstMessageStateChanged, old-state=(GstState)ready, new-state=(GstState)paused, pending-state=(GstState)void-pending;

Got message #25 from pad "audiotestsrc0:src" (stream-status): GstMessageStreamStatus, type=(GstStreamStatusType)enter, owner=(GstElement)"\(GstAudioTestSrc\)\ audiotestsrc0", object=(GstTask)"\(GstTask\)\ audiotestsrc0:src";

Got message #26 from element "pipeline0" (stream-start): GstMessageStreamStart, group-id=(uint)1;

Got message #30 from pad "audiotestsrc0:src" (property-notify): GstMessagePropertyNotify, property-name=(string)caps, property-value=(GstCaps)"audio/x-raw\,\ format\=\(string\)F32LE\,\ layout\=\(string\)interleaved\,\ rate\=\(int\)96000\,\ channels\=\(int\)10\,\ channel-mask\=\(bitmask\)0x0000000000000000";

/GstPipeline:pipeline0/GstAudioTestSrc:audiotestsrc0.GstPad:src: caps = audio/x-raw, format=(string)F32LE, layout=(string)interleaved, rate=(int)96000, channels=(int)10, channel-mask=(bitmask)0x0000000000000000

Got message #32 from pad "capsfilter0:src" (property-notify): GstMessagePropertyNotify, property-name=(string)caps, property-value=(GstCaps)"audio/x-raw\,\ format\=\(string\)F32LE\,\ layout\=\(string\)interleaved\,\ rate\=\(int\)96000\,\ channels\=\(int\)10\,\ channel-mask\=\(bitmask\)0x0000000000000000";

/GstPipeline:pipeline0/GstCapsFilter:capsfilter0.GstPad:src: caps = audio/x-raw, format=(string)F32LE, layout=(string)interleaved, rate=(int)96000, channels=(int)10, channel-mask=(bitmask)0x0000000000000000

Got message #34 from pad "audioconvert0:src" (property-notify): GstMessagePropertyNotify, property-name=(string)caps, property-value=(GstCaps)"audio/x-raw\,\ format\=\(string\)F32LE\,\ layout\=\(string\)interleaved\,\ rate\=\(int\)96000\,\ channels\=\(int\)10\,\ channel-mask\=\(bitmask\)0x0000000000000000";

/GstPipeline:pipeline0/GstAudioConvert:audioconvert0.GstPad:src: caps = audio/x-raw, format=(string)F32LE, layout=(string)interleaved, rate=(int)96000, channels=(int)10, channel-mask=(bitmask)0x0000000000000000

Got message #35 from element "wasapi2sink0" (latency): no message details

Redistribute latency...

Got message #36 from pad "wasapi2sink0:sink" (property-notify): GstMessagePropertyNotify, property-name=(string)caps, property-value=(GstCaps)"audio/x-raw\,\ format\=\(string\)F32LE\,\ layout\=\(string\)interleaved\,\ rate\=\(int\)96000\,\ channels\=\(int\)10\,\ channel-mask\=\(bitmask\)0x0000000000000000";

/GstPipeline:pipeline0/GstWasapi2Sink:wasapi2sink0.GstPad:sink: caps = audio/x-raw, format=(string)F32LE, layout=(string)interleaved, rate=(int)96000, channels=(int)10, channel-mask=(bitmask)0x0000000000000000

Got message #37 from pad "audioconvert0:sink" (property-notify): GstMessagePropertyNotify, property-name=(string)caps, property-value=(GstCaps)"audio/x-raw\,\ format\=\(string\)F32LE\,\ layout\=\(string\)interleaved\,\ rate\=\(int\)96000\,\ channels\=\(int\)10\,\ channel-mask\=\(bitmask\)0x0000000000000000";

/GstPipeline:pipeline0/GstAudioConvert:audioconvert0.GstPad:sink: caps = audio/x-raw, format=(string)F32LE, layout=(string)interleaved, rate=(int)96000, channels=(int)10, channel-mask=(bitmask)0x0000000000000000

Got message #38 from pad "capsfilter0:sink" (property-notify): GstMessagePropertyNotify, property-name=(string)caps, property-value=(GstCaps)"audio/x-raw\,\ format\=\(string\)F32LE\,\ layout\=\(string\)interleaved\,\ rate\=\(int\)96000\,\ channels\=\(int\)10\,\ channel-mask\=\(bitmask\)0x0000000000000000";

/GstPipeline:pipeline0/GstCapsFilter:capsfilter0.GstPad:sink: caps = audio/x-raw, format=(string)F32LE, layout=(string)interleaved, rate=(int)96000, channels=(int)10, channel-mask=(bitmask)0x0000000000000000

Got message #42 from element "wasapi2sink0" (tag): GstMessageTag, taglist=(taglist)"taglist\,\ description\=\(string\)\"audiotest\\\ wave\"\;";

Got message #43 from element "wasapi2sink0" (state-changed): GstMessageStateChanged, old-state=(GstState)ready, new-state=(GstState)paused, pending-state=(GstState)void-pending;

Got message #46 from element "pipeline0" (state-changed): GstMessageStateChanged, old-state=(GstState)ready, new-state=(GstState)paused, pending-state=(GstState)void-pending;

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

Got message #45 from element "pipeline0" (async-done): GstMessageAsyncDone, running-time=(guint64)18446744073709551615;

Got message #47 from element "wasapi2sink0" (latency): no message details

Redistribute latency...

Got message #49 from element "pipeline0" (new-clock): GstMessageNewClock, clock=(GstClock)"\(GstAudioClock\)\ GstAudioSinkClock";

New clock: GstAudioSinkClock

Got message #51 from element "wasapi2sink0" (state-changed): GstMessageStateChanged, old-state=(GstState)paused, new-state=(GstState)playing, pending-state=(GstState)void-pending;

Got message #52 from element "audioconvert0" (state-changed): GstMessageStateChanged, old-state=(GstState)paused, new-state=(GstState)playing, pending-state=(GstState)void-pending;

Got message #53 from element "capsfilter0" (state-changed): GstMessageStateChanged, old-state=(GstState)paused, new-state=(GstState)playing, pending-state=(GstState)void-pending;

Got message #54 from element "audiotestsrc0" (state-changed): GstMessageStateChanged, old-state=(GstState)paused, new-state=(GstState)playing, pending-state=(GstState)void-pending;

Got message #55 from element "pipeline0" (state-changed): GstMessageStateChanged, old-state=(GstState)paused, new-state=(GstState)playing, pending-state=(GstState)void-pending;

handling interrupt.9.

Got message #212 from element "pipeline0" (application): GstLaunchInterrupt, message=(string)"Pipeline\ interrupted";

Interrupt: Stopping pipeline ...

Execution ended after 0:00:02.151822000

Setting pipeline to NULL ...

Freeing pipeline ...
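The caps lines in a log like this can be pulled out with standard tools rather than read by eye. A minimal sketch, assuming the verbose output has the same "GstPad:sink: caps = ..." layout as in the log above; the function name sink_caps_summary is made up for illustration.

```shell
#!/bin/sh
# Reads gst-launch-1.0 verbose output on stdin and prints the channel count
# and channel mask found on the first sink-pad caps line.
sink_caps_summary() {
    grep 'GstPad:sink: caps = ' | head -n 1 |
        sed -n 's/.*channels=(int)\([0-9]*\).*channel-mask=(bitmask)\(0x[0-9a-fA-F]*\).*/channels=\1 mask=\2/p'
}
```

Piping the saved output of the run above through it (e.g. `sink_caps_summary < gst.log`, where gst.log is a hypothetical capture file) gives `channels=10 mask=0x0000000000000000`. It prints nothing if no sink caps line with a channel mask is found.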

"It's possible to probe an endpoint for the correct channel mask and other info using Gstreamer"

The gst source code suggests that this works only for WASAPI shared mode, which is much less picky about formats because the Windows audio engine can do all sorts of reformatting (similar to the plug plugin in ALSA). Exclusive mode (gst property "exclusive") is like the hw:X device in ALSA: no format adjustments, or only minimal ones.

Let's look at the gst code. The call to gst_wasapi_util_get_device_format does not pass in any gst configs https://github.com/GStreamer/gst-pl...4fdedfe2f33fa/sys/wasapi/gstwasapisink.c#L357 ; the method talks only to WASAPI.

The method body first calls IAudioClient_GetMixFormat https://github.com/GStreamer/gst-pl...4fdedfe2f33fa/sys/wasapi/gstwasapiutil.c#L467 , which supplies shared-mode params https://learn.microsoft.com/en-us/w...ient/nf-audioclient-iaudioclient-getmixformat . These can fail for exclusive mode https://github.com/GStreamer/gst-pl...4fdedfe2f33fa/sys/wasapi/gstwasapiutil.c#L474 . Then it checks the WASAPI property PKEY_AudioEngine_DeviceFormat, which happens to be for shared mode too https://learn.microsoft.com/en-us/windows/win32/coreaudio/pkey-audioengine-deviceformat . If this format fails https://github.com/GStreamer/gst-pl...4fdedfe2f33fa/sys/wasapi/gstwasapiutil.c#L509 , gst quits and throws an error. I could not find any place where gst tries calls with various channel masks in exclusive mode. It even mentions the iterative checks in https://github.com/GStreamer/gst-pl...4fdedfe2f33fa/sys/wasapi/gstwasapiutil.c#L767 , but IIUC that place is not related to generating a supported exclusive-mode format; it is called after the method gst_wasapi_util_get_device_format.

IMO gst does not seem to implement full support for exclusive mode, as all format checks are derived from the shared-mode formats obtained by the IAudioClient_GetMixFormat call.

@phofman, thanks for looking into that. I had only attempted to test it once under Windows for the wasapi sink, and based on my experiences under Linux I assumed it would work the same, that is, be able to return the audio format and chmask info (which it does under Linux when attempting to sink to hw:x, like you mentioned). Oh well. In any case I managed to get my pipelines working and can process audio under WSL2 as I had hoped. For now I don't have a need, since I am not planning to run a multiway crossover on my primary desktop box, but it was a good exercise. I would guess that in the future, when PipeWire is fully supported in Ubuntu, WSLx will use it and everything will be easier.

I wrote a script to make command line recording on Linux less annoying to use.

Feel free to use it or modify it. I named the script "arec" so the usage would be "arec filename" and if the filename exists it says so and aborts so you don't overwrite the old file.

I use 32 bit because my audio stack doesn't play nicely with 24 bit (pipewire just doesn't seem to work if it's set to S24_LE). I will soon experiment with using FLOAT_LE as the format.

Code:

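#!/bin/sh
# Record to /data/incoming/audio/<name>; refuse to overwrite an existing file.
if [ -f "/data/incoming/audio/$1" ]; then
    # Report the clash on stderr and abort.
    echo "File $1 exists." >&2
    exit 1
else
    arecord --device=hw:0,1 --format=S32_LE --rate=96000 --channels=2 --vumeter=stereo "/data/incoming/audio/$1"
fi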

Just a note: in ALSA format names, S24_LE is 24-bit wrapped into 32 bits (4 bytes). Standard packed 24-bit (i.e. sample length 3 bytes) is S24_3LE. This format naming is very confusing indeed.
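To make the container sizes concrete, here is a small sketch in POSIX sh that maps a few ALSA format names to bytes per sample and works out the raw data rate. The function names are made up for illustration, and only the formats mentioned in this thread (plus S16_LE) are covered.

```shell
#!/bin/sh
# Bytes occupied by one sample for a few ALSA format names.
# Note that S24_LE uses a 4-byte container; only S24_3LE is packed into 3.
bytes_per_sample() {
    case "$1" in
        S16_LE) echo 2 ;;
        S24_3LE) echo 3 ;;
        S24_LE | S32_LE | FLOAT_LE) echo 4 ;;
        *) echo "unknown format: $1" >&2; return 1 ;;
    esac
}

# Raw data rate in bytes per second: bytes_per_second FORMAT RATE CHANNELS
bytes_per_second() {
    bps=$(bytes_per_sample "$1") || return 1
    echo $(( bps * $2 * $3 ))
}
```

For the 96 kHz stereo recording above, `bytes_per_second S32_LE 96000 2` and `bytes_per_second S24_LE 96000 2` both give 768000, while `bytes_per_second S24_3LE 96000 2` gives 576000.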
