Playing with VituixCAD sims, for some reason the nicest, smoothest graphs with full data are the ones where the phases are pretty well aligned. I'm not sure why, or whether it is the cause or the consequence, and I don't know if it sounds the best. It sounds good enough that I haven't bothered to try anything else for some time now; I've been concentrating on the acoustic properties, the baffle and the enclosure.

In the '90s, I spent some months writing my first graphical crossover simulator. I took careful measurements and over a space of time, I brought phase very close together. I didn't find what I was looking for then and I haven't found it since.

Current thinking suggests that there are other issues causing the flaws we hear with common speakers. Also, that lining up phase is not even always the best option.

Ideally this was already done in the design stage. You'd have phase alignment on the acoustic axis (the intended listening axis) at one meter. That puts the main forward lobe where you want it.

What about measuring at the listening position? I know it would be rather specific, but those who want to go the extra mile could include the room anomalies in the phase correction?

After that I don't bother w/phase compensation in any other direction(s). I just remove any peaks that show up off axis. Myself, I'm restricted to (1) PEQ and a shelf in the woofer section and (2) PEQ and a shelf in the mid/tweeter section. So far that has been sufficient to even out the response to a happy medium on and off axis, as I'm not a big fan of excessive EQ. I'm able to walk in front of the pair of loudspeakers, left to right, without noting any glaring frequency problems. Both loudspeakers are "flat" someplace but not necessarily "on axis".

On the subject of Zobels, it's absolutely necessary IMO to use them on higher inductance drivers to get filters to somewhat phase align in the filter overlap region. Listening to a complete system and just inserting a proper Zobel, it just "pulls together" the overlapping drivers sonically, allowing them to integrate better. I even use them on tweeters and use the minimum driver impedance value as my series R in the Zobel itself, tuning it until I get the flattest resistance possible past the xover point. Sometimes you can tweak the phase in the xover region with the Zobel to get the "summing bubble" better in line on the listening axis. I'm talking very subtle cap and resistor changes here.
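As a starting point before that kind of tuning, the usual textbook approximation for a series R-C Zobel is C = Le / R², with R chosen as described above. A minimal sketch; the 6 ohm and 0.5 mH driver values are hypothetical, and since voice coil inductance is not really constant with frequency the result is only a first guess to be trimmed by measurement:

```python
def zobel_values(series_r_ohm: float, voice_coil_inductance_h: float) -> tuple[float, float]:
    """Return (R in ohms, C in farads) for a series R-C Zobel across a driver.

    Uses the common approximation C = Le / R^2.
    """
    if series_r_ohm <= 0 or voice_coil_inductance_h <= 0:
        raise ValueError("R and Le must be positive")
    capacitance_f = voice_coil_inductance_h / series_r_ohm ** 2
    return series_r_ohm, capacitance_f


# Hypothetical driver: 6 ohm minimum impedance, 0.5 mH voice coil inductance.
r_ohm, c_farad = zobel_values(6.0, 0.5e-3)
print(f"R = {r_ohm:.1f} ohm, C = {c_farad * 1e6:.1f} uF")
```

From there the cap and resistor would be nudged in small steps while watching the measured impedance and phase.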

Acoustic center alignment is another critical thing. I don't build any 2 way without it. The rewards are great if this is done and I can't overemphasize this. It simplifies HP and LP, getting as close to an optimal phase response in the xover region as possible.

Multiple LF driver designs are better for LF in room response but come at the price of xover complexity. I generally prefer multiple smaller drivers doing the low end and midrange because they have the ability to cover the midbass with lower distortion and higher output potential. Percussion, bass and piano have the body and weight they need to be felt as well as heard without being boomy or thick sounding.

Large jumps in phase response are easily perceived by the ear and ruin the overall sound of a speaker more than frequency response dips. Therefore I'd rather get the phase right than obsess about super flat response. You can hear +/- 1 dB in the 2 - 5 kHz range, so it's more important to concentrate on that area and preferably have one driver reproduce it, which means a low xover 2 way or a 3 way with a smaller mid. If you do cross over in that area, make sure the phase is stable for good three dimensional imaging and minimal lobing or combing.

Unfortunately many 2 way speakers cross the tweeter too low for its output capability. That may satisfy the above criteria for midrange quality, but it usually means dynamics suffer due to limited power handling and excursion limited distortion. I personally would rather run the woofer up higher, if permitted by the quality of the LF driver, than push the tweeter lower. I know many of you disagree, but I can't handle the sound of a stressed tweeter compared to the woofer running a little into controlled cone breakup if it's managed well.

I must admire how much knowledge is required for passive xo design and tweaking! I've been working with DSP only and find it trivial; delay is one of the most important tools for getting a good response (using a waveguide/horn). Currently the development is about thinking and experimenting within the acoustic domain, where all the problems arise: the drivers, spacings, alignments, baffles and whatnot.

...

Large jumps in phase response are easily perceived by the ear and ruin the overall sound of a speaker more than frequency response dips. Therefore I'd rather get the phase right than obsess about super flat response. You can hear +/- 1 dB in the 2 - 5 kHz range, so it's more important to concentrate on that area and preferably have one driver reproduce it, which means a low xover 2 way or a 3 way with a smaller mid. If you do cross over in that area, make sure the phase is stable for good three dimensional imaging and minimal lobing or combing.

...

I wonder why three dimensional imaging is affected by phase. Is it the phase itself, as some kind of temporal thing we perceive, or is it just because the frequency response in various off-axis directions changes with the phase relationship of transducers that are not coincident?

I mean, at a far field listening spot the phase is not minimum phase but the result of all kinds of combinations of reflections in the room, so perceiving the phase difference of the drivers as such at the listening spot doesn't make sense to me currently. It would be more intuitive to assume that the phase difference, manifested as a frequency response that varies with off-axis angle, affects the perceived sound at the listening spot more than the phase itself.

The sound of the early reflections at the listening spot mostly depends on the off-axis frequency response of the speaker (especially for the first early reflections, which are the loudest and least delayed) and on how they sum with the direct sound at the listening spot. ER delays and SPL can be affected by speaker positioning, toe in and pattern control, but the off-axis frequency response is also affected by the phase difference around xo.

What are your thoughts on this?

If this is the case, then good sound would be achieved not necessarily by aligning the phase traces per se but by optimizing the phases so that the first early reflection frequency response is akin to the listening window frequency response. This might be optimized when the phases are aligned, at least in some configurations. I haven't checked too deeply on this. For my prototype projects the phases have been pretty much aligned for smoothest DI and ERDI, at least within 45 degrees, mostly much less.

edit. if this was the case, the thread title would be more accurate as "Early reflections based method of designing multi-way speakers"

edit. well, I forgot to think about the effect of phase tracking on the on-axis sound. I guess there would be temporal things in the on-axis sound that affect the perceived sound, in addition to the frequency response difference between listening window and ER? If this was the case, then phase alignment is a must and the physical construct must be such that both happen: the temporal alignment in the listening window and the likeness of the listening window and ER frequency responses. Did we just arrive at point source sound? Can it be achieved with non coincident transducers?

Made a few visualizations to support the discussion. These are measurements of a prototype box with only mid and tweeter, and the c-c spacing is changed in the simulator to test what kind of relationship phase tracking has with the ER and listening window responses.

c-c spacings on all attached GIFs are 0 (coincident), 0.9wl at xo (as close as possible with the prototype box), 1.2wl and 1.4wl increased c-c spacing.
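For readers who want those spacings as physical distances, the conversion is simple arithmetic. A minimal sketch, assuming a speed of sound of 343 m/s and a purely hypothetical 2 kHz crossover (the actual xo frequency is not stated in this post):

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed value, roughly room temperature air


def cc_spacing_m(wavelengths: float, xo_hz: float) -> float:
    """Centre-to-centre spacing in metres for a spacing given in wavelengths at xo."""
    return wavelengths * SPEED_OF_SOUND_M_S / xo_hz


XO_HZ = 2000.0  # hypothetical crossover frequency, not taken from the thread
for wl in (0.0, 0.9, 1.2, 1.4):
    print(f"{wl:.1f} wl at {XO_HZ:.0f} Hz -> {cc_spacing_m(wl, XO_HZ) * 1000:.0f} mm")
```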

Attachments:

1. VituixCAD sixpack showing phases on the top right, the phase tracking is best around xo on the coincident case and gets more off as c-c increases as expected.

2. Power, DI and ERDI showing that the 1.4wl spacing has closest resemblance to coincident configuration in terms of DI and ERDI, and the as close as possible c-c spacing gives greatest difference to the coincident. The power and on axis responses droop as the c-c increases but could be corrected with a filter because DI is about the same as with coincident case. Interestingly, DI and ERDI seem to be most alike on the c-c 1.4wl spacing.

3. Another look showing all the possible ER graphs. The non coincident driver spacings are clearly different from the coincident case, with 1.4wl approximating coincident best of the test set. The settings are for my living room: 240cm ceiling, ~3m listening distance, 90cm reference height, 45 toe in, against the front wall, away from the corner.
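To get a feel for how early the floor and ceiling reflections arrive in a room like this, here is a minimal image-source sketch. It assumes the ear is at the same 0.9 m height as the reference axis (an assumption, not stated above), specular reflection, no absorption and no speaker directivity, so the levels only reflect 1/r spreading. The function name is made up for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed


def reflection(distance_m, source_h_m, ear_h_m, ceiling_h_m=None):
    """Return (extra delay in ms, level in dB re direct sound).

    Floor bounce by default; ceiling bounce when ceiling_h_m is given.
    Uses a mirror image of the source and 1/r spreading only.
    """
    direct = math.hypot(distance_m, ear_h_m - source_h_m)
    if ceiling_h_m is None:
        vertical = source_h_m + ear_h_m  # image source below the floor
    else:
        vertical = 2 * ceiling_h_m - source_h_m - ear_h_m  # image above ceiling
    reflected = math.hypot(distance_m, vertical)
    delay_ms = (reflected - direct) / SPEED_OF_SOUND_M_S * 1000.0
    level_db = 20.0 * math.log10(direct / reflected)
    return delay_ms, level_db


floor = reflection(3.0, 0.9, 0.9)
ceiling = reflection(3.0, 0.9, 0.9, ceiling_h_m=2.4)
print(f"floor:   +{floor[0]:.2f} ms, {floor[1]:.1f} dB")
print(f"ceiling: +{ceiling[0]:.2f} ms, {ceiling[1]:.1f} dB")
```

In practice the off-axis response of the speaker toward each reflection point would be applied on top of these numbers, which is what the ER curves in the simulator account for.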

Anyway, it looks like the phase tracking requirement is related to the c-c spacing. If the ER sound signature is more important at the listening position than the phase as such, then I think it is not necessary to go with phase tracking. The observations might change if each of these c-c versions was optimized separately; now only the c-c distance is varied. The crossover is optimized for the 0.9wl situation, and it has the smoothest response (DI, listening window) because of this.

More tests in the sim would be interesting at some point, like what the responses look like if the phases were tracking at each c-c, or if the on-axis response were optimized, etc. And then test if there is a clear winner soundwise.

ps. I know ER depends on the listening situation (nearfield / farfield, acoustic treatment), so this is just a visualization with data I happened to have and is not meant to argue for or against phase aligned crossover design. I suspect phase tracking would be more important in a nearfield / low ER situation, if anywhere.

edit. added room config for reference.

edit. noticed listening distance is 2m when it should be 3m. Anyway, not much difference as demonstration.

A quickie: same c-c distances, but now the delay on the midrange is also adjusted for the c-c change. I forgot to mention in the previous post that the reference axis is the tweeter on-axis, and when adjusting c-c the mid driver virtually drops lower in height and thus gets farther away from the listening spot. This is seen in the phase graph: the tweeter phase line (dark green) stays the same and the mid phase (orange) changes when c-c changes. See previous post attachment 1.

This new set is delay compensated, so phases are aligned despite the c-c changes by adjusting the midrange driver delay with a DSP delay block. As you see, the phases track each other at all c-c spacings and the on-axis response now stays about the same. Listening window changes a bit, DI changes a bit, and the biggest change seems to be in the ERDI.

Reasoning from the data: as soon as the listening position changes, the phases don't track anymore because the relative distance from each driver to the ear changes. For this reason I think the phase match can be adjusted only for one listening axis (vertical) and distance. Phases lose tracking as soon as the distance from each driver to the ear changes, although the change is relatively small, depending on the listening distance, xo frequency and c-c.
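The size of that effect can be estimated from geometry alone. A minimal sketch, assuming the tweeter sits on the reference axis with the mid a distance c-c below it, and that the delay has been set so the drivers are aligned at one reference position; all numbers (2 kHz xo, 0.154 m c-c, 3 m distance) are hypothetical:

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed


def path_difference_m(distance_m: float, ear_height_m: float, cc_m: float) -> float:
    """Mid path minus tweeter path; tweeter at height 0, mid at -cc_m."""
    to_tweeter = math.hypot(distance_m, ear_height_m)
    to_mid = math.hypot(distance_m, ear_height_m + cc_m)
    return to_mid - to_tweeter


def residual_phase_deg(distance_m, ear_height_m, cc_m, xo_hz,
                       ref_distance_m, ref_height_m=0.0):
    """Phase offset at xo that remains after aligning at the reference position."""
    aligned = path_difference_m(ref_distance_m, ref_height_m, cc_m)
    actual = path_difference_m(distance_m, ear_height_m, cc_m)
    return 360.0 * xo_hz * (actual - aligned) / SPEED_OF_SOUND_M_S


CC_M, XO_HZ, REF_M = 0.154, 2000.0, 3.0  # hypothetical values
delay_us = path_difference_m(REF_M, 0.0, CC_M) / SPEED_OF_SOUND_M_S * 1e6
print(f"arrival time difference at reference position: {delay_us:.1f} us")
for height in (0.0, 0.1, 0.3):
    err = residual_phase_deg(REF_M, height, CC_M, XO_HZ, REF_M)
    print(f"ear {height:.1f} m above axis: {err:.1f} deg off at xo")
```

The first number is the relative delay the DSP block has to absorb for that one position; the loop shows how the alignment drifts once the ear leaves it.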

Well, for this reason I'd say phase tracking is not very important for its own sake. I'd say the effect that the relative phase of the drivers has on the frequency response in various directions is more important. When the frequency response is similar in all directions, then the "phase tracking" is as good as possible in all directions, right? And not just for a single listening spot. As long as the acoustic design is good enough that this "average" phase tracking (response to all directions) is smooth, the speaker is optimized for far field listening in a reflective environment, where ER contribute to the sound at the listening position.

This quality, the sound in various directions, is easiest to monitor with the DI and ERDI. Make them smooth and the far field sound around the listening spot should be as stable as possible. Phase match/tracking could be perfected for one listening spot, which could be an advantage in a near field listening situation.
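For anyone wanting to check smoothness outside the simulator: as far as I understand the CTA-2034 style curves, the sound power DI is the listening window minus the sound power, and ERDI is the listening window minus the early reflections curve, all in dB. A minimal sketch under that assumption; the curve values are made up and `max_step_db` is just one hypothetical way to put a number on "smooth":

```python
def directivity_index(listening_window_db, reference_db):
    """Point-by-point difference in dB (listening window minus reference curve)."""
    return [lw - ref for lw, ref in zip(listening_window_db, reference_db)]


def max_step_db(curve_db):
    """Largest jump between neighbouring frequency points, a crude smoothness figure."""
    return max(abs(b - a) for a, b in zip(curve_db, curve_db[1:]))


# Hypothetical data at a handful of frequency points, in dB SPL.
listening_window = [85.0, 85.2, 84.9, 85.1, 85.0]
early_reflections = [83.0, 82.8, 82.0, 81.9, 81.0]
sound_power = [82.0, 81.5, 80.2, 79.8, 78.5]

di = directivity_index(listening_window, sound_power)
erdi = directivity_index(listening_window, early_reflections)
print("DI  :", [round(v, 1) for v in di], "max step", round(max_step_db(di), 1))
print("ERDI:", [round(v, 1) for v in erdi], "max step", round(max_step_db(erdi), 1))
```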

Does the reasoning sound valid? I haven't thought or studied this too much before.

Sorry, the GIF here is not exactly the same as attachment 1 of the previous post. The system is the same, but now most ER lines are hidden and the frequency range is 200-20kHz instead of 20-20kHz.

Well, I have sometimes used the "phase tracking" wording in previous texts when I should have written "phase alignment", and vice versa... Hopefully the attached pictures help to understand which one I am talking about, sorry for the confusion.

By phase alignment I mean the situation where both lines are on top of each other. The phase angle is about the same for both drivers over a bandwidth.

By phase tracking I mean that the shape of the phase trace is about the same for both mid and tweeter, but the traces are not necessarily on top of each other because of the varying distance to the drivers.

Phase alignment changes when the relative distance from the ear to the drivers changes, but the tracking could stay the same as long as DI is relatively flat, in other words as long as the frequency response is similar in different directions, if I have understood it right?

How about constant directivity point source? Should sound as good as possible, if relative phase of various drivers has anything to do with perceived sound quality?

You show data in the horizontal plane while changing the c-to-c distance, which will have little to no effect on that. The c-to-c distance is causing changes in the vertical plane around the crossover frequency. Have you looked into that?

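AllenB wrote:

Current thinking suggests that there are other issues causing the flaws we hear with common speakers. Also, that lining up phase is not even always the best option.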

My thoughts as well...

When I do simulations of flat baffle (non waveguide) 2-way systems, I find that the combination of baffle width, woofer diameter, and crossover frequency can interact in ways which are either helpful, or unhelpful.

To optimize both the on-axis response and the power response, it is often necessary that there be a phase difference between the woofer and the tweeter... usually between 30 and 90 degrees. This allows the on-axis sound from the two drivers to sum flat. It also allows the horizontal off-axis sound, when combined with diffraction, to also sum flat.
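The on-axis part of that arithmetic is easy to illustrate. A minimal sketch that sums two sources as phasors and reports the level relative to one driver alone; equal levels are an assumption made here for simplicity, and diffraction and off-axis behaviour are not modelled at all:

```python
import cmath
import math


def summed_level_db(phase_diff_deg: float, level_a: float = 1.0, level_b: float = 1.0) -> float:
    """Level of the sum of two sources in dB re a source of amplitude 1."""
    total = level_a + level_b * cmath.exp(1j * math.radians(phase_diff_deg))
    return 20.0 * math.log10(abs(total))


for phi in (0, 30, 60, 90):
    print(f"{phi:3d} deg apart: {summed_level_db(phi):+.2f} dB re one driver")
```

With equal levels, fully in-phase drivers add about 6 dB and drivers 90 degrees apart add about 3 dB, so the filter targets have to be chosen with the intended phase difference in mind for the sum to come out flat.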

Nice sims, tmuikku.

j.

Hi CharlieLaub,

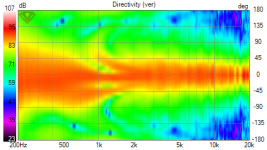

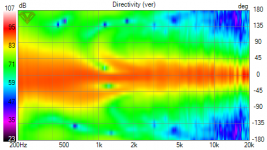

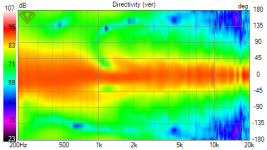

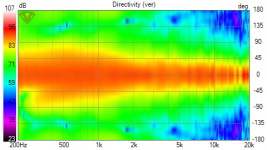

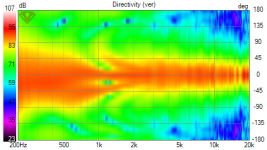

yes, all the posted graphs include the effect of the full vertical (and horizontal) response. It is full 360 degree horizontal and vertical data, although taken with 15 degree resolution. I left the polar map on horizontal in the first post and repeated the same in the second.

The difference in the graphs posted is mainly due to the vertical offset of the drivers, which is the standard arrangement for most speakers. Vertical polar plots are attached if you want to check what it looks like.

Profiguy mentioned the effect of phase tracking on the sound stage and I started thinking it through: what implication of phase might make or break "imaging", since the phase itself is certainly a mixed bag at the listening position due to reflections. I assumed he was talking about far field listening.

I tried to test why phase tracking would matter in a far field situation with early reflections, and why it would affect the perceived sound. If phase itself was responsible for imaging, there would be no imaging in a reflective environment. I used the varying c-c test as an example here since I posted a similar thing to another thread recently, but I could just as well have used a delay or rotation or something else that varies the distance of the drivers to the simulated listening point.

One interesting observation from the example is that the phase tracking crossover (roughly LR4) seems to yield a smooth off-axis response. Another is that the phase alignment is good for only one location, since the transducers are not coincident and the distance to each changes when the ear position moves vertically or closer / further.
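The LR4 part fits the textbook behaviour of ideal Linkwitz-Riley 4th-order targets: each section is a squared 2nd-order Butterworth, the low-pass and high-pass are a full 360 degrees apart (so effectively in phase) at every frequency, and their sum is flat in magnitude. A minimal sketch with ideal transfer functions only, with no driver response or c-c offset included; the 2 kHz corner is hypothetical:

```python
import cmath
import math


def lr4_lowpass(f_hz: float, fc_hz: float) -> complex:
    """Ideal LR4 low-pass: squared 2nd-order Butterworth low-pass."""
    s = 1j * f_hz / fc_hz
    section = 1.0 / (s * s + math.sqrt(2.0) * s + 1.0)
    return section * section


def lr4_highpass(f_hz: float, fc_hz: float) -> complex:
    """Ideal LR4 high-pass: squared 2nd-order Butterworth high-pass."""
    s = 1j * f_hz / fc_hz
    section = (s * s) / (s * s + math.sqrt(2.0) * s + 1.0)
    return section * section


FC_HZ = 2000.0  # hypothetical crossover frequency
for f in (500.0, 1000.0, 2000.0, 4000.0, 8000.0):
    lp, hp = lr4_lowpass(f, FC_HZ), lr4_highpass(f, FC_HZ)
    sum_db = 20.0 * math.log10(abs(lp + hp))
    phase_gap = math.degrees(cmath.phase(hp / lp))  # wrapped, so 360 shows as 0
    print(f"{f:6.0f} Hz: sum {sum_db:+.2f} dB, HP-LP phase gap {phase_gap:+.1f} deg")
```

Real drivers add their own roll-offs and path length differences on top of this, which is why the acoustic slopes, not the electrical ones, have to land on the LR4 shape for the tracking to hold.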

My conclusion is that if phase tracking affects imaging in far field listening, it has to be due to ER, because the phase info is kind of lost at the listening position due to reflections. When the sound of the very early reflections is the same as the direct sound, then the imaging should be as stable as possible in this situation, right? If there ever was good imaging, it has to be due to good sounding ER, meaning a good phase match at the various (important) off-axis angles. And of course on-axis, with the tracking phase adjusted for the listening spot.

Moreover, the ER can be minimized with speaker positioning, toe in, directivity, a nearfield situation and room acoustic treatment. If we make the assumption that ER are minimal and don't matter, then in this case the phase should track as well. Verdict: yes, phase tracking matters very much and would be a good basis for speaker design. What do you think?

Non tracking phase can work fine, and would be very convenient with a passive XO I think, in a situation where micromanaging delay and slopes is challenging. Especially in a listening situation where the ER don't matter much.

edit. Attachments are vertical polar plots from my first set, where only c-c is varied. Phase alignment changes due to increasing distance to listening spot.

1. c-c 0 wl

2. c-c 0.9 wl

3. c-c 1.2 wl

4. c-c 1.4 wl

5. c-c 1.4 wl, with phases aligned by adjusting the mid woofer delay.

That's if phase matters in this way.

You can't call a synergy a point source if you want to identify differences. If it has higher order modes, if it has diffraction, if it has variations in polars.. then it is not a point source.

Hi, I thought about synergy / MEH but didn't mention it. By point source I mean a real world point source, not an ideal one, which is impossible in our physical universe. I don't think there are any exceptional point sources, which is why I was interested in questioning how close a design with non coincident drivers can get to point source quality, because such designs work pretty well without diffraction etc. Their only shortcoming is not being coincident, which shows mainly as the relative phase difference in various directions.

For coincidence it would be enough to have 1/4wl c-c, but this is rarely possible with all the other constraints (no diffraction, no HOMs, constant directivity). It surely looks like the second best option, from the ER point of view, is increased driver spacing, if the implications of the phase difference matter through the ER.
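To see why 1/4wl is so hard to reach, it helps to turn the rule around and ask how low the crossover would have to be for a given spacing. A minimal sketch, assuming 343 m/s for the speed of sound; the spacings in the loop are hypothetical:

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed


def max_xo_for_quarter_wavelength_hz(cc_m: float) -> float:
    """Highest crossover frequency at which cc_m is still within 1/4 wavelength."""
    if cc_m <= 0:
        raise ValueError("c-c spacing must be positive")
    return SPEED_OF_SOUND_M_S / (4.0 * cc_m)


for cc_mm in (50, 100, 150):  # hypothetical centre-to-centre spacings
    f_max = max_xo_for_quarter_wavelength_hz(cc_mm / 1000.0)
    print(f"c-c {cc_mm} mm -> xo at or below about {f_max:.0f} Hz")
```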

Sorry for my confusing sentences, I'm not sure if anyone can follow?

Thanks

Yes, most of the time practical issues dictate certain solutions. There is this idea in the back of my head that when the acoustic design is right, the xo snaps into place, as does the sound. The purpose of the xo is merely to make the acoustic design work; all the real problems are in the acoustic domain, in the transducers and in the enclosure/structure, like you mentioned as well. All my sims kind of try to look at various issues and phenomena through this lens: what should the acoustic design be for things to work out better. VituixCAD lends itself to this very well, it is just a matter of figuring out what to tweak and observing what happens if this was that, and so on. And building a million prototypes to verify it is not just imagination and false assumptions.
