Chris Daly, from the (outdated) webpage you linked to, it seems a new standard emerged in 1980:

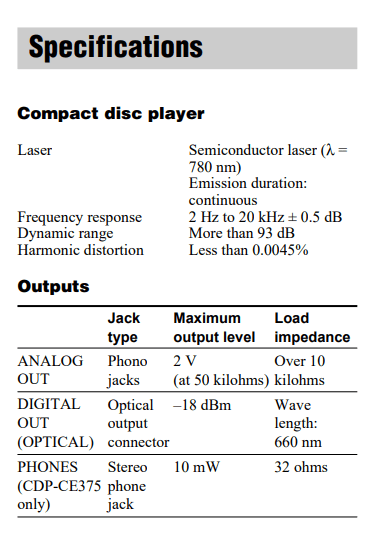

The most common nominal level for professional equipment is +4 dBu (by convention, decibel values are written with an explicit sign symbol). For consumer equipment, a convention exists of −10 dBV, originally intended to reduce manufacturing costs.[3] However, consumer equipment may not necessarily follow that convention. For example, a standard CD-player output voltage has emerged of around 2 VRMS, equivalent to +6 dBV. Such higher output levels allow the CD player to bypass a preamp stage.[4]
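For anyone wanting to check those figures, here is a minimal Python sketch of the conversions. The function names are my own, and it assumes the usual reference voltages (0 dBV = 1 V rms, 0 dBu = sqrt(0.6), roughly 0.7746 V rms).

```python
import math

# Reference voltages (standard definitions, not taken from the thread).
DBV_REF_VRMS = 1.0             # 0 dBV = 1 V rms
DBU_REF_VRMS = math.sqrt(0.6)  # 0 dBu = ~0.7746 V rms


def dbv_to_vrms(dbv):
    """Convert a level in dBV to volts rms."""
    return DBV_REF_VRMS * 10 ** (dbv / 20)


def dbu_to_vrms(dbu):
    """Convert a level in dBu to volts rms."""
    return DBU_REF_VRMS * 10 ** (dbu / 20)


def vrms_to_dbv(vrms):
    """Convert volts rms to a level in dBV."""
    return 20 * math.log10(vrms / DBV_REF_VRMS)


print(f"+4 dBu  = {dbu_to_vrms(4):.3f} V rms")    # about 1.228 V rms
print(f"-10 dBV = {dbv_to_vrms(-10):.3f} V rms")  # about 0.316 V rms
print(f"2 V rms = {vrms_to_dbv(2):+.2f} dBV")     # about +6.02 dBV
```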

Of course I was wrong with 2Vrms nominal. Anyway, there is no need to insert an extra device with sources produced after 1980, but for some reason building completely useless preamps is still a very popular habit, and the high-gain tube versions especially generate many issues and forum threads. The term "preatt" is short for preattenuator; I made it up as I wrote it. I think it covers the situation well, as the inserted device is not able to pass the source's signal through unaltered at full volume. A 10 kOhm potentiometer (the same as used with the preatt) without any electronics will not alter the signal with such distortion. I try to keep things as simple as can be.

A good read:

https://www.diyaudio.com/community/threads/what-is-gain-structure.186018/


There is no argument over the capability to produce 2 V at 0 dBFS with test CDs. However, the CD medium we enjoy each day disallows such levels, so every CD you buy exhibits no higher than 0.310 V RMS. This is to leave the CD capable of dynamics when played in home audio systems. The +4 dBu figure is used by recording studios, where a higher level is obviously needed.

There are entire forums perpetuating the myth that CD players in everyday use already output 2 V, so there is no reason for DIY Audio not to understand and correct them: the medium we play controls the highest level, which is 0.310 V RMS.

Here it is seen in light blue, with peak in dark blue. The link was informative thank you.


Crap. This is just like when I finally got it down that Pluto was a planet... Really, I had heard the CDP output to be 2 V, but now understand that it never needs to rise to that level. Are other source components even the same? No, not in my experience. A standard output to at least shoot for would be great, so that we don't get surprised when switching sources, for one thing.

Most source components play media that complies with consumer line level.

Worse than Pluto, for some, is now discovering that power amplifiers, because their sensitivity differs from consumer line level, fall well short of their stated output when used with passive attenuators for lowest distortion. If you try to raise the voltage above consumer line level in between, you always add distortion.

The best results (amazing) occur when passive resistances are arranged to place no adverse loading on the source component's output impedance, and the fixed resistance at your power amplifier is kept at 20k or higher.
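To put a rough number on "adverse loading", here is a small sketch that treats the source's output impedance and the load resistance as a plain resistive voltage divider. The 100 ohm and 1 kohm source impedances are hypothetical example values, not taken from any datasheet, and the model only shows level loss; it says nothing about distortion.

```python
import math


def loading_loss_db(source_z_ohms, load_ohms):
    """Level change (dB) when a source with the given output impedance
    drives a purely resistive load. 0 dB means no loss."""
    ratio = load_ohms / (source_z_ohms + load_ohms)
    return 20 * math.log10(ratio)


# Hypothetical source output impedances; load values of 10k and 20k
# are the figures mentioned in this thread.
for source_z in (100.0, 1000.0):
    for load in (10_000.0, 20_000.0):
        loss = loading_loss_db(source_z, load)
        print(f"Zout {source_z:6.0f} ohm into {load:6.0f} ohm: {loss:+.2f} dB")
```

In this simple model, the higher the load resistance relative to the source impedance, the closer the loss gets to 0 dB.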

The key when using passives is to carefully choose amplifiers whose sensitivity is close to the consumer line level standard being played. 0.5 V RMS is a sensible sensitivity for playing all media that is 0.310 V RMS, but if you can get closer still, like the 0.375 V RMS achieved with the Quad 306, then better still.

A very good resource for seeing specifications of input sensitivity for amplifiers is here: https://www.hifiengine.com/manual-library.shtml

We need, as a collective, to begin informing these guys, who IMO are entirely lost and doing their utmost to ignore consumer line level standards:

https://www.audiosciencereview.com/forum/index.php


Please check the datasheets of DAC chips, which proudly state that they are specified for 2Vrms output. Also check currently produced DACs. Calculate for the worst case of 2Vrms, as most do, and things will always work out OK, unlike calculating with average levels. Many will remember output stages with +/- 5V malfunctioning.

The trend is to go higher than 2V.


Whilst it's perhaps good to have some available headroom, it's excessive already without the trend. From experience on forums, many will swear black and blue to deny consumer line level standards and replace them at the drop of a hat with 0 dBFS and more. It is a grind and an uphill battle convincing people of the actual lower level that the media being played produces.

All we need to know is that when a CD gets played in a CD player, the output is always 6.45 times less than the player's capability, which is plenty in domestic audio systems. Saying the output is the full-scale output capability of the player is where things have gone wildly askew, through not understanding that the media being played restricts the player's output.

Are you saying the program peaks are output at 310mV rms?

Are you saying that CD players reduce the output signal level to 0dBFS = 310mV rms?

From ripping CDs and then looking at the resultant waveforms in various audio editors, I've seen that most tracks have peaks reaching to within 1dB of 0dBFS. Many go all the way to 0dBFS, repeatedly.

I have an Allo Boss DAC that is switchable between max output = 2V rms or max output = 1V rms. My understanding is that most DACs do output 0dBFS = 2V rms. Tests of DACs on Audio Science Review, etc. appear to confirm that assumption.

If the maximum level from the DAC is 2.83V peak (2V rms) at 0dBFS, those -1dBFS peak signals I saw on ripped CD tracks will be about 2.5V peak (or 1.78V rms).
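Here is that arithmetic as a quick sketch. It assumes, as the post does, that 0dBFS corresponds to a 2V rms sine wave at the analog output; the function names are just for illustration.

```python
import math

FULL_SCALE_VRMS = 2.0  # assumed analog output for a 0 dBFS sine wave


def dbfs_to_vrms(dbfs, full_scale_vrms=FULL_SCALE_VRMS):
    """Analog rms voltage for a sine wave at the given dBFS level."""
    return full_scale_vrms * 10 ** (dbfs / 20)


def sine_rms_to_peak(vrms):
    """Peak voltage of a sine wave with the given rms voltage."""
    return vrms * math.sqrt(2)


for level_dbfs in (0.0, -1.0):
    vrms = dbfs_to_vrms(level_dbfs)
    print(f"{level_dbfs:+.0f} dBFS: {vrms:.2f} V rms, "
          f"{sine_rms_to_peak(vrms):.2f} V peak")
```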

I think it best if the power amplifier can amplify that peak without clipping, even with the volume control turned up very high.

Yes the nominal (average) program level may be 0.31V rms, but music can have signal peaks +15dB or more higher than the average level.

+15dB = 5.6x, so 0.31*5.6 = 1.736V rms or 2.455V peak, so there we are again with over 2V peak output.

If your amp has an input sensitivity of 1V rms (1.414V peak) and the nominal signal level is 0.31V rms with +15dB peaks, you'll need an attenuator to turn down the signal level so those 1.74V rms peaks don't clip the amp.

Apply the 8x gain that's likely to come from a tube preamp and that 0.31V rms nominal level will now potentially become about 2.5V rms. Those same +15dB program peaks will now be over 10V rms. Now you'll be turning the volume control way, way, way down.
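The same chain can be sketched in a few lines. The 0.31V rms nominal level, +15dB peaks, 1V rms amp sensitivity and 8x preamp gain are the example figures from this post; the function is my own illustration and ignores the volume control.

```python
def db_to_ratio(db):
    """Convert a level difference in dB to a voltage ratio."""
    return 10 ** (db / 20)


def levels_after_gain(nominal_vrms, peak_headroom_db, voltage_gain):
    """Return (nominal, peak) rms levels after a stage with the given gain."""
    nominal_out = nominal_vrms * voltage_gain
    peak_out = nominal_out * db_to_ratio(peak_headroom_db)
    return nominal_out, peak_out


AMP_SENSITIVITY_VRMS = 1.0  # input level at which the example amp clips

for label, gain in (("no preamp", 1.0), ("8x preamp", 8.0)):
    nominal_out, peak_out = levels_after_gain(0.31, 15.0, gain)
    clips = peak_out > AMP_SENSITIVITY_VRMS
    print(f"{label}: nominal {nominal_out:.2f} V rms, "
          f"peaks {peak_out:.2f} V rms, clips at full volume: {clips}")
```

Using the exact +15dB ratio (about 5.62x) rather than the rounded 5.6x gives peaks a touch higher than the figures quoted above, but the conclusion is the same.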

2x (+6dB) or 3x (+9.5dB) gain would be useful for playing back DSD sources that are -6dB down from the 2V rms at 0dBFS level (or 310mV nominal, if you prefer). Other than that, I can't see why a preamp with gain is necessary.


Correct and well put. Thanks.

This did not exactly help either:

https://en.m.wikipedia.org/wiki/Loudness_war

Californication was indeed unbearable on many DACs. Good music but recorded so that one started to dislike it straight away.


Neither, in answer to your first two questions. The peak level, not RMS, is stated here: https://en.wikipedia.org/wiki/Line_level

Playing commercial media has the result of always complying with consumer line level.

Your post tries to change that in numerous ways, by introducing further gain, beyond what the media is providing. I agree absolutely that there is no need for gain. With passives, the effort is to have the resistance place no adverse loading on the source impedance, and likewise, when done properly, there is no need for gain when playing media with consumer line level standards.

It does, though, rely on the sensitivity of power amplifiers matching those same standards.


Are you missing the point that a nominal signal level is a somewhat arbitrarily chosen average, that does not explicitly state the peak levels that deviate from that nominal level?


Example:

A mastering engineer is told by the client to make the recording 'sound louder'. That mastering engineer uses a 'sonic maximizer' or mastering limiter to reduce the amplitude of peaks in the program, such as drum thwacks and loud bass drum booms. Let's say those drum thwacks were +10dB above the average signal level. The mastering engineer reduces the level of those drum thwacks by -7dB, bringing them down to +3dB above the average signal level.

Once those peaks are reduced -7dB in level, the mastering engineer can now increase the signal level of the entire program by +7dB, bringing the average signal level up by a little more than 2x. The dynamic range of the recording has been reduced. Has the "nominal signal level" of the program material been changed? You bet it has!
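As a rough sketch of that example in numbers: the helper below is hypothetical and assumes an idealized limiter that only touches the peaks, followed by make-up gain equal to the peak reduction.

```python
def db_to_ratio(db):
    """Convert a level difference in dB to a voltage ratio."""
    return 10 ** (db / 20)


def limit_and_make_up(peak_above_avg_db, peak_reduction_db):
    """Return (new peak-to-average in dB, average level increase as a ratio).

    Idealized: the limiter leaves the average level untouched, and the
    make-up gain applied afterwards equals the peak reduction.
    """
    new_peak_above_avg_db = peak_above_avg_db - peak_reduction_db
    makeup_gain_db = peak_reduction_db
    return new_peak_above_avg_db, db_to_ratio(makeup_gain_db)


new_peak_db, avg_gain = limit_and_make_up(10.0, 7.0)
print(f"peaks now +{new_peak_db:.0f} dB above average, "
      f"average level raised {avg_gain:.2f}x")
```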

Is there a reason why the nominal level (0.31V rms) should drive the amp to clipping?

"Your post tries to change that in numerous ways, by introducing further gain, beyond what the media is providing,"

I'm afraid I don't understand that sentence. If you (or anyone) could clarify? Thanks.

Typical amplifier sensitivities range from about 0.2V rms to 1.5V rms to full power (clipping).

See also: https://en.wikipedia.org/wiki/Nominal_level


Re "Is there a reason why": you would choose to remain slightly above 0.31 V RMS (the Quad 306 is an excellent example at 0.375 V RMS sensitivity) so as to avoid any chance of clipping with the maximum consumer line level input signal. But the main reason to use a sensitivity slightly above 0.31 V RMS is to avoid introducing any voltage gain, which causes distortion, between your source component and power amp when used with passive attenuation.

From post #94: "Are you saying that CD players reduce the output signal level to 0dBFS = 310mV rms?" The latter figure was corrected at post #87 to the 0.310 V RMS given at post #83. These are examples of why I say "Your post tries to change."

In the one sentence you are describing two entirely different parameters, but trying to suggest they are equal with the = symbol. 0 dBFS is the player's maximum output capability, which is never approached, vastly different from the maximum signal level embedded in the media being played, which is 0.31 V RMS: https://en.wikipedia.org/wiki/Line_level

Re typical amplifier sensitivities: 0.2 V RMS appears as though it would certainly introduce distortion, whereas 1.5 V RMS just wastes available output power. If you use the formula (stated amplifier sensitivity) / (0.31 V RMS consumer line level), an amplifier with 1.5 V RMS sensitivity wastes 4.84 times its capability. This applies where the choice is made to use passive attenuation. The biggest change and benefit comes from maximising the passive resistance, so as never to place adverse loading on the source output impedance. It should be obvious that this cannot be achieved with a conventional potentiometer, either linear or log.
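The formula above is easy to sketch. This takes the post's 0.31 V RMS figure at face value as the largest input signal, and additionally assumes the amplifier is linear and reaches its rated power exactly at its stated sensitivity; the function names are my own.

```python
CONSUMER_LINE_VRMS = 0.31  # figure used in this post


def sensitivity_ratio(amp_sensitivity_vrms, line_vrms=CONSUMER_LINE_VRMS):
    """Stated amplifier sensitivity divided by the line level."""
    return amp_sensitivity_vrms / line_vrms


def fraction_of_rated_power(input_vrms, amp_sensitivity_vrms):
    """Fraction of rated power reached, assuming a linear amplifier that
    hits rated power at its stated sensitivity (power ~ voltage squared)."""
    return min(1.0, (input_vrms / amp_sensitivity_vrms) ** 2)


for name, sens in (("Quad 306", 0.375), ("0.5 V amp", 0.5), ("1.5 V amp", 1.5)):
    ratio = sensitivity_ratio(sens)
    frac = fraction_of_rated_power(CONSUMER_LINE_VRMS, sens)
    print(f"{name}: ratio {ratio:.2f}, {frac * 100:.0f}% of rated power")
```

For the 1.5 V RMS case the ratio comes out at roughly 4.8, which under these assumptions is only a few percent of rated power with a 0.31 V RMS input.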


Well, now I got it right. Let someone qualified do the math. Simply put, I got a Manley Stingray in today that uses a passive pre input. All the eyebrow raising before, at what I thought was distortion, actually was distortion. Now there is no hum, and no eyebrow-raising artifacts that make me wonder if something is wrong with the system. Fact is, there was. I won't go into it any more than to say that I was making good stuff that didn't match, in one way or another, with other parts of the system. This went on far too long. God, I feel like I missed a lot, with one little problem after another with the sound of my system. I will say one negative thing though. This thing is a pretty good space heater, and summer is blooming soon. Fortunately, my listening sessions are pretty short, just like my attention span.


I'm just going to leave this here:

Sony CDP-CE375 SACD changer:

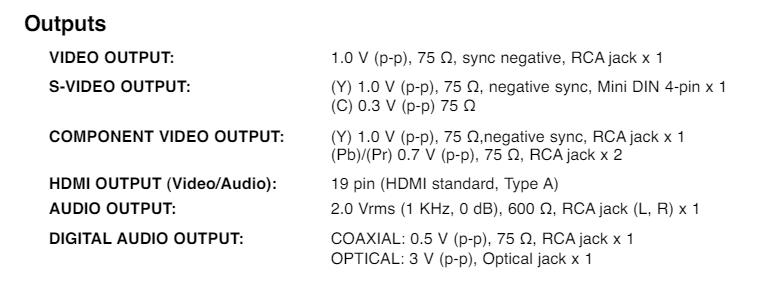

JVC DVD player:


Some CD players state their audio output as "nominal output 200mV RMS" (I know I have a DVD player manual that shows the output level that way, but I can't find it at the moment). If you think about it, a nominal level of 200mV RMS (exactly equal to 0.2V rms) allows for a 20dB peak from that nominal level to stay within the limit of 2V rms analog output when playing a 0dBFS PCM signal level from disc (or digital file). In other words, a 'nominal' level of 200mV rms is the same as saying "average program level allowing for +20dB peaks in the program material". One could argue that music on commercial recordings almost never contains audio signal peaks more than +15dB above the average signal level. If you look at it that way, a nominal signal level of 200mV rms allowing for +20dB peaks is the same exact thing as a 350mV rms nominal level allowing for +15dB peaks. They're the same thing!
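A quick sketch of why those two descriptions land in the same place: a nominal level plus its peak allowance gives the maximum level. The function names are just for illustration.

```python
def db_to_ratio(db):
    """Convert a level difference in dB to a voltage ratio."""
    return 10 ** (db / 20)


def max_level_vrms(nominal_vrms, headroom_db):
    """Maximum rms level implied by a nominal level plus peak headroom."""
    return nominal_vrms * db_to_ratio(headroom_db)


def nominal_for_max(max_vrms, headroom_db):
    """Nominal rms level that leaves the given headroom below a maximum."""
    return max_vrms / db_to_ratio(headroom_db)


print(f"200 mV + 20 dB -> {max_level_vrms(0.2, 20):.2f} V rms")
print(f"350 mV + 15 dB -> {max_level_vrms(0.35, 15):.2f} V rms")
print(f"2 V max with 15 dB headroom -> "
      f"{nominal_for_max(2.0, 15) * 1000:.0f} mV nominal")
```

Both combinations come out at about 2V rms maximum, give or take rounding of the 350mV figure.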

I'd rather deal with absolute values.

Nominal values are... nominal.

From Merriam-Webster

3b

: of, being, or relating to a designated or theoretical size that may vary from the actual : APPROXIMATE

the pipe's nominal size

Professional audio engineers are familiar with the concept of 'gain staging', where you have to be careful to tailor the maximum/minimum signal levels to be handled by each stage of your signal chain so that each stage gets enough signal to keep the noise floor unnoticeable, but at the same time not slamming one stage with too much signal and causing that stage to overload/clip/distort.

Thus it is with a domestic music playback system.

The gain structure of a contemporary home stereo starts with the signal source, which has been standardized to be a PCM digital signal encoded at 0dBFS resulting in a 2V rms analog signal being sent out of the player. Knowing that, you can use that knowledge to figure how much or how little gain each of your preamps, tone controls, signal processors and/or amplifiers should provide.

If the amplifier clips with 0.5V rms input:

- max signal level from CD player = 2V rms (highest possible level, not often realized, but is sometimes)

- Volume control

- Amp clips at 0.5V rms in

If you want your amp to be able to play that 2V rms signal peak right at its clipping level, then you'll need to attenuate that 2V rms signal down to 0.5V rms, or by -12dB. The volume control knob will likely be at about 12 o'clock or maybe 1 o'clock for that.

If your amp clips at 1V rms input, then you'll need only -6dB attenuation to accommodate that 2V rms signal peak. That will put the volume knob up at about 3 o'clock, or nearly all the way up. Many people think that looks wrong, but really, it's not. It's fine.
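The attenuation figures above can be checked with a short sketch. It assumes the 2V rms maximum source level used throughout this post; the knob positions are not modelled, since they depend on the taper of the volume control.

```python
import math

SOURCE_MAX_VRMS = 2.0  # assumed maximum output of the player at 0dBFS


def required_attenuation_db(source_max_vrms, amp_clip_vrms):
    """Attenuation (dB, zero or negative) that brings the source's maximum
    level down to the input level at which the amp clips."""
    return min(0.0, 20 * math.log10(amp_clip_vrms / source_max_vrms))


for amp_clip in (0.25, 0.5, 1.0):
    atten = required_attenuation_db(SOURCE_MAX_VRMS, amp_clip)
    print(f"amp clips at {amp_clip:.2f} V rms in: {atten:.1f} dB needed")
```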

Fortunately, we don't normally listen with our systems turned up so that the max signal level clips our amplifiers.

As mentioned previously, there are lots of amplifiers with input sensitivities ranging from 250mV all the way up to over 1V. All will work, and they do in the real world, all the time.

It's worth noting that in the old analog days, FM tuners and RIAA phono preamps often put out no more than about 250mV to 500mV rms maximum signal peaks. Back in those days, amplifiers were made much more sensitive, often so that they'd clip with only 200mV peak signal at their input. This resulted in amplifiers that were often noisy, amplifying their own self noise, hum from nearby audio componentry, radio frequency interference, and all that sort of thing.

"0dbFS is the players maximum output capability, which is never approached, vastly different to the maximum signal level embedded in the media being played which is 0.31V RMS https://en.wikipedia.org/wiki/Line_level"

0dBFS is not the player's maximum output capability. It is the maximum signal level able to be stored in PCM digital format.

The maximum signal level from the player is an analog signal voltage. That has been standardized to a 0dBFS PCM signal resulting in a 2V rms analog signal voltage output from the player.

I have no idea what you mean by a "maximum signal level embedded in the media". I believe what I just wrote describes what that is, and it is not a 'nominal' level. It is an absolute level.

Again:

0dBFS PCM signal = 2V rms analog signal at output of player.

One can then translate that to whatever nominal level they like.
