Is that an input on a PC board? That could explain the "crud"...

If the signal being handled is always digital, then no crud like that should ever find its way into the system, certainly not mains-related rectification crud. To get that into the system you'd need an ADC somewhere, surely?

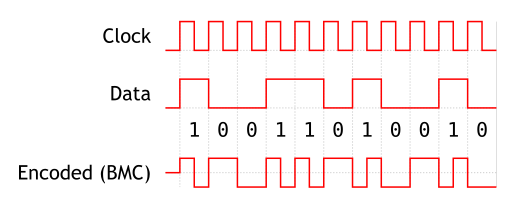

Maybe it doesn't really miss the data; rather, the clock is "shifted" too much to stay in lock. The S/PDIF encoding is biphase mark code. Shifting the clock by one will produce both effects on the extracted data, because what is counted are the transitions with respect to that clock.

This time around we've gained a bit. Surely if the S/PDIF receiver were to abruptly correct for a phase difference, it would always leave the bit it did it on as a zero rather than a 1?
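For anyone unfamiliar with biphase mark code, here is a minimal Python sketch of the idea, not a model of how a real S/PDIF receiver is built. Each bit occupies two half-cells, every cell starts with a transition, and a 1 adds a second transition mid-cell. The function names are made up for illustration, and the S/PDIF preambles (which deliberately break the coding rule so the receiver can find frame boundaries) are left out. Decoding only asks whether the two halves of a cell differ, so line polarity doesn't matter. But in this idealized model, if the decoder's alignment slips by one half-cell, every pair it reads straddles a cell boundary, where there is always a transition, so every bit reads as a 1.

```python
def bmc_encode(bits, level=0):
    """Biphase mark code: two half-cells per bit, returned as a list of 0/1 levels."""
    halves = []
    for bit in bits:
        level ^= 1              # every bit cell starts with a transition
        halves.append(level)
        if bit:
            level ^= 1          # a 1 adds an extra transition mid-cell
        halves.append(level)
    return halves


def bmc_decode(halves, offset=0):
    """Read pairs of half-cells starting at `offset`: differing halves mean 1."""
    return [
        1 if halves[i] != halves[i + 1] else 0
        for i in range(offset, len(halves) - 1, 2)
    ]


data = [1, 0, 1, 1, 0, 0, 1, 0]
line = bmc_encode(data)
print(bmc_decode(line))                       # [1, 0, 1, 1, 0, 0, 1, 0]
print(bmc_decode(bmc_encode(data, level=1)))  # same bits: polarity doesn't matter
print(bmc_decode(line, offset=1))             # [1, 1, 1, 1, 1, 1, 1]
```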

The analog signal superimposed on the digital one modulates the timing (jitter), because of the way input circuits work. Massive jitter can account for poor recovery.

I think you might be missing that the left byte is actually the least significant one, so the 16-bit values are actually 0x72F6 and 0x72F7. A change in the 9th bit would certainly show up as extreme distortion on the FFT.
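To see the byte-order point concretely, here is a small Python sketch using the standard `struct` module. The byte pairs are reconstructed from the two values quoted above, written left byte first as a hex dump would list them. WAV PCM is little-endian, so reading them with `<h` gives values one LSB apart, whereas reading the same bytes big-endian (`>h`) makes the identical change look like a jump in the 9th bit (0x100).

```python
import struct

# Two consecutive samples as a hex dump would show them (left byte first).
# Reconstructed from the values quoted above, for illustration only.
sample_a = bytes([0xF6, 0x72])
sample_b = bytes([0xF7, 0x72])


def read_le(raw: bytes) -> int:
    """Signed 16-bit, little-endian (least significant byte first)."""
    return struct.unpack("<h", raw)[0]


def read_be(raw: bytes) -> int:
    """Signed 16-bit, big-endian (most significant byte first)."""
    return struct.unpack(">h", raw)[0]


a_le, b_le = read_le(sample_a), read_le(sample_b)
a_be, b_be = read_be(sample_a), read_be(sample_b)
print(hex(a_le), hex(b_le), b_le - a_le)          # 0x72f6 0x72f7 1
print(b_be - a_be, hex((a_be ^ b_be) & 0xFFFF))   # 256 0x100
```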

That would help to explain it, and it would imply that when the S/PDIF receiver makes a clock alteration, it always does it on the LSB. That would also be sensible.

Yes, the rest of the bits will self-correct because of the modulation type. Only the first one is screwed.

OK, I lost you there. Can you please try explaining it to me again in plain English?

I don't think these are errors caused by the S/PDIF receiver. If they were, they'd be more randomly distributed than this; they wouldn't affect only the LSB. This looks like low-frequency dither is being applied to the output. It could be some kind of watermarking system for copy protection.

It's a weird one for sure.

I now suspect it's dither too. And it's probably Windows that's messing up.

Here's why:

When I just watch any input (line, S/PDIF, mic...) of any sound card connected to this laptop, either the built-in sound card or a USB sound card, even with no signal applied, I see that 7 Hz harmonic very clearly. This happens only when recording/monitoring at 16 bits. When I switch to 24 bits it disappears. So I would guess it's a 1-bit dither being applied at a very low repetition rate, most likely only when recording below 24 bits. The reason I say Windows (it's Win7) is that this happens regardless of the sound card or the type of input. Oh, and it's not the recording program either: I tried a couple of different recording programs and it happened with both. Unless all sound card chips/codecs are hardwired to dither even when the signal is digital. Or maybe it's about copy protection.
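To put a rough number on the "1-bit dither at a very low repetition rate" guess, here is a Python sketch. It assumes (a hypothesis, not something established in the thread) that the artefact is the LSB of a 16-bit stream toggling between 0 and 1 as a 7 Hz square wave on an otherwise silent input, and it measures the 7 Hz component with the Goertzel algorithm, which computes a single DFT bin. The helper names are made up. At 44.1 kHz one period of 7 Hz is exactly 6300 samples, so a one-second block holds a whole number of cycles and no window is needed.

```python
import math

FS = 44100          # sample rate, Hz
FULL_SCALE = 32768  # 16-bit full scale


def lsb_square_wave(freq, fs, n):
    """Hypothetical artefact: the LSB toggling 0/1 as a square wave at `freq` Hz."""
    period = fs / freq
    return [1 if (i % period) < period / 2 else 0 for i in range(n)]


def goertzel_level_dbfs(samples, freq, fs, full_scale=FULL_SCALE):
    """Amplitude of the `freq` component in dB relative to full scale (Goertzel)."""
    n = len(samples)
    k = round(freq * n / fs)                  # nearest DFT bin
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in samples:
        s1, s2 = x + coeff * s1 - s2, s1      # second-order recursion
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2   # |X[k]|^2
    amplitude = 2 * math.sqrt(power) / n          # peak amplitude of that sinusoid
    return 20 * math.log10(amplitude / full_scale)


samples = lsb_square_wave(7, FS, FS)          # one second of the hypothetical artefact
print(round(goertzel_level_dbfs(samples, 7, FS), 1))   # roughly -94 dBFS
```

A 0/1 square wave's fundamental has a peak of about 4/π × 0.5 LSB, hence a level in the mid -90s dBFS; being a square wave, it would also put odd harmonics at 21 Hz, 35 Hz and so on.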

So the next obvious question would be: what part of Windows is doing that, and how can I disable it?

I think it would also be a good idea for percy to download ARTA and try measuring things with a different spectrum analyser. I also use Windows 7 and have tried to replicate his findings, but can't come up with anything, so I doubt this problem is simply a result of Windows screwing things up.

One thing to bear in mind, though, is that Windows has its own settings in the sound control panel that set the incoming and outgoing sample rate in software. For my sound card that's all they represent; they don't change what the hardware runs at. If it's set to 192k and I try to work with something at 44.1k, even though I've manually set the sound card to 44.1k and the data rate is 44.1k, Windows thinks it should be 192k, attempts to resample, and makes a real mess of it. To make sure Windows stays out of the picture, I have to set the outgoing and incoming sample rates and bit depth in the Windows control panel to match the data stream I want to work with. Then I set the correct sample rate/bit depth in the program I'm using, and select the correct sample rate in the sound card's control panel.
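Why a hidden 44.1k to 192k conversion can't be bit-transparent: the ratio between the two rates isn't an integer, so most output samples fall between input samples and have to be interpolated rather than copied. A tiny check with Python's standard `fractions` module, purely arithmetic and not a model of the actual Windows resampler (`rate_ratio` is an illustrative name):

```python
from fractions import Fraction


def rate_ratio(src_hz: int, dst_hz: int) -> Fraction:
    """Exact output/input sample-rate ratio in lowest terms."""
    return Fraction(dst_hz, src_hz)


ratio = rate_ratio(44100, 192000)
print(ratio)                                  # 640/147
# Input and output sample instants only coincide once every
# ratio.denominator input samples (= ratio.numerator output samples).
print(ratio.denominator, ratio.numerator)     # 147 640
```

Even 44.1k to 48k works out to 160/147, so any mismatch between the Windows setting and the stream's real rate implies resampling.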

Thank you guys for trying it out on your computers. It helps eliminate possibilities.

I am able to reproduce the effect using ARTA as well. It's "dead" at 24 bits, but as soon as I switch to 16 bits I see the same artefact.

And yes, I set the device sample rate and bit depth in the Windows audio device properties to what I am recording at.

One thing I haven't tried yet is disabling the built-in sound card while using the USB sound card. If I find anything interesting I will post an update later tonight.

It IS Windows, more specifically the legacy audio APIs, namely DirectSound and MME.

When I switch to WASAPI it's perfect, and I mean bit-perfect! The files now match bit for bit, and the artifact is gone.
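A bit-for-bit check like the one described can be sketched in Python with only the standard library. The sketch assumes both captures are raw 16-bit little-endian PCM that is already time-aligned (real captures usually need trimming to a common start first); `compare_pcm16` is a made-up name. Besides counting differing samples, it ORs together the XOR of each pair, so a mask of `0x1` would mean only the LSB ever differs, which is the pattern discussed earlier in the thread.

```python
import struct


def compare_pcm16(ref: bytes, cap: bytes):
    """Compare two time-aligned 16-bit little-endian PCM buffers.

    Returns (number of differing samples, OR of all per-sample XOR masks).
    """
    if len(ref) != len(cap) or len(ref) % 2:
        raise ValueError("buffers must have the same, even, length")
    diffs = 0
    mask = 0
    for (a,), (b,) in zip(struct.iter_unpack("<h", ref), struct.iter_unpack("<h", cap)):
        x = (a ^ b) & 0xFFFF       # which bits differ in this sample
        if x:
            diffs += 1
            mask |= x
    return diffs, mask


ref = struct.pack("<4h", 0x72F6, -5, 0, 1000)
cap = struct.pack("<4h", 0x72F7, -5, 1, 1000)
diffs, mask = compare_pcm16(ref, cap)
print(diffs, hex(mask))   # 2 0x1
```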

I was able to reproduce this on another laptop as well. Make sure your FFT size is large (at least 32k) and the magnitude scale goes down into the -140 dB range. The sound card's noise floor should be good too, otherwise the noise will mask those low-level spurs. The best way I have found to smoke this effect out is to mute the selected input.
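The advice about a large FFT and a -140 dB scale lines up with standard arithmetic. Ideal 16-bit quantization noise sits about 6.02 × 16 + 1.76 ≈ 98 dB below a full-scale sine in total, but an N-point FFT spreads that noise over N/2 bins, lowering the per-bin floor by a further 10·log10(N/2) dB. A quick Python sketch (function names are illustrative; window choice and the analyser's dB reference will shift these figures by a few dB):

```python
import math


def fft_bin_width(fs: float, n: int) -> float:
    """Frequency spacing between FFT bins, in Hz."""
    return fs / n


def ideal_quant_snr_db(bits: int) -> float:
    """Full-scale sine vs. uniform quantization noise: 6.02*N + 1.76 dB."""
    return 6.02 * bits + 1.76


def per_bin_noise_floor_dbfs(bits: int, n: int) -> float:
    """Quantization noise per FFT bin, spread over n/2 bins (processing gain)."""
    return -(ideal_quant_snr_db(bits) + 10 * math.log10(n / 2))


print(round(fft_bin_width(44100, 32768), 2))          # 1.35
print(round(per_bin_noise_floor_dbfs(16, 32768), 1))  # -140.2
```

With 32k points the bins are about 1.35 Hz wide, so a 7 Hz spur gets its own bins well clear of DC, and a spur only an LSB or so in size stands above a per-bin floor near -140 dBFS.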

I still don't know what DirectSound and MME are doing that causes this artifact when recording at 16 bits. There are several guesses. But for now I think I have accomplished what I actually set out to do: find out whether this player is bit-perfect or not. And it is!

That brings me to another dilemma: so much for fancy transports and exotic clocking and transmission schemes, when this cheap, light, plasticky little player accomplishes the same feat. Huh. Guess that's a subject for another thread...

Return to the topic. All further OT posts will be removed.