That was . . . um . . . my first and only comment regarding your (mis)statement about "small room absorption".Really!? Are you serious? I am not the one who has shifted position here. You are seriously backpedaling to try and justify a dumb position. Go back and read what you said.

No, there was a mistake where the delay was not used on the split files right pan. I decided to leave it to see if anyone noticed. I think it's pretty audible, tho it's hard to tell by ear why there isn't as much sift.Did you use the same delay for pans both ways?

But ...

Earl, diffuse is defined as being isotropic and homogeneous. No real room has those properties. Concert halls come close whereas acoustically small rooms are far from being diffuse. The concept of RT simply doesn't apply.

A RT based calculation was presented in connection with acoustically small rooms and I simply said that the concept of a diffuse sound field doesn't apply to those rooms. Don't know why that triggered a response from you at all.

RT60 == V/Sa where V = volume, S = surface area. Consider a square room. V = L^3, S = 6 x L^2, RT60== L/6a. Smaller rooms have shorter RT60.

(Note: == means goes like)

I think Barleywater a couple of posts ago misinterpreted the Sabine equation, by not taking V into account.

There is little doubt in my mind that the Saint Peter has a longer decay time than my bathroom.

Back again behind some speakers I would like to thank Pano for putting these files together. The reason to suggest delay panning is based on the following about localization.

Since soundwaves in air get so mixed up by the environment, the human brain has evolved to use different cues for localization. We have discussed already interaural time and loudness differences as an input to the neural processes that construct localization.

However, we operate in such an acoustic soup, that the localization mechanism is not something that can be seen in isolation from the entire sensor pod from which our ears protude. Ever had a stiff neck? Your whole ability to localize sound goes down 5 notches. While locating a sound, we turn, twist and shift our heads untill we are believe we have found the best fit to localize the sound source. This is typical when both phase and loudness information are most consistent, but even then, real acoustic environments often remain ambiguous. Visual cues are all important for the brain to make a final decision on the origin of a sound. The ventriloquest effect demonstrates the power of this mechanism.

With stereo, we not only miss those visual cues, but we are also forced to listen with a stiff neck. Panned sound sources are typically reproduced without any time delay between the two speakers. When listening without head movements, we can easily construct from the loudness difference between two ears what the apparent origin of the sound source is. However, when turning our head into the sound source for more precise localization using also time delay cues, that will generates an inconsistency between loudness and time delay inputs.

By delaying one of the channels, this inconsistency can at least partly be removed, is my idea. But 5 ms is way too long; that's a 1.7 meter long wave in air. The distance between our ears is about 20 cm, and you need to triangulate from the sound source to calculate the required time delay. It will be much shorter.

The interesting things with Pano's latest .wav files I found to be the following. The 5ms time delay is so long, that the signal from that side gets strongly masked. It becomes almost completely inaudible to me, unless I make an effort to hear it.

The second observation is that with inconsistent cues, like the split pans, the brain still tries to construct localization in the presence of ambiguous cues. Because it is quite obvious, it is even possible to consciencely choose between the two possibilties. The voice can be heard either from the centre or the side with a fair amount of credibility, by choice. Almost like the picture of a nice lady that can also be an ugly vase. The obvious inconsistency in cues that remains after a choice has been made produces a quazy sensation.

Since soundwaves in air get so mixed up by the environment, the human brain has evolved to use different cues for localization. We have discussed already interaural time and loudness differences as an input to the neural processes that construct localization.

However, we operate in such an acoustic soup, that the localization mechanism is not something that can be seen in isolation from the entire sensor pod from which our ears protude. Ever had a stiff neck? Your whole ability to localize sound goes down 5 notches. While locating a sound, we turn, twist and shift our heads untill we are believe we have found the best fit to localize the sound source. This is typical when both phase and loudness information are most consistent, but even then, real acoustic environments often remain ambiguous. Visual cues are all important for the brain to make a final decision on the origin of a sound. The ventriloquest effect demonstrates the power of this mechanism.

With stereo, we not only miss those visual cues, but we are also forced to listen with a stiff neck. Panned sound sources are typically reproduced without any time delay between the two speakers. When listening without head movements, we can easily construct from the loudness difference between two ears what the apparent origin of the sound source is. However, when turning our head into the sound source for more precise localization using also time delay cues, that will generates an inconsistency between loudness and time delay inputs.

By delaying one of the channels, this inconsistency can at least partly be removed, is my idea. But 5 ms is way too long; that's a 1.7 meter long wave in air. The distance between our ears is about 20 cm, and you need to triangulate from the sound source to calculate the required time delay. It will be much shorter.

The interesting things with Pano's latest .wav files I found to be the following. The 5ms time delay is so long, that the signal from that side gets strongly masked. It becomes almost completely inaudible to me, unless I make an effort to hear it.

The second observation is that with inconsistent cues, like the split pans, the brain still tries to construct localization in the presence of ambiguous cues. Because it is quite obvious, it is even possible to consciencely choose between the two possibilties. The voice can be heard either from the centre or the side with a fair amount of credibility, by choice. Almost like the picture of a nice lady that can also be an ugly vase. The obvious inconsistency in cues that remains after a choice has been made produces a quazy sensation.

Last edited:

Acoustic clues can be sufficient to do the job, no holograms need apply! If low level detail is obscured or distorted by relatively poor quality playback then you'll need all the tricks in the book, as described in this thread, to achieve convincing imaging. But turn up the quality gain control and the ear/brain has far more material to work with, finds it easy to unscramble the acoustic "soup". This is how you get "invisible" speakers, and the "magic" sound that most experience now and again ...

Hmmmm...

The direction should be much more obvious now, as I've panned the files 50% to one side in amplitude and delayed the softer channel by 5mS (~1.7 meters) to give it distance. Those effects combined pull the sound far left or far right. Amplitude or delay alone don't pull the sound as far as both combined.

This seems familiar - see my previous post.

Shorter delay times would be interesting to try: we might yet get perfect left to right panning by varying the delay times and volume of each channel just right...

Chris

Kindhornman,

I would settle for something like the Wall of Sound in a different configuration for every piece of music. But that's not how it is going to be. Something like five speakers in the front to get more coherence between loudness and time delay differentials might be a good way to go. However, this will require a whole redesign through the chain, from recording upwards.

Fas42,

I agree that this happens and I experience summits of auditory bliss on a daily basis. Stereo has come a long way. However, to illustrate a fundamental shortcoming there is an easy experiment that can be conducted in the comfort of your own home.

Play the song with the very best localization of voices and instruments on the system with invisible speakers. Observe yourself while you focus on the sounds and where they come from. What I notice when I do it, is that my head will be completely frozen in 3D in order to maintain the picture. Now, when I move my attention a nice solo or so, I don't move my head towards that sound! Because to move my head would significantly destroy my mental picture of the soundscape. That imo has to do with the incoherence between loudness and time information, see above.

With a live performance I would turn my head into the direction of the soloist, but I have unlearned to do that with my stereo.

I would settle for something like the Wall of Sound in a different configuration for every piece of music. But that's not how it is going to be. Something like five speakers in the front to get more coherence between loudness and time delay differentials might be a good way to go. However, this will require a whole redesign through the chain, from recording upwards.

Fas42,

I agree that this happens and I experience summits of auditory bliss on a daily basis. Stereo has come a long way. However, to illustrate a fundamental shortcoming there is an easy experiment that can be conducted in the comfort of your own home.

Play the song with the very best localization of voices and instruments on the system with invisible speakers. Observe yourself while you focus on the sounds and where they come from. What I notice when I do it, is that my head will be completely frozen in 3D in order to maintain the picture. Now, when I move my attention a nice solo or so, I don't move my head towards that sound! Because to move my head would significantly destroy my mental picture of the soundscape. That imo has to do with the incoherence between loudness and time information, see above.

With a live performance I would turn my head into the direction of the soloist, but I have unlearned to do that with my stereo.

I think Barleywater a couple of posts ago misinterpreted the Sabine equation, by not taking V into account.

There is little doubt in my mind that the Saint Peter has a longer decay time than my bathroom.

The time required to reduce a sound from 60dB above threshold of audibility to the threshold of audibility.

This is much different than threshold of hearing. Decay of sound in theoretical derivation of Sabine equation assumes that after many reflections sound energy throughout room becomes defuse.

The room becomes full of sound that masks perception of remaining reflections with correlation to the source signal.

But Earl has clearly stated that he is talking about aborption. Let's start with nice simple model: A spherical room. An absorption coefficient describing unit area absorptivity of material used to construct spherical room.

A point source at center of sphere emits 100% impulse and for sphere of radius R the sphere is constructed such that 1% of energy is absorbed and 99% is reflected back to source point with round trip time T.

A second sphere is constructed with the same absorption factor, and radius 2^0.5*R. This sphere has exactly 2x the surface area, and thus 2x the absorption as sphere of radius R, but with only 98% of origianl energy reflected back through origin, and a round trip time 2^0.5T.

At 10 reflection periods for smaller sphere we have 0.99^10= 0.904, thus about 90% of original energy is present.

For larger sphere 10/(2^0.5) = 7.07 reflection periods have passed, and we have 0.98^7 = 0.868, thus about 87% of original energy is reflected back through origin.

It is very clear from above simple math, with simplified case that smaller rooms are not inherently more absorptive.

It is also quite clear that when model includes source as generator of diffuse masking field that shorter reverberation time of smaller room is not because room gets quiet quicker, but becomes incoherent quicker.

Last edited:

The difference in shift was pretty obvious to me, and my ears do not measure well. If the difference of pan is in the delay and not the amplitude, it could explain the reduced focus that I heard.No, there was a mistake where the delay was not used on the split files right pan. I decided to leave it to see if anyone noticed. I think it's pretty audible, tho it's hard to tell by ear why there isn't as much sift.

Well, my experience is that there are additional "quality gains" to be made, which don't force my head to be in a vice like condition. As you point out, in a live performance there are no restrictions on your head movement, the sound images will be maintained when you twist your head or shift your head to a complete new position. Thus, live sound certainly projects the cues which allow you to "see the image".Play the song with the very best localization of voices and instruments on the system with invisible speakers. Observe yourself while you focus on the sounds and where they come from. What I notice when I do it, is that my head will be completely frozen in 3D in order to maintain the picture. Now, when I move my attention a nice solo or so, I don't move my head towards that sound! Because to move my head would significantly destroy my mental picture of the soundscape. That imo has to do with the incoherence between loudness and time information, see above.

With a live performance I would turn my head into the direction of the soloist, but I have unlearned to do that with my stereo.

So, the "next step" I suggest is, that those cues are sufficiently captured in most recordings for the same freedom of head movement to be possible while listening in stereo. However, if those cues are too compromised by the lack of resolution, transparency, quality, however you wish to call it, then the mind has insufficient data to work with, and you will need to use techniques of the type you mention.

I find there is a very sharp delineation between the sound being good enough, and not being good enough; it's almost a digital switch, there is very little gradual transition - so I either have "invisible" speakers, or they work normally, depending upon everything ...

Thank you for taking the time to audition them.Back again behind some speakers I would like to thank Pano for putting these files together.

It really is too long, but I didn't want to be subtle about it. The 10dB difference in the left and right tracks pulls the sound almost all the way to one side, the addition of the 5mS pulls it completely to one side for me, too. I can watch the VU meters flick on the far channel, but I just can't hear it coming from that speaker.The 5ms time delay is so long, that the signal from that side gets strongly masked. It becomes almost completely inaudible to me, unless I make an effort to hear it.

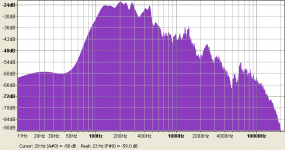

I can clearly hear the upper and lower parts of the split, which indicates that localization is still happening <700Hz for me. I don't know what other people would hear, or where the cutoff might be. FYI, that voice has almost no content below 80Hz. See below.The voice can be heard either from the centre or the side with a fair amount of credibility, by choice.

Attachments

Voice is only one part of music, I am sure that different instrument spectrums will yeald interesting results, and anything will count to some degree, the question really is how all this effect a design, which really is up to the designer to decide. If the designer cannot make sense of any difference, and associate it with technical issues, then designs generally may not have consistency in sound characteristics, and even more difficult to achieve improvements.

Just as a personal point of reference, a delay of 0.1 mS makes a noticeable shift for me. By 2.5 mS the sound has shifted all to one side. Beyond that and I start to hear a split, with the low end noticeable on the side without delay. I think that's within the norm.

Sengpiel has an interchannel level/time calculator on his website:

Calculation of the direction of phantom sources by interchannel level difference and time difference stereophony stereo time of arrival - localization curves - sengpielaudio Sengpiel Berlin

Your last 3 examples clearly demonstrate how important coherent panning of all frequencies is. It doesn't help to obsess about higher frequencies in speaker design when there are large spatial errors at lower frequencies.

That is a great reference Markus. I am sure much of this knowledge can be implemented into software to help create a process that project better sound image given the limited resources and options.

During an audio show last year over here, there was a demonstration of recording at different distances where the difference between direct sound and hall sounds were very obvious. So it seems selecting the right mic locations is not an easy task.

During an audio show last year over here, there was a demonstration of recording at different distances where the difference between direct sound and hall sounds were very obvious. So it seems selecting the right mic locations is not an easy task.

That is a great reference Markus. I am sure much of this knowledge can be implemented into software to help create a process that project better sound image given the limited resources and options.

Let's not forget that interchannel time panning doesn't go along well with the notion that amplitude panning already results in interaural phase differences caused by interaural crosstalk.

Last edited:

Kindhornman,

I would settle for something like the Wall of Sound in a different configuration for every piece of music. But that's not how it is going to be. Something like five speakers in the front to get more coherence between loudness and time delay differentials might be a good way to go. However, this will require a whole redesign through the chain, from recording upwards.

Fas42,

I agree that this happens and I experience summits of auditory bliss on a daily basis. Stereo has come a long way. However, to illustrate a fundamental shortcoming there is an easy experiment that can be conducted in the comfort of your own home.

Play the song with the very best localization of voices and instruments on the system with invisible speakers. Observe yourself while you focus on the sounds and where they come from. What I notice when I do it, is that my head will be completely frozen in 3D in order to maintain the picture. Now, when I move my attention a nice solo or so, I don't move my head towards that sound! Because to move my head would significantly destroy my mental picture of the soundscape. That imo has to do with the incoherence between loudness and time information, see above.

With a live performance I would turn my head into the direction of the soloist, but I have unlearned to do that with my stereo.

When I conduct this experiment in my home, all elements of soundscape maintain position with head rotation.

Your speakers don't truly disappear.

When I conduct this experiment in my home, all elements of soundscape maintain position with head rotation.

Your speakers don't truly disappear.

You mean his speakers don't create enough reflections that could mask the inherit problems of stereo?

- Home

- Loudspeakers

- Multi-Way

- Linkwitz Orions beaten by Behringer.... what!!?