I'm wondering - is anyone seeing differences in TV image between different (but properly working) HDMI cables?

It's very easy to demonstrate. Just take photos (16MP or more, 4K is 8MP) or use a 4K 120Hz camera.

But no manufacturers or resellers of HDMI cables provide this evidence, no one ...

I'm using USB for custom military development.

I used COTS cables at the beginning, with very bad results, even though the cables were marked High Speed USB (cheap or expensive ones, it made no difference).

After a lot of investigation and many measurements (LeCroy scope with a fixture per the USB standard, eye diagrams...) we concluded that this is like Russian roulette.

So we decided to use only custom-made, qualified cable from Draka.

We don't need any ferrite on the cable, but the interface is ESD-protected and EMI-filtered with a common-mode choke (CMC) on the PCB.

This is a technical issue.

My 2 cents.

JP

Yes - lots of 'commercial' USB cable products can be less than optimal - but ime this is usually in terms of interference (both ways) and typically the USB link will break down or be 'lost'. If your audio screeches or glitches or just stops then - yes - the cable might be the issue.

But it isn't likely to result in (making up audiophile speak

Eye diagrams, ESD and common-mode filtering - yes - now we're talking proper engineering!

The real question wrt audio transmission would seem to be the degree to which the 'playback' clock is separate from the 'USB clock'.

For info, I use USB comms in industrial / scientific test instrumentation.

The idea that there is some kind of digital signal being transferred by any cable is just as erroneous as the idea that the manipulation of digital data in a CPU (made of transistors, resistors, capacitors, pathways) or a memory chip is a digital signal. It's digital *data* but the electronic signals are AC analog, they only *represent* digital data.

So, regardless of your position, or your experience, with regard to digital transmission via cable, you must acknowledge that a simple 16/44 file via USB is a square wave at almost 45 kHz. Square waves can also be mathematically described as a series of sine waves whose harmonics are at ever higher frequencies than the fundamental, overlaid on top of each other with integrity in the time domain. So this is not your 20 kHz analog cable, or even your 45 kHz analog cable.
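To put a rough number on the "series of sine waves" point, here is a minimal sketch of the standard Fourier series of an ideal unit square wave (odd harmonics only, each weighted by 1/k). The function name and the 1 Hz fundamental are illustrative choices, not anything from a measurement. It only shows how slowly the partial sums settle, i.e. why a clean edge needs bandwidth well above the fundamental.

```python
import math

def square_partial_sum(t, f0, n_harmonics):
    """Sum the first n_harmonics odd harmonics of a unit square wave at time t."""
    total = 0.0
    for i in range(n_harmonics):
        k = 2 * i + 1  # odd harmonics only: 1, 3, 5, ...
        total += math.sin(2 * math.pi * k * f0 * t) / k
    return 4 / math.pi * total

f0 = 1.0        # fundamental in Hz (arbitrary, for illustration only)
t = 0.25 / f0   # quarter period, where the ideal square wave equals +1
for n in (1, 3, 10, 100):
    # With few harmonics the value overshoots or undershoots +1 noticeably.
    print(n, round(square_partial_sum(t, f0, n), 4))
```

With only the fundamental the value at the quarter period is 4/pi, and it creeps towards 1 as more harmonics are included.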

Higher resolutions mean higher frequency AC signals, and in every case they must be properly transmitted and "understood" by the receiving system which will process each and every bit via an analog circuit.

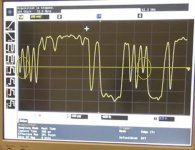

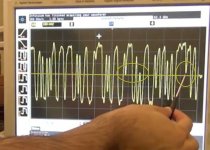

With sufficient resolution (using a GHz scope) errors in USB cables are easily discerned. Two examples are reproduced here: highlighted areas indicate where a change from a digital one or zero to the opposite polarity fails to make it sufficiently across the zero-crossing point to be identified as such by any downstream device. The images are a measurement of a USB cable from a digital frequency generator to the scope at 16/44 resolution.

Fine. So you'll have errors in the data and your USB link will likely fail - depending on where the errors occur in the USB data stream - or at least your audio will sound clearly wrong.

This only really happens due to interference or USB cables that are too long.

Also, isn't the data rate going to be a lot higher than the 45kHz figure stated - assuming a 'Stereo' signal transmitted - each of L/R running at 44.1kHz with 16 bits encoded plus all the other stuff that goes on the USB protocol. Gets you into MHz figures.
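The arithmetic behind that can be sketched quickly. This is only the raw PCM payload, ignoring all USB framing and protocol overhead, and the helper name is made up for illustration. The two bus rates are the nominal Full Speed and High Speed signalling rates, which is what the cable actually carries regardless of the audio sample rate.

```python
def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels):
    """Raw PCM payload rate in bits per second (no USB protocol overhead)."""
    return sample_rate_hz * bits_per_sample * channels

# Nominal USB bus signalling rates in bits per second.
USB_FULL_SPEED_BPS = 12_000_000
USB_HIGH_SPEED_BPS = 480_000_000

payload = pcm_bit_rate(44_100, 16, 2)  # 16-bit, 44.1 kHz, stereo
print(f"16/44.1 stereo payload: {payload / 1e6:.4f} Mbit/s")
print(f"share of a Full Speed bus: {payload / USB_FULL_SPEED_BPS:.1%}")
print(f"share of a High Speed bus: {payload / USB_HIGH_SPEED_BPS:.2%}")
```

So the payload alone is already in Mbit/s territory, and the edges on the wire run at the bus rate, not at anything related to 44.1 kHz.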

Thanks for the explanation. I take from it that asynchronous transfer is best for audio. That makes the DAC independent from the host with regard to clocking. But in this case there shouldn't be any influence of cables on the clock purity, right? Those who claim they can hear any difference in USB cables must have used synchronous transfer, correct?

I think you're confusing adaptive and asynchronous protocols. What you describe is true for adaptive endpoints (the pcm2*** series from TI, for example). Definitely not for the more modern interfaces like XMOS, which work as asynchronous endpoints.

There are 3 modes for audio transfer under USB audio. All 3 fall into the isochronous category (real-time delivery, with no retransmission if a packet is corrupted).

From the USB Device Class Definition for Audio Devices:

For more details on the synchronization methods, see section "5.10.4.1 Synchronization Type" of the Universal Serial Bus Specification Revision 1.1.
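For anyone who wants to see which of the three modes (asynchronous, adaptive, synchronous) a given device declares, the synchronization type is encoded in the `bmAttributes` byte of the endpoint descriptor: bits 1..0 give the transfer type and bits 3..2 the synchronization type for isochronous endpoints. Below is a minimal decoding sketch. The function and table names are made up for illustration, and it assumes you already have the raw byte (tools such as `lsusb -v` on Linux show it in the endpoint descriptor dump).

```python
# Bits 1..0 of bmAttributes: transfer type.
TRANSFER_TYPES = {0b00: "control", 0b01: "isochronous", 0b10: "bulk", 0b11: "interrupt"}
# Bits 3..2 of bmAttributes: synchronization type (isochronous endpoints only).
SYNC_TYPES = {0b00: "none", 0b01: "asynchronous", 0b10: "adaptive", 0b11: "synchronous"}

def decode_bm_attributes(bm_attributes):
    """Return (transfer_type, sync_type); sync_type is None if not isochronous."""
    transfer = TRANSFER_TYPES[bm_attributes & 0b11]
    if transfer != "isochronous":
        return transfer, None
    return transfer, SYNC_TYPES[(bm_attributes >> 2) & 0b11]

# Example bytes chosen by hand to cover the three audio modes plus a bulk endpoint.
for value in (0x05, 0x09, 0x0D, 0x02):
    print(hex(value), decode_bm_attributes(value))
```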

Here I found some useful information

Since it is trivially easy to send billions of bits across USB cables without the loss or error of even one bit, not one part in a billion - the only arguments left are jitter and noise. Bit perfect is easy.

Jitter and noise are not difficult to measure in the analog output signal from the DAC. You can see them in an FFT analysis. If they aren't getting thru the DAC, it doesn't matter. Well, it doesn't matter for those of us who listen to the resulting analog signal. For those of you who listen directly to the USB bitstream, then it could make a difference.

If you can see noise and jitter in the FFT, fix it. We do have the tools to figure this out, right?
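As a rough idea of what jitter looks like in such an analysis, here is a small simulation, not a measurement. It samples a 10 kHz tone with a sinusoidal timing error and reads the level of the resulting sideband relative to the tone. Every number in it (48 kHz rate, 10 ns peak jitter at 1 kHz) is an assumption picked so the tones land exactly on DFT bins, and the single-bin DFT is a plain stand-in for a real FFT analyser.

```python
import cmath
import math

def tone_with_jitter(n_samples, fs, f0, jitter_peak_s, f_jitter):
    """Sample a unit sine at f0 whose sampling instants wobble sinusoidally."""
    samples = []
    for n in range(n_samples):
        t = n / fs
        t_actual = t + jitter_peak_s * math.sin(2 * math.pi * f_jitter * t)
        samples.append(math.sin(2 * math.pi * f0 * t_actual))
    return samples

def bin_amplitude(samples, k):
    """Amplitude of DFT bin k, scaled so a unit sine centred on that bin reads 1.0."""
    n_samples = len(samples)
    acc = sum(x * cmath.exp(-2j * math.pi * k * n / n_samples)
              for n, x in enumerate(samples))
    return 2 * abs(acc) / n_samples

def sideband_db(samples, carrier_bin, offset_bins):
    """Level of the bin at carrier_bin + offset_bins relative to the carrier, in dB."""
    carrier = bin_amplitude(samples, carrier_bin)
    side = bin_amplitude(samples, carrier_bin + offset_bins)
    return 20 * math.log10(max(side, 1e-300) / carrier)

FS = 48_000   # sample rate in Hz (assumed)
N = 4_800     # gives 10 Hz per bin, so 10 kHz is bin 1000 and 1 kHz is 100 bins
clean = tone_with_jitter(N, FS, 10_000, 0.0, 1_000)
jittered = tone_with_jitter(N, FS, 10_000, 10e-9, 1_000)  # 10 ns peak jitter (assumed)

print("clean sideband (dB):   ", round(sideband_db(clean, 1000, 100), 1))
print("jittered sideband (dB):", round(sideband_db(jittered, 1000, 100), 1))
```

The jittered case shows a distinct sideband at 11 kHz while the clean case sits at the numerical noise floor, which is the kind of signature you would look for on the DAC's analog output.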

Well, I'd have to do some searching for the original video where the screen grabs came from (a test of the 'scope) but the cable looked to be about a half meter.

People who buy cable in good faith and hear sonic differences between two examples of different construction and materials should not have to own and use a test suite to verify exactly what issue is behind it, or be belittled because their results are "impossible".

None of that requires the purchase of "exotic" cable either; consumers should expect competent performance from whatever they choose to purchase. I don't see it as surprising that a cable of a different price point might be better constructed or more suitable for the purpose it's described to meet either.

I've no real opinion on the matter, but don't forget when you plug in a USB cable you're also connecting power and ground wires. Even if the data side of the system works perfectly (which it should), making those other connections could conceivably alter analog performance. It's hard enough to get analog right in a pure analog system, and digital equipment tends to be created in a more digital-centric design environment, probably less focused on the analog side.

You can easily check it for errors. Same with SPDIF. If you find errors, then you have a very bad cable.
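A minimal sketch of what such a check amounts to, assuming you have some way to capture the bytes that arrived at the far end (a loopback recording, for instance; that capture step is not shown and is entirely up to your setup). The function name is made up for illustration.

```python
def count_bit_errors(sent: bytes, received: bytes) -> int:
    """Count differing bits between two equal-length byte streams."""
    if len(sent) != len(received):
        raise ValueError("streams must be the same length to compare bit by bit")
    # XOR leaves a 1 wherever the two bytes disagree; count those 1s.
    return sum(bin(a ^ b).count("1") for a, b in zip(sent, received))

sent = bytes(range(256)) * 4       # stand-in test pattern
corrupted = bytearray(sent)
corrupted[10] ^= 0b00000101        # flip two bits to simulate a bad transfer

print("identical copy:", count_bit_errors(sent, bytes(sent)))
print("corrupted copy:", count_bit_errors(sent, bytes(corrupted)))
```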

I think you meant to write "I can easily check for errors ..."

The average consumer of retail audio, maybe not so much. And when he is told that any typical China-sourced USB cable is "perfectly fine", it's not helpful to subsequently offer that he bought a poor cable after following that advice, or that he is hearing things that don't really exist, or that he wasted his money when he chose to replace it with a name-brand one whose only attribute, according to some, is that it's not a "poor cable".

Bear in mind that at USB signal speeds (MHz not kHz) you need to take into account appropriate signal impedances and termination. Otherwise you are likely to get signal reflections which will interfere with signal propagation and compromise the signal integrity.

I'd suggest probing on the output of a USB receiver, or at least a properly terminated buffer if using sig gen rather than USB signal. Thus looking at a properly terminated signal.
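To show why termination matters for what the scope displays, here is a sketch using the standard reflection coefficient formula, (Z_load - Z0) / (Z_load + Z0). The 90 ohm figure is the nominal USB differential impedance; the load values are illustrative guesses, including a 1 Mohm load standing in for an unterminated high-impedance probe input.

```python
def reflection_coefficient(z_load_ohms, z0_ohms):
    """Fraction of the incident voltage wave reflected at the load."""
    return (z_load_ohms - z0_ohms) / (z_load_ohms + z0_ohms)

USB_DIFF_IMPEDANCE_OHMS = 90.0  # nominal differential impedance of a USB pair

# Illustrative loads: matched, slightly low, slightly high, effectively open.
for z_load in (90.0, 75.0, 120.0, 1e6):
    gamma = reflection_coefficient(z_load, USB_DIFF_IMPEDANCE_OHMS)
    print(f"load {z_load:>9.0f} ohm -> reflection coefficient {gamma:+.3f}")
```

A matched load reflects nothing, while an effectively open end reflects almost the whole edge back down the cable, which can look very much like a "bad" transition on a trace.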

I'm pretty sure that someone to whom Tek decides to lend a 50 GHz scope for review knows how to properly terminate his test setup.

Thanks for the explanation. I take from it that asynchronous transfer is best for audio. That makes the DAC independent from the host with regard to clocking. But in this case there shouldn't be any influence of cables on the clock purity, right? Those who claim they can hear any difference in USB cables must have used synchronous transfer, correct?

In theory, asynchronous could be made near perfect. Then of course it depends on implementation.

A less than perfect receiver followed by a good asynchronous sample rate converter could be just as good though (that was the approach used by many manufacturers before the XMOS chipsets became widespread).

I'm a long-time audiophile, new to computer audio in the past year. I had no opinion on whether digital cables affect sound until I tried some for myself. My computer audio system consists of a NAS and router, connected via wi-fi to a TP-Link extender in bridge mode, then via ethernet cable to an sMs-200 music renderer, then via USB to a DAC.

I upgraded the TP-Link CAT5e ethernet cable feeding the sms-200 to CAT7 Audioquest Pearl. No difference in sound, but low cost and worthwhile for the learning experience and to remove doubts about whether ethernet cable mattered.

Next I tried some generic USB cables vs. the Korg-supplied USB 2.0 cable. I did hear differences. Based on this experience and this review (Oyaide Neo d+ Class A USB 2.0 Cable | The Absolute Sound), I purchased the Oyaide Class A cable and its more expensive Class S sibling, with silver coating. The more expensive cable came first and was a minor disaster. It was more revealing and transparent than the Korg cable, but irritatingly bright.

Before the less expensive Oyaide cable arrived, I purchased a Vanguard dual-head USB cable from eBay (with separate power and data wiring). It sounded better than the Korg, but was displaced by the Oyaide Class A cable, which sounded just right to me, as the review had stated.

I am no longer using the Korg as my main DAC, as the DAC in my Audiolab 8200CD player is more resolving. I noticed that CD sounded better than the computer audio system, even though I was using the same DAC and the Audiolab has asynchronous USB. The difference was not in tone or resolution, it was a difference in coherence, only evident with voice. Sibilants and lower frequencies in voices seemed disjointed, spoiling the illusion of a singer in the room. After some research, I tried blocking the power pin on the usb cable (the Audiolab does not use usb power). This eliminated the difference in coherence between CD and CA. I no longer have a preference in SQ.

Note that Korg and Oyaide are pro audio sellers. One thing you can be sure of with pro audio is that the products are designed competently, which is not always the case with audiophile products.

I've got a lot of high falutin test equipment at work that we're encouraged to use (academic). Give me half a chance and I'll give you a screwed up measurement any time of day on stuff I don't know how to use properly.

Just saying that it's not a guarantee. But we do hope you're right. In any case, we don't know how those traces were generated from your post.

I remember in my rookie days testing a modem and it had 50Hz hum everywhere. I couldn't for the life of me find where it was coming from.

I then moved my sig gen off the top of the scope and the hum went away!

The sig gen mains transformer sitting on the scope was interfering with it.

Had a similar problem working on an audio mixer on my desk.

Many kilohertz of signal getting into the mixer.

Turned out to be my LED mains light just above radiating into the circuit.

I can only guess it had a switching supply in it.

I should add one more category to my original list

1. Power rail

2. Ground

3. Jitter-induced noise: that is, USB jitter on the incoming data induces noise.

4. Data rise time.

5. Common mode current.

USB signalling is differential but, like all differential signals, it is not 100% differential and will have some common mode component. As the signal travels, the + and - lines will have different propagation times, which creates a CM component. The longer the cable, the worse it will be. An inferior cable will have more CM. CM current is very bad, especially from one component to another. So this is just another interference path from the PC to the USB DAC. This is a "fixed" CM, or at least a non-dynamic or relatively low-frequency CM.

Another form of CM, high-frequency CM, is related to jitter. As the signal jitters, the jitter won't occur identically on the + and - lines, and whenever you have a mismatch you have CM, mostly at higher frequencies. An inferior cable will have more mismatch and will lead to more high-frequency CM.

I would guess high freq. CM is worse than low freq. CM.
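The skew-to-common-mode mechanism can be sketched with a toy model: two ideal complementary linear edges, with the - line delayed by some skew, and the common mode taken as the average of the two lines. The 0.4 V swing, 500 ps rise time and 100 ps skew are illustrative assumptions, not measurements of any cable, and the function names are made up.

```python
def clamp01(x):
    """Limit x to the range 0..1 (models a linear edge that starts and stops)."""
    return max(0.0, min(1.0, x))

def common_mode_peak_deviation(v_swing, rise_time_ps, skew_ps, step_ps=1):
    """Peak deviation of (D+ + D-)/2 from its ideal value during one edge."""
    ideal_cm = v_swing / 2
    peak = 0.0
    for t in range(0, rise_time_ps + skew_ps + 1, step_ps):
        d_plus = v_swing * clamp01(t / rise_time_ps)                       # rising line
        d_minus = v_swing * (1.0 - clamp01((t - skew_ps) / rise_time_ps))  # falling line, delayed
        cm = (d_plus + d_minus) / 2
        peak = max(peak, abs(cm - ideal_cm))
    return peak

# Assumed values for illustration only.
for skew in (0, 25, 100):
    dev = common_mode_peak_deviation(0.4, 500, skew)
    print(f"skew {skew:>3} ps -> peak CM deviation {dev * 1000:.1f} mV")
```

In this toy model the CM disturbance scales with skew divided by rise time, so it appears only around the edges, which is why it shows up as high-frequency content.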
