XXHE said: how to influence jitter

I will not dispute that software can influence jitter.

So software can influence jitter

I accept this, as I'm sure most others do, even skeptics. So please do not put up straw men.

No matter how USB asynchronously it is connected ...

And this is where I claim something is amiss.

In USB, so far only Wavelength Audio's more recent DACs support asynchronous USB audio. A few other DACs, like the EMU 0404, also have asynchronous connections, but they use custom drivers rather than a standard USB Audio Class interface, and those are unknowns: for example, there is no guarantee that system load might not affect them by causing buffer underruns, which would be more of a problem in the bulk transfer USB mode. Have you tested your software on Wavelength's DACs? Alternatively, have you tested your software on any DAC with the S/PDIF output from the PC slaved to the DAC clock (without any resampling)?

I am going to refute the possibility of two bit-perfect software players making a difference in a truly asynchronous setup:

A bit-perfect player sending data over a truly asynchronous interface is equivalent to copying a data file from one digital device to another, for example from one hard disk to another. A small buffer in the DAC serves as a second-level transport (a cache, if you will), and with a read-out clock to the D/A chip that is independent of the input, the interface jitter over the link is irrelevant as long as it is not so ludicrously enormous that data is corrupted (i.e. jitter magnitude on the order of the transmission time of a bit). If you agree that a file copied from one computer to another is identical, and that a copy operation over a different interface (say FireWire instead of SATA) will produce an identical file on the second device, then you are implicitly agreeing that data transferred from a computer to a DAC's buffer over a truly asynchronous interface is identical to data transferred in any other way that still qualifies as going over a truly asynchronous interface.
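To make the buffering argument concrete, here is a toy model in Python, a sketch under stated assumptions rather than how any particular DAC works: samples arrive over a link whose timing wanders, land in a FIFO, and are read out on a separate, clean local clock. It assumes the link delivers on average at least as fast as the DAC consumes, that the link jitter is smaller than half a link period, and that the buffer is pre-filled before read-out starts. `async_link` and its parameters are hypothetical names.

```python
import random
from collections import deque


def async_link(samples, link_period, jitter, dac_period, prefill, seed=1):
    """Toy model of an asynchronous link feeding a DAC-side FIFO.

    Arrival times wander by up to +/- `jitter` around the nominal link
    schedule; read-out happens on a local clock with a fixed `dac_period`.
    Returns (output_samples, readout_times).
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    arrivals = [n * link_period + rng.uniform(-jitter, jitter)
                for n in range(len(samples))]
    fifo = deque()
    out, times = [], []
    nxt = 0  # index of the next sample still "in flight" on the link
    start = arrivals[prefill - 1]  # begin read-out once the buffer is pre-filled
    for k in range(len(samples)):
        t = start + k * dac_period  # edge of the local, jitter-free clock
        # Move everything that has arrived by this clock edge into the FIFO.
        while nxt < len(samples) and arrivals[nxt] <= t:
            fifo.append(samples[nxt])
            nxt += 1
        if not fifo:
            raise RuntimeError("buffer underrun")
        out.append(fifo.popleft())
        times.append(t)
    return out, times
```

Changing `jitter` only changes when samples arrive. The samples that come out, and the spacing of the read-out clock edges, stay the same; the only difference is a constant start-up latency.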

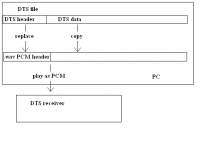

XXHE said: I don't think this can be useful. When the stream is decoded (into PCM) there's no receiver applicable anymore, and nothing will show "green lights" for a properly encoded stream.

What do you mean, decoded? What I'm saying is: take DTS data and copy it directly into a WAV file with a header specifying PCM. If a player is bit-perfect, the S/PDIF output will carry a stream identical to that of a DTS file being played. The OS will not know that the PCM is really DTS-encoded data, and the receiver will not know that the DTS stream it is receiving was stored in a PCM container.
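The container trick can be sketched with Python's standard `wave` module. The payload here is a hypothetical stand-in for DTS frames (real DTS-in-WAV files use their own framing, which this sketch does not reproduce); the point is only that the header declares plain PCM and the bytes come back out unchanged. `wrap_as_pcm` and `read_pcm_frames` are illustrative names.

```python
import io
import wave


def wrap_as_pcm(payload: bytes, rate: int = 44100) -> bytes:
    """Store arbitrary bytes in a WAV file whose header declares 16-bit stereo PCM.

    Nothing in the container inspects the payload: to anything reading the
    header, these bytes are simply PCM samples.
    """
    if len(payload) % 4:
        raise ValueError("payload must be whole 16-bit stereo frames (4 bytes)")
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(2)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(payload)
    return buf.getvalue()


def read_pcm_frames(wav_bytes: bytes) -> bytes:
    """Return the raw sample bytes of a WAV file, exactly as stored."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as r:
        return r.readframes(r.getnframes())
```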

Since I have been working on the software side of exactly such a DAC (so yes, there *is* another one!), I know exactly what you mean and are talking about. The kind of "problem" is this: where I am very cautious about calling the influences "jitter", you now overdo it. I still didn't say it ...

When you have the time, please read the link at the end of my post before last, so you'll get a hunch of the area to look into.

Also note that elsewhere I'm always saying that "jitter" is not to be measured at the digital side, but at the analogue side. This is not for nothing ...

(and yeah, I know, how to measure that ?!)

I already know that, e.g., the "nothing worth much" DirectSound output XX can offer (XP) measures the same jitter as, e.g., Foobar's DirectSound output. Still, the two sound completely different.

So it's one dimension worse than most people (if not all) expect ...

Lastly, I *know* that things can matter at the (transformation to the) analogue output of the DAC and it is this part which is influenced.

I can't help it that this is just so, but I am very happy with it.

Now, the real test to verify *that* would be:

a. Check that the output is bit perfect;

b. Measure two different versions or players for jitter;

c. When both measure equal, have an extensive ABX test.
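Step a. can be checked mechanically if the player's digital output is captured as raw bytes, for instance by recording the S/PDIF output on a second machine (an assumption about the test setup, not something described in this thread). The sketch below assumes both capture and source are 16-bit stereo, i.e. 4-byte frames; `find_bit_perfect_offset` is a hypothetical helper.

```python
def find_bit_perfect_offset(source: bytes, capture: bytes, frame_bytes: int = 4):
    """Return the frame-aligned byte offset where `source` appears verbatim
    inside `capture`, or None if it never does (i.e. not bit-perfect)."""
    start = 0
    while True:
        pos = capture.find(source, start)
        if pos < 0:
            return None
        if pos % frame_bytes == 0:
            return pos
        start = pos + 1  # match was not on a frame boundary; keep looking
```

Leading silence or a start-up offset in the capture is tolerated; a single changed byte anywhere in the program material makes the search fail.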

I MUST BE HONEST, though: even though I worked on a DAC like the Wavelength, I never applied the tests implied, so in fact I don't really know the results. OTOH, I cannot see how it differs from other asynchronous applications (which *do* sound different), so this is where my (in this case theoretical) standpoint comes from.

Please keep in mind: what you describe as "file copying" is the simplest description of something that technically does look like that. However, looking at the (C++ etc.) program doing that job of copying, it would really be not one bit (hehe) different from what XXHighEnd does. On that matter: XX works right against the driver ("into" would even be a better description).

Might it help: the code pushing through the bytes was written from the ground up by me. This is different from good old Kernel Streaming (which just existed in MS kernel code), certainly different from ASIO (the APIs just exist), and very different from something like DirectSound, which in fact is invisible (and STILL XX sounds different!).

But I am open to the "Wavelength" discussion really. It can turn out that you are right.

Peter

abzug said:

What do you mean decoded? What I'm saying is, take DTS data and copy it directly in a wave file with a header specifying PCM. If a player is bit-perfect, over the S/PDIF would come out a stream identical to a DTS file being played. Neither the OS will know that the PCM is really DTS encoded data, nor will the receiver know that the DTS stream it's receiving was stored in a PCM container.

Decoded like: it is PCM already, and DTS is invisible (it has gone ... it's just interleaved channels now).

I know what you mean, but the point is, once it's PCM nothing can go wrong anymore, so it is now allowed to be not bit perfect, and everything will play. Please keep in mind: the only thing that could hold it back would be a decoder (which doesn't understand data whose bits have been mangled). But the decoder just isn't there in your example ...

Might it help: I play DTS decoded in software, hence as PCM, right through XP's mixer. All is fine. Nothing *can* go wrong ...

And it is not bit perfect.

Ok ?
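The disagreement here is about what a non-bit-perfect path does to the bytes. Decoded PCM still "plays" after, say, a mixer gain change, but an encoded payload riding in a PCM container does not survive it, which is what stops an external decoder from locking. A minimal sketch, assuming a plain integer gain stage with rounding (the Windows mixer's real arithmetic differs; `apply_gain_int16` and `same_bits` are hypothetical names):

```python
import hashlib
import struct


def apply_gain_int16(data: bytes, gain: float) -> bytes:
    """Scale little-endian 16-bit samples by `gain`, rounding and clipping.

    Stands in for any non-bit-perfect stage (mixer volume, resampling, ...).
    """
    n = len(data) // 2
    samples = struct.unpack(f"<{n}h", data[:2 * n])
    scaled = [max(-32768, min(32767, round(s * gain))) for s in samples]
    return struct.pack(f"<{n}h", *scaled)


def same_bits(sent: bytes, received: bytes) -> bool:
    """Compare digests, as one might when checking long captures."""
    return hashlib.sha256(sent).digest() == hashlib.sha256(received).digest()
```

At unity gain the bytes are untouched; a gain of 0.99 is inaudible as a level change but rewrites nearly every sample.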

XXHE said: Since I have been working on the software side of indeed such a DAC (so yes, there *is* another one !)

Make that a third one. I also have an asynchronous USB interface of my own. I thought I was done with the digital side and was concentrating on the analog one, when I read threads like this one...

"jitter" is not to be measured at the digital side, but at the analogue side.

If you're comparing DAC chips' jitter rejection, yes. If you're comparing jitter in the system, there's no reason not to measure jitter at, say, the DAC chip's digital input.

things can matter at the (transformation to the) analogue output of the DAC and it is this part which is influenced.

The actual data contained in the digital stream, together with the jitter at the DAC chip's digital input, wholly determine the result of conversion. If the data and the amount of jitter are identical in two cases, then the result of digital-to-analog conversion will be identical. There is NO other information that can affect conversion. The data and the jitter at the chip inputs are _everything_. If they are identical in two cases, such as two players with bit-perfect output over an asynchronous interface, there physically cannot be any difference in conversion whatsoever.

I can't help it that this is just so, but I am very happy with it.

Considering it's the raison d'être for the existence of your player, that's not surprising.

a. Check that the output is bit perfect;

b. Measure two different versions or players for jitter;

c. When both measure equal, have an extensive ABX test.

I would bet the lives of my family members and anything else conceivable that if a. and b. are true, that guarantees c. will show no difference if double blind, with two qualifications: first, power supplies are properly decoupled and there is galvanic isolation and shielding on the signal lines (both of which I've taken care of in my own design); and second, jitter measurement is based not only on the magnitude of the RMS jitter but on actual comparison of the distribution (phase noise profile).
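The second qualification matters because an RMS figure throws away where the jitter energy sits. Below is a toy illustration with two synthetic timing-error sequences that have the same RMS but put their energy at different frequencies. The 1 ns amplitude and 64-sample block are hypothetical values; a real phase-noise measurement is made on the clock with proper instrumentation, not like this.

```python
import cmath
import math


def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))


def dft_magnitudes(x):
    """Plain O(N^2) DFT magnitude spectrum, normalised by N."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))) / n
            for k in range(n)]


N = 64
A = 1e-9  # hypothetical RMS timing error: 1 ns

# Sequence 1: sinusoidal timing error, 5 cycles per block (energy in bin 5).
jitter_sine = [A * math.sqrt(2) * math.sin(2 * math.pi * 5 * t / N) for t in range(N)]
# Sequence 2: error that flips sign every sample (energy at Nyquist, bin N/2).
jitter_alt = [A * (-1) ** t for t in range(N)]

spec_sine = dft_magnitudes(jitter_sine)
spec_alt = dft_magnitudes(jitter_alt)
peak_sine = max(range(N), key=spec_sine.__getitem__)
peak_alt = max(range(N), key=spec_alt.__getitem__)
```

Both sequences report the same RMS, yet one concentrates its energy at a low modulation frequency and the other at the highest one the block can represent.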

Considering it's the raison d'etre for the existence of your player, that's not surprising.

I'm sorry, but this leads to nothing. If I say that I'm happy, I say that because of the SQ that can be created from it.

I don't think I need remarks like this.

abzug,

I have to hand it to you - you've tried valiantly to make your point. But I think it might be time to leave it be. The believers aren't going to be convinced by mere logic, and you've approached this from pretty much all the angles. I do sympathise - because it's very frustrating trying to explain things sometimes - and I agree with basically everything you've said. In fact, the only point where I wasn't sure was:

Originally posted by abzug

So software can influence jitter

I accept this, as I'm sure do most others, even skeptics. So please do not put up strawmen.

Could you explain to me how that works? Since the entire software chain is buffered and slaved to the clock of the audio device, how can the software have any effect on output jitter?

The idea of a software player written from the ground up to have proper audio handling definitely intrigued me when I first heard about it, but I can safely say based on the comments (1) in this thread that I wouldn't use XXHighEnd even if it were free. I'll stick with Winamp+ASIO, since it's not possible to do better and the code for the ASIO plugin is freely available.

(1) Along the lines of:

"Well, there's no actual reason for this to be any good. But just listen to it, man! The improvement is huge!"

I have little faith in an application written by someone who does not even seem to understand that WAV is a container format and may carry a raw DTS bitstream.

As you like.

I know abzug tried to make it additionally clear with a picture, but apparently I don't grasp his point.

My point is: you can't use the DTS stream to test for bit perfectness, because Vista will simply switch to bit-perfect mode when it sees the DTS stream (and the like, btw).

First decode it into PCM (at least that's what I think abzug is after) and it has become a perfectly normal WAV, which will just play, bit perfect or not.

No, you can have a WAV file containing DTS bitstream. You can play this file back via ASIO and it will be decoded into 5.1 audio if you have an external decoder attached via SPDIF.

This is the whole point of Abzug's post... you play a DTS encoded WAV back, which the player believes is PCM (although it is NOT), and if the player (and sound card) is not 100% bit-perfect, the external decoder will not decode the DTS stream successfully.

This test has been known and used for a long time. I suggest you get a program such as SoundForge that allows you to save AC3 and DTS bitstreams inside a WAV file.

Ok, last time from my side :

a. I know that;

b. That doesn't work for Vista.

No matter how long the trick has been known, Vista will switch to Bit Perfect once it detects such a stream (and it does). It has to, because otherwise the stream won't come through unharmed. This is the exception to everything else, where the bits are mangled.

In XP that test *did* work; IOW, it really told you whether the chain was inherently bit perfect or not.

This is nothing I found or whatever; it's in Vista's audio specs.

You can play this file back via ASIO

XX does it too. Is that important? No. Every player that can pass that stream through will do it. Not every player can, though, and this is not related to bit perfectness by itself.

Originally posted by abzug

If your source music is 16 bit but the DAC and link 24 bit, then digital volume control will not cause distortion over a range of attenuation close to 50 dB, unless someone really botched the implementation. Of course, some DACs use schemes such as adding shaped noise to lower bits to fake information there (this was in some Wadia patents from what I remember) so this may not be the optimal setup.
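The "close to 50 dB" figure follows from the eight extra bits a 24-bit path has over 16-bit source data, at about 6.02 dB per bit:

$$20\log_{10}\left(2^{24-16}\right) = 160\log_{10}2 \approx 48.2\ \text{dB}$$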

I know that I must be sounding like a broken record these days, but every time I see someone say that digital processing causes distortion, my right cheek twitches.

Dither, people. Dither! If you use dither, no distortion is produced. At the same time, and depending on the exact arithmetic being used, an undithered 24-bit volume control CAN introduce distortion even if the input is 16-bit and the gain is higher than -48dB (it will be quieter than the LSB of the 16-bit data that went in - which might have been what you meant - but my point is that 16-bit data won't get through such a volume control totally unmolested).
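A sketch of the undithered versus dithered case, assuming a simple volume control that places 16-bit samples in a 24-bit word, scales them, and rounds to nearest, with optional TPDF dither of ±1 LSB (a common choice; real implementations may use other word lengths or noise shaping). `volume_24bit` is a hypothetical name.

```python
import random


def volume_24bit(samples16, gain_db, dither, seed=0):
    """Apply a digital volume change to 16-bit samples, requantizing to 24 bits.

    With dither=True, TPDF dither spanning +/-1 LSB (of the 24-bit output)
    is added before rounding, which makes the rounding error independent of
    the signal instead of a deterministic function of it.
    """
    rng = random.Random(seed)  # seeded so the example is repeatable
    gain = 10 ** (gain_db / 20)
    out = []
    for s in samples16:
        y = s * 256 * gain  # 16-bit sample moved into a 24-bit word, then scaled
        if dither:
            y += rng.random() - rng.random()  # difference of two uniforms = TPDF
        q = round(y)
        out.append(max(-(1 << 23), min((1 << 23) - 1, q)))  # clip to 24-bit range
    return out
```

At -3 dB the undithered rounding error repeats exactly whenever the input repeats, so a periodic signal gets a periodic error (harmonic distortion), even though each error is under half a 24-bit LSB. With dither the error is larger but no longer follows the signal.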

If it's done right, digital processing is your friend.

My whole point was to prevent decoding of the DTS by the OS/software and fool the computer into treating DTS data as if it were PCM. I thought that point is very simple and everyone here understood it.

If you take a wav file and replace a part of its PCM data with data you copy from a DTS file, it will go through the sound subsystem as if it were PCM--because if the system is bit-perfect, then it doesn't care about the contents of the PCM stream--it just forwards it, so it will be treated the same way regardless of what data you put into it.

XXHE said: Vista will switch to Bit Perfect once it detects such a stream (and it does).

How would Vista know the stream is DTS if you put the DTS data into a WAV file which specifies it's a PCM stream? Vista will treat the data as PCM if you tell it it's PCM!
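What a header-level check can see is easy to show: walking the RIFF chunks of a WAV file yields the `wFormatTag` from the `fmt ` chunk (0x0001 for PCM, 0xFFFE for WAVE_FORMAT_EXTENSIBLE, where a SubFormat GUID then decides). This sketch says nothing about how Vista's audio engine actually inspects streams; it only shows that a PCM header carries no hint about what the sample bytes encode. `wav_format_tag` is a hypothetical helper.

```python
import struct

WAVE_FORMAT_PCM = 0x0001
WAVE_FORMAT_EXTENSIBLE = 0xFFFE


def wav_format_tag(data: bytes) -> int:
    """Walk the RIFF chunks of a WAV file and return wFormatTag from 'fmt '."""
    if data[:4] != b"RIFF" or data[8:12] != b"WAVE":
        raise ValueError("not a RIFF/WAVE file")
    pos = 12
    while pos + 8 <= len(data):
        chunk_id, size = struct.unpack_from("<4sI", data, pos)
        if chunk_id == b"fmt ":
            return struct.unpack_from("<H", data, pos + 8)[0]
        pos += 8 + size + (size & 1)  # chunks are padded to an even length
    raise ValueError("no fmt chunk")
```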

Wingfeather said: it will be quieter than the LSB of the 16-bit data that went in

That's the point.

abzug said:

My whole point was to prevent decoding of the DTS by the OS/software and fool the computer into treating DTS data as if it were PCM. I thought that point is very simple and everyone here understood it.

Ah ... ok, now I understand too.

Please allow me to explain (if I can at all) why at least *I* don't think this way:

If you take a wav file and replace a part of its PCM data with data you copy from a DTS file, it will go through the sound subsystem as if it were PCM--because if the system is bit-perfect, then it doesn't care about the contents of the PCM stream--it just forwards it, so it will be treated the same way regardless of what data you put into it.

How would Vista know the stream is DTS if you put the DTS data into a wav file which specifies it's a PCM stream? Vista will treat the data as PCM if you tell it it's PCM!

The "sound system" you mention consists of only two main parts: validating the (WAV) file, and attaching the DAC (driver) to the program's "audio session", feeding it the data used in that validation (the remainder of the sound system is the program pushing the bytes; keep in mind, this is WASAPI programming, not DSound, ASIO or KS).

Both processes use the same data, and when your example is fed to them, I think it will fail, simply because it's not consistent.

When I say that both processes (validating the file and attaching to the driver) use the same data, this is actually not true, because the data can be mangled. XX does that too, because otherwise, e.g., a 96/24 file just can't play in Exclusive Mode. This is a bug in there, and it is related to the inherent format of the 24-bit file, which uses 3 bytes per sample, while the DAC needs (padding to) 4 bytes. It just doesn't match when, again, "both processes use the same data".

I expect something similar with DTS (etc.), but sadly I can't try it, because I don't have a DTS receiver either.

I think this all relates to reports from people where one says the DTS test succeeds and another says it does not, with the same player (incl. WMP IIRC). It depends on the DAC! Or better: on what the soundcard driver needs for padding.

It gets more complex when we see that the test can already fail because the format doesn't get accepted. So this is now unrelated to bit perfectness and highly related to the player in combination (!) with the soundcard driver (or USB for that matter).

OK, whether it can work or not is one thing; the other is that if one didn't set it up properly but the DTS light goes on, one could be fooled by Vista's switch to Exclusive Mode, and *that* is always bit perfect.

Difficult ...

Assuming you meant the "solution" ... I'm not sure.

Padding means changing the BlockAlign (and some derived fields), and this is typically the thing the Engine trips on.
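To put numbers on the BlockAlign point: in a WAVEFORMATEX-style header, nBlockAlign is nChannels × wBitsPerSample / 8 and nAvgBytesPerSec is nSamplesPerSec × nBlockAlign, so moving 24-bit samples from 3-byte to 4-byte containers changes both. `PcmFormat` is a hypothetical helper that only does this arithmetic; it says nothing about which format a given driver will accept.

```python
from dataclasses import dataclass


@dataclass
class PcmFormat:
    channels: int
    sample_rate: int
    container_bits: int  # wBitsPerSample: bits each sample occupies in memory
    valid_bits: int      # wValidBitsPerSample (WAVEFORMATEXTENSIBLE)

    @property
    def block_align(self) -> int:
        # nBlockAlign: bytes per frame (one sample for every channel)
        return self.channels * self.container_bits // 8

    @property
    def avg_bytes_per_sec(self) -> int:
        # nAvgBytesPerSec = nSamplesPerSec * nBlockAlign
        return self.sample_rate * self.block_align


packed = PcmFormat(2, 96000, 24, 24)  # as stored in a typical 24-bit WAV file
padded = PcmFormat(2, 96000, 32, 24)  # 24 valid bits in a 32-bit container
```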

Again, to the 96/24 file example: there is no way Vista's Audio Engine (matching the file against the soundcard/DAC properties) will accept it. Please keep in mind: Exclusive Mode. Shared Mode will, but resampled to something it *can* handle. And that, again, won't be bit perfect.

I solved the 96/24 situation by fooling the first part (file validation) with dummy data. But what good is that to *your* file/tests when used with another player that doesn't mangle things in this area?

I think by now we have spent more time talking about it than a test would take, but again, sadly I can't test it for lack of a DTS receiver.

Please do not test this with XXHE itself, because it will always work there, since I shut off Shared Mode entirely (which of course I could switch back on if I had that receiver).

Hmmm ... forgive me. By now I have kind of lost track of the case.

What were we actually trying to do? Use the good old DTS test to verify bit perfectness, right? But don't we already know that it isn't? (except maybe for some tweaked CMedia driver).

It might be at 24 bits (and it WON'T be at 44.1/24, because that's always rejected!), but DTS won't be 24 bits, right?

So don't we already know the answer?

It does. But the file data (3 bytes per sample per channel) will never match a soundcard (which needs 4 bytes).

Therefore it's rejected. In kernel code the match won't go through.

Better to look at it the other way around:

The file says 3 bytes per channel, and THUS the soundcard is attached with that. But no(ne that I know of) soundcard can do that.

Then it backfires on you, because the soundcard needs 32 bits, and now the file doesn't match that.

What it comes down to (functionally speaking) is that the FILE needs the padding.

I hope it is clear.
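Padding the file data itself can be sketched as a byte-level conversion. This assumes little-endian samples and places the 24 valid bits in the most significant part of each 32-bit word with a zero low byte (left-justified, the usual convention); every value is then scaled by exactly 256, so a DAC reading the top 24 bits receives the original bits. `pad_24_to_32` and `unpad_32_to_24` are hypothetical names.

```python
def pad_24_to_32(packed: bytes) -> bytes:
    """Convert little-endian packed 24-bit samples to 32-bit containers.

    Each 3-byte sample becomes 4 bytes with a zero low byte, so the 24 valid
    bits sit in the most significant part of the 32-bit word.
    """
    if len(packed) % 3:
        raise ValueError("length must be a multiple of 3 bytes")
    out = bytearray()
    for i in range(0, len(packed), 3):
        out += b"\x00" + packed[i:i + 3]
    return bytes(out)


def unpad_32_to_24(padded: bytes) -> bytes:
    """Inverse of pad_24_to_32: drop the low byte of each 32-bit word."""
    if len(padded) % 4:
        raise ValueError("length must be a multiple of 4 bytes")
    return b"".join(padded[i + 1:i + 4] for i in range(0, len(padded), 4))
```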

- Is Vista really capable of bit-perfect output?