The 50-120 ms data comes from large-room acoustics: too much energy in a concert hall or auditorium is bad for intelligibility. That's why the best halls have a dual-sloped decay: an ISD gap of 20 ms, slowly decaying first reflections (their level still below a certain threshold so as not to cause excessive phase distortion at 700-4000 Hz), a rapid decay from 50 to 120 ms to avoid muddiness, and finally a slow decay after 120 ms, giving a good sense of envelopment.

Not applicable in small rooms: there is not enough reverberant energy left once you have dealt with getting a sufficient ISD gap.

So yeah, in a small room, listening to stereo, everything you get after ~10-20 ms is considered good (except flutter): it gives a sense of spaciousness and detaches the 'reverberant image' from the speakers. Not a problem with multichannel, which can be comfortably listened to in practically anechoic conditions.

Even among anechoic rooms, few have cut-off frequencies down to 10 Hz; 100 Hz seems common. Speakers are designed to make use of the room. No speakers are designed for an anechoic chamber. Bring your speaker into a 2 m x 2 m room and it will be boomy. Bring the speaker out to the yard (or into an anechoic chamber) and there will be no bass.
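To put rough numbers on why a 10 Hz chamber is rare, here is a small sketch. It assumes the common rule of thumb that absorbing wedges stop working once they are shallower than about a quarter wavelength; the function name and the 343 m/s figure are my own choices, not anything from a chamber spec.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed)

def quarter_wave_depth(cutoff_hz: float) -> float:
    """Wedge depth in metres equal to a quarter wavelength at cutoff_hz.

    Rule-of-thumb assumption only; real chambers are rated by measurement.
    """
    return SPEED_OF_SOUND / (4.0 * cutoff_hz)

for f in (100.0, 10.0):
    print(f"{f:5.0f} Hz cutoff -> wedges roughly {quarter_wave_depth(f):.1f} m deep")
```

A 100 Hz cutoff needs wedges a bit under a metre deep, while 10 Hz would need ten times that on every surface, which is probably why so few exist.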

When speakers are acoustically small, yes, we want to take advantage of room acoustics. As speakers get larger, ideally they would be used in larger rooms, and rooms can be tailored to just fill in what is lacking.

“A bedroom sounding like a cathedral makes as little sense as the opposite!”

What do you mean here? Spatial information is part of the recording, so even in a small room you can hear the sound as if it comes from behind the wall.

Imaging is important for enjoyment. I'm always confused by headphone imaging. Today I was very surprised (and turned my head) when, listening on headphones, a fast instrument sound came from behind my head (what!??).

“Which part isn't easy? Building such a system or doing the listening tests?”

Deciding what the questions are and then doing the tests to answer them. What is very clear is that everyone's objectives are not the same, which makes the questions being addressed narrowly applicable at best.

For example, you are always looking for what makes a particular - but given - room work best. I look at how the room should be built to work best. We would clearly ask different questions. Sometimes they may be the same, but not always. You use a sub solution that works only for one person. I won't even consider that. We have very different objectives (and yet I agree with you more than with almost 95% of the people here.)

According to David Griesinger of Lexicon, delays between 50 ms and 150 ms will be particularly detrimental to intelligibility.

Griesinger was, and may still be, the top engineer at Lexicon when it comes to reverb design. He's also done a huge amount of recording in various halls all over the world, using every imaginable technique. He is one of the most knowledgeable engineers in the world on this stuff. Google his name and check out his many papers. If intelligibility is blurred, why wouldn't you think the music would be blurred also?

I've met David several times. He hasn't worked at Lexicon since JBL bought it several years ago. I have read just about every paper that he has written. I am sure that he would agree with me and the other poster here that the 50 - 150 ms criterion makes no sense in a small room. That time frame is only applicable to a large auditorium. I also do not see a link between "intelligibility" and "sound quality", especially in a small room. They are simply entirely different things and could easily be opposed to each other in a small room.
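A quick back-of-envelope sketch of why that time window belongs to big halls. It only converts a reflection's delay into the extra distance it has to travel compared with the direct sound; the 343 m/s speed of sound and the function name are my assumptions.

```python
SPEED_OF_SOUND = 343.0  # m/s (assumed room-temperature value)

def extra_path_m(delay_ms: float) -> float:
    """Extra distance a reflection travels to arrive delay_ms after the direct sound."""
    return SPEED_OF_SOUND * delay_ms / 1000.0

for delay in (10, 20, 50, 150):
    print(f"{delay:3d} ms late -> about {extra_path_m(delay):.0f} m of extra path")
```

A reflection 50 ms late has travelled roughly 17 m further than the direct sound, and one 150 ms late roughly 51 m further. In a room a few metres across that takes many bounces, so there is usually little energy left by then, whereas a concert hall gets there in one or two.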

Agree. They aren't always the same thing - tho Tom Danley may be able to prove me wrong.

Think of the common experience at an amplified concert. The band is playing, the singer singing, everything sounds about right. Then the music stops and the singer starts talking to the audience. But - oops! - the sound guy forgot to turn off the reverb on the vocal mic. Were you surprised at the huge amount of reverb on the vocal mic? You didn't notice it in the mix, but there's a ton of it and it's very noticeable. Then back to the music and the very same reverb sounds right again. Strange effect.

The brain doesn't process music and speech in the same ways. What sounds good on music doesn't always sound good (or correct) on spoken voice. We also expect to hear different things on each.

Hi Bob

“If intelligibility is blurred, why wouldn't you think the music would be blurred also?”

NO!!

The reason is that speech intelligibility is being able to make out random words; musical enjoyment is the enjoyment of sound.

An example of how these are not the same is a choir in a large old church: there can be ZERO intelligibility and still it sounds heavenly, like it’s supposed to.

It’s only when one wants to transmit information via sound (like words, like a stereo image, etc.) that the harm of delayed sound becomes more apparent.

Outside hi-fi, in commercial sound, where intelligibility is increasingly a legal requirement, a number of measurements were developed to help quantify it; the most recent and most accurate is called STIpa.

It is a language-independent test which predicts what level of intelligibility one has with a given loudspeaker system, room and location.

In Europe, it is now a legal requirement that any public space has an intelligible emergency warning system that passes the test, and it will be here very soon.

Intelligibility is measured in a way very similar to resolution in optics, using a Modulation Transfer Function test.

Modulation Transfer Function - what is it and why does it matter? - photo.net

In audio, to make the STIpa measurement, MTFs at 7 different voice-band frequencies are made and weighted to the voice spectrum. Each of the frequencies is modulated at rates up to 30 Hz (from full to zero amplitude, etc.).

Anything like reflected sound, other flaws, etc. fills in the “off” period of the signal, reducing the depth of modulation and so reducing the amount of information which arrives.
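To make the "filling in the off period" idea concrete, here is a minimal sketch using the textbook modulation transfer function for an ideal exponentially decaying reverberant field with no background noise. That idealisation is an assumption on my part, not how an STIpa meter works internally, and the function name is made up for illustration.

```python
import math

def modulation_index(mod_hz: float, rt60_s: float) -> float:
    """Modulation depth (0..1) left after ideal exponential reverberation.

    Assumes a purely exponential energy decay and no noise.
    """
    k = 6.0 * math.log(10.0)  # about 13.8: energy falls 60 dB in rt60_s
    return 1.0 / math.sqrt(1.0 + (2.0 * math.pi * mod_hz * rt60_s / k) ** 2)

for rt60 in (0.3, 2.0):
    row = ", ".join(f"{f:g} Hz: {modulation_index(f, rt60):.2f}" for f in (1, 4, 12.5))
    print(f"RT60 {rt60} s -> {row}")
```

The longer the decay and the faster the modulation, the shallower the modulation that survives, which is the loss of "information" described above.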

In large room acoustics where there is so much less absorption (room volume where energy is stored usually increases faster than the surface area where absorption occurs), increasing directivity (the ratio of energy within the intended pattern compared to that outside it) and minimizing the number of sources is the number one best way to deal with that.

One of the early attempts to quantify the effects is the Hopkins-Stryker equation, and still today intelligibility goes up when you increase directivity and/or reduce the number of sources of sound.
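A rough sketch of what the equation says about directivity and source count. It uses the usual metric form, with a direct term Q/(4πr²) and a reverberant term 4/R, where R is the room constant. Multiplying the reverberant term by the number of sources is a simplifying assumption of mine (identical sources, only one supplying the listener's direct sound), and the hall numbers are made up.

```python
import math

def direct_to_reverberant_db(q, r_m, room_constant_m2, n_sources=1):
    """Direct-to-reverberant level difference in dB from the Hopkins-Stryker terms.

    q: directivity factor of the source aimed at the listener
    r_m: listener distance in metres
    room_constant_m2: R = S*a / (1 - a), in square metres
    n_sources: assumption, identical sources that all feed the reverberant field
    """
    direct = q / (4.0 * math.pi * r_m ** 2)
    reverberant = 4.0 * n_sources / room_constant_m2
    return 10.0 * math.log10(direct / reverberant)

def critical_distance_m(q, room_constant_m2):
    """Distance at which direct and reverberant levels are equal (one source)."""
    return math.sqrt(q * room_constant_m2 / (16.0 * math.pi))

# Hypothetical hall: R = 500 m^2, listener at 15 m.
for q, n in ((5, 1), (20, 1), (20, 4)):
    ratio = direct_to_reverberant_db(q, 15.0, 500.0, n)
    print(f"Q={q:2d}, sources={n}: D/R = {ratio:+.1f} dB")
```

Each doubling of Q buys about 3 dB of direct sound over the reverberant field, and under the assumption above each doubling of the number of sources gives it back.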

Sound System Engineering 4e - Don Davis, Eugene Patronis, Pat Brown - Google Books

Plain Jane MTF measurements can be made using ARTA as well; the display shows the modulation depth vs the modulation frequency at each fundamental that is included.

For example, 1000 Hz might be modulated at up to a 30 Hz rate, and normally the higher the rate of modulation, the less depth of modulation one sees.

As you move the microphone farther away from the speaker, the modulation depth decreases; as you decrease loudspeaker directivity, the depth decreases. MANY things reduce the resolution, and sadly the measurement doesn’t tell you where to look or what’s involved.

Part of the “fun” in audio is equating what you measure to what you hear. Part of the problem is that your hearing system is tuned to seek out the signal and reject the noise. Not only that, but part of that process is taking two input signals, each of which individually has strong angle- and height-dependent effects hinging on incoming angles, and constructing a single 3D image in our minds. That process makes it hard to hear or tune into what’s wrong instead of what’s right.

If you have a measurement mic and good (ideally sealed-back) headphones, there is something neat you can do which bypasses the stereo image and all that processing. Set up and listen via the measurement mic and headphones. First, listen to your room, have others talk, and get used to the sound of voices and familiar sounds BUT without the normal stereo image (the mic signal to both ears makes a mono phantom image which, lacking pinna cues, appears in the middle of your head).

When you feel like you're used to that perspective, NOW turn on your stereo and play some music, but only through one loudspeaker. Often you will hear coloration that you didn’t hear live, but after hearing it in the headphones for a while, you may well hear it live too. In a room, you will hear more room than live too, because the microphone is omnidirectional while your ears have some directivity.

At work, as a reality check (in the beginning it wasn’t clear we were barking up the right tree) we did a number of “generation loss” recordings with both our own and competitors' loudspeakers.

The old-time generation loss test was a way to exaggerate anything that was not faithful to the signal. Recording tape was another place this was often done: the better the recording system, the more generations could be played and re-recorded before the degradation was too large.

If you make a recording of the loudspeaker, play it back and re-record it, everything that was wrong before will stand out much more strongly as “wrong”.

In that generation loss recording at work, it was very rare to have a loudspeaker go even 3 generations before being unlistenable or close to it; that’s how far they are from signal-faithful, even if you do it on a tower and just record the loudspeaker's output.

Often enough, just listening live via microphone was enough to hear what was going to stand out on generation one on the more colored speakers. With modern 24/96 recorders, it is possible to go through many generations in and out before the recording chain itself degrades the signal.

Loudspeakers ARE still the weak link, and that makes them fun to work on, I think.
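A toy sketch of why a few generations is all it takes, looking only at linear frequency-response errors. Passing a signal through the same linear system again adds its dB deviations once more at every frequency. The per-band numbers below are invented for illustration, not measurements, and real generation loss also piles up noise and nonlinear distortion, which this ignores.

```python
# Hypothetical per-band deviations (dB) of one speaker-plus-mic pass; not measured data.
single_pass_db = {"100 Hz": -2.0, "1 kHz": 0.5, "3 kHz": 1.5, "10 kHz": -3.0}

def after_generations(response_db, generations):
    """Cascading the same linear system n times multiplies its dB response by n."""
    return {band: dev * generations for band, dev in response_db.items()}

for n in (1, 2, 3):
    result = after_generations(single_pass_db, n)
    spread = max(result.values()) - min(result.values())
    print(f"generation {n}: spread between bands {spread:.1f} dB")
```

A speaker that looks like a fairly ordinary +1.5/-3 dB on one pass is well past 10 dB of spread by the third generation in this simplified picture.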

Best,

Tom

Agreed.

I am not clear where Mr. Richards got the 50 - 150 ms figure (although "intelligibility" is different than "sound quality".) I look for as many reflections in this range as possible, as this adds to "spaciousness" (which is known to degrade intelligibility.)

Yes, me too.

What I find matters is not whether there are any reflections between 50 ms and 150 ms, but how fast the reflections decay.

In other words reverb time.

I completely agree with this. 100%

When I was building my room over ten years ago, I emailed several people who had built a listening room, and some were listening in near-anechoic conditions.

Reducing all reflections sounds best to me.

It is possible that "live sound" for home audio simply became popular without justification, and many followed that idea without trying the opposite.

My experience of live sound has changed as well. I attended several shows at the Sony Centre, and the hall acoustics create a direct, focused sound with minimal reflections that sounds wonderful. Massey Hall, with all its reflections, sounds ridiculous.

The situation is simply we do not have 1 size fits all.

Different sounds want different reflections.

That is why artificial reverb units have different settings for different music situations.

“What do you mean here?”

If it's in the recording, of course I want to hear it, regardless of room size! I just tried to say that room acoustics should be fairly consistent with the visuals.

/Anders

As an ex recording/mixing engineer, I spent most of my time in control rooms where the early reflections were suppressed and the later reflections were diffused. The primary reason was the Haas effect. One can hear over headphones what this sounds like in this YouTube demonstration.

While the demonstration is meant to be listened to over headphones and is on the mixing side of music production, it does demonstrate how early reflections that are high in level (like specular reflections) and in the 0 to 10 millisecond range can fool your ears from a localization perspective. In other words, strong early reflections mask the localization cues that are on the recording.

One of David Griesinger’s most excellent contributions is the objective measure of Interaural Cross-Correlation Coefficient (IACC). This is the measure of spaciousness of concert halls. The smaller the IACC value, the more spaciousness. An enterprising mathematician, Dr. Uli Brueggemann, uses IACC in the opposite direction, as a measure of channel and room reflection equality for the first 80 milliseconds of sound travel. If interested, check out: Advanced Acourate Digital XO Time Alignment Driver Linearization Walkthrough.
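For anyone who wants to see what the number actually is, here is a bare-bones sketch of an IACC calculation on two ear (or channel) impulse responses. It takes the peak of the normalised cross-correlation within ±1 ms of lag over the first 80 ms. It is pure Python for clarity, not speed, and is certainly not Acourate's implementation; the function name, defaults and test signal are my own.

```python
import math

def iacc(left, right, sample_rate, max_lag_ms=1.0, window_ms=80.0):
    """Peak normalised cross-correlation of two signals within +/- max_lag_ms."""
    n = min(len(left), len(right), int(sample_rate * window_ms / 1000.0))
    left, right = left[:n], right[:n]
    norm = math.sqrt(sum(x * x for x in left) * sum(y * y for y in right))
    if norm == 0.0:
        return 0.0
    max_lag = int(sample_rate * max_lag_ms / 1000.0)
    best = 0.0
    for lag in range(-max_lag, max_lag + 1):
        acc = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                acc += left[i] * right[j]
        best = max(best, abs(acc) / norm)
    return best

# Made-up decaying test signal, 80 ms at 8 kHz.
fs = 8000
sig = [math.sin(0.3 * i) * math.exp(-i / 200.0) for i in range(640)]
delayed = [0.0] * 4 + sig[:-4]  # same signal arriving 0.5 ms later
print(iacc(sig, sig, fs), iacc(sig, delayed, fs))
```

Identical or merely time-shifted signals score close to 1 (little spaciousness in the concert hall sense, good channel matching in the room-correction sense), and the value drops as the two signals become less alike.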

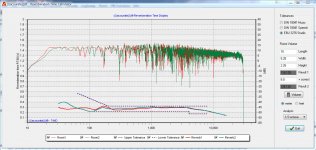

See attached EBU 3276. While this is an old paper, I would say it is still valuable guidance for folks wishing to set up their listening environments for critical listening. There are basic guidelines for stereo setup, early reflections, target frequency response, reverb time, etc. Speaking of RT60, attached is the simulated frequency response and reverberation time of my critical listening environment, as per EBU 3276 guidelines.

Hope that helps and Happy New Year!

A while back I found on the web where someone had done that and posted the results for listening. It did get really bad within a few generations. He was recording the sound of the speakers in a listening room, so it wasn't just the speakers. I haven't heard of anyone doing it with the speaker mounted on an infinite-baffle wall of an anechoic chamber. Then the results could be argued to be just the speaker without any room effects.

I think Tom pointed out some very interesting issues and references. It may be possible to do some analysis and simulation.

Compare diffraction strength and spectrum against direct radiated strength and spectrum. Lower diffraction relative to the direct radiation will yield better performance. This varies with distance. Also, higher directivity will result in lower relative diffraction.

If you prefer to do measurements, you can start with near-field or in-horn measurements, gradually moving the mic out one cm at a time, and see how the response varies until it is within 2 dB of the 1 m measurement. Generally, less difference throughout the process means better performance.
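The mic-walk procedure could be automated along these lines. This is only a sketch under my own assumptions: each measurement is a list of band levels in dB, the overall level is removed by subtracting the mean so only the shape is compared, and the numbers are invented.

```python
def normalise(levels_db):
    """Remove overall level so only the shape of the response is compared."""
    mean = sum(levels_db) / len(levels_db)
    return [x - mean for x in levels_db]

def settle_distance_cm(sweeps, reference_db, tolerance_db=2.0):
    """First mic distance whose response shape is within tolerance of the reference."""
    ref = normalise(reference_db)
    for distance_cm in sorted(sweeps):
        shape = normalise(sweeps[distance_cm])
        if all(abs(a - b) <= tolerance_db for a, b in zip(shape, ref)):
            return distance_cm
    return None

# Hypothetical band levels (dB) at a few mic distances, and at 1 m as the reference.
sweeps = {
    1: [90.0, 96.0, 99.0, 91.0],
    5: [84.0, 87.0, 89.0, 84.5],
    20: [76.0, 77.5, 78.0, 76.5],
}
reference_1m = [70.0, 71.0, 71.5, 70.5]
print(settle_distance_cm(sweeps, reference_1m))
```

With real data you would use many more frequency points and finer distance steps; the earlier the response settles, the better by the criterion above.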

IMO, if speakers are the weak link AND that is not understood, there will be a domino effect, a mess. Why is a speaker distortion test not a standard?

Hey Bob, there was a famous test done in Finland - but I can't remember the name - that did basically that. As I remember, it was just one pass, A/B between the speaker-and-mic signal and the direct signal. Don't know if they ever did multiple generations.

Someone here will remember the name of the test.

Happy New Year 2014!

Hi Pano, It is sometimes called "The Gradient test/experiment". Gradient being the name of a Finnish speaker manufacturer.

In an anechoic chamber we have loudspeakers and a high quality microphone on axis in front of them. Outside of the chamber we have a music source, an amplifier to feed the speaker(s) in the chamber and another to feed external speaker(s).

We have a switch that allows us to listen to the source or the microphone output. Lo and behold, with half decent speakers there is not a lot of difference to be heard.

The conclusion to be drawn is that much of what we hear in a normal room is reflected energy, and if directivity is out of control this experiment reminds us that the consequences can be rather detrimental.

Keith

“Why is a speaker distortion test not a standard?”

Because everyone who has looked at this factor has found that it is not important. It does not correlate with the sound quality that is perceived, so why measure it, let alone standardize on a pointless measurement?

“The conclusion to be drawn is that much of what we hear in a normal room is reflected energy, and if directivity is out of control this experiment reminds us that the consequences can be rather detrimental.”

Another simple test is to have someone walk across the room between you and the speakers while your eyes are closed. It will be hard to tell where they are. If the direct sound were all-important, the walker location would be obvious at all times.
