A wavefront exists in 3D space (4D if you count the progression of the wavefront). A mic captures a 2D point in the wavefront.

This makes little sense. A mic certainly captures SPL vs. time, and there are some pretty good dummy heads out there that capture a reasonable binaural effect. The Smyth Realiser also creates a pretty good illusion.

But the brain provides way more analysis capability than a mic.

dave

That is relevant to what? All the processing in the world can't return lost information; we are back to entropy reversal and extraordinary claims.

... there are some pretty good dummy heads out there that capture a reasonable binaural effect...

Capture, but do not analyze. And the analyzer has ear lobes it has been trained to use. The "ear lobes" on a dummy head will always be a compromise.

dave

Yes, and don't forget that we regularly move our heads to change the interaural level and time differences (ILD and ITD) in order to localize a sound more accurately or to reduce ambiguity, something we can't do with a recording. Look at how animals often tilt their heads to locate their prey.

A wavefront exists in 3D space (4D if you count the progression of the wavefront). A mic captures a 2D point in the wavefront.

Ears have the ability to capture a more complex portion of that wavefront: two ears spaced apart on either side of the head, complex earlobes, and a huge amount of processing power trained to analyze the raw data.

dave

We also have a dynamic feedback mechanism between the brain and the ears that changes the ears' operation on the fly, sensitizing or desensitizing them to certain frequencies, loudness levels, etc. Neurons do more than just relay signals forward into the brain. They also signal back down the line, reaching out to neighboring neurons tuned to nearby frequencies, exciting some and muzzling others.

The wavefront is everywhere; recordings routinely use multiple microphones, sometimes many feet apart. AFAIK you have two ears, probably less than a foot apart (limited extent). As far as I'm concerned, this whole discussion of not being able to sample exactly the wavefront impinging on your ears is a red herring. At some point the audio information is contained in two 2D signals (amplitude vs. time), and the information they can contain is limited by fundamental principles.
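For a sense of scale on the "two ears less than a foot apart" point, and on the head-movement point made earlier in the thread, here is a minimal sketch using Woodworth's spherical-head approximation of the interaural time difference. The head radius (8.75 cm) and speed of sound (343 m/s) are assumed textbook values, not numbers from this thread, and the model ignores the ear lobes entirely.

```python
import math

# Assumed spherical-head parameters (typical textbook values, not from the thread)
HEAD_RADIUS_M = 0.0875   # ~8.75 cm radius, so the ears are ~17.5 cm apart
SPEED_OF_SOUND = 343.0   # m/s in air at roughly 20 degrees C

def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth spherical-head ITD for a distant source in the horizontal plane.

    azimuth_deg: 0 = straight ahead, 90 = right, 180 = directly behind.
    A positive result means the sound reaches the right ear first.
    """
    # Fold the azimuth onto the lateral angle; front/back mirror images
    # (e.g. 30 and 150 degrees) map to the same lateral angle.
    lateral = math.asin(math.sin(math.radians(azimuth_deg)))
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (lateral + math.sin(lateral))

if __name__ == "__main__":
    print(f"max ITD: {itd_seconds(90) * 1e6:.0f} us")
    # Front/back ambiguity: identical ITDs for mirrored source positions.
    print(f"30 deg: {itd_seconds(30) * 1e6:.0f} us, 150 deg: {itd_seconds(150) * 1e6:.0f} us")
    # Turning the head 10 degrees to the right lowers both candidate azimuths
    # by 10 degrees; their ITDs now move in opposite directions.
    print(f"after turn: {itd_seconds(20) * 1e6:.0f} us vs {itd_seconds(140) * 1e6:.0f} us")
```

With these assumptions the largest ITD is roughly 0.66 ms. Sources at 30° in front and 150° behind give identical ITDs (the classic front/back ambiguity), and a small head turn moves the two candidates in opposite directions, which is one way head movement resolves the ambiguity.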

OK, so the phrase "ears have a limited physical extent" is about the interaural distance, which is not the same thing as "only captures a small part of the wavefront".

Microphone configuration setups have no relevance to interaural distance. In fact, what you are saying is that microphones are used in a totally different way from how we would perceive the auditory event if we were present when it was played?

The whole stereo replay is an illusion that works surprisingly well, given the limitations of what is being captured. Knowing something about the workings of auditory perception, though, it really comes down to the capabilities of the auditory processing engine and its ability to resolve signal ambiguity, which is certainly a defining feature of the signals from the stereo replay system.


That has nothing to do with swapping audio electronic equipment causing a sound difference. If anything, changes too small for our ears to detect can still be measured and shown on graphs/charts.

True. But ears are usually a binary pair separated by a head function, with a lifetime of programming to sort out all sorts of details.

Back here;

To quote Floyd Toole:

Two ears and a brain are massively more analytical and adaptable than an omnidirectional microphone and an analyzer.


Yes, time to appeal to authority. This, like many other quotes, is taken completely out of context. Yes, a microphone is not a person listening to a live performance, but any recording is forever limited by the fact that a microphone (or several) was used to capture it. Do you not see the difference?

Please carry on by yourselves; this is a true waste of time.

Yes, a microphone is not a person listening to a live performance, but any recording is forever limited by the fact that a microphone (or several) was used to capture it. Do you not see the difference?

I certainly understand that, but the mic Floyd is talking about is the one you use to measure speakers, usually with a contrived source like a sine sweep, pink noise, etc., so no recording mic is involved.

dave

The subject was brought up by Evenharmonics. He claims we can tell all we need by measuring. I wanted to refute that and show that there is value in this discussion of doing valid listening tests.

dave

I think that is a mischaracterization of what he said, and a not very subtle moving of goal posts.

I think he said that if you can produce sound waves that are perceived to sound different, then the difference can be measured. I believe his point was that we have measuring instruments that have been shown to be orders of magnitude more sensitive than human ears (though only in terms of parameters like amplitude, frequency, distortion, noise, phase, etc., not the important stuff like slam, PRAT, veils, blackness of blacks, microdynamics, etc.).

I think that is a mischaracterization of what he said, and a not very subtle moving of goal posts.

There is also a lot of context from other discussions.

I think he said that if you can produce sound waves that are perceived to sound different, then the difference can be measured.

Yes, exactly. And I am refuting that. At least, not with the current state of measurement (and interpretation).

dave

It's been over a year since you claimed that there are lots of audible sounds that are not measurable. You still haven't presented one example despite being asked to multiple times.

It has been many decades, not a year. I only need to provide one example to prove my point, and I have already done that in this thread.

And another… how about a measure of DDR? Something like the FR of a device 40 dB down (in the presence of a 0 dB signal) would be a good start on that. But also a measure of how much tiny information is lost by a DUT. Certainly something that can be heard: the kind of thing that makes a great product instead of just a good one, exactly what the kinds of tests we are trying to sort out here are for.
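One way to read "the FR of a device 40 dB down in the presence of a 0 dB signal" is as a small-signal gain measurement: play a -40 dB probe tone alongside a 0 dB tone and compare the probe's level at the output with its level at the input. The sketch below assumes a 48 kHz sample rate, a one-second capture, a 500 Hz large tone, and a made-up memoryless DUT (`hypothetical_dut`, a cubic soft compressor); a real test would capture a real device instead. Because this DUT is memoryless, the result is flat across frequency, and the probe frequencies are picked so they do not land on the DUT's own distortion products (e.g. 1500 Hz, the third harmonic of 500 Hz).

```python
import cmath
import math

FS = 48_000  # sample rate in Hz (assumed)
N = FS       # one-second capture: 1 Hz bins, so integer-Hz tones fall exactly on bins

def hypothetical_dut(x: float) -> float:
    """Made-up, memoryless, mildly compressive 'device under test' (cubic term)."""
    return x - 0.05 * x ** 3

def tone_amplitude(samples, freq_hz: int) -> float:
    """Amplitude of the component at freq_hz, via a single-bin DFT."""
    acc = sum(s * cmath.exp(-2j * math.pi * freq_hz * n / FS)
              for n, s in enumerate(samples))
    return 2 * abs(acc) / len(samples)

def probe_gain_db(probe_hz: int, big_hz: int = 500,
                  big_amp: float = 1.0, probe_amp: float = 0.01) -> float:
    """Gain (dB) seen by a -40 dB probe tone while a large tone is also present."""
    x = [big_amp * math.cos(2 * math.pi * big_hz * n / FS)
         + probe_amp * math.cos(2 * math.pi * probe_hz * n / FS)
         for n in range(N)]
    y = [hypothetical_dut(v) for v in x]
    return 20 * math.log10(tone_amplitude(y, probe_hz) / probe_amp)

if __name__ == "__main__":
    # Probe frequencies avoid 500 Hz and its odd harmonics (the DUT's own products).
    for f in (100, 1_000, 10_000, 20_000):
        loaded = probe_gain_db(f)
        alone = probe_gain_db(f, big_amp=0.0)
        print(f"{f:>6} Hz: {loaded:+.3f} dB with 0 dB tone, {alone:+.3f} dB alone")
```

For this made-up DUT the probe loses roughly 0.68 dB when the 0 dB tone is present and essentially nothing when it is absent, so a level-dependent change of that kind shows up directly in the numbers.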

dave

I have explained it many times. I guess you weren’t listening, or missed one of the many times.

DDR is the ability to reproduce very small details (the kind of details that put life into a voice or instrument, or the subtle details that provide for a solid 3D soundstage/image), even in the presence of a full-strength signal.

It was Allen Wright's attempt to put a definition to the flowery words used by audio reviewers, something he could use as a guide to help him make better products. Places to look, things to look for. Goals to achieve a better piece of electronics.

dave


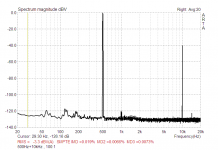

Something like this? A -40 dB 10 kHz tone "floating" on top of a 0 dB 500 Hz signal. Nothing special to observe (hear) or measure. CCIF distortion.

And another… how about a measure of DDR? Something like the FR of a device 40 dB down (in the presence of a 0 dB signal) would be a good start on that. But also a measure of how much tiny information is lost by a DUT. Certainly something that can be heard: the kind of thing that makes a great product instead of just a good one, exactly what the kinds of tests we are trying to sort out here are for.


You can generate whatever arbitrary signal you want: two tones, multitone, square + sine(s)... But there is no special "tiny information" to find.
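In a two-tone test like the one posted above, anything a nonlinear DUT adds lands at predictable frequencies: sums and differences of integer multiples of the two tones. A minimal sketch that lists those product frequencies up to third order (the order limit and the function name are illustrative choices, not from the thread):

```python
def intermod_frequencies(f1: float, f2: float, max_order: int = 3) -> dict:
    """Map each distortion-product frequency |m*f1 +/- n*f2|, 2 <= m + n <= max_order,
    to the lowest order that produces it. Fundamentals (order 1) are excluded."""
    products = {}
    for m in range(max_order + 1):
        for n in range(max_order + 1 - m):
            order = m + n
            if order < 2:
                continue
            for sign in (1, -1):
                f = abs(m * f1 + sign * n * f2)
                if f == 0:
                    continue  # DC term, e.g. when m*f1 == n*f2
                products[f] = min(order, products.get(f, order))
    return dict(sorted(products.items()))

if __name__ == "__main__":
    # Two-tone stimulus from the thread: 0 dB at 500 Hz, -40 dB at 10 kHz.
    for freq, order in intermod_frequencies(500, 10_000).items():
        print(f"{freq:>7} Hz  (order {order})")
```

For 500 Hz and 10 kHz this gives, among others, second-order products at 9.5 and 10.5 kHz and third-order products at 9 and 11 kHz, which are the bins an analyzer would inspect. How large each product is depends entirely on the device.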

And a -40 dB / 500 Hz tone below a 0 dB / 500 Hz tone is nonsense (two signals with the same frequency). It is simple addition or subtraction, depending on the relative phase.
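The "simple addition or subtraction" point can be put in numbers with phasors: a tone 40 dB down has 1% of the amplitude (10^(-40/20) = 0.01), so adding it at the same frequency changes the total level by roughly +0.086 dB in phase and -0.087 dB in antiphase, with intermediate values in between. A minimal sketch (the function name is hypothetical):

```python
import cmath
import math

def combined_level_db(small_db: float, phase_deg: float) -> float:
    """Level change (dB, relative to the large tone alone) after adding a
    same-frequency tone `small_db` below it at relative phase `phase_deg`."""
    a = 10 ** (small_db / 20)  # -40 dB -> 0.01 amplitude ratio
    resultant = abs(1 + a * cmath.exp(1j * math.radians(phase_deg)))
    return 20 * math.log10(resultant)

if __name__ == "__main__":
    for phase in (0, 90, 180):
        print(f"{phase:>3} deg: {combined_level_db(-40, phase):+.4f} dB")
```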


I think Dave is describing more the dynamic modulation of the in-room noise floor, obscuring/masking low-level signals at frequencies near high-level tones. This "DDR", as he calls it, does indeed cause uncertainty in L/R and depth imaging, and affects masking and timbre. The right measurement methods ought to pick up this uncorrelated inter-channel difference information, which causes damage and, with training, is subjectively easy to pick out if one listens for it. Indeed, this is probably the major differentiator between otherwise similar electronics systems and drives longer-term preferences. Most line-level gear/amplifiers measure essentially flat/distortionless/blameless with a stationary signal, BUT this can all change when music/field recording/voice is the signal.

Masking: a subtle signal with a frequency very near a strong signal. It will create a low-level (-40 dB) "beat" at 1 Hz. Impossible to hear due to masking.
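The beat claim can be checked numerically. The sketch below adds a 1000 Hz tone at 0 dB and a 1001 Hz tone at -40 dB (example frequencies assumed here to give the 1 Hz offset mentioned above), estimates the envelope as sqrt(2) times the RMS over windows one 1000 Hz period long, and measures the envelope's swing and period. It says nothing about audibility; that is the masking argument.

```python
import math

FS = 48_000  # sample rate in Hz (assumed)

def beat_envelope(f_strong=1000.0, f_weak=1001.0, weak_db=-40.0, seconds=2.0):
    """Envelope of a strong tone plus a weak tone `weak_db` below it.

    Estimated as sqrt(2) * RMS over consecutive windows one strong-tone period
    long, which is short compared with the beat period.
    Returns (window_centre_times, envelope_values).
    """
    a = 10 ** (weak_db / 20)    # -40 dB -> 0.01 amplitude ratio
    win = round(FS / f_strong)  # samples per window (48 at 1 kHz)
    times, env = [], []
    for start in range(0, int(seconds * FS) - win + 1, win):
        acc = 0.0
        for n in range(start, start + win):
            t = n / FS
            s = math.cos(2 * math.pi * f_strong * t) + a * math.cos(2 * math.pi * f_weak * t)
            acc += s * s
        times.append((start + (win - 1) / 2) / FS)
        env.append(math.sqrt(2 * acc / win))
    return times, env

def beat_stats(times, env):
    """Peak-to-trough envelope swing in dB, and beat period from upward mid-level crossings."""
    hi, lo = max(env), min(env)
    mid = (hi + lo) / 2
    ups = []
    for i in range(1, len(env)):
        if env[i - 1] < mid <= env[i]:
            frac = (mid - env[i - 1]) / (env[i] - env[i - 1])  # linear interpolation
            ups.append(times[i - 1] + frac * (times[i] - times[i - 1]))
    period = ups[1] - ups[0] if len(ups) >= 2 else float("nan")
    return 20 * math.log10(hi / lo), period

if __name__ == "__main__":
    swing_db, period_s = beat_stats(*beat_envelope())
    print(f"envelope swing: {swing_db:.3f} dB peak to trough, beat period: {period_s:.3f} s")
```

With these assumptions the envelope swings by about 0.17 dB peak to trough (about ±0.087 dB) with a period of one second, i.e. a 1 Hz beat.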

Back to the subject of the so-far 'hypothetical' ABX testing protocol: there are many considerations that would serve to drive null results when testing for 'minor' differences.

Included in this would be background (BG) noises like HVAC, and the fact of multiple other subjects in the room, all breathing, with their mere presence altering the sound field. Also, who gets to sit in the optimal spot? The time of day would be another factor: BG acoustic/seismic noise, AC supply noise and general RF noise all change according to the time of day. The listening mode of the subject also varies with the time of day. Ever noticed how late-night listening can reveal system nuances that are not noticed during daytime listening?
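Since the question is what drives null results, it helps to see how the usual one-sided binomial analysis of ABX scores behaves. A minimal sketch, assuming pure guessing is right half the time and a 0.05 significance level (both common conventions, not something specified in this thread): `abx_p_value` gives the chance of scoring at least k out of n by guessing, `min_correct` the pass mark, and `abx_power` the chance that a listener who genuinely hears the difference some fraction of the time actually passes. All three names are hypothetical.

```python
from math import comb

def abx_p_value(correct: int, trials: int) -> float:
    """One-sided chance of getting at least `correct` right out of `trials` by guessing (p = 0.5)."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials

def min_correct(trials: int, alpha: float = 0.05) -> int:
    """Smallest score that counts as a positive result at significance level alpha."""
    for k in range(trials + 1):
        if abx_p_value(k, trials) <= alpha:
            return k
    return trials + 1  # no score can reach alpha with this few trials

def abx_power(trials: int, true_hit_rate: float, alpha: float = 0.05) -> float:
    """Chance that a listener who is right `true_hit_rate` of the time reaches the pass mark."""
    k_min = min_correct(trials, alpha)
    return sum(comb(trials, k) * true_hit_rate ** k * (1 - true_hit_rate) ** (trials - k)
               for k in range(k_min, trials + 1))

if __name__ == "__main__":
    for n in (10, 16, 25, 50):
        print(f"{n:>3} trials: need {min_correct(n)} correct, "
              f"power at 70% hit rate = {abx_power(n, 0.7):.2f}")
```

For example, 12 of 16 correct has a guessing probability of 2517/65536, about 0.038. With only 16 trials, a listener who is right 70% of the time reaches that pass mark less than half the time, which is one statistical route to a null result independent of room noise or seating.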

Anybody care to detail their experiences of formal ABX testing?

Dan.
