Will it now? Illegal in what way? Who wrote the law?

It's looking like you're taking the word 'illegal' too literally here. Step back for a moment.

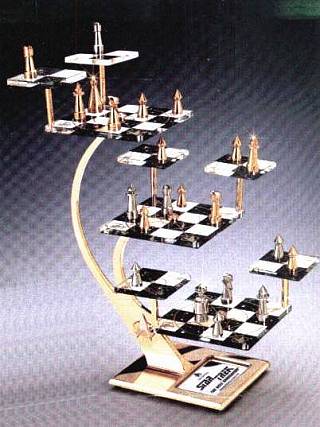

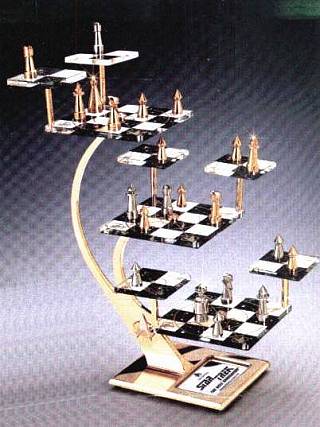

Think of a chessboard. There are some positions of pieces on it which are illegal in the sense that they could never have occurred during normal play. The pieces can of course be moved there by hand without getting anyone arrested - after all it's your chessboard and you're free to do this in the privacy of your own home. They are nevertheless positions of pieces created by means of violations of the rules of chess and in that sense they're illegal positions.

Digital Recording

Great discussion, but there is still something that irks me about the digital recording discussion, not that I don't love listening to most of the 1000+ CDs I own (and many I have made in 20 years as a replicator).

Assume that a 16 bit system with 1-lsb dither has 90dB s/n (depending on dither, maybe another 10-20 dB of monotonic dynamic range), or 84dB with 2-lsb dither. This dither only "linearizes" the lowest bits, it has no effect on the resolution of the upper 14 bits.

Assume that reference "0"dB record level is 18dB below digital clipping (16dB is more traditional, but bear with me).

You are recording music that has a few peaks reaching real digital clipping, which establishes your overall record gain structure.

In this scenario let's say the RMS record level is 6dB below "0".

In this common scenario almost all of the music (except for a few peaks in the recording) is being encoded by a digital recording system which has maybe 13 bits of resolution. Much of the music (assuming you are not listening to Death Metal) utilizes even fewer bits.

This demonstrates that the resolution and distortion are dynamic with signal level in a digital system, and even a 16 bit system usually only employs 13 or fewer bits for a majority of the music. I am sure that at least part of this increase in distortion is masked by the fact that it occurs at the lower levels.
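For anyone who wants to check the arithmetic in this scenario, here is a minimal sketch. It assumes the usual rule of thumb of about 6.02 dB per bit and simply counts how many bits are exercised at a given level; the function name is made up for illustration. Whether "bits exercised" is the same thing as resolution is exactly what the replies dispute, so treat this as bookkeeping only.

```python
import math

DB_PER_BIT = 20 * math.log10(2)  # about 6.02 dB per bit (rule of thumb)


def bits_in_use(level_dbfs, word_length=16):
    """Bits exercised by a signal peaking at level_dbfs (0 or negative)."""
    return word_length - abs(level_dbfs) / DB_PER_BIT


reference_dbfs = -18            # "0" dB reference, 18 dB below clipping (from the post)
rms_dbfs = reference_dbfs - 6   # RMS level 6 dB below the reference (from the post)

print(f"RMS level: {rms_dbfs} dBFS")
print(f"Bits exercised at that level: {bits_in_use(rms_dbfs):.1f}")
```

With these figures the RMS level sits at -24 dBFS, which works out to roughly 12 bits, in the same ballpark as the "maybe 13 bits" quoted above.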

This is entirely different from an analog system where the resolution can be almost infinite (no discussions of atomic quanta here please) no matter what the level is, down to the noise floor. In an analog system the distortion can be constant down to the point where it is buried in the noise floor.

I am not necessarily advocating analog recording over digital, I am just hypothesizing that this dynamic distortion characteristic may be one source of audible differences between digital and analog recordings. Add this to the narrow-band distortion peaks at many sub-multiples of the sampling frequency (that the JAES recommends avoiding with test discs) and there are several mechanisms to which a different sound to digital recording could be attributed.

Am I way off base here?

Once again just my $0.02 worth. I appreciate the information from you folks more practiced in the art of digital system design.

Howie

Howard Hoyt

CE - WXYC-FM

UNC Chapel Hill, NC

www.wxyc.org

1st on the internet

You are recording music that has a few peaks reaching real digital clipping, which establishes your overall record gain structure.

In this scenario let's say the RMS record level is 6dB below "0".

In this common scenario almost all of the music (except for a few peaks in the recording) is being encoded by a digital recording system which has maybe 13 bits of resolution. Much of the music (assuming you are not listening to Death Metal) utilizes even fewer bits.

This demonstrates that the resolution and distortion are dynamic with signal level in a digital system, and even a 16 bit system usually only employs 13 or fewer bits for a majority of the music. I am sure that at least part of this increase in distortion is masked by the fact that it occurs at the lower levels.

Exactly, Howard. That's how it works with CD.

And we all know that 24 bits sound much better. Of course, for those who listen mostly to over-compressed consumer music, resolution is not important.

Hi,

It's looking like you're taking the word 'illegal' too literally here. Step back for a moment.

I was being ironic...

Think of a chessboard. There are some positions of pieces on it which are illegal in the sense that they could never have occurred during normal play. The pieces can of course be moved there by hand without getting anyone arrested - after all it's your chessboard and you're free to do this in the privacy of your own home. They are nevertheless positions of pieces created by means of violations of the rules of chess and in that sense they're illegal positions.

This is actually a good analogy. The rules of chess are arbitrary and have no pressing need rooted in the physical world to be what they are. They are merely conventions.

The same holds true of all this digital audio sampling stuff. We are dealing with conventions, mostly built on mis-interpretation of pure math and wrong application of said pure math to a practical problem.

Hence these conventions are subject to challenge and revision and there is absolutely no need to obey them if one concludes empirically that to "violate gruesomely" the sampling theory sounds better.

It also suggests then that the sampling theory does not account correctly for how humans hear, as mathematically it is basically sound (if incorrectly applied), but that as they say is a whole other kettle of fish.

Ciao T

This is actually a good analogy.

Ah now you're being ironic again by misinterpreting my analogy. All analogies can be pressed too far...

The rules of chess are arbitrary and have no pressing need rooted in the physical world to be what they are. They are merely conventions.

They're not arbitrary if what you want to play is chess. Of course they can be changed but then it's no longer chess.

The same holds true of all this digital audio sampling stuff. We are dealing with conventions, mostly built on mis-interpretation of pure math and wrong application of said pure math to a practical problem.

So far it's been claims of such. But math is math because it's not just someone's opinion. I find claims of mis-interpretation and mis-application to be totally unconvincing - what will convert me though are demonstrations of the errors.

Hence these conventions are subject to challenge and revision and there is absolutely no need to obey them if one concludes empirically that to "violate gruesomely" the sampling theory sounds better.

Yep, agreed.

It also suggests then that the sampling theory does not account correctly for how humans hear, as mathematically it is basically sound (if incorrectly applied), but that as they say is a whole other kettle of fish.

So do please explain how (rather than merely claim that) it's been incorrectly applied. That would be appreciated.

Right on, HHoyt. That is the crux of the matter. It just gets better with analog, and it has fewer bits to work with and therefore more 'inaccuracy' as we reduce in level. All the background information that makes the sound 'real' is made fuzzy or worse. Just as I pointed out, over 30 years ago.

Howard

are you ignoring the Shannon-Hartley theorem (Wikipedia)? Analog signal channels definitely aren't "infinite" resolution; bandwidth and noise are always limiting
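To put a number on that, here is a small sketch of the Shannon-Hartley capacity formula, C = B * log2(1 + S/N). The 20 kHz bandwidth and 70 dB S/N for the analog channel are illustrative assumptions and not figures from this thread; the CD figure is just the raw bit rate of one 16-bit, 44.1 kHz channel.

```python
import math


def capacity_bits_per_second(bandwidth_hz, snr_db):
    """Shannon-Hartley capacity C = B * log2(1 + S/N) for a noisy channel."""
    snr_linear = 10 ** (snr_db / 10)   # convert dB power ratio to linear
    return bandwidth_hz * math.log2(1 + snr_linear)


# Assumed, illustrative analog channel: 20 kHz bandwidth, 70 dB S/N.
analog_capacity = capacity_bits_per_second(20_000, 70)

# Raw bit rate of one 16-bit / 44.1 kHz channel, for comparison.
cd_channel_rate = 16 * 44_100

print(f"Analog channel capacity: {analog_capacity / 1000:.0f} kbit/s")
print(f"CD channel raw rate:     {cd_channel_rate / 1000:.1f} kbit/s")
```

Whatever numbers you plug in, a finite bandwidth and a finite S/N always give a finite capacity, which is the point being made.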

and TPDF does linearize digital response below the quantization level - at the cost of (very little) added white noise whose RMS value is independent of the signal - no pumping/breathing of the noise floor like you get with Dolby

you can listen to exaggerated dither examples with 8, 12 bit requantized music clips and a variety of dithers at Homepage of Alexey Lukin

people also sometimes don't appreciate noise shaping - it's not just filtered dither - the quantization and dither noise are both shaped, so there can be a genuinely lower noise floor in our hearing's most sensitive frequency region
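The "RMS value is independent of the signal" claim for TPDF dither can be checked with a quick simulation. This is a minimal sketch, assuming a plain round-to-nearest quantizer with a 1 LSB step, TPDF dither of 2 LSB peak to peak and, for contrast, rectangular (RPDF) dither of 1 LSB peak to peak. The function name and test levels are made up for illustration.

```python
import math
import random


def noise_power(dc_level, dither, n=50_000, seed=1):
    """Mean squared error (in LSB^2) of a dithered rounding quantizer at a DC input."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        if dither == "tpdf":      # triangular PDF, 2 LSB peak to peak
            d = (rng.random() - 0.5) + (rng.random() - 0.5)
        elif dither == "rpdf":    # rectangular PDF, 1 LSB peak to peak
            d = rng.random() - 0.5
        else:                     # no dither
            d = 0.0
        q = math.floor(dc_level + d + 0.5)   # round to the nearest LSB
        total += (q - dc_level) ** 2
    return total / n


for level in (0.0, 0.25, 0.5):
    rpdf = noise_power(level, "rpdf")
    tpdf = noise_power(level, "tpdf")
    print(f"input {level:4.2f} LSB  RPDF noise {rpdf:.3f}  TPDF noise {tpdf:.3f}")
```

In this model the RPDF noise power swings with the input level (that is noise modulation), while the TPDF noise power stays near 0.25 LSB^2 wherever the input sits.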

So, essentially your software upsamples to 147 times of the source 192KHz rate and then downsamples by 640 times to get to 44.1KHz? I mean it REALLY does that?

Yes, it REALLY does this. You'd be surprised what computers and DSP processors can do these days!
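For reference, the 147 and 640 figures are just the 44.1 kHz to 192 kHz rate ratio reduced to lowest terms. A small sketch (the function name is made up for illustration, and this says nothing about how any particular converter is implemented internally):

```python
from fractions import Fraction


def resampling_ratio(source_rate_hz, target_rate_hz):
    """Return (up, down) in lowest terms so that source * up / down == target."""
    ratio = Fraction(target_rate_hz, source_rate_hz)
    return ratio.numerator, ratio.denominator


up, down = resampling_ratio(192_000, 44_100)
intermediate_rate_hz = 192_000 * up   # rate after the conceptual upsampling step

print(up, down, intermediate_rate_hz)  # 147 640 28224000
```

So the conceptual intermediate rate is 28.224 MHz. A practical converter would normally compute only the output samples it needs rather than build that stream in full.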

And what if your source sample rate has a 50ppm Error?

Your question is an example of plurium interrogationum.

What if the motor in your tape deck or cutting lathe has a poorly-oiled bearing that causes a periodic aberration in the speed?

Source errors are generally uncorrectable, unless you can predict them and completely correct them later. If you make a bad recording, it continues to be a bad recording whether you listen to it at the original sample rate or use SRC to listen at some other rate.

EDIT: When I apply SRC, I am usually converting from one file to another, and thus there is no reclocking of the source. The clock is inherent in the word boundaries of the data storage.

I know nothing of the software you use (as you have omitted to mention it), but I have not come across the kind of solution you suggest in a situation where "live" signals are processed.

CoreAudio is shipped for free with Mac OS X. It has various quality levels, mostly controlling the quality of the anti-aliasing filter, but the technique I describe is possible in real time on computer systems that are now decades old.

Traditionally decimation includes lowpass filtering and processing, what I propose does not and I am unaware of a correct term for it. Maybe "direct decimation" then?

The correct term would be "aliasing" ... with a helping of "correlated quantization noise."
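To illustrate why dropping samples without a lowpass filter earns the name "aliasing", here is a minimal sketch. The rates and the 30 kHz tone are assumptions chosen for illustration (keeping every 4th sample of a 176.4 kHz stream to land on 44.1 kHz); they are not taken from the proposal itself.

```python
import math


def alias_frequency(tone_hz, sample_rate_hz):
    """Frequency a tone appears at after sampling at sample_rate_hz with no filtering."""
    return abs(tone_hz - sample_rate_hz * round(tone_hz / sample_rate_hz))


FS = 44_100     # assumed output rate after keeping every 4th sample of 176.4 kHz
TONE = 30_000   # assumed ultrasonic content present in the high-rate source

folded = alias_frequency(TONE, FS)

# After decimation the samples of the 30 kHz tone are identical to those of a
# tone at the folded frequency, so nothing downstream can tell them apart.
original = [math.cos(2 * math.pi * TONE * n / FS) for n in range(64)]
alias = [math.cos(2 * math.pi * folded * n / FS) for n in range(64)]
max_difference = max(abs(a - b) for a, b in zip(original, alias))

print(f"{TONE} Hz folds down to {folded} Hz (max sample difference {max_difference:.1e})")
```

Under these assumptions the 30 kHz content lands at 14.1 kHz, squarely in the audio band.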

Assume that a 16 bit system with 1-lsb dither has 90dB s/n (depending on dither, maybe another 10-20 dB of monotonic dynamic range), or 84dB with 2-lsb dither. [...]

Assume that reference "0"dB record level is 18dB below digital clipping (16dB is more traditional, but bear with me).

You are recording music that has a few peaks reaching real digital clipping, which establishes your overall record gain structure.

In this scenario let's say the RMS record level is 6dB below "0".

In this common scenario almost all of the music (except for a few peaks in the recording) is being encoded by a digital recording system which has maybe 13 bits of resolution. Much of the music (assuming you are not listening to Death Metal) utilizes even fewer bits.

This demonstrates that the resolution and distortion are dynamic with signal level in a digital system, and even a 16 bit system usually only employs 13 or fewer bits for a majority of the music. I am sure that at least part of this increase in distortion is masked by the fact that it occurs at the lower levels.

This is entirely different from an analog system where the resolution can be almost infinite (no discussions of atomic quanta here please) no matter what the level is, down to the noise floor. In an analog system the distortion can be constant down to the point where it is buried in the noise floor.

[...] I am just hypothesizing that this dynamic distortion characteristic may be one source of audible differences between digital and analog recordings. [...]

Am I way off base here?

Yes: despite the apparent popularity of your brief essay, you are way off base here.

A 16-bit system may have 90 dB S/N, but your mistake is to assume that nothing exists below the quantization noise level (or the LSB) just because it is a digital system. Without dither, small signal amplitudes below the LSB do indeed disappear from the recording, but proper dither preserves them. That's why I said that dither (of one kind or another) is an absolute necessity when quantizing a signal.
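A quick way to see this is to quantize a sine whose amplitude is smaller than half an LSB, with and without dither, and then look for it in the output. This is a minimal sketch under stated assumptions: a round-to-nearest quantizer with a 1 LSB step, TPDF dither of 2 LSB peak to peak, and a 0.4 LSB test tone. The function name and parameters are made up for illustration.

```python
import math
import random


def recovered_amplitude(amplitude_lsb, use_dither, n=48_000, cycles=1_000, seed=7):
    """Quantize a sine to whole LSBs and estimate its amplitude by correlation."""
    rng = random.Random(seed)
    acc = 0.0
    for i in range(n):
        ref = math.sin(2 * math.pi * cycles * i / n)
        x = amplitude_lsb * ref
        # TPDF dither: sum of two uniform values, 2 LSB peak to peak.
        d = (rng.random() - 0.5) + (rng.random() - 0.5) if use_dither else 0.0
        q = math.floor(x + d + 0.5)   # round to the nearest LSB
        acc += q * ref                # correlate the output with the test tone
    return 2 * acc / n


print("without dither:", recovered_amplitude(0.4, use_dither=False))
print("with TPDF dither:", round(recovered_amplitude(0.4, use_dither=True), 3))
```

Without dither every sample rounds to zero and the tone is simply gone. With dither the correlation comes back close to the original 0.4 LSB, buried in noise but still there.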

When you give specific examples of recording at peak levels below the maximum 0 dBFS, it's really not substantively different from recording on an analog system at levels below the maximum. In either case, your desired audio gets closer to the noise floor as you reduce the recording level, or as the performance reduces in loudness.

You are partially correct in recognizing the dynamic ratio between the recorded signal, which varies in level, versus the noise floor, which is basically constant. But this is not unique to digital. The exact same thing is true of analog recording. The biggest difference between analog and digital is that the margin between not-so-loud recordings and the noise floor is much, much narrower with analog recordings.

Another point is that we should be very careful about interchanging the terms 'noise' and 'distortion.' In many senses of the terms, they're the same thing. You can choose to consider harmonic distortion as 'noise' if you wish, and you wouldn't be entirely wrong. You can also choose to consider the noise floor as 'distortion,' and that would not be totally wrong, either. However, to the best of our knowledge of the human hearing system, dither noise is indistinguishable from tape noise or subtle surface noise on records (although the latter can become more distinctive and recognizable).

Anyway, analog systems are by no means infinite in resolution. Certainly not any more than digital. It has been well established that 24-bit recordings can be converted to CD and retain 'resolution' below 16-bit quantization levels that can be heard on playback by 16-bit systems. It seems that you're willing to credit 16-bit digital with another 10 to 20 dB of range below the bit depth, but why are you willing to credit analog with more than the same 10 to 20 dB? Properly handled, the digital systems can maintain "almost infinite" resolving power below the bit depth. As someone else astutely pointed out: This is more for AC signals, not for DC signals, but since the human hearing system perceives AC signals we're not really concerned with DC errors. If you're going to be realistic and draw a limit to what digital can resolve, you have to be fair and be equally realistic about analog, especially given the higher noise levels with common analog media.

So, what it all boils down to is that you choose to ignore the ability of digital to resolve signals below the bit depth, and thus analog appears superior in your mind's eye. Once you've seen and heard evidence that digital can preserve signals below the quantization level, then you realize that analog has no advantage there. I suppose it's not too surprising, though, because with certain people on this site proposing that we skip dither, such a system certainly suffers a complete loss of signal below the quantization level.

Finally, others have pointed out that the best A/D cannot do any better than the best analog stage. Where digital excels is by avoiding generational losses. Analog stages pile noise upon noise. Digital stages generally do not create any more noise (there are certainly plenty of exceptions, though), such that what you hear is equivalent to the studio, rather than hearing the effects of potentially hundreds of analog stages with traditional analog delivery methods.
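As a rough illustration of "noise upon noise", here is a sketch using a deliberately simple model: every analog stage has unity gain and adds the same amount of uncorrelated noise, so the noise powers add. The 90 dB per-stage figure and the stage counts are assumptions for illustration only.

```python
import math


def cascaded_snr_db(stage_snr_db, stages):
    """S/N after `stages` unity-gain stages that each add equal, uncorrelated noise."""
    # Uncorrelated noise powers add, so the total noise power grows by a factor
    # of `stages`, which costs 10 * log10(stages) dB of S/N.
    return stage_snr_db - 10 * math.log10(stages)


for count in (1, 10, 100):
    print(f"{count:3d} stages at 90 dB each -> {cascaded_snr_db(90, count):.0f} dB overall")
```

In this model a hundred such stages cost 20 dB, whereas a bit-exact digital transfer adds nothing.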

you can listen to exaggerated dither examples with 8, 12 bit requantized music clips and a variety of dithers at Homepage of Alexey Lukin

people also sometimes don't appreciate noise shaping - it's not just filtered dither - the quantization and dither noise are both shaped, so there can be a genuinely lower noise floor in our hearing's most sensitive frequency region

People do appreciate it - without dither and noise shaping it would be completely unlistenable.

BUT - in assessing audio you always need a COMPARISON. The ear is excellent only as a relative "measuring instrument". So you would need a hi-resolution recording and reproduction of the same to confirm that lo-res sounds "good". My recommendation is the "Resolution project".

The Resolution Project

Hi,

They're not arbitrary if what you want to play is chess. Of course they can be changed but then it's no longer chess.

It may end up being a more advanced version of chess, like this...

So far it's been claims of such. But math is math because it's not just someone's opinion.

Correct.

And much of the math quoted in the various theories on sampling audio data has limitations to its validity which are not fulfilled by Audio Signals.

I find claims of mis-interpretation and mis-application to be totally unconvincing - what will convert me though are demonstrations of the errors.

I find a lack of understanding of fairly basic mathematical concepts totally unconvincing, plus, I do not give free remedials.

So do please explain how (rather than merely claim that) it's been incorrectly applied. That would be appreciated.

You are familiar with the limitations implied in Shannon/Nyquist, so why do you ask?

Ciao T

Hi,

Yes, it REALLY does this. You'd be surprised what computers and DSP processors can do these days!

I do not need to be convinced that they CAN do this, but that it actually is done this precise way.

Your question is an example of plurium interrogationum.

Not at all, it is an example of the real world problems ASRC was designed to handle.

EDIT: When I apply SRC, I am usually converting from one file to another, and thus there is no reclocking of the source. The clock is inherent in the word boundaries of the data storage.

From file to file this is of course doable, so the conversion is off-line.

CoreAudio is shipped for free with Mac OS X.

As far as I can tell the (A)SRC in CoreAudio does not operate the way you claim, however I have not particularly studied it. Do you have references that demonstrate that the SRC code in OSX operates this way and no other (more CPU cycle efficient) way?

the technique I describe is possible in real time on computer systems that are now decades old.

I was not enquiring if this technology is possible (I know it is possible) but which precise software implements it.

The correct term would be "aliasing" ... with a helping of "correlated quantization noise."

Maybe. BUT, I ask again, have you tried it?

Ciao T

Hi,

Assume that a 16 bit system with 1-lsb dither has 90dB s/n (depending on dither, maybe another 10-20 dB of monotonic dynamic range), or 84dB with 2-lsb dither. This dither only "linearizes" the lowest bits, it has no effect on the resolution of the upper 14 bits.

Assume that reference "0"dB record level is 18dB below digital clipping (16dB is more traditional, but bear with me).

To give you a few more datapoints, a few times I actually took an SPL meter to the concert halls in London, to get a handle on SPLs. I typically like seats centred and in the frontish rows (maybe 4-6).

Big orchestras going at ffff on the finale of a piece can hit SPLs in the lower 80s (that is averaged) with high confidence. Examining uncompressed recordings, I find between 10-20dB crest factor, with the crest factor at the crescendo generally lower than when levels are low.

I also found the lowest level from the Orchestra at pppp in the mid 40dB region, though I have a little less confidence in this measurement. Hall noise with an audience in RFH in London (which sits on isolators) was nearly as much.

So I would place the highest peaks from such an orchestra in the 100dB-ish region in terms of SPL at a fairly cheap seat (mid hall and back of hall cost more and are less loud).

If we scale this correctly to CD, so that the highest peaks align with 0dBFS (which is why we need MORE than 16 Bit in the actual initial recording) on the CD, the lowest musical parts will be in the -50 to -60dB region, HOWEVER, reverb tails and general ambience of the hall etc. will be at much lower levels.
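The scaling described above is simple enough to sketch. The specific values below are picked from within the ranges quoted in the post (an average in the "lower 80s", a crest factor between 10 and 20 dB, pppp in the "mid 40dB region"); the exact numbers and names are assumptions for illustration.

```python
def spl_to_dbfs(spl_db, peak_spl_db):
    """Level in dBFS when the loudest peak is aligned with 0 dBFS."""
    return spl_db - peak_spl_db


AVERAGE_SPL = 82     # averaged ffff level, assumed within "lower 80s"
CREST_FACTOR = 18    # assumed within the quoted 10-20 dB range
QUIETEST_SPL = 45    # pppp passages, assumed within "mid 40dB region"

peak_spl = AVERAGE_SPL + CREST_FACTOR   # lands at the "100dB-ish" peak figure

print(peak_spl)                              # 100
print(spl_to_dbfs(QUIETEST_SPL, peak_spl))   # -55
```

That puts the quietest musical passages around -55 dBFS, inside the -50 to -60dB region mentioned, with reverb tails and hall ambience lower still.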

They do add to the illusion of reality where they are recorded well (like Decca releases).

So yes, we only have maybe 13 Bit at the lower levels, which is why it is critical to get the levels right...

Ciao T

Hi,

Done it, can't hear any difference with my 61 year old ears. In fact the tape hiss coming and going between cuts is very obvious so the dither could be less important.

So you mean to say that down-converting one time using the cheesehead approved mathematically correct method and the second time a method that crudely and rudely violated the virginal and perfect mathematical equations and they sounded the same!? Quel dommage!

BTW the designer of the ESS DAC is an old friend,

You mean Dustin?

they actually built the whole thing on a giant FPGA and tweaked by listening. Some of the tweaks were not the mathematically correct thing to do.

Not surprised at all...

Ciao T

Not at all, it is an example of the real world problems ASRC was designed to handle.

I challenge the assertion that ASRC actually does correct jitter in the kind of example that you gave. I do not doubt that people offer ASRC chips which claim to correct jitter this way, but I happen to believe that it would cause distortion rather than correct it. But perhaps there is something about these ASRC chips that I do not understand.

From file to file this is of course doable, so the conversion is off-line.

You'll note that I said 'typically.' It is also an available real-time option, from file to audio interface. Since CoreAudio implements a pull model, the DAC is in control of the clock, and the SRC is carried out in a fully synchronous manner, reading from the file in advance, as needed.

As far as I can tell the (A)SRC in CoreAudio does not operate the way you claim, however I have not particularly studied it. Do you have references that demonstrate that the SRC code in OSX operates this way and no other (more CPU cycle efficient) way?

Actually, the feature (problem?) of CoreAudio is that it offers many levels of SRC. Thus, the CPU cycle efficient way is implemented as well as the ideal way. The choice is selected by parameters to the SRC unit. When you crank all quality parameters to the maximum, you get the SRC that I described. See SRC Comparisons for examples, although that site only shows downsampling, not upsampling.

I was not enquiring if this technology is possible (I know it is possible) but which precise software implements it.

There's the rub. iTunes most certainly does not implement this. iTunes uses a CPU cycle efficient method that is probably satisfactory for the average customer. My own software implements the maximum quality, but it is rather difficult to know which software uses the ultimate settings unless they expose the settings in the user preferences and thoroughly document what's what.

Maybe. BUT, I ask again, have you tried it?

I have certainly listened to improperly downsampled audio in the past. Many examples. In fact, it's rather common in the audio plugin world to implement this as a sort of ring modulation or heterodyning distortion for effect. These effects operate in real time and allow the creative type the option of dialing in a specific amount of aliasing and/or quantization (truncation or otherwise).

However, I do plan on sampling some vinyl and precisely following the steps that you suggested. It should be entertaining.

Hi,

I challenge the assertion that ASRC actually does correct jitter in the kind of example that you gave.

I did not give any example of an ASRC correcting jitter. In fact neither concept of jitter nor the word was even remotely touched upon.

However, I do plan on sampling some vinyl and precisely following the steps that you suggested. It should be entertaining.

You may indeed find so.

It may be even more fun to post the files for people to listen to "blind" as well as the original file and the necessary programmes to arrive at the final files (as control)...

Ciao T

Am I way off base here?

I think you are. Again, it's the mixing of signal to noise with resolution. You can express both of those in bits (or dB for that matter), but you can't interchange bits in the calculations.

edit: I see rsdio said more or less the same thing.

I did not give any example of an ASRC correcting jitter. In fact neither concept of jitter nor the word was even remotely touched upon.

What other reason is there for those ASRC hardware chips to exist?

It may be even more fun to post the files for people to listen to "blind" as well as the original file and the necessary programmes to arrive at the final files (as control)...

Interesting idea, but perhaps a waste of time. If I do end up having the time to waste, the unfortunate fact is that the programs would be specific to OSX.
