Something new!

Today I have some time to write something down and share it with you: some notes on the ARTA software, plus something new.

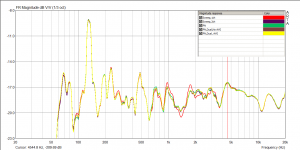

Here is a comparison of the spectra of three pink noise signals: PN pink and pink noise (both exported from ARTA) and SmaartLive's pink noise. The differences are small and subtle, except for the ARTA pink noise. So for single-channel testing I will opt for PN pink or Smaart's pink noise, but in dual-channel mode any of them is OK.

For a comparison of the two pink signals in a transfer function (TF) measurement of a 5” studio monitor, see the picture.

As you can see, they are essentially the same, with no or very little difference (perhaps at LF, or due to constraints of the test environment; for a better result, use more averages to stabilize the data).

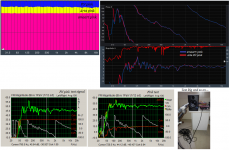

I am concentrating on the coherence rather than on the above. The coherence curves of both are also the same (the image shows only the pink noise one). Please keep in mind the low coherence around 500 Hz!

Next I show the identical test in ARTA, as an FR2 mode measurement, for comparison.

The first was tested with PN pink, the second with pink noise; the test conditions are identical. See the difference?

Coherence is very important to us when making corrective EQ decisions in pro audio applications. So which noise should be used? The low coherence values in the pink noise test give us a good indication of which data to trust. But in the ARTA operating manual, Ivo says that when pink noise is the excitation source, possibly with low coherence somewhere, it is possible to delay the acquisition of the input channel to compensate for its time difference. PN pink can eliminate this problem even in reverberant locations that cause bad correlation!

Yes, my experiment above shows and verifies this. But I think PN pink can “obscure” the coherence curve and create some ambiguity because of its flat coherence response. So in this situation I choose pink noise and test under ground plane conditions, which provide a “free field” measurement, and I watch for coherence very close to 1; any low value in the coherence response then indicates that something is wrong with the box!
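The idea of trusting only the high-coherence parts of a measurement before deciding on EQ can be sketched roughly as below. This is only an illustration: the function name `trusted_points`, the 0.9 threshold and the data are all made up for the example and are not taken from ARTA or Smaart.

```python
def trusted_points(freqs_hz, magnitude_db, coherence, threshold=0.9):
    """Keep only the (frequency, magnitude) points whose coherence meets the threshold."""
    return [
        (f, m)
        for f, m, c in zip(freqs_hz, magnitude_db, coherence)
        if c >= threshold
    ]


# Made-up example data: the 500 Hz point has low coherence and is dropped,
# so it would not be used as a basis for corrective EQ.
freqs = [250.0, 500.0, 1000.0, 2000.0]
mags = [-1.0, -6.0, 0.5, 0.0]
coh = [0.98, 0.45, 0.97, 0.99]

usable = trusted_points(freqs, mags, coh)
print(usable)
```

The threshold is a judgment call; a stricter value discards more data but leaves only the points that are best correlated with the excitation.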

This is just my thinking and it may be wrong somewhere, so any comments are welcome!

Sorry, something should be clarified: the experiment above used the "Uniform" window. But when I tried PN pink with the Hanning window, I got the same coherence as pink noise with either "Uniform" or "Hanning". For dual-channel measurements the Hanning window is recommended for its superior treatment of random signals. In ARTA, the window option in question is the "Signal" window (used only with continuous noise or an external excitation). So in FR2 mode, for real-time TF testing, only pink noise is suitable. If you do use PN pink in FR2, use the Hanning window, which gives the closest coherence result.

I strongly recommend that the default window functions be appropriately pre-selected by the manufacturer of the analyzer rather than left to the user, if possible.

Best Regards

Major Wong

Don't be afraid of using PN Pink.

What is important is to turn on Frequency domain averaging. In that case PN pink gives a real coherence, which will usually be better than when using continuous pink noise. Why?

PN pink is a periodic signal, so we always work with the maximum possible correlation between the system input and output signals. That is not the case with continuous noise, where the I/O delay reduces that correlation. By entering compensation for the I/O delay, the coherence will be better and close to that of a periodic noise measurement.
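The effect of I/O delay on coherence can be reproduced with a small simulation. This is only a sketch under stated assumptions, not ARTA's implementation: white noise stands in for pink noise, the "system" is a pure delay plus a little independent noise, and the block size, delay and noise level are arbitrary illustrative values.

```python
import numpy as np


def coherence(x, y, nfft, window):
    """Magnitude-squared coherence from frequency-domain averages over blocks."""
    nblocks = len(x) // nfft
    xb = x[: nblocks * nfft].reshape(nblocks, nfft) * window
    yb = y[: nblocks * nfft].reshape(nblocks, nfft) * window
    X = np.fft.rfft(xb, axis=1)
    Y = np.fft.rfft(yb, axis=1)
    sxx = np.mean(np.abs(X) ** 2, axis=0)
    syy = np.mean(np.abs(Y) ** 2, axis=0)
    sxy = np.mean(np.conj(X) * Y, axis=0)
    return np.abs(sxy) ** 2 / (sxx * syy)


rng = np.random.default_rng(0)
nfft, nblocks, delay = 1024, 64, 256  # assumed sizes; delay is in samples
total = nfft * nblocks
noise_level = 0.1  # assumed level of independent measurement noise

# Continuous noise: the output is the input delayed by `delay` samples, plus noise.
source = rng.standard_normal(total + delay)
x_cont = source[delay:]
y_cont = source[:total] + noise_level * rng.standard_normal(total)

# Periodic noise: one block repeated, so the delay becomes a circular shift.
x_per = np.tile(rng.standard_normal(nfft), nblocks)
y_per = np.roll(x_per, delay) + noise_level * rng.standard_normal(total)

hann = np.hanning(nfft)
uniform = np.ones(nfft)

coh_cont = coherence(x_cont, y_cont, nfft, hann).mean()
coh_per = coherence(x_per, y_per, nfft, uniform).mean()
# Delay compensation: shift the output back by `delay` samples before analysis.
coh_comp = coherence(x_cont[:-delay], y_cont[delay:], nfft, hann).mean()

print(f"continuous noise, no compensation:   {coh_cont:.2f}")
print(f"periodic noise, uniform window:      {coh_per:.2f}")
print(f"continuous noise, delay compensated: {coh_comp:.2f}")
```

In this toy setup the uncompensated continuous-noise coherence comes out clearly lower than the other two, because part of each output block belongs to input samples that fall outside the matching input block.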

In the next ARTA version I will implement slightly better pink noise generation, but it will not change this discussion.

When using continuous noise it is best to use the Hanning window.

You must be careful if you measure the response in time-variant environments. Outdoor wind or indoor air conditioning can reduce correlation in the measurement. In that case it could be better to use a swept sine excitation signal, which is slightly more immune to such effects.

Best,

Ivo

In FR2 mode you can't choose the window for periodic noise; it is fixed as Uniform.

Ivo

Thanks for your kind response. I deleted my message just now, I am so sorry. Anyway, this is a good point! I think the signal window should be pre-selected by ARTA so that it can't be altered by hand.

Best Regards

Major Wong

The test above was indeed performed the way you described. The coherence always ends up as shown in the figure; the issue is still the selected window.

In my opinion, the coherence must be 1 to obtain the most reliable result. I have often tested speakers that have similar magnitude responses but differ in coherence (at various on-axis test distances).

By the way, when I measure the IR and transform it to FR, I check the ETC curve and examine whether the reflections influence the magnitude or not. But that is a more demanding test than FR2 mode.

Best,

Interesting papers about it

http://www.artalabs.hr/papers/im-aaaa2003.pdf

Ivo, in this paper you write:

"This work shows that systems for measuring room impulse response with a pink RPMS and logarithmic sweep excitation outperform systems with white spectrum excitation in a real acoustical environment. The choice of an optimal excitation signal depends on measurement environment. In an environment with a high noise, the pink RPMS gives the best estimation, otherwise excellent result can be achieved with a logarithmic sweep excitation..."

"Impulse response of swept-sine system in room with low-speed ventilation is too markedly deformed..."

Yes, that paper shows that swept sine can also exhibit a distorted impulse response in time-variant environments. That is why I implemented both types of signal: swept sine and periodic noise. It is always a good thing to use both types of signal, analyse the start and tail of the impulse response, and decide which one is better.

Ivo

Ivo

I'd like to ask a question about smoothing.

I am measuring microphones rather than speakers.

My procedure is as follows:

1) Measure loudspeaker with calibrated microphone (using calibration file) using single gated impulse/smoothed frequency response

2) Make the resulting curve an overlay

3) Make measurement of microphone under test

4) Subtract overlay

I typically do this at higher resolution (1/24 octave smoothing) but after subtracting the overlay I'd like to change the resulting curve smoothing to 1/3 octave. When I attempt that via the chart control panel at right nothing happens.

I realize that I could do both the calibration overlay and test device response initially at 1/3 octave then subtract, but I don't think that would be the same as changing the smoothing after the subtraction. It depends on precisely how the smoothing is implemented.

Is there any way I can change smoothing after an overlay subtraction? I have a very old version of ARTA... perhaps this behavior has been modified already.

Right now I'm having to postprocess the tabular file data to do this.

Les

Hi Les,

In ARTA, subtracting overlays is allowed only for the same resolution. So, you must do additional work on smoothing, but the question is what kind of smoothing is acceptable for microphone calibration.

Let me start from the fact that both averaging and smoothing help reduce measurement variance, so we must treat smoothing as good practice. It gives us a better estimate of microphone differences than the unsmoothed, and especially the unaveraged, version of the differences. Which level of smoothing you use depends on the quality of the measurement room (reverberation), the noise, and the reproducibility of the position and of the loudspeaker response.

Here is my opinion:

If all these factors have low variance you can use 1/12 octave differences, but in a slightly worse environment it is better to go for 1/3 octave differences.

I would like to hear other thoughts about this problem.

Best,

Ivo

Hi Ivo.

I should mention we are commercial microphone manufacturers considering your software.

I understand the overlay and data must be the same resolution. The issue is changing the resolution after the subtraction. This seems not possible.

In analysis we want to see the response in high resolution. Gating removes reflections from floors and walls, but the data includes near reflections from the mic stand and body. These typically cause many minor (1 or 2 dB) ripples, particularly at high frequency. Larger near reflections might require a change in design (grilles, body shape, etc.).

We feel that this variation does little other than obfuscate for the non technical consumer, and a broader resolution like 1/3 octave is more representative of what they will hear.

For very low frequencies we use near field with no gate and box diffraction compensation, with additional proximity effect compensation for pressure gradient microphones. For combination pressure/pressure gradient microphones (cardioid, hypercardioid, etc.) we must use a plane wave tube, since proximity effect is an unknown of the device under test. Minor wiggles from reflections (due to the lack of gating) are best removed with 1/3 octave smoothing here as well.

Again, if both the calibrated microphone and microphone under test are run at 1/3 octave, the overlay subtracted curve will be much more smoothed.

But we don't know if that's the same as smoothing a subtracted curve recorded at a high resolution. We suspect not. We could calculate whether it is if we know the smoothing algorithm.

In that case we would have to do two tests, since we want both high and low resolution data.

I should mention that our standard calibration microphone is fitted such that there are little or no near reflections.

Les Watts

L M Watts Technology

Hi Les,

In ARTA, we use a Type I sixth-order Butterworth filter response, down to the -20 dB point, and convolve it with the frequency response interpolated on a logarithmic scale (MLSSA approach).

Here is the formula for the filter response.

A 1/N-octave 6th-order Butterworth bandpass filter at f0 has response r at frequency f:

r = 1/(1.0 + pow(Q*(f / f0 - f0 / f), 6));

where:

Q = 1.047198 / (pow(2.0, 1.0 / (2.0*N)) - pow(2.0, -1.0 / (2.0*N)));
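For anyone who wants to post-process exported data, the two expressions above translate directly into a few lines of Python. This is only a sketch: the function names are mine, the constant 1.047198 is taken as given (numerically it is pi/3), and reading r as a power response when converting to dB is an assumption.

```python
import math


def butterworth_q(n):
    """Q of the 1/n-octave band, following the formula quoted above."""
    return 1.047198 / (2.0 ** (1.0 / (2.0 * n)) - 2.0 ** (-1.0 / (2.0 * n)))


def band_response(f, f0, n):
    """Response r of the 1/n-octave band centred on f0, evaluated at frequency f."""
    q = butterworth_q(n)
    return 1.0 / (1.0 + (q * (f / f0 - f0 / f)) ** 6)


f0 = 1000.0
upper_edge = f0 * 2.0 ** (1.0 / 6.0)  # upper edge of a 1/3-octave band at 1 kHz

for f in (f0, upper_edge, 2.0 * f0):
    r = band_response(f, f0, 3)
    # dB conversion assumes r is a power (squared magnitude) response
    print(f"{f:8.1f} Hz  r = {r:.6f}  ({10.0 * math.log10(r):6.2f} dB)")
```

The response is 1 at f0 and symmetric on a logarithmic frequency axis, so the weights at f0*k and f0/k are equal.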

I hope this will help you.

Best,

Ivo

Smoothing relevance

Hi,

A lot of people are confused by the smoothing process; they often ask whether it obscures the real measurement. I must say a few words about it.

1) From the theory of random processes we know that measurement is a process in which we want to estimate the measured value with minimum variance. It has been proved that smoothing reduces variance, but increases the resolution bandwidth.

2) From psychoacoustics we know that the ear integrates the response into a loudness sensation in 1/6 to 1/3 octave bands, and that small variations inside that bandwidth are masked.

3) From the theory of room acoustics we know that there is a relationship between smoothing in the frequency domain and integration of the response over space.

We see that in all these cases smoothed curves come closer to showing the real audio stimulus than unsmoothed ones.
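Point 1 can be checked numerically with a rough sketch. The assumptions are mine: the test signal is white noise with a flat true spectrum, and the smoothing is a plain 16-bin moving average rather than the fractional-octave smoothing used in ARTA.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.standard_normal(4096)  # white noise: the true power spectrum is flat

# Raw periodogram: a single, unaveraged spectrum estimate.
periodogram = np.abs(np.fft.rfft(x)) ** 2 / len(x)

# Smooth across frequency with a 16-bin moving average (assumed width).
kernel = np.ones(16) / 16.0
smoothed = np.convolve(periodogram, kernel, mode="valid")

raw_std = periodogram.std()
smoothed_std = smoothed.std()
print(f"scatter of raw estimate:      {raw_std:.3f}")
print(f"scatter of smoothed estimate: {smoothed_std:.3f}")
```

The smoothed estimate scatters much less around the flat true spectrum, at the price that each smoothed point now represents 16 bins instead of one.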

Best,

Ivo

Ivo, thanks for the information.

As far as the validity of smoothing the reference microphone/speaker response before subtracting to overcome the inability of changing smoothing after subtraction... I think it is a function of convolution properties.

So

Let f(w) be a power spectrum

Let O(w) be the speaker/reference mic overlay

Let s(w) be the 6th order filter squared

Now subtracting an overlay in dB should be the equivalent of dividing by a transfer function magnitude so...

(f(w)*s(w)) / (O(w)*s(w)) = (f(w)/O(w))*s(w) ?   (here * denotes convolution)

Is this convolution property true for any f, O, and s? I don't know. I'll have to pull my table of integrals book and see. Right now I only know that would be true if s(w) is the delta function.

If it is true, no problem. If not I think you can see the utility of being able to change the smoothing of an overlay subtraction of equal resolution curves just as you can with the original curve in the graph window.

You must have the convolution in the analysis routine but not in the overlay subtraction routine, even though the smoothing drop down menu is still there?

Les

L M Watts Technology

Les,

We must not look at smoothing as linear processing, so the equality that you have written does not hold.
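A small numerical counterexample makes this concrete. It is only a sketch under my own assumptions: the two rippled power spectra are invented, the smoothing is a normalised weighted average on a log-frequency grid using the Butterworth-shaped weights from the formula posted earlier, and no -20 dB truncation is applied, so it will not match ARTA's output exactly.

```python
import numpy as np


def smooth(power, freqs, n_oct):
    """Smooth a power spectrum with 1/n_oct-octave Butterworth-shaped weights."""
    q = 1.047198 / (2.0 ** (1.0 / (2.0 * n_oct)) - 2.0 ** (-1.0 / (2.0 * n_oct)))
    ratio = freqs[None, :] / freqs[:, None]  # f / f0, one row per centre f0
    weights = 1.0 / (1.0 + (q * (ratio - 1.0 / ratio)) ** 6)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ power


freqs = np.geomspace(100.0, 10000.0, 400)  # log-spaced grid (assumed)
octaves = np.log2(freqs)
f_pow = 1.0 + 0.5 * np.sin(2.0 * np.pi * 4.0 * octaves)  # invented device response
o_pow = 1.0 + 0.5 * np.cos(2.0 * np.pi * 4.0 * octaves)  # invented overlay response

ratio_of_smoothed = smooth(f_pow, freqs, 3) / smooth(o_pow, freqs, 3)
smoothed_ratio = smooth(f_pow / o_pow, freqs, 3)
max_diff_db = np.max(np.abs(10.0 * np.log10(ratio_of_smoothed / smoothed_ratio)))
print(f"largest disagreement: {max_diff_db:.2f} dB")

# Special case: with a flat overlay the two orders of operation agree.
flat = np.full_like(freqs, 2.0)
flat_diff = np.max(np.abs(smooth(f_pow, freqs, 3) / smooth(flat, freqs, 3)
                          - smooth(f_pow / flat, freqs, 3)))
print(f"flat overlay disagreement: {flat_diff:.1e}")
```

So the two orders of operation agree only in special cases such as a flat overlay; with ripples inside the smoothing band on both curves they differ by a visible fraction of a dB.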

The question of smoothing has been much discussed in the field of spectrum estimation. Daniell showed that a periodogram estimate has smaller variance if we apply smoothing. Some other authors applied smoothing over previously smoothed estimates, but Blackman and Tukey showed that this leads to a locally biased estimate.

Most microphone manufacturers give calibration curves in 1/3-octave bands, which is even worse than 1/3-octave smoothing (that is why ARTA uses spline interpolation to get the necessary calibration points).

It would be nice if manufacturers gave several curves with different octave resolutions, but that will never be done, as some of those curves may look ugly; we simply do not have ideal measuring conditions.

What is left to us (authors of measuring software) is to believe that the manufacturer's calibration data are the best we can get to apply to smoothed and unsmoothed FR curves.

Now I will say something that you probably will not like to hear: calibration of a microphone can be made by comparison with another microphone, but that means nobody knows the phase of the mic response, and applying only a magnitude calibration rules out further correct digital signal processing, i.e. the inverse FFT will be corrupted. It means that applying the calibration is a one-way procedure, valid only for getting a better estimate of the magnitude of the frequency response. Looking at it that way, we can accept that our calibration has some fraction of a dB of error.

In acoustical measurements we use the microphone in an acoustic field of unknown diffusivity, so a free-field calibration also carries some error.

I hope that I have cleared this up:

1) In acoustical measurements some decent smoothing helps us get better insight into the frequency response.

2) It is not a good thing to apply too much smoothing, as that obscures some details; 1/3 octave could be accepted as the largest smoothing band.

3) Calibration of a microphone in 1/N octave > 1/3 oct. bands is acceptable, but not ideal.

4) It is not a good thing to apply smoothing to the difference of previously smoothed curves.

And finally, your main question: why not take the raw, unsmoothed DFT difference curve and then apply smoothing? If you have measurements of the reference microphone and the measured microphone in ideal anechoic, noise-free conditions, then this can hold, but then we do not need smoothing!

To conclude:

In less than ideal conditions some level of smoothing helps, even in calibration of a microphone.

Best,

Ivo

We understand fully that we aren't getting phase information. Phase response can be known of course. Most of our mics are measuring the integral of the pressure gradient.

Many (not all) microphone responses are minimum phase and can be corrected by DSP or even analog means. Polar response variation of course cannot be corrected in a single microphone by those means. Theoretically possible in arrays.

Many published mic frequency response curves are "drawn by the marketing manager" and have no tolerances and are useless.

We desire to supply individual responses for each microphone smoothed to 1/3 octave, but we typically do our calibrations at 1/24 octave. Our test speaker is suspended to allow 7 ms gates, which allows us to measure down to about 140 Hz. Our results seem fairly accurate (to about 1 dB) when we compare one calibrated standard mic to another, measured by reciprocity or other methods. One of the larger variations is paper speaker cones and humidity. So we do daily calibration.
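The 7 ms and 140 Hz figures are consistent with the usual rule of thumb that a gate of length T gives usable data down to roughly 1/T. A quick check, with the speed of sound assumed to be 343 m/s and the function names chosen just for this example:

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def gate_low_frequency_limit(gate_s):
    """Approximate lowest usable frequency for a gate of the given length."""
    return 1.0 / gate_s


def extra_path_for_gate(gate_s, c=SPEED_OF_SOUND):
    """Extra distance the first reflection must travel to fall outside the gate."""
    return c * gate_s


limit_hz = gate_low_frequency_limit(0.007)
extra_path_m = extra_path_for_gate(0.007)
print(f"low-frequency limit: {limit_hz:.1f} Hz")
print(f"required extra reflection path: {extra_path_m:.2f} m")
```

The second figure shows why the speaker has to be suspended well clear of surfaces: the first reflection needs a path about 2.4 m longer than the direct sound to stay outside a 7 ms gate.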

There are always minor variations because the mics (and speaker) are not point sources and have many diffraction/reflection effects.

Low frequency measurement of pressure gradient microphones is problematic. Even the largest anechoic rooms have pressure modes and tunnel effects. Gated impulse requires large spaces. Really the only way in the lab is a plane wave tube... also huge.

We are working on a short active PWT...we just have to make the active termination look like 420 Rayl! Requires a feedforward/feedback controller.
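For readers unfamiliar with the 420 Rayl figure: it is close to the characteristic impedance of air (density times speed of sound), and a termination that matches it reflects nothing back down the tube. A minimal sketch, assuming textbook values for air at about 20 degrees C; the function names are hypothetical.

```python
def characteristic_impedance(density_kg_m3, speed_m_s):
    """Specific acoustic impedance of a plane wave, in Rayl (Pa*s/m)."""
    return density_kg_m3 * speed_m_s


def reflection_coefficient(z_termination, z_medium):
    """Pressure reflection coefficient at a termination, normal incidence."""
    return (z_termination - z_medium) / (z_termination + z_medium)


# Assumed textbook values for air at about 20 degrees C.
z_air = characteristic_impedance(1.204, 343.2)
r_420 = reflection_coefficient(420.0, z_air)
print(f"air: {z_air:.1f} Rayl, reflection from a 420 Rayl termination: {r_420:.4f}")
```

Under these assumed conditions a 420 Rayl termination reflects less than one percent of the incident pressure; the exact match point shifts a little with temperature.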

Anyway, thanks for your help. We'll have to post process the smoothing. Please consider adding variable smoothing after an overlay subtraction in a later release.

Les

L M Watts Technology
