Is that some kind of bad joke?

You seem to be confounding two very different things: sound production, as from natural instruments, and sound reproduction.

In another thread you walk between the two very naturally; I guess you don't attend a concert with microphones, oscilloscopes and all the paraphernalia.

It would matter if you walked around the room and the phase information did not change. Possibly you underestimate the processing that our brains are doing? We also need to take account of how the various spectral components come and go, and the expression the violinist can endow in his/her playing as a result.

Let's stick to hifijim's evidence that phase doesn't change the sound of a violin.

If the brain "reconstitutes" the violin sound by intensive processing, it does that despite the irrelevance of the phase relationships reaching it.... as your post seems to say.

B.

Have you considered taking an opportunity to update your knowledge of psychology?

I am one of the few who chose to advance their understanding in this respect.

Is that some kind of bad joke? Of course a recording of a drum strike played backwards would sound much unlike the original. Or do I misunderstand what "time reversed" means to you?

Can you think of a better means to highlight the difference between minimum and maximum phase representations? Both are spectrally (magnitude-wise) identical but starkly different in their perception.

Let's stick to hifijim's evidence that phase doesn't change the sound of a violin.

If the brain "reconstitutes" the violin sound by intensive processing, it does that despite the irrelevance of the phase relationships reaching it.... as your post seems to say.

Not at all. All the phase relationships are maintained in the bispectrum, which a considerable body of psychological evidence now indicates is used widely by the brain - not just in audio but in things like motion optimisation that uses essentially the same cognitive processing.

What bispectral processing allows is to identify source characteristics amid other changing elements - such as the broadband changes apparent as someone moves around a performer.

Sticking to what is "known" is a path that leads only to never finding out what is unknown.

Both are spectrally (magnitude-wise) identical but starkly different in their perception.

Of course drum sounds (or speech, or birdcalls, or dog barks, or cat meows) played backwards sound different!

This has absolutely nothing whatsoever to do with absolute phase, and everything to do with the fact that human hearing is quite sensitive to the envelope of a waveform.

The envelope of most sounds - such as drum hits - is not even close to being symmetrical in time. Usually the attack is much quicker than the decay, and playing the same sound backwards usually creates a very different envelope, and this changes how our ears and brain perceive the sound.
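For anyone who wants to check this numerically, here is a minimal sketch in plain Python (standard library only). The "drum hit" is a made-up decaying sine, not a recording, and the helper names `dft_magnitudes` and `peak_index` are just mine for illustration. It shows that the forward and reversed versions have the same magnitude spectrum, while the peak of the waveform moves from the start of the window to the end.

```python
import cmath
import math

def dft_magnitudes(x):
    """Magnitudes of the DFT of a real sequence (naive O(N^2), fine for short signals)."""
    n = len(x)
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n)
    ]

def peak_index(x):
    """Index of the largest absolute sample, a crude marker of where the attack sits."""
    return max(range(len(x)), key=lambda i: abs(x[i]))

N = 128
# Hypothetical "drum hit": immediate attack, exponential decay, 8 cycles per window.
hit = [math.exp(-t / 16.0) * math.sin(2 * math.pi * 8 * t / N) for t in range(N)]
reversed_hit = hit[::-1]

# Largest difference between the two magnitude spectra (rounding error only).
max_mag_diff = max(
    abs(a - b) for a, b in zip(dft_magnitudes(hit), dft_magnitudes(reversed_hit))
)

print("max magnitude difference:", max_mag_diff)
print("peak sample, forward :", peak_index(hit))
print("peak sample, reversed:", peak_index(reversed_hit))
```

The sketch does not settle which description (envelope or relative phase) is the better one for what we hear; it only shows that the two signals differ in time while matching in magnitude.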

Try recording clean sine wave tone bursts, which have an envelope that is actually symmetrical in time - if you play them backwards, there is no change in sound, because there is no change in envelope!
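A quick way to see the tone-burst case on paper, as a sketch only: the burst below uses a Hann window as the symmetrical envelope (my choice, any symmetrical window would do) and the variable names are hypothetical. With a cosine carrier centred on the burst, the reversed signal is sample-for-sample the same; with a sine carrier it is the same signal with inverted polarity, so the envelope is untouched in both cases.

```python
import math

N = 201                 # odd length so there is an exact centre sample
c = (N - 1) / 2         # centre of the burst
cycles = 10
omega = 2 * math.pi * cycles / (N - 1)

# Symmetrical Hann envelope (assumed here; not specified in the post above).
w = [0.5 - 0.5 * math.cos(2 * math.pi * t / (N - 1)) for t in range(N)]

cos_burst = [w[t] * math.cos(omega * (t - c)) for t in range(N)]
sin_burst = [w[t] * math.sin(omega * (t - c)) for t in range(N)]

# Reversed cosine burst minus the original: should be rounding error only.
err_cos = max(abs(a - b) for a, b in zip(cos_burst, cos_burst[::-1]))
# Reversed sine burst plus the original: zero if reversal just flips polarity.
err_sin = max(abs(a + b) for a, b in zip(sin_burst, sin_burst[::-1]))

print("cosine burst vs its reverse:", err_cos)
print("sine burst vs minus its reverse:", err_sin)
```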

Because human hearing is sensitive to the envelope of a sound, even crude synthesizers from decades ago had adjustable attack, decay, sustain, and release controls, because by varying these four envelope parameters, you can generate a wide variety of sounds.
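As a rough illustration of those four controls, here is a minimal piecewise-linear ADSR sketch in Python. The function name `adsr`, the linear segments, and the two example settings are my own assumptions and are not taken from any particular synthesizer.

```python
def adsr(attack, decay, sustain_level, sustain, release):
    """Piecewise-linear ADSR envelope.

    attack, decay, sustain and release are durations in samples;
    sustain_level is the held level between 0 and 1.
    """
    env = []
    env += [i / attack for i in range(attack)]                           # 0 up towards 1
    env += [1 - (1 - sustain_level) * i / decay for i in range(decay)]   # 1 down to sustain
    env += [sustain_level] * sustain                                     # hold
    env += [sustain_level * (1 - i / release) for i in range(release)]   # fade out
    return env

# Hypothetical settings: a plucked-string-like shape and a slow "swell".
plucked = adsr(attack=5, decay=40, sustain_level=0.3, sustain=100, release=55)
swell = adsr(attack=120, decay=10, sustain_level=0.9, sustain=40, release=30)

print("plucked peaks at sample", plucked.index(max(plucked)))
print("swell peaks at sample", swell.index(max(swell)))
```

Multiplying the same steady tone by `plucked` or by `swell` gives two signals with the same carrier and very different envelopes.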

Another classic example is "volume swells" on a guitar, a technique where the guitarist uses her little finger to roll the volume knob of the guitar up from zero immediately after striking a string with the pick or finger. The note swells up from silence, and sounds more like an organ and less like a guitar. The change in sound has absolutely nothing to do with phase, and everything to do with waveform envelope.

Here is a video of a guitar player demonstrating guitar volume swells - note that the sound of the guitar changes dramatically by reshaping the envelope, while the entire audio reproduction chain remains the same (i.e. no change at all in phase linearity or absolute phase): YouTube

If you are still unclear on the concept, here is a Wikipedia entry describing the envelope of a musical note: Envelope (music) - Wikipedia

And here is a Britannica.com entry on how the envelope of a sound changes the sound of an instrument: Envelope | sound | Britannica

-Gnobuddy

Hi all

I am trying to find screen shots or measured data etc of loudspeakers producing a square wave.

This is turning out to be harder to find than I first thought.

I have seen many references to it and know first hand that a Manger driver can. The Dunlavys are supposed to, but when you read the website, they are talking about electrical summation and not measured response with the drivers included.

Can anyone point me towards results of two- or three-way speakers doing it in real life, that is, a mic signal and not a simulation?

Preserving time (and so waveshape) well enough to do this has been an interest for a long time and I am interested to see where I am relative to hifi speakers.

Thanks much

Tom Danley

Danley Sound Labs

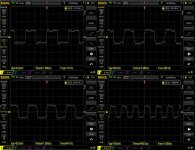

Well, here's a set only about 15 years late

in case you ever peruse here ..

A coax compression driver in between two hornloaded and ported 12"s, Peter Morris's PM60, FIR processed.

I picked out some of the prettiest ones, but all looked at least decent below 1kHz.

They started going real ragged at around 2kHz.....if i remember right from a few years back when i took them..didn't save any uglies lol

Oh, big thanks for your post #23...awesome collection of one line understandings..

Does any sound in nature come close to a square wave? I don't think so, but I could be wrong.

A reed warbler maybe?

This has absolutely nothing whatsoever to do with absolute phase

That is correct and my comments concern the relative phases of different (and nominally short-term) spectral components and how they combine to yield (change) a perception. Even excluding directional information, audible differences between minimum, maximum and linear phase filters have long been documented. The drum might be a crass example, but there are nevertheless aspects of our perception that do not fit with the notion of the ear-brain as a purely phase-insensitive spectrum analyzer.

A model assuming a steady-state analyser serves very well for the most part and my comments are not intended to imply that such a means of spectral analysis is not a dominant aspect in our perception. But it does not present a complete measure if we include transient events most evident in percussive instruments. We certainly discern instruments and voices from each other and from room effects that are also present in a recording but occupy the same spectral 'bins' (and even in mono via an old analogue telephone, for example). And where an audio reproduction system can change the relative phases of the spectral information, so there exists the possibility those changes are also incorporated in our perception.

But it does not present a complete measure if we include transient events most evident in percussive instruments.

There is some evidence in this thread, "Can you hear a difference between 2 solid state preamps?", that the phase shift difference between two preamps, one with a bandwidth of 100 kHz, the other 1 MHz, was perceived mainly in the sibilance of the vocals.

There is some evidence in this thread Can you hear a difference between 2 solid state preamps? that the phase shift difference between two preamps, one with a bandwidth of 100 kHz, the other 1 MHz, was perceived mainly in the sibilance of the vocals

I will have a read... My experience is that many preamp differences arise from their different capabilities to drive capacitive load elements. Clipping can then often lead to bursts of oscillation that appear as sibilance.

After a very quick read, it is interesting that there are lots of magnitude responses in the thread, but not one square wave (time) response (that I found anyway) wherein oscillations would be readily viewable. Noise floor modulation gets a mention which is a possibility. But to the topic of this thread, I am not at all convinced that phase response is the reason they sound different (if indeed they do). Admittedly inter-aural time resolution has been quoted as being approximately twice that which would be inferred from a 20kHz audible bandwidth and maybe tests in mono would rule that out? But I remain sceptical. I have also used the AD797 many times and freeing it of oscillation in several of those applications was not so straightforward. It is an exceptional op-amp when implemented well...

After a very quick read,

The wider BW amp had less sibilance. Pavel would probably be interested in your thoughts. Re ITD, it's often a red herring; if both channels are identical it's not an issue.

Well, here's a set only about 15 years late

in case you ever peruse here ..

A coax compression driver in between two hornloaded and ported 12"s, Peter Morris's PM60, FIR processed.

I picked out some of the prettiest ones, but all looked at least decent below 1kHz.....

That's the point: you can fish around with your mic (esp up real close to the driver if most of the spectrum is coming from a single driver, and in the longer waves) to find a nice looking square wave and then move the mic a bit and - poof - the pretty square wave is gone. So when your speakers are playing square waves, it's purely an accident what shows up at your ears.

B.

Let's put aside for the time being any talk of near-field measures (that will look somewhat random at any particular point) and of in-room measures complicated by reflections. We can also consider without much inaccuracy that the sound path between the loudspeaker and the ear is non-dispersive, where phase information will be preserved along the resulting direct sound path and there are no accidents.

Let's also put aside the notion of a square-wave producing loudspeaker, since band-limiting is inevitable and rendering a square wave is not realistic. So we are left concerned (or not) with only the phase information within the operating band of the loudspeaker (and a less-than-square square wave response).
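To make the "less-than-square square wave" concrete, here is a small sketch in Python. It builds one period from the first ten odd harmonics of the usual square-wave Fourier series, then builds a second version with the same harmonic amplitudes but with every harmonic above the fundamental shifted by 90 degrees. The harmonic count, the size of the shift and the function names are arbitrary choices of mine, not measurements of any loudspeaker.

```python
import math

def harmonic_sum(n_samples, harmonics, phase_shift=0.0):
    """One period built from sine harmonics with amplitudes 4/(pi*k).

    phase_shift (radians) is added to every harmonic above the fundamental,
    leaving all amplitudes untouched.
    """
    out = []
    for t in range(n_samples):
        theta = 2 * math.pi * t / n_samples
        s = 0.0
        for k in harmonics:
            shift = phase_shift if k > 1 else 0.0
            s += (4 / math.pi) * math.sin(k * theta + shift) / k
        out.append(s)
    return out

def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))

def peak(x):
    return max(abs(v) for v in x)

odd = range(1, 20, 2)  # harmonics 1, 3, ..., 19: a band-limited "square"
square_like = harmonic_sum(512, odd)
scrambled = harmonic_sum(512, odd, phase_shift=math.pi / 2)

print("rms :", rms(square_like), rms(scrambled))
print("peak:", peak(square_like), peak(scrambled))
```

Both versions carry the same energy in the same harmonics, so a magnitude-only analyser cannot tell them apart, yet the waveshapes and peak values differ. Whether that difference is audible is exactly the question being argued here; the sketch does not answer it.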

There is an assumption that the ears and brain work as a phase insensitive spectrum analyser: This is Ohm's Acoustical Law. We can, for example, listen to two discrete tones and vary the phase of one or other and be powerless to identify that variation: The envelope remains unchanged; The phase response is inaudible; Ohm appears to be correct.

But in certain situations, the relative phase response might explain some audible effects which Ohm's model says should not be audible. Examples include the audible differences with linear and minimum phase EQ, with and without phase compensation of the loudspeaker's low-frequency roll-off, or with adding many all-pass filters in the signal path - all of which Ohm says sound the same, but long documented experiments say do not.
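For reference, this is what "all-pass" means in numbers. The sketch below evaluates a textbook first-order digital all-pass, H(z) = (a + z^-1) / (1 + a z^-1), at a few frequencies using only Python's standard library. The coefficient a = 0.5 and the frequency grid are arbitrary values picked for illustration.

```python
import cmath
import math

def allpass_response(a, omega):
    """Frequency response of H(z) = (a + z^-1) / (1 + a z^-1) at omega rad/sample."""
    z_inv = cmath.exp(-1j * omega)
    return (a + z_inv) / (1 + a * z_inv)

a = 0.5                                      # arbitrary real coefficient, |a| < 1
omegas = [0.1 * n for n in range(1, 31)]     # 0.1 ... 3.0 rad/sample
responses = [allpass_response(a, w) for w in omegas]

magnitudes = [abs(h) for h in responses]
phases = [cmath.phase(h) for h in responses]

print("magnitude range:", min(magnitudes), max(magnitudes))
print("phase at lowest / highest frequency (rad):", phases[0], phases[-1])
```

The magnitude stays at unity across the band while the phase swings through most of a half turn, which is why a chain of such filters is a convenient test case for the argument above.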

In these certain circumstances, we would likely conclude that the ears and brain are not completely phase insensitive. With no clock in the brain to measure absolute delays, we are left to look for an answer in the relative phases of the spectral components - that is, the relation of one spectral component to another, rather than just the relation between the input and output. In order to measure that relation, we need to look at higher order analysis than our second order spectrum analyser offers.

If we suppose the ears and brain are capable of discerning bispectral information, for example, then there exists the means whereby relative phase measures permit room reverberation to be filtered from the source and where one source can be filtered from another source of similar frequency content (especially helpful for those lucky enough to be invited to cocktail parties). We would also be able to perceive some of the differences due to the relative phase response of filters and loudspeakers with identical magnitude responses.
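A small sketch of what "bispectral information" keeps that a power spectrum discards, in plain Python. It uses a made-up three-tone signal whose third component sits at the sum frequency, and a single-frame bispectrum B(k1, k2) = X(k1) X(k2) X*(k1 + k2). The bin numbers, phases and function names are my own choices, and none of this says anything about how hearing actually works; it only illustrates the mathematics.

```python
import cmath
import math

N = 64
K1, K2 = 5, 8   # arbitrary DFT bins; the third tone sits at bin K1 + K2

def three_tone(phi1, phi2, phi3, delay=0):
    """Three unit-amplitude cosines at bins K1, K2 and K1 + K2, optionally delayed."""
    return [
        math.cos(2 * math.pi * K1 * (t - delay) / N + phi1)
        + math.cos(2 * math.pi * K2 * (t - delay) / N + phi2)
        + math.cos(2 * math.pi * (K1 + K2) * (t - delay) / N + phi3)
        for t in range(N)
    ]

def dft_bin(x, k):
    return sum(x[t] * cmath.exp(-2j * math.pi * k * t / N) for t in range(N))

def bispectrum(x, k1, k2):
    """Single-frame bispectrum value X(k1) * X(k2) * conj(X(k1 + k2))."""
    return dft_bin(x, k1) * dft_bin(x, k2) * dft_bin(x, k1 + k2).conjugate()

a = three_tone(0.3, 0.5, 0.0)
b = three_tone(0.3, 0.5, 1.0)                   # only the relative phase differs
a_delayed = three_tone(0.3, 0.5, 0.0, delay=7)  # same as a, shifted in time

for name, sig in (("a", a), ("b", b), ("a delayed", a_delayed)):
    power = [abs(dft_bin(sig, k)) ** 2 for k in (K1, K2, K1 + K2)]
    biphase = cmath.phase(bispectrum(sig, K1, K2))
    print(name, "power:", [round(p, 6) for p in power], "biphase:", round(biphase, 6))
```

All three signals have the same power in the same bins. The phase of the bispectrum separates `a` from `b`, and it does not move when `a` is delayed, which is the sense in which it measures the relation of one component to another rather than an absolute delay.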

As I have said previously, none of this excludes the spectrum analyser model as being a dominant source of information in our auditory perception or supplants the notion that the ears and brain are predominantly phase insensitive. It just does not render the whole picture. Higher order analysis provides a means for discerning relative phase information that can appear scrambled when measured, a means for discarding information discerned by our brains to be irrelevant to the source we are focused upon, and also the means for discerning different phase responses in loudspeakers that are not immediately apparent from looking at group delay measures.

I do add as a caveat, however, that this is not the only explanation possible (but I have not found a thread relevant enough for that other discussion yet).

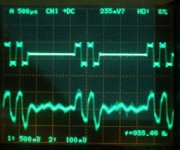

Interesting thread, as I am now building and testing an original active 2nd-order TP system.

I think that a DSP-based TP system will probably be the winner in this TP field, but I also think this type of analog-computing TP crossover has advantages over DSP, such as being simple, low cost, real time, etc.

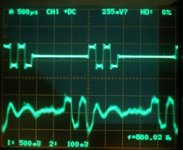

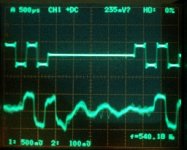

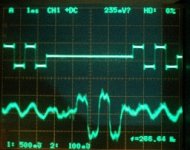

Here is part of the square wave test result.

The speaker is a 2-way system crossed at 2400 Hz with 2nd-order TP slopes; multi-amped; time aligned.

Woofer: Kenwood LS1001, 15 cm paper cone.

Tweeter: Scanspeak D3004/664000 Be, fs 500 Hz, temporarily sub-baffle mounted.

Square waves are generated by a PC.

Upper trace: input signal. Lower trace: mic amp output.

The frequencies are 2800 Hz, 2400 Hz, 1600 Hz and 800 Hz.

You can see that the reproduced square waves are pretty close to the input signals. The wiggling during the rest time in between must be caused by vibration of the woofer and/or front baffle.

Me three.

Barry. Who is enjoying a pair of SH50’s at this very moment.

Ditto, he's already a legend, and a truly nice guy to boot....
