Hi BYRTT,

Thank you for the feedback, much appreciated. I am sure this isn't my last pass; I am trying to get an 8-channel DAC to replace my current gear.

======================== %< snip >% ========================

For the main question, I would say that is normal and expected. When we correct some of the typical excess phase lag in the time domain, the correction has to act ahead of the signal. That shows up as pre-activity in the impulse and step response graphs, and the repair costs some overall system processing latency.

In general, it looks like you have a good feel for this.

As a general tip for where you are now, assuming everything is really on a perfect basis: remember that any wish for a different house curve, or for left versus right channel calibration, has to be made as a global adjustment. What I mean is this. Stay away from house curve adjustments on individual pass bands unless you really do find some errors there, because even small EQ changes within one pass band will probably need a new time alignment setting, and that operation can often be a big workload.
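BYRTT's point is that an EQ change in one pass band can upset time alignment. To put a rough number on that, this sketch computes the group delay a single minimum-phase peaking filter adds at its center frequency. It uses the RBJ Audio EQ Cookbook peaking formulas with `scipy.signal.group_delay`. The filter values (100 Hz, +6 dB, Q 2) are hypothetical.

```python
import numpy as np
from scipy import signal


def rbj_peaking(f0, gain_db, q, fs):
    """Biquad (b, a) for an RBJ Audio EQ Cookbook peaking filter."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * np.pi * f0 / fs
    alpha = np.sin(w0) / (2 * q)
    b = np.array([1 + alpha * amp, -2 * np.cos(w0), 1 - alpha * amp])
    a = np.array([1 + alpha / amp, -2 * np.cos(w0), 1 - alpha / amp])
    return b / a[0], a / a[0]


def group_delay_ms(b, a, f_hz, fs):
    """Group delay of the biquad at f_hz, converted from samples to milliseconds."""
    _, gd_samples = signal.group_delay((b, a), w=[f_hz], fs=fs)
    return 1000 * gd_samples[0] / fs


fs = 48000
b, a = rbj_peaking(f0=100, gain_db=6.0, q=2.0, fs=fs)  # hypothetical boost in the bass band only
print(f"Extra group delay at 100 Hz: {group_delay_ms(b, a, 100, fs):.2f} ms")
```

Applying the same EQ to every way, or ahead of the crossover, delays all drivers equally, so their relative alignment is untouched. That is the practical reason to keep house-curve changes global.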

======================== %< snip >% ========================

Hi Fluid,

Those graphs look good. Remember to listen to the correction and see if you like it; I have made many graphs that look great and sound terrible.

It sounds quite good. I previously tried DRC Designer and got both good and bad sounding results, most assuredly due to the pilot's ignorant use of the tool.

So you have an active crossover between dipole subs and main speakers with an unknown passive crossover?

I have stereo OB/dipole subs and 3-way planar/ribbon speakers (8 channels), a stereo 3-way active XO (6 channels), and 2 passive internal XOs of unknown design.

There is a known active 40 Hz XO between the sub and the bass.

There is a known active 250 Hz XO between the bass and the (mid/tweet) combo.

There is a passive internal XO within the (mid/tweet) combo that the factory suggests is at 3,000 Hz, but the factory doesn't elaborate on slopes. That is the unknown that I approximated by braille.

I currently have no way of adding delays with the current XOs. I am waiting on an 8-channel DAC, and then I can time align the drivers. After that I will need to know what to use in REW to gauge delays. I see REW has an "estimate IR delay" function, a "time align" function and an "IR align" function, and I need to learn the differences. I can also switch to linear-phase XOs at that point.
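This sketch illustrates what "estimating a delay" between two measurements means, using cross-correlation in Python (NumPy/SciPy). It is not how REW's estimate IR delay, time align or IR align functions work internally; REW's help describes those. The decaying-sine impulse responses are hypothetical test data.

```python
import numpy as np
from scipy import signal


def relative_delay_ms(ir_ref, ir_other, fs):
    """Delay of ir_other relative to ir_ref, in ms (positive = ir_other arrives later)."""
    corr = signal.correlate(ir_other, ir_ref, mode="full")
    lags = signal.correlation_lags(len(ir_other), len(ir_ref), mode="full")
    return 1000 * lags[np.argmax(np.abs(corr))] / fs


# Hypothetical test data: a decaying 1 kHz "impulse response" and a copy 96 samples (2 ms) later.
fs = 48000
n = np.arange(2048)
ir_a = np.exp(-n / 100) * np.sin(2 * np.pi * 1000 * n / fs)
ir_b = np.concatenate([np.zeros(96), ir_a])[: len(ir_a)]
print(f"{relative_delay_ms(ir_a, ir_b, fs):.2f} ms")
```

Plain cross-correlation tends to be much less meaningful between drivers that share little bandwidth, such as a sub and a tweeter. That is one reason an absolute timing reference is useful.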

As BYRTT said, the change in the step response before the peak and the increased pre-ringing in the impulse are the result of the phase manipulation. You have gone from 1700 degrees of phase rotation to 360 degrees over 20 Hz to 20 kHz, so something has to give.
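This sketch shows where a figure like 360 degrees comes from. It sums an analog 4th-order Linkwitz-Riley low-pass and high-pass, each built as a squared 2nd-order Butterworth. The result is an all-pass:

- flat magnitude,
- -180 degrees at the crossover frequency,
- close to -360 degrees far above it.

The 250 Hz crossover is only an example. A real chain of several crossovers and driver rolloffs accumulates more rotation.

```python
import numpy as np
from scipy import signal


def lr4_sum(fc, freqs_hz):
    """In-phase sum of LR4 low-pass and high-pass (squared Butterworth-2), analog model."""
    wc = 2 * np.pi * fc
    w = 2 * np.pi * np.asarray(freqs_hz, dtype=float)
    b_lp, a_lp = signal.butter(2, wc, btype="lowpass", analog=True)
    b_hp, a_hp = signal.butter(2, wc, btype="highpass", analog=True)
    _, h_lp = signal.freqs(b_lp, a_lp, worN=w)
    _, h_hp = signal.freqs(b_hp, a_hp, worN=w)
    return h_lp**2 + h_hp**2


fc = 250.0  # example crossover frequency
freqs = np.geomspace(fc / 1000, fc * 1000, 3001)
h = lr4_sum(fc, freqs)
phase = np.degrees(np.unwrap(np.angle(h)))
for f in (fc / 10, fc, fc * 10, fc * 100):
    i = np.argmin(np.abs(freqs - f))
    print(f"{freqs[i]:9.1f} Hz: |H| = {abs(h[i]):.3f}, phase = {phase[i]:7.1f} deg")
```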

If I get the 8-channel DAC and switch to linear-phase XOs, there should be fewer phase issues to correct, if I understand them correctly.

Are these in-room measurements averaged over listening positions, like in SwissBears tutorial?

Not yet, I haven't moved the mic. I wanted to verify [before/after], [measured baseline/predicted results/measured results] without adding any additional variables into the equation while learning the software. It is hard to reliably repeat 9 different measurements at different X,Y,Z locations.

I have quite a bit of absorption and diffusion in the room, and the current filters sound good at the sweet spot as well as when walking around the room.

If so, a flat in-room response will tend to be too bright. You could try adding a room curve in REW to see how you like it.
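If you want to try a room curve, a constant tilt is one simple starting point. The sketch below builds a tilt that passes through 0 dB at a pivot frequency and formats it as "frequency level" text lines. It assumes REW will accept a plain two-column text house-curve file, so check REW's house-curve documentation for the exact format. The -1 dB/octave slope and 1 kHz pivot are placeholder values.

```python
import numpy as np


def tilted_house_curve(slope_db_per_oct=-1.0, pivot_hz=1000.0,
                       f_min=20.0, f_max=20000.0, points=31):
    """(frequency, level) pairs for a straight tilt passing through 0 dB at pivot_hz."""
    freqs = np.geomspace(f_min, f_max, points)
    levels = slope_db_per_oct * np.log2(freqs / pivot_hz)
    return list(zip(freqs.tolist(), levels.tolist()))


def format_house_curve(curve):
    """One 'frequency level' pair per line (assumed text layout; check REW's docs)."""
    return "\n".join(f"{f:.2f} {level:.2f}" for f, level in curve) + "\n"


text = format_house_curve(tilted_house_curve())
print(text.splitlines()[:3])  # save `text` to a .txt file to try it as a house curve
```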

I personally prefer a flat "house curve". If my ears were 30 years younger, that may be another story.

Sounding good is the aim, so that's good news.

Hi Fluid,

It sounds quite good. I previously tried DRC Designer and got both good and bad sounding results, most assuredly due to the pilot's ignorant use of the tool.

DRC Fir is very easy to get wrong, and tweaking the parameters is not easy.

Give Gmad's method and filters a try; I find this to be the easiest way to use DRC.

A convolution based alternative to electrical loudspeaker correction networks

I currently have no way of adding delays with the current XOs. I am waiting on an 8-channel DAC, and then I can time align the drivers. After that I will need to know what to use in REW to gauge delays. I see REW has an "estimate IR delay" function, a "time align" function and an "IR align" function, and I need to learn the differences. I can also switch to linear-phase XOs at that point.

You would be better off using the acoustic timing reference in REW.

This is a page from miniDSP explaining its use; just ignore the miniDSP-specific parts that don't apply.

Measuring Time Delay
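Suppose you have an arrival time for each driver, for example from measurements made with REW's acoustic timing reference. Turning those into DSP delays is simple arithmetic: delay every driver so it lines up with the latest arrival. The driver names and times below are hypothetical. The 343 m/s speed of sound assumes air at roughly 20 °C.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees C


def alignment_delays_ms(arrival_ms):
    """Delay to add to each driver (ms) so every arrival matches the latest one."""
    latest = max(arrival_ms.values())
    return {name: latest - t for name, t in arrival_ms.items()}


def delay_ms_to_cm(delay_ms):
    """Acoustic path length equivalent to a delay, in centimetres."""
    return SPEED_OF_SOUND_M_S * (delay_ms / 1000) * 100


arrivals = {"sub": 12.0, "bass": 10.5, "mid_tweet": 9.8}  # hypothetical measured arrivals, ms
for name, d in alignment_delays_ms(arrivals).items():
    print(f"{name:>9}: add {d:.2f} ms  (~{delay_ms_to_cm(d):.1f} cm)")
```

A sub's measured arrival depends on its low-frequency group delay. Its final alignment is therefore usually checked around the crossover frequency rather than from arrival times alone.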

If you use a linear-phase crossover, properly time aligned, with the drivers EQ'd flat on either side, there should be nothing to correct. You will still get the same wobble in the step and impulse response.

If I get the 8-channel DAC and switch to linear-phase XOs, there should be fewer phase issues to correct, if I understand them correctly.

I would suggest making a version of the correction without the phase linearization (just bypass those parts in rePhase and generate another filter) but keeping the same EQ from REW. Then see if you can tell the difference between them, and whether you actually have a preference either way.

This is one area where the graph will look significantly better with the correction, but the sound may not reflect what you are seeing. Or it might.
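Fluid's point above can be checked numerically: a properly time-aligned linear-phase crossover, with the drivers EQ'd flat, leaves nothing to correct. This sketch designs a linear-phase FIR low-pass with `scipy.signal.firwin`. It derives the complementary high-pass by subtracting the low-pass from a delayed impulse, and shows that the two sum back to a pure delay. The 250 Hz crossover and 1023 taps are hypothetical, and ideal flat drivers are assumed.

```python
import numpy as np
from scipy import signal

fs = 48000
numtaps = 1023                       # odd length: symmetric FIR with an integer delay
delay = (numtaps - 1) // 2           # 511 samples of bulk delay (~10.6 ms)

lowpass = signal.firwin(numtaps, 250, fs=fs)  # linear-phase low-pass, hypothetical 250 Hz
highpass = -lowpass                           # complementary high-pass:
highpass[delay] += 1.0                        # delayed unit impulse minus the low-pass

summed = lowpass + highpass                   # ideal drivers, EQ'd flat and time aligned
pure_delay = np.zeros(numtaps)
pure_delay[delay] = 1.0
print(np.max(np.abs(summed - pure_delay)))    # rounding-level error: the sum is just a delay
```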

Not yet, I haven't moved the mic. I wanted to verify [before/after], [measured baseline/predicted results/measured results] without adding any additional variables into the equation while learning the software. It is hard to reliably repeat 9 different measurements at different X,Y,Z locations.

You don't have to get the mic in exactly the same spot, as the averaging removes a lot of the positional differences. Try a 'moving head' average: take 4 to 8 measurements within the space your head would occupy and use that as the basis for correction.
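A "moving head" average is normally done on magnitude. One common way is a power (RMS) average of the dB responses, sketched below in Python. This is a generic illustration, not REW's implementation (REW has its own averaging options). The SPL readings are hypothetical.

```python
import numpy as np


def power_average_db(responses_db):
    """Power (RMS) average of magnitude responses in dB, shape (n_measurements, n_freqs)."""
    power = 10 ** (np.asarray(responses_db, dtype=float) / 10)
    return 10 * np.log10(power.mean(axis=0))


# Hypothetical SPL readings (dB) from three head positions at four frequencies.
positions = [
    [80.0, 82.0, 79.0, 75.0],
    [78.0, 85.0, 70.0, 74.0],
    [81.0, 79.0, 78.0, 76.0],
]
print(power_average_db(positions).round(2))
```

A dip that shows up at only one position (the 70 dB reading) is largely filled in by the other positions. That is part of why an averaged correction is less position-specific.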

Maybe the combination of treatment and directivity makes that better; ultimately it's your preference that counts.

I have quite a bit of absorption and diffusion in the room, and the current filters sound good at the sweet spot as well as when walking around the room.

I personally prefer a flat "house curve". If my ears were 30 years younger, that may be another story.

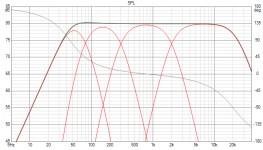

Maybe the reason we don't want to improve group delay or timing in isolation is that the physical amplitude signal really isn't there, and we would then break the physics of timing in natural sounds. I know it's too simplistic, but take the theoretical recording-chain example in the graph:

- The red curve is the raw bandwidth of an acoustic bass guitar, with 1st-order stop bands at 41 Hz and 7 kHz.
- If we feed (cascade) that bandwidth through a very good microphone with 1st-order stop bands at 6 Hz and 20 kHz, we end up with a slightly more limited response, the blue curve.
- The amplitude and timing (phase) of that new blue curve is what the other musicians, plus the mix and mastering process, will base their cooperation and work on.

Therefore my guess is that changing the blue curve's original phase to the red phase adds a timing distortion, because the cascaded chain never gives back the red curve's wider amplitude response.
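BYRTT's recording-chain example can be reproduced with two analog first-order band-pass models:

- the instrument, 41 Hz to 7 kHz (the red curve),
- the microphone, 6 Hz to 20 kHz.

Cascading multiplies the two responses, so the blue curve's phase is the sum of both phases. This is a simplified sketch of that example, not a model of a real instrument or microphone.

```python
import numpy as np
from scipy import signal


def first_order_bandpass(f_low, f_high):
    """s-domain (b, a) of a 1st-order high-pass at f_low cascaded with a 1st-order low-pass at f_high."""
    wl, wh = 2 * np.pi * f_low, 2 * np.pi * f_high
    b = np.array([wh, 0.0])                  # numerator: wh * s
    a = np.polymul([1.0, wl], [1.0, wh])     # denominator: (s + wl)(s + wh)
    return b, a


def response(ba, freqs_hz):
    """Complex frequency response of an analog (b, a) pair at the given frequencies in Hz."""
    _, h = signal.freqs(ba[0], ba[1], worN=2 * np.pi * np.asarray(freqs_hz, dtype=float))
    return h


instrument = first_order_bandpass(41, 7000)   # BYRTT's "red" curve
microphone = first_order_bandpass(6, 20000)
chain = (np.polymul(instrument[0], microphone[0]),   # cascading = multiplying polynomials,
         np.polymul(instrument[1], microphone[1]))   # giving the "blue" curve

freqs = [20, 41, 100, 1000, 7000, 15000]
for f, hr, hb in zip(freqs, response(instrument, freqs), response(chain, freqs)):
    print(f"{f:>5} Hz | red {20 * np.log10(abs(hr)):6.2f} dB {np.degrees(np.angle(hr)):7.1f} deg"
          f" | blue {20 * np.log10(abs(hb)):6.2f} dB {np.degrees(np.angle(hb)):7.1f} deg")
```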

Hi BYRTT, been thinking about this.

But I keep coming back to... I dunno...

Do low-frequency natural sounds necessarily have any low-frequency rolloff, and hence group delay?

Like a single note from a pipe organ or the lowest piano key?

I don't think so... could easily be wrong though.

If limited-bandwidth sounds in nature don't necessarily have phase rolloff at the ends of their bandwidth, I think linear phase is the only true reproduction method... all the way down, as low as possible.

If limited-bandwidth sounds in nature do have phase rolloff, my mind is getting ready to be expanded, and I'd love the clarity that understanding would bring.

Now, none of the above is to say that recordings will sound better fully linear phase... I can easily see how recording and mastering techniques/practices can be tuned to make playback sound better fully minimum phase.

But I think when we (rightfully) prefer minimum-phase playback, we are just matching crooked-sounding playback to a crookedly made recording.

I think you could go round and round making arguments either way and never get to the "right" answer.

I can't think of a low-frequency musical instrument without a resonating chamber of some sort: pipe, sound box, etc. All of those behave as minimum-phase devices and have rolloffs associated with them, which change the phase and introduce group delay.

How can a natural sound have unlimited bandwidth and the phase not follow the amplitude?

If you make a speaker with a flat amplitude response and no rolloff of any kind until outside the 20 Hz to 20 kHz region, then you have a flat phase throughout the realistic range of hearing. If it rolls off as minimum phase from there on, that falls in the range of perception rather than hearing.

It satisfies both the minimum-phase target and linear phase in the important regions.
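How far below 20 Hz does the rolloff have to sit before the in-band phase is close to flat? The sketch below prints the phase shift at 20 Hz of a 2nd-order Butterworth high-pass for several corner frequencies. The Butterworth alignment and the corner values are assumptions chosen for illustration; real boxes and rooms will differ.

```python
import numpy as np
from scipy import signal


def highpass_phase_deg(corner_hz, at_hz, order=2):
    """Phase (degrees) of an analog Butterworth high-pass at frequency at_hz."""
    b, a = signal.butter(order, 2 * np.pi * corner_hz, btype="highpass", analog=True)
    _, h = signal.freqs(b, a, worN=[2 * np.pi * at_hz])
    return float(np.degrees(np.angle(h[0])))


for corner in (20, 10, 5, 2):
    print(f"2nd-order high-pass at {corner:>2} Hz -> phase at 20 Hz: {highpass_phase_deg(corner, 20):5.1f} deg")
```

The shift shrinks as the corner moves down, but a corner one octave below 20 Hz still leaves several tens of degrees at 20 Hz.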

I don't think you can ever reach a consensus on the idea of listening to the same type of system the engineers used at mixing and mastering unless there is an international standard for it. So you are left deciding for yourself what you think sounds right or best.

It doesn't make sense to me to create a speaker that could not exist without the aid of linear-phase processing, making the phase not follow the amplitude. I prefer the idea of using the correction to return the speaker to the best natural response it could have. Not that this helps answer whether it is right or wrong.

I agree with Fluid. If we were able to create the ideal speaker, where one point in space would play our desired bandwidth, it would still act as a minimum-phase source.

As do natural sounds in our universe, so why would we want something different from our (not so perfect) speakers?

I tried to get that minimum-phase timing at the listening position by using enough room treatment and a pair of full-range arrays plus EQ/DSP. I've listened to an entirely linear-phase correction as well as to phase following the band-pass behaviour at that spot. I definitely prefer the latter, acting as a minimum-phase band-pass device.

Some songs just didn't sound right with a complete linear-phase correction: a bit pushed, unnatural, at least to my perception. It's both a feeling and a listening experience, and if both those perceptual tools match, it just clicks into place.

Do as you wish though, you're free to make that choice for yourself.

I can't think of a single reason why any instrument wouldn't follow minimum phase, except when it has been manipulated.

Hi fluid and wesayso, thanks for the comments... good food for thought.

fluid, I think you are spot on with the observation that a speaker with flat amplitude response from 20 Hz to 20 kHz, or rather flat through audibility, satisfies both minimum and linear phase. I think it more than satisfies... I think in such a case minimum phase equals linear phase.

(This of course assumes there are no response anomalies across the audible spectrum. And I guess it's appropriate to frame rolloff questions in terms of low frequencies only.)

The thing I keep coming back to is that linear phase in a speaker does not mean the recording gets altered from minimum to linear phase. It just means the recording will be reproduced exactly as recorded, doesn't it?

Whatever minimum-phase rolloff is in the recording will be reproduced exactly as is by a linear-phase speaker system, I think.

If we add an additional degree of minimum-phase rolloff via our speaker tuning, aren't we doubling up the rolloff?

I guess if the recording was mastered listening to monitors that had rolloff, it might sound best if we could match the rolloff of the monitors used. Or less or no rolloff, if it was mastered on headphones or on the new breed of linear-phase monitors that are showing up in pro circles.

As far as natural sounds having low-frequency rolloff... wow, I wish I could wrap my head around what's going on. I've been thinking... how do you even ascertain what the phase response of a natural sound is? What do you compare it to?

I can visualize that a natural sound has some combination of frequencies that originate at particular times, but is that timing of origination due to limited bandwidth? Doesn't seem so... seems like it's due to the nature of the origination, and not a lack of bandwidth. Does a lion's roar lack bandwidth and hence have group delay rolloff? I dunno...

Anyway, like you guys said... round and round and never be right.

So if I'm understanding this right:

In nature, any “sound” is a vibration of something. Something is vibrating to make the sound.

It could be anything. Whatever frequencies these things vibrate at, for them to become louder the amplitude has to increase, which means a longer duration between cycles, which suggests minimum phase.

Even in a complex signal, like hitting a stick on an object where two things vibrate as a result of an energy transfer, the vibrations are bigger with more force, and more sound is created as the duration between peaks is longer; again, minimum phase.

So, to reproduce such things faithfully, it makes sense that the system should be free from any artifacts of its own across the entire spectrum, whatever types of signals are given to it. That's a simplistic way of saying it.

In nature it's not possible to say or claim whether something has phase rolloff or not, as that's contrary to what we use as a standard. You have to look at the ends in which the thing was vibrating.

Suppose a stick is beaten across a rock, which is its final end. The stick will vibrate as a result of you hitting it, with no scientific analysis done on how the stick was hit. Its end is that it stops vibrating, resumes whatever shape is left afterwards and continues its life as a stick.

So with regard to the natural rolloff of a loudspeaker, it seems to me at least that you would want to correct anything that would be heard, so that it has a flat phase and magnitude. If the rolloff is part of what you hear, then it too should be made flat.

If the loudspeaker falls short of the spectrum and rolls off early, I think it makes sense to look at which end you are measuring. It could give us a better look at what we're trying to do if we take into account:

- how we look at graphs,
- what dictates to us what is or isn't linear phase, and how we get there (an impulse, perhaps),
- the characteristics by which we translate those signals.

We view these things in the electro-mechanical-acoustical logarithmic fashion (e.g., as the high-frequency band gets louder, the phase moves forward electrically). So it makes some sense to me not to linearize the low side but to linearize the high side, to keep continuity in how we read these graphs.

Or am I way off base?

Re: natural sounds

Keep in mind that they may be represented by a Fourier series. Thinking of musical tones this way is especially helpful: a tone is the sum of its fundamental and harmonics, weighted by complex coefficients that carry both magnitude and phase information. Pick something simple like a square wave and play with rePhase's paragraphic phase equalization. You can both see and hear the effects of phase distortion.
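The square-wave experiment suggested above can also be tried numerically. The sketch builds a square wave from its odd harmonics. It then shifts the phase of every harmonic above the fundamental by the same amount, without touching the amplitudes. The magnitude spectrum stays identical while the waveform changes shape. The 100 Hz fundamental, 25 harmonics and 90-degree shift are arbitrary choices, and this is not what rePhase does internally.

```python
import numpy as np

fs = 48000
f0 = 100.0                      # fundamental, chosen so harmonics fall exactly on FFT bins
t = np.arange(fs // 10) / fs    # 100 ms = 10 full periods


def square_from_harmonics(shift_deg=0.0, n_harmonics=25):
    """Fourier-series square wave; every harmonic above the fundamental is phase-shifted."""
    x = np.zeros_like(t)
    for k in range(1, 2 * n_harmonics, 2):          # odd harmonics 1, 3, 5, ...
        shift = np.radians(shift_deg) if k > 1 else 0.0
        x += (4 / np.pi) * np.sin(2 * np.pi * k * f0 * t + shift) / k
    return x


original = square_from_harmonics(0.0)
shifted = square_from_harmonics(90.0)

same_magnitudes = np.allclose(np.abs(np.fft.rfft(original)), np.abs(np.fft.rfft(shifted)), atol=1e-6)
print(same_magnitudes, original.max().round(3), shifted.max().round(3))
```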

Re: minimum-phase rolloff at the high and low ends of the range

I think that has more to do with reducing the number of taps required to reproduce the filter characteristic and/or reducing pre-ringing than with the accuracy of reproduction.
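Here are rough numbers for the tap-count side. An FIR filter of N taps at sample rate fs is N/fs seconds long and has an FFT bin spacing of fs/N, a rough indicator of how finely it can shape the low end. The helper below does that arithmetic for the 4096 and 6144 taps at 48 kHz mentioned later in this thread, with 65536 for comparison.

```python
def fir_stats(n_taps, fs=48000):
    """(length in ms, FFT bin spacing in Hz) for an FIR filter of n_taps at sample rate fs."""
    return 1000 * n_taps / fs, fs / n_taps


for taps in (4096, 6144, 65536):
    length_ms, spacing_hz = fir_stats(taps)
    print(f"{taps:>6} taps @ 48 kHz: {length_ms:7.1f} ms long, {spacing_hz:6.2f} Hz bin spacing")
```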

Exactly, if the bandwidth is large enough the two end up being the same.

fluid, I think you are spot on with the observation that a speaker with flat amplitude response from 20 Hz to 20 kHz, or rather flat through audibility, satisfies both minimum and linear phase. I think it more than satisfies... I think in such a case minimum phase equals linear phase.

I agree with the phase part and I see the point you are making, but I don't think you are looking at the whole picture. The problem comes when you try to keep the phase flat but let the amplitude fall. You fix one problem and create another. Flat phase without flat amplitude is just the flip side of your idea of adding group delay to a recording from a speaker; two wrongs don't make a right.

The thing I keep coming back to is that linear phase in a speaker does not mean the recording gets altered from minimum to linear phase. It just means the recording will be reproduced exactly as recorded, doesn't it?

The recording won't be reproduced exactly if the phase is flat and the amplitude rolls off. It will if the phase and amplitude are flat.

If you can't make the phase and amplitude flat you are left with a trade off and you have to pick your poison.

Maybe, but how many different speakers was that recording listened to and tweaked on before it got to you? Which one do you pick: the last one in the chain at mastering, whose engineer had no involvement in the recording process, the mix engineer's, or the tracking engineer's...

I guess if the recording was mastered listening to monitors that had rolloff, it might sound best if we could match the rolloff of the monitors used. Or less or no rolloff, if it was mastered on headphones or on the new breed of linear-phase monitors that are showing up in pro circles.

There is also a difference between something that has had its phase corrected in an anechoic environment and something that has its phase corrected at the listening position, as wesayso promotes.

A simple way is to record it and have REW generate a minimum-phase version, valid at the point where the microphone was placed.

As far as natural sounds having low-frequency rolloff... wow, I wish I could wrap my head around what's going on. I've been thinking... how do you even ascertain what the phase response of a natural sound is?

I can visualize that a natural sound has some combination of frequencies that originate at particular times, but is that timing of origination due to limited bandwidth? Doesn't seem so... seems like it's due to the nature of the origination, and not a lack of bandwidth. Does a lion's roar lack bandwidth and hence have group delay rolloff? I dunno...

Anyway, like you guys said... round and round and never be right.

The two mechanisms aren't separate. There are timing variations due to the times at which different parts of the instrument are excited, i.e. the finger presses the key, then the hammer strikes the string. It takes time for the sound to move through the parts or chambers of an instrument. Same with a lion's roar: the vocal cords resonate, and the mouth and lungs act like resonant chambers, changing the sound and amplifying it. The chambers will have rolloff. No natural sound has unlimited bandwidth.

The phase will follow the amplitude response and group delay will be there. The sampling theorem shows that you can recreate the input if the sampling frequency is high enough, i.e. more than twice the highest frequency present. If something only produces sound in a limited range, increasing the bandwidth won't change the sound. The change will come if you limit the bandwidth below where the sound is being produced, like in the graphs BYRTT showed earlier.

Exactly, if the bandwidth is large enough the two end up being the same.

1) I agree with the phase part and I see the point you are making, but I don't think you are looking at the whole picture. The problem comes when you try to keep the phase flat but let the amplitude fall. You fix one problem and create another. Flat phase without flat amplitude is just the flip side of your idea of adding group delay to a recording from a speaker; two wrongs don't make a right.

2) A simple way is to record it and have REW generate a minimum-phase version, valid at the point where the microphone was placed.

3) The phase will follow the amplitude response and group delay will be there. The sampling theorem shows that you can recreate the input if the sampling frequency is high enough. If something only produces sound in a limited range, increasing the bandwidth won't change the sound. The change will come if you limit the bandwidth below where the sound is being produced, like in the graphs BYRTT showed earlier.

Good stuff fluid

1) Oh, I also totally agree that you can't have flat phase and magnitude rolloff.

Sorry if I ever gave the impression I linearize phase past flat magnitude response. Heck, I've never used more than 6144 taps (at 48 kHz) and have currently dropped down to 4096 taps on all my designs.

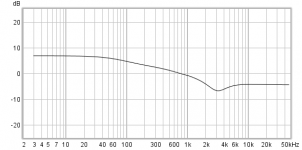

The trace below shows the normal phase lag on one of my favorite sub designs, a push-push slot-loaded double 18" reflex. Green is raw; blue is processed, obviously.

2) Natural sound recording and generating transfers?... I didn't know REW could do that. Have you tried it; does it work well?

3) Yeah, I get that as long as the reproduction system's bandwidth exceeds the natural or recorded sound's bandwidth, all is good. And I get that there are components of a natural sound that propagate from different start times, and with different frequency spectra.

What I don't get is how that pragmatically matters to reproduction.

It seems to me that we build a speaker for how low we want the response to go, and then linearize both magnitude and phase all the way down to where the low-frequency magnitude rolloff begins.

I'm not sure how you could linearize phase past the magnitude rolloff anyway. Can grillions of taps do that?

I get the sense that maybe we're all close to saying the same thing, once we fully understand each other...?

Graphs can help a lot because it is easy to misunderstand the terms people are using.

Good stuff fluid

1) Oh, I also totally agree that you can't have flat phase and magnitude rolloff.

Sorry if I ever gave the impression I linearize phase past flat magnitude response.

Sorry if I gave that impression. REW can't record directly, but it should be possible to import an impulse response of the sound, or import a file as a frequency response with magnitude and phase. REW can then generate a minimum-phase version to see if there is any excess phase. I haven't tried it.

2) Natural sound recording and generating transfers?... I didn't know REW could do that. Have you tried it; does it work well?
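The idea above is to import an impulse and look for excess phase. The sketch below uses the standard real-cepstrum (homomorphic) method to build a minimum-phase spectrum with the same magnitude as the impulse. It then subtracts that phase from the measured phase. This illustrates the technique; it is not REW's code. The 5-sample impulse is hypothetical.

```python
import numpy as np


def minimum_phase_spectrum(ir, n_fft=4096):
    """Return (H, H_min): spectrum of ir and the minimum-phase spectrum with the same magnitude.

    Uses the real-cepstrum (homomorphic) method; n_fft must be even and longer than ir.
    """
    H = np.fft.fft(ir, n_fft)
    log_mag = np.log(np.maximum(np.abs(H), 1e-12))  # floor avoids log(0)
    cepstrum = np.fft.ifft(log_mag).real
    fold = np.zeros(n_fft)  # keep c[0], double positive quefrencies, drop negative ones
    fold[0] = 1.0
    fold[1 : n_fft // 2] = 2.0
    fold[n_fft // 2] = 1.0
    H_min = np.exp(np.fft.fft(cepstrum * fold))
    return H, H_min


def excess_phase_deg(ir, n_fft=4096):
    """Measured phase minus minimum phase, from 0 Hz to Nyquist, in degrees."""
    H, H_min = minimum_phase_spectrum(ir, n_fft)
    excess = np.unwrap(np.angle(H)) - np.unwrap(np.angle(H_min))
    return np.degrees(excess[: n_fft // 2 + 1])


# Hypothetical impulse: a minimum-phase pair of taps that arrives 3 samples late.
ir = np.array([0.0, 0.0, 0.0, 1.0, 0.5])
excess = excess_phase_deg(ir, n_fft=1024)
print(excess[:4].round(2))  # falls linearly with frequency: the excess is just the 3-sample delay
```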

I don't know that it does matter, if the rolloff is low enough.

What I don't get is how that pragmatically matters to reproduction.

It seems to me that we build a speaker for how low we want the response to go, and then linearize both magnitude and phase all the way down to where the low-frequency magnitude rolloff begins.

Easily, and with not many taps if you correct phase only. For example, in rePhase on the filters linearization tab you can select a box rolloff to compensate; one of the sealed ones works well for this. Don't change the magnitude, just the phase, and see how few taps you need to get a perfect match. I tried it with 4096 and it was exactly the same as with 65536. Phase manipulation by itself does not need that many taps. If you use IIR EQ to correct magnitude, you could do almost any phase correction with your 4096 taps.

I'm not sure how you could linearize phase past the magnitude rolloff anyway. Can grillions of taps do that?
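Here is a rough sketch of the kind of phase-only experiment described above. It builds an FIR with flat magnitude whose phase cancels a sealed-box-style 2nd-order high-pass. The filter is made by frequency sampling with NumPy's inverse real FFT, plus a bulk delay of half the filter length. The 45 Hz and Q 0.83 values are borrowed from the sub discussed later in this thread. The design method is an assumption and not how rePhase builds its filters.

```python
import numpy as np


def sealed_box_response(freqs_hz, f0=45.0, q=0.83):
    """Analog 2nd-order high-pass: a simple model of a sealed-box rolloff."""
    s = 2j * np.pi * np.asarray(freqs_hz, dtype=float)
    w0 = 2 * np.pi * f0
    return s**2 / (s**2 + (w0 / q) * s + w0**2)


def phase_only_correction(n_taps=4096, fs=48000, f0=45.0, q=0.83):
    """FIR with flat magnitude whose phase cancels the box phase, plus n_taps/2 bulk delay."""
    freqs = np.fft.rfftfreq(n_taps, d=1 / fs)
    box_phase = np.angle(sealed_box_response(freqs, f0, q))
    box_phase[0] = np.pi  # DC limit of a 2nd-order high-pass phase (+180 degrees)
    bulk_delay = n_taps // 2
    target = np.exp(-1j * box_phase) * np.exp(-2j * np.pi * freqs * bulk_delay / fs)
    return np.fft.irfft(target, n_taps)


h = phase_only_correction(4096)
print(len(h), int(np.argmax(np.abs(h))))  # main peak sits at the bulk delay
```

Running it again with `n_taps=65536` is an easy way to compare a short and a long version. The low-frequency part of the correction shows up as pre-ringing ahead of the main peak, which is the price of linearizing the box phase.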

I think so.

I get the sense that maybe we're all close to saying the same thing, once we fully understand each other...?

fluid, thanks for this ongoing dialog and your posts... I've never been able to grasp what BYRTT and wesayso were saying about their low-end phase preference until now.

@ BYRTT and wesayso, sorry it took me so long to see what you guys were saying about preferring minimum phase down low. I do too, below flat response.

It just never crossed my mind that anyone would try to linearize phase below the frequency where flat sub response ends.

And that led me to misunderstanding, thinking since you preferred minimum phase over linear phase down low, that you were saying you preferred minimum phase even up into frequencies of flat response. But now I see

Okay, so not to sound like “that guy”:

In the case of my box, which rolls off at 45 Hz but has room gain down below 20 Hz:

Do I add the box linearization or not?

Or do I simply just EQ flat (whatever the response is), call it done, and leave only the filter linearization?

Thanks in advance

No box linearization for the Q 0.83 @ 45 Hz stopband; only linearize the sub's LP filter :)

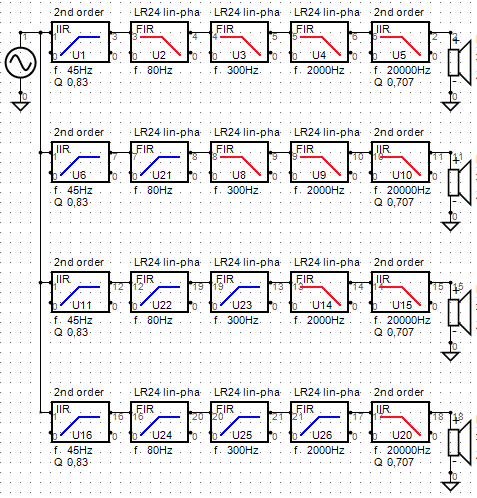

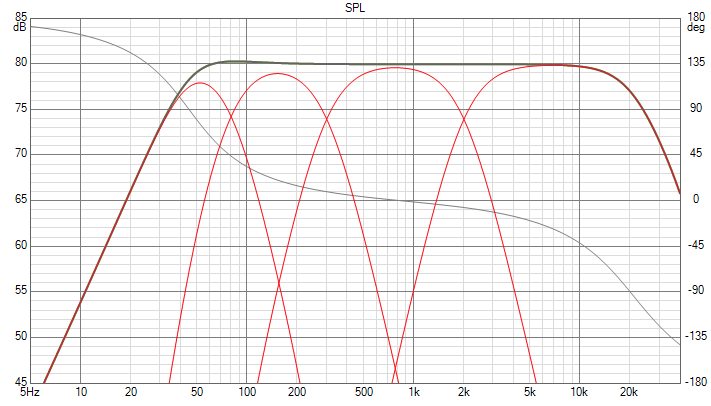

Here is a block diagram example of a 4-way system. I used your numbers for the sub because you gave them; the rest is just an example. As discussed some pages back, to prevent destructive ripple, calculate the final target slope per transducer as a cascade that includes the neighboring transducers' slopes plus the stopbands, exactly as in the block diagram. As you can see, the stopbands are minimum phase and not linearized, so the true system sum ends up as a minimum-phase curve, as if it were a single-point, wide-band transducer running from 45 Hz (Q 0.83) to 20 kHz (Q 0.707).
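As a minimal numpy sketch of one way to read the cascade idea (an interpretation, not BYRTT's exact recipe): the crossover slopes are zero-phase LR4 magnitudes, whose low and high parts sum to exactly 1 (a real linear-phase FIR would add a common bulk delay, omitted here). The crossover points 80/400/3000 Hz are hypothetical, while the shared minimum-phase stopbands use the thread's 45 Hz Q 0.83 high-pass and 20 kHz Q 0.707 low-pass. Each way carries the high-pass slopes of the ways below it, and every way carries the same stopbands, so the sum collapses to the stopbands alone.

```python
import numpy as np

def hp2(f, f0, q):
    """2nd-order high-pass, minimum phase (stopband model, e.g. a sealed box)."""
    s = 2j * np.pi * np.asarray(f, dtype=float)
    w0 = 2 * np.pi * f0
    return s**2 / (s**2 + s * w0 / q + w0**2)

def lp2(f, f0, q):
    """2nd-order low-pass, minimum phase."""
    s = 2j * np.pi * np.asarray(f, dtype=float)
    w0 = 2 * np.pi * f0
    return w0**2 / (s**2 + s * w0 / q + w0**2)

def lr4_linear_phase(f, fc):
    """Zero-phase LR4 magnitudes (bulk delay omitted); low + high == 1 at every f."""
    x4 = (np.asarray(f, dtype=float) / fc) ** 4
    return 1.0 / (1.0 + x4), x4 / (1.0 + x4)

def way_targets(f, crossovers, stop_hp=(45.0, 0.83), stop_lp=(20000.0, 0.707)):
    """Per-way targets = cascaded crossover slopes x the shared stopbands."""
    stop = hp2(f, *stop_hp) * lp2(f, *stop_lp)  # minimum phase, shared by all ways
    targets = []
    carry = np.ones(len(f))  # product of the high-pass slopes below this way
    for fc in crossovers:  # crossovers in ascending order
        low, high = lr4_linear_phase(f, fc)
        targets.append(carry * low * stop)
        carry = carry * high
    targets.append(carry * stop)  # top way keeps every high-pass slope
    return targets, stop

f = np.logspace(1, np.log10(30000), 500)
targets, stop = way_targets(f, [80.0, 400.0, 3000.0])  # hypothetical crossovers
print(np.max(np.abs(sum(targets) - stop)))  # rounding-error level: sum == stopbands
# Leaving the 45 Hz stopband out of the upper ways' targets breaks the match near 80 Hz
upper = sum(t / hp2(f, 45.0, 0.83) for t in targets[1:])
print(np.max(np.abs(targets[0] + upper - stop)))
```

The second print shows why the stopband belongs in every way's target: without it, the ways disagree in phase around the sub crossover and the sum no longer matches the single-transducer curve.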

Curves for the block diagram above. The low-end stopband will extend a lot further down in your car, so find out what that (probably somewhat ragged) curve is and smooth it as best you can. You will probably also need a high-pass in the 15-20 Hz area to keep it musical, or use a Linkwitz transform to move the Q 0.83 @ 45 Hz stopband up or down to whatever stopband blends more smoothly with the car cabin. All room-gain corrections should be global and minimum phase.
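A small sketch of the Linkwitz transform mentioned above, assuming the box is the 2nd-order high-pass 45 Hz / Q 0.83 from the thread and picking 25 Hz / Q 0.707 as a hypothetical new stopband. The transform divides out the box's 2nd-order section and multiplies in the new one; because both are left-half-plane biquads, the result stays minimum phase.

```python
import numpy as np

def quad(f, f0, q):
    """s^2 + s*w0/q + w0^2 evaluated at s = j*2*pi*f (one 2nd-order section)."""
    s = 2j * np.pi * np.asarray(f, dtype=float)
    w0 = 2 * np.pi * f0
    return s**2 + s * w0 / q + w0**2

def highpass2(f, f0, q):
    """2nd-order high-pass built from the same section."""
    s = 2j * np.pi * np.asarray(f, dtype=float)
    return s**2 / quad(f, f0, q)

def linkwitz_transform(f, f0, q0, fp, qp):
    """Cancel the box section (f0, q0) and replace it with (fp, qp)."""
    return quad(f, f0, q0) / quad(f, fp, qp)

f = np.logspace(0, 3, 400)  # 1 Hz to 1 kHz
box = highpass2(f, 45.0, 0.83)                       # stopband from the thread
lt = linkwitz_transform(f, 45.0, 0.83, 25.0, 0.707)  # hypothetical new target
print(np.allclose(box * lt, highpass2(f, 25.0, 0.707)))
# Low-frequency boost tends to (f0/fp)^2, here 3.24 (about +10.2 dB)
print(20 * np.log10(abs(linkwitz_transform(0.0, 45.0, 0.83, 25.0, 0.707))))
```

Note the boost below the new corner: moving the stopband down asks the driver for roughly (f0/fp)^2 more gain at the bottom.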

Thank you!!

Aah the light bulb moment finally!

I think I get it. Pages back I thought I got it, but now it’s really making sense, especially with what you, fluid and mark100 were saying earlier.

Now I get the cascade! I never thought about the ripple like that before; I’ve always seen it as an artifact that I wasn’t smart enough to solve. I tried it with an old measurement and it’s stellar. Yes, it’s starting to make sense. Can’t wait to try this!

Thank you.


The only thing I think I’ll have to account for is that I like the way the stopband sounds in certain drivers’ rolloff. The blending is desirable, especially in a car, to help build a soundstage and its components, hide the location of the drivers and promote stage height. Some of these “problems” are, I think, a benefit in a car, where you’re trying to get the sound off the floor and have it perceived as coming from higher up and farther back than the actual driver locations.

I think I’m going to have to experiment, maybe raise or lower crossover points in areas where I want or don’t want interaction in the stopbands on each driver.

Ultimately I’m going to have to learn this out of the car so I can get the hang of it, then try to adapt it and make it work.

Does anyone know how we perceive sound height? What amplitude/phase/reflection characteristics help make sound appear higher up than it actually is? Or, what is the mechanism by which we hear height?

Links to any reading material are welcome, please.

I basically just fiddle with delays between pairs, maybe +/- 0.3 ms, and listen for the stage to rise. That has worked quite well, but I’m definitely changing the overall impulse in ways that some may think look like a mess.
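As a rough sanity check on what a ±0.3 ms tweak means physically, here is a small arithmetic sketch. The 48 kHz sample rate, the 343 m/s speed of sound and the test frequencies are assumptions, not values from the post, and nothing here claims anything about how height is perceived.

```python
SPEED_OF_SOUND = 343.0  # m/s, roughly room temperature (assumed)

def describe_delay(delay_ms, fs=48000, freqs=(250.0, 1000.0, 3000.0)):
    """Return (samples, path length in cm, {frequency: phase rotation in degrees})."""
    t = delay_ms / 1000.0
    samples = t * fs
    path_cm = t * SPEED_OF_SOUND * 100.0
    rotation = {f: 360.0 * f * t for f in freqs}
    return samples, path_cm, rotation

samples, path_cm, rotation = describe_delay(0.3)
print(round(samples, 1), round(path_cm, 1), {f: round(d) for f, d in rotation.items()})
```

So 0.3 ms is about 14.4 samples or about 10.3 cm of path, but the phase rotation grows with frequency (about 27 degrees at 250 Hz versus 108 degrees at 1 kHz), which is why a small delay change between a pair reshapes the overlap around their crossover much more than the low end.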

It would be really cool if I could learn how to dial in the midrange (roughly 250 Hz to 3 kHz) to have good height, then tailor the other bands around it and get a smoother overall shape.

I think knowing how we hear height is what will help me most to figure this out.

I promise this is my last question for at least 4 weeks; I’ll be playing with BYRTT’s methods for at least a month before I try something else.

Thanks in advance.

Hi Oabeieo, in reality these principles are easier said than done. A 2-way is probably an okay workload, but 3- or 4-ways are a big mouthful of work to get the acoustics right, with tons of measurements and quality checks against the textbook targets.

I had the target curve below lying around :) and as far as I remember it's a car-cabin house curve. I've attached it below in a zip folder, but please research whether its true use is for a car cabin. House curves can be dialed in as a final step using global EQ, but they can also be incorporated into the per-passband target curves. A tip for creating one: in rePhase, set up the string of all the cascaded filters and save it as an .frd file. When imported into REW, you now have a target to overlay on live measurement sweeps. If you want to incorporate the attached house curve into a per-passband target curve, go to the "ALL SPL" tab, open "Controls" in REW, and multiply the two curves with each other (A x B).
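For anyone who wants to do the same A x B step outside REW, here is a hedged sketch. It assumes .frd files are plain text with three columns (frequency in Hz, magnitude in dB, phase in degrees) and that lines starting with `*` or `#` are comments; a house curve without a phase column would need a zero column added. Multiplying two responses means adding their dB magnitudes and adding their phases; B is interpolated onto A's frequency grid in log-frequency, which is an assumption and may differ from what REW does.

```python
import io
import numpy as np

def load_frd(source):
    """Read an FRD curve from a path or file object: (freq_hz, mag_db, phase_deg)."""
    data = np.loadtxt(source, comments=("*", "#"), ndmin=2)
    return data[:, 0], data[:, 1], data[:, 2]

def multiply_curves(a, b):
    """A x B on A's frequency grid: magnitudes add in dB, phases add in degrees."""
    freq_a, db_a, ph_a = a
    freq_b, db_b, ph_b = b
    logf_a, logf_b = np.log10(freq_a), np.log10(freq_b)
    db_b_i = np.interp(logf_a, logf_b, db_b)
    ph_b_unwrapped = np.degrees(np.unwrap(np.radians(ph_b)))  # avoid 360-degree jumps
    ph_b_i = np.interp(logf_a, logf_b, ph_b_unwrapped)
    return freq_a, db_a + db_b_i, ph_a + ph_b_i

# Usage with two tiny in-memory curves (hypothetical values)
target_a = load_frd(io.StringIO("* crossover target\n20 -6 90\n1000 0 0\n20000 -3 -45\n"))
house_b = load_frd(io.StringIO("20 6 0\n1000 0 0\n20000 -2 0\n"))
freq, db, phase = multiply_curves(target_a, house_b)
print(db, phase)
```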

Thanks again BYRTT,

Wow, that target is almost identical to my Dirac Live target that sounds the best in the car. I’m no longer using Dirac Live and have grown out of it; in most aspects I can beat what it does by working manually in the car with rePhase. (I could go on and on about why I love Dirac, but the few things I didn’t like were dealbreakers for me, and I like having freedom over the impulse so I can break the rules as I wish.)

That target is pretty much exactly what I’m using, especially with horns in the car that play down to 1 kHz and have a two-axis flare that Dirac has a hard time resolving.

You guys have no idea how much of a help you are; I really appreciate everyone’s time. My car is now literally a one-in-10-million car to listen to. It’s very special and I have tons and tons of hours in it. It’s a lot of fun.

rePhase has taken it that one step beyond Dirac in tonality and stage height that I just couldn’t get with kick-panel 8s and under-dash horns: the stage is above the dash, with vocals nicely centered and pushed far back. Thank you, rePhase!

I tried that crossover setup today, my second go at it from pages back, and this time I got it working a little better. The midrange sounds much better and more in harmony with the bass. Thank you, I like it a lot.

As far as sound height goes, I have scoured the web and can’t find any articles. Getting my stage high was trial and error; I want to learn how to go straight to it, since I switch gear a lot.

Hello, you may be interested in trying this:

Online LEDR™ Sound Test | Listening Environment Diagnostic Recording Test
