Second that. I've used it before but I'm scrambling every time I open it up. Write something man!

Yes, I know I should write something but...

I do this software for fun, and writing documentation is not exactly my idea of fun.

...And why should I bother to write something when the miniDSP team has already written a simple yet thorough tutorial that covers almost all possible uses of rePhase (with an emphasis on hardware convolution engines, of course):

The rePhase FIR tool | MiniDSP

I will put that link in a good place on the project page on SourceForge...

I've been testing this again tonight. Got good measurements and an impulse that seems correct. Been switching it in and out.

On my system it seems very dependent on the recording whether I hear it or not. Hard to predict. When I do hear it, it's always a similar effect. The linear phase gives me a stronger center phantom image and tends to move voices forward and slightly up. Space seems a bit better defined. If there are drums they seem more dynamic, live.

On some recordings I don't hear it at all. I've tried jazz, pop, rock, dance, classical, opera and lounge. Opera and classical seem to consistently reveal the difference, other genres are hit or miss. I can understand why some people say phase isn't audible, or at least not noticeable. It's not night and day, but sometimes a nice improvement. Further listening is in order.

Hi Pano,

I am not really sensitive to imaging and stereo illusion (or maybe I am too sensitive to comb filtering?... I prefer to think about it that way).

Most differences I have heard were on percussive instruments.

Would you mind sharing references of some of these particularly revealing songs?

Great to hear people have similar experiences. Did you try just plain XO phase linearisation or did you make the bass rolloff also linphase? Further, any results when switching absolute polarity? These things matter as well, IMHO.

And as you say, it's maybe not "mission critical" but for sure a worthwhile improvement. Thumbs up for POS for this great piece!

I'll look up the tracks I was listening to when I get home. Should be able to remember them.

I don't think I tried flipping absolute polarity, that's a good idea. I did do box, crossover and then straightening by hand for a decently linear phase. Did everything possible to phase, while leaving the amplitude the same.

When the measurement is taken in HOLMImpulse and exported with the phase unwrapped, all I see is a phase that is falling, falling, falling. No big bumps or reversals. Correcting for the box phase fixes the bottom end, then correcting for the crossover gets a pretty flat phase. Some work is then needed by hand. Takes some practice, but it's not too hard.


Thomas,

What is the total latency (delay) created by flattening phase down to, say, 100 Hz, 50 Hz, 25 Hz, or 12.5 Hz?

If the latency is dependent on processing speed, could you give a range for what could be expected with various computers?

Thanks, and sorry if the question has already been answered.

Art

Hi Art,

Well, it depends

It is "easier" (i.e. requires fewer taps and less delay) to linearize a closed-box phase shift than a reflex one. In general, the "sharper" the phase shift, the more taps (and delay) you will need.

The centering of the impulse also enters into play. The only way to really know what delay you can expect is to try with different settings (taps, energy centering or not, etc.).

A nice feature that could be added would be to let the user define a maximum (or precise) delay and see what can be obtained with that... maybe for a future release.


Thomas,

OK, the Ivan Beaver response, I deserved it

I can understand that correcting phase for headphones with only a 100 degree change from 20 Hz to 20 kHz would be different from correcting my PA speakers.

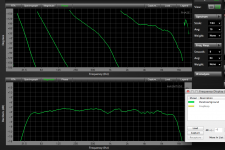

So, specifically, what would the total latency be to flatten phase from 31.5 Hz to 16 kHz with a speaker system that exhibits 1170 degrees of rotation over that range, such as the one below?

The phase response posted already uses about 9 ms to align the tapped horn LF to the front horn mids; my concern is that for live use additional delay could become problematic.

A general answer like "an additional 3ms, or an additional 30ms" will suffice, just trying to get an idea of what order of magnitude the delay would be.

Art


Weltersys. Use rePhase to create a filter to make the phase correction. You will see when there are too few filter taps because the generated filter will not implement the desired curve.

I don't have a MiniDsp, so I can't do it myself.

If I had it, I would have simply measured the latency, but I don't, hence the questions.

Art, the more taps (and delay) you allow, the closer to target the correction will be.

There is no clear response to your question: you would need to try for yourself using your measurement.

You don't need anything else to try it: just import your measurement into rePhase (exported as frequency, amplitude and phase columns from your measurement software) and apply your correction. Then gradually reduce the number of taps, regenerating the correction each time. rePhase will show you the implied delay after each generation (the final delay will be this delay plus the processing and buffering delay of your convolution engine, which will be around 2 ms for an openDRC I think, and of course much more with a PC and FFT convolution...). If delay is your only constraint then you can choose "middle" as the impulse centering option, for consistent results (the delay will always be half the number of samples of your impulse).
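
To put rough numbers on the "middle" centering case described above: the implied filter delay is just half the impulse length divided by the sample rate. The sketch below is only that arithmetic. The function names are made up for illustration, and the 2 ms engine figure is simply the openDRC guess from this post, not a measured value or rePhase output.

```python
def middle_centering_delay_ms(taps: int, sample_rate_hz: float) -> float:
    """Delay of a FIR whose peak sits in the middle of the impulse."""
    return (taps / 2) / sample_rate_hz * 1000.0


def total_latency_ms(taps: int, sample_rate_hz: float,
                     engine_latency_ms: float = 2.0) -> float:
    """Filter delay plus an ASSUMED processing/buffering delay of the engine."""
    return middle_centering_delay_ms(taps, sample_rate_hz) + engine_latency_ms


if __name__ == "__main__":
    # Tap counts chosen arbitrarily to show how the delay scales.
    for taps in (512, 1024, 2048, 4096, 8192):
        delay = middle_centering_delay_ms(taps, 48000)
        print(f"{taps:5d} taps @ 48 kHz: {delay:6.2f} ms filter delay")
```

Halving the number of taps halves the delay, which is why trading away some correction at the very bottom end can pay off quickly for live use.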

If you find your correction requires too much delay (taps) you can give up some ultimate correction down low (BR or subsonic filter for example) and see what you get.

If you are not comfortable with trying rephase you can upload your impulse here and I will give it a go when time permits.


I use a Mac, and it is not comfortable with rePhase.

I did not take an impulse response when I tested the PA system, so unfortunately don't have one to export.

I don't understand why you can't give a ballpark estimate for latency in the specific example given in post #527, or a range of latency from "worst case" (say 7200 degrees of phase rotation from 20 Hz to 20kHz) to "best case" of 200 degrees of phase rotation over the same frequency response.

Other than the amount of phase rotation/lag between LF and HF, and the LF and HF frequencies, what other information is required for you to estimate the latency time?

If you could post an example of any typical speaker's phase, along with the latency required to correct it from 20 Hz to 20 kHz, perhaps an estimate for other speakers' corrections could be inferred from that information.

Ok I will try to compile a few examples.

The limiting factor is not the total phase shift, but the lowest and sharpest one.

For example in a typical loudspeaker linearizing the BR will require a given number of taps (for a given accepted accuracy), and almost any other crossover higher in frequency could get linearized "for free" with that same number of taps (and delay).


That is good to know, looking forward to your examples.

An eyeballed baseline minimum is the cycle period of the lowest frequency you want to process, times the number of 360 degree rotations you need to roll back, using the tweeter as time zero. 720 deg @ 50 Hz gives 40 ms.
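
The eyeballed rule above (period of the lowest frequency times the number of full rotations) is easy to try on other figures. This is only a sketch of that rule of thumb with a made-up function name; it gives an order of magnitude, not what a generated filter will actually need.

```python
def baseline_delay_ms(lowest_freq_hz: float, rotation_deg: float) -> float:
    """Rule-of-thumb minimum delay: period of the lowest frequency
    multiplied by the number of 360 degree rotations to roll back."""
    period_ms = 1000.0 / lowest_freq_hz
    return period_ms * (rotation_deg / 360.0)


if __name__ == "__main__":
    # The example from the post: 720 degrees at 50 Hz.
    print(f"{baseline_delay_ms(50.0, 720.0):.1f} ms")
    # The PA figures quoted earlier in the thread: 1170 degrees down to 31.5 Hz.
    print(f"{baseline_delay_ms(31.5, 1170.0):.1f} ms")
```

By that same rule, the 1170 degrees down to 31.5 Hz quoted earlier would come out at roughly 100 ms, so closer to the "additional 30 ms" end of the question than the "additional 3 ms" end.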

For the work I do latency isn't critical. Typically I use 32kSample kernels @ 96kHz with time zero centered, so the delay is only 16k Samples (170ms). This is good for linphase correction (as well as system EQ) down to 30Hz or so, for a 4th order highpass system (ported box) tuned at 40...50Hz. With some compromise you might reduce kernel size a lot.

170 ms is likely too long for most PA applications, but using partitioned convolution this can be brought down at the expense of needing more computational power.
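
For anyone wondering what partitioned convolution looks like in practice, here is a minimal NumPy sketch of the uniformly partitioned overlap-save scheme. It is an illustration under my own assumptions (function name, block size), not how any particular convolution engine is implemented. As far as I understand it, partitioning shrinks the buffering latency of the engine to one short block; the delay built into the kernel itself (the position of its peak) stays where it is.

```python
import numpy as np


def partitioned_convolve(x, h, block):
    """Uniformly partitioned overlap-save convolution.

    The input is consumed `block` samples at a time, and each output
    block only needs input that has already arrived.
    """
    n_parts = -(-len(h) // block)              # ceil(len(h) / block)
    h_pad = np.zeros(n_parts * block)
    h_pad[:len(h)] = h
    # Spectrum of every kernel partition, zero-padded to 2 * block.
    H = np.fft.rfft(h_pad.reshape(n_parts, block), n=2 * block, axis=1)

    out_len = len(x) + len(h) - 1
    n_blocks = -(-out_len // block)
    x_pad = np.zeros(n_blocks * block)
    x_pad[:len(x)] = x

    fdl = np.zeros_like(H)                     # frequency-domain delay line
    prev = np.zeros(block)
    out = np.empty(n_blocks * block)
    for b in range(n_blocks):
        cur = x_pad[b * block:(b + 1) * block]
        fdl = np.roll(fdl, 1, axis=0)          # age the stored input spectra
        fdl[0] = np.fft.rfft(np.concatenate([prev, cur]))
        y = np.fft.irfft((fdl * H).sum(axis=0), n=2 * block)
        out[b * block:(b + 1) * block] = y[block:]   # keep the valid half
        prev = cur
    return out[:out_len]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal(1000)
    h = rng.standard_normal(300)
    y = partitioned_convolve(x, h, block=64)
    print(np.allclose(y, np.convolve(x, h)))
```

The result matches a plain convolution, but the engine only ever waits for 64 samples before producing output, instead of waiting for a block as long as the whole kernel. The price is one FFT pair plus a multiply-accumulate over all partitions for every short block.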

96 kHz is a waste of bandwidth. At 48 kHz you need half as many taps.

Pole count and HP corner may be looked at as determining the time for the filter to ring down to a level where it may be truncated and smoothed to zero without creating audible artifacts.
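
The "half as many taps" point follows from the kernel having to cover a given length of time: the ring-down time is set by the filter, while the tap count is that time multiplied by the sample rate. A small sketch, with made-up helper names and the 32k @ 96 kHz kernel mentioned above as the starting point:

```python
def kernel_duration_ms(taps: int, sample_rate_hz: float) -> float:
    """Time span covered by a FIR kernel."""
    return taps / sample_rate_hz * 1000.0


def taps_for_duration(duration_ms: float, sample_rate_hz: float) -> int:
    """Taps needed to cover the same time span at another sample rate."""
    return round(duration_ms / 1000.0 * sample_rate_hz)


if __name__ == "__main__":
    span = kernel_duration_ms(32768, 96000)
    print(f"32768 taps @ 96 kHz span {span:.1f} ms")
    print(f"same span @ 48 kHz: {taps_for_duration(span, 48000)} taps")
```

Note that the time span, and so the delay of a centered kernel, stays the same; only the amount of computation drops.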


I have read a lot of papers about partitioned convolution, and the suggestion that it reduces the delay is there, but I still don't understand how that is possible...

http://cnmat.berkeley.edu/system/files/attachments/main.pdf

http://netzspannung.org/cat/servlet/CatServlet/$files/221609/04_Rovaniemi99LLC.pdf

http://pcfarina.eng.unipr.it/Public/AES-113/Garcia-PrePrint5660.pdf

pos, by the way, thank you for your great work on rePhase!

