From Thesycon's documentation:

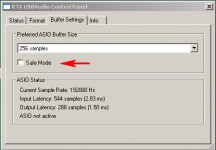

3.1.2 Safe Mode

Because the isochronous packet transfer on USB is driven by USB clock (not sample clock), the driver is not able to exactly reproduce every ASIO processing interval. The trigger events (callbacks) delivered by the ASIO driver at the beginning of each interval are subject to jitter. If an application (such as a DAW) performs extensive computations in the driver callback, the current processing interval can be extended and overlap with the next interval. In this case a drop-out in the playback path might occur because the driver is not able to deliver isochronous packets in time.

To compensate for lengthy processing performed by a DAW in the ASIO callback, the driver supports a “Safe Mode”. If safe mode is turned on then the driver tolerates that a processing interval extends and overlaps with the next interval.

If audio processing in a DAW causes high CPU usage (e.g. because many effects are used) and drop-outs occur in the playback path then Safe Mode should be used.

IIUC, safe mode allows processing of the previous ASIO frame to finish after a new frame has already started. Since ASIO flips between just two memory frames (one being processed by the software, the other being written/read by the sound device), a larger overlap will cause the soundcard's read/write DMA pointer to clash with the driver's write/read pointer, resulting in glitches anyway.

Safe mode; latency

Hi Jens,

First, thank you for your response.

So as far as DiAna is concerned, I don't have to worry about this kind of dropout, because computations in the driver callback routine are reduced to the absolute minimum (on my PC, with a buffer size of 256 samples and a sampling rate of 192kHz, it takes only 3.8µs).

BTW, I have one more question: the control panel reports a latency of 2.83+1.50=4.33ms. In reality, however, I measured a little more. Also, I don't always get the same latency after each new startup. Instead, it varies from 5.42 to 6.30ms. Why? Is this a quirk of Windows? Note that my Lynx PCI audio interfaces don't show this variability. So I guess it has something to do with how Windows deals with a USB audio stream, right?

Cheers,

E.
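A quick back-of-the-envelope check of the numbers in this post, as a minimal Python sketch. It only redoes the arithmetic from the figures quoted above; the variable names are illustrative and nothing here comes from the driver or from DiAna itself.

```python
def buffer_period_ms(frames: int, sample_rate_hz: float) -> float:
    """Duration of one ASIO buffer in milliseconds."""
    return 1000.0 * frames / sample_rate_hz

# Figures quoted in the post above.
period_ms = buffer_period_ms(256, 192_000)        # one processing interval
callback_us = 3.8                                 # time spent in the callback
callback_load = callback_us / (period_ms * 1000)  # fraction of the interval used

reported_ms = 2.83 + 1.50                         # control panel figure
measured_min_ms, measured_max_ms = 5.42, 6.30     # measured over several startups
spread_ms = measured_max_ms - measured_min_ms     # run-to-run variation

print(f"buffer period:  {period_ms:.3f} ms")
print(f"callback load:  {100 * callback_load:.2f} % of one interval")
print(f"reported:       {reported_ms:.2f} ms")
print(f"measured range: {measured_min_ms:.2f} to {measured_max_ms:.2f} ms (spread {spread_ms:.2f} ms)")
```

With the callback using well under 1 % of a processing interval, overlap into the next interval is not a concern here; the run-to-run spread stays below 1ms.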

The PCI driver talks directly to the soundcard, and the latency of starting its DMA transfer varies mostly with the scheduling capability of Windows.

The USB-audio driver talks to the core USB driver, which merges data from all other USB device drivers for devices connected to the USB controller. Even though the RTX sends/receives data every USB microframe (125us), the USB core driver accepts data in URBs, chunks with a granularity of 1ms; see "How to transfer data to USB isochronous endpoints" (Windows drivers, Microsoft Docs) and look at the table in the item "What are the restrictions on the number of packets for each bus speed". The device driver must specify when the packets submitted to the core driver should be transferred. Even when using the flag USBD_START_ISO_TRANSFER_ASAP (see the MS doc page), the core driver will schedule the submitted packets for the next 1ms block of 8 microframes.

IMO this explains why the latency varies by about 1ms: sometimes the 1ms frame starts very soon after the first URB of the stream is submitted, and sometimes the USB-audio driver has just missed the start and the first packets have to wait almost a whole 1ms.
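The frame-alignment argument can be illustrated with a toy model in Python. This is only a sketch under a loud assumption: the stream start is taken to fall at a uniformly random phase of the 1ms frame clock, and the first packets wait for the next frame boundary. The function name and the simulation are hypothetical; nothing here is taken from the actual Windows USB stack.

```python
import random

FRAME_MS = 1.0  # one 1ms block of 8 microframes

def wait_until_next_frame_ms(submit_time_ms: float, frame_ms: float = FRAME_MS) -> float:
    """Delay from URB submission to the next frame boundary (toy model)."""
    return (-submit_time_ms) % frame_ms

rng = random.Random(0)  # fixed seed so the run is repeatable

# Assumption: each stream start happens at a random phase of the frame clock.
waits = [wait_until_next_frame_ms(rng.uniform(0.0, 1000.0)) for _ in range(10_000)]

spread_ms = max(waits) - min(waits)
mean_ms = sum(waits) / len(waits)
print(f"extra wait: min {min(waits):.3f} ms, max {max(waits):.3f} ms, mean {mean_ms:.3f} ms")
```

In this model the extra start-up delay is spread over almost a full millisecond, which is consistent with the 5.42 to 6.30ms range (0.88ms spread) reported earlier in the thread.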

I'm really tired of the poor quality of the USB interfaces and drivers out there. The XMOS is definitely one of the best, but I wish a big player would come out with a bridge that has lower power consumption and free (full featured) drivers. Jens has made the most reasonable choice and I haven't had any issues with my RTX.

If I had spare time I would try to design a Thunderbolt (PCIe) interface. The problem is, who wants to write, test, and support their own drivers for all 3 major platforms? It might be interesting to try to create hardware that exposes the same registers as a widely supported PCIe chip, but there are not many of those (CMI8788, etc.) and most of them lack modern high sample rate support.

What's wrong with that USB driver? What would a constant latency on every run be beneficial for? IMO the question was just "why", not that it hurts anything. This is a measurement device, not an interactive musical instrument or a DAW interface. A latency of several hundred ms would not hurt any measurements, IMO.

IMO UAC2 is fully capable of serving the measurement device needs.

Linux supports any UAC2 samplerate/sample width/channel count. Should anything not work, a new kernel is issued every few months.

Windows - since v. 1703, Win10 has had support for UAC2 in WASAPI. The specs look OK, incl. 32bit samples (see "USB Audio 2.0 Drivers", Windows drivers, Microsoft Docs). I do not know about the max samplerate, but these guys talk about running a Topping E30 at 768kHz with WASAPI ("Topping E30 DAC - Audio Formats", Audirvana).

Unfortunately, UAC2 implicit feedback is not supported (yet), the RTX being an example of a device that uses it. The fact is that implicit-feedback devices have started to appear only more recently; previously the explicit feedback endpoint was basically the rule. Proper handling of implicit feedback made it to Linux only recently.

I am planning on some testing of the Win10 WASAPI UAC2 stack using a UAC2 gadget based on an RPi4 in the coming months.

OSX - I have not tested the maximum samplerate, but the official example in "Technical Note TN2274: USB Audio on the Mac" (192 kHz / 32-bit / 10 channels) basically maxes out the isochronous high-speed capacity (without using multiple packets per microframe). Clearly the driver can do the bitrate; it is just a question of samplerates in some enum/list. I have no OSX device to test, but I will ask someone who does and is able to handle the RPi Linux configuration for help.
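The "maxes out" remark can be checked with a few lines of Python. This sketch assumes the USB 2.0 high-speed limits of 8000 microframes per second and a 1024-byte maximum isochronous packet, with one packet per microframe; the helper name is illustrative only.

```python
MICROFRAMES_PER_SECOND = 8000      # high-speed USB: one microframe every 125us
HS_ISO_MAX_PACKET_BYTES = 1024     # max isochronous packet size at high speed

def bytes_per_microframe(sample_rate_hz: int, bytes_per_sample: int, channels: int) -> float:
    """Average payload needed per microframe for a PCM stream."""
    return sample_rate_hz * bytes_per_sample * channels / MICROFRAMES_PER_SECOND

# TN2274 example format: 192 kHz / 32-bit / 10 channels.
payload = bytes_per_microframe(192_000, 4, 10)
usage = payload / HS_ISO_MAX_PACKET_BYTES

print(f"{payload:.0f} bytes per microframe, {100 * usage:.1f} % of a single packet")
```

That format needs 960 of the 1024 bytes available in a single packet per microframe, so there is very little headroom left without going to multiple packets per microframe.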

Hi Pavel,

Indeed, it doesn't hurt, but in some cases you need to know the exact latency and/or the phase relationship between DAC input and ADC output.

BTW, there are several tools out there that measure the latency, but the results are not always consistent. The RTL utility from Oblique Audio, for example, says 5.620ms, while DiAna measures 5.6328ms (at buffer size = 256 and SR = 192kHz).

Cheers,

E.

Edmond, I understand the need for knowing exact latency, but I would assume it must be measured for every run (start of the continuous stream) anyway. Every start will have a different latency, with any type of audio device. Therefore I do not see any problem with having a latency of 5.1ms in one run and 5.9ms in another run. But maybe I am missing something.

Actually, how do you measure the latency? IMO if a timer is used in the actual user-space application, then process scheduling changes the exact moment of running the code for reading the final timestamp. Differences in tens or even hundreds of microsecs would not be surprising.

In Linux ALSA, the driver can be asked to accompany the data with a DMA or link timestamp (see "ALSA PCM Timestamping", The Linux Kernel documentation). The link timestamp is optional (available if the driver can get it for its hardware); the DMA timestamp is obtained by the driver at the IRQ callback. IMO this is as precise as it can get, yet it still varies, as DMA and servicing IRQs have delays too.

Latency

Hi Pavel

No, you're not missing anything (I didn't say I have problems with variable latencies; those were only remarks).

I measure the latency by comparing the phase of the DAC input and the ADC output at two distinct frequencies, normally around 997Hz. In my applications it's the phase difference that counts, because in this way the phase lead or lag from filters (or whatever) in the whole DAC-ADC chain is also taken into account.

[...]

Cheers,

E.
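The two-frequency phase method described above can be sketched in Python as follows. This is not DiAna's implementation, just a minimal illustration assuming the delay follows from the phase slope, tau = -(phi2 - phi1) / (2*pi*(f2 - f1)). The second test frequency (1009 Hz) and the function name are hypothetical, and the two frequencies must be close enough that the phase difference stays within half a cycle.

```python
import math

def delay_from_phases(f1_hz: float, phi1_rad: float, f2_hz: float, phi2_rad: float) -> float:
    """Estimate delay (seconds) from output-minus-input phase at two frequencies."""
    dphi = phi2_rad - phi1_rad
    # Wrap the phase difference to (-pi, pi]; valid while |f2 - f1| < 1 / (2 * delay).
    dphi = math.atan2(math.sin(dphi), math.cos(dphi))
    return -dphi / (2.0 * math.pi * (f2_hz - f1_hz))

def wrapped_phase_of_pure_delay(f_hz: float, delay_s: float) -> float:
    """Phase a phase meter would read for an ideal delay, wrapped to (-pi, pi]."""
    phi = -2.0 * math.pi * f_hz * delay_s
    return math.atan2(math.sin(phi), math.cos(phi))

true_delay_s = 5.6328e-3        # latency figure quoted earlier in the thread
f1, f2 = 997.0, 1009.0          # f2 is an arbitrary nearby frequency (assumption)

phi1 = wrapped_phase_of_pure_delay(f1, true_delay_s)
phi2 = wrapped_phase_of_pure_delay(f2, true_delay_s)
estimate_s = delay_from_phases(f1, phi1, f2, phi2)

print(f"estimated delay: {1000 * estimate_s:.4f} ms")
```

With real hardware the measured phases also include the lead or lag of any filters in the chain, so the result is the effective delay around the test frequency rather than a pure transport latency.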

IMO UAC2 is fully capable of serving the measurement device needs.

I agree, but I never see things like dropouts or high DPC latency in Windows with PCIe devices, and I see them occasionally across various systems with some USB Audio Class devices.

For measurement, I have a non-audio board I designed using multiple LTC2387-18s, and I'm pretty sure it would be hard to find a combination of UAC stack and drivers that works at ~15 MHz. I use an FTDI FT601 for now.

Latency

"Dear Edmond, wouldn't it be easiest to use an ADC reference channel (usually the second input of the DAQ/soundcard) in order to obtain the phase/amplitude response of a DUT independently of hardware latencies in the acquisition path?"

Indeed a good question. And yes, DiAna can operate this way. But if the fundamental is heavily attenuated and/or phase shifted when using notch filters on both channels, the only thing that is left for synchronization is the sine at the DAC input. Now the latency comes into play and a phase correction has to be applied.

There is one more reason not to use an ADC channel for synchronization: crosstalk. Believe it or not, even a tiny pure sine wave on one channel introduces harmonics on the other channel. This appears to be a nasty habit of single-chip stereo ADCs and a hindrance for ultra low THD measurements. Read here for more info on this topic.

Cheers,

E.

Yes, got your point. In case you need a reference of the stimulus for THD measurements, the second acquisition channel is useless. That is a very unique analysis implemented in DiAna.

My statement was targeted at generic transfer function measurements (derived from either stepped sine or impulse response), where ultra low THD is not a prime requirement.

For measuring electrostatic headphone amps I would like to increase the input attenuation.

Would it be good practice to add a, say, 900k resistor in series with the single-ended input? This would make a 10:1 divider with the 100k input impedance of the RTX6001. A high input impedance is desired for electrostatic amps anyway.

That will probably work fine. To fine tune it and avoid drop off at high frequencies, you could mount a small (high voltage NP0 or similar quality) capacitor in parallel with the 900k resistor, e.g. 3.9 pF or ideally around 4.1 pF.

If you mount the components inside the XLR connector you can avoid attenuation from the cable capacitance. And you get some shielding as well.

Without the capacitors you will get a drop of around 3 dB at 50 kHz.
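The numbers above hang together if the divider is treated as a compensated attenuator (R_series * C_series = R_in * C_in). The Python sketch below works backwards from the suggested 4.1 pF; the resulting input capacitance of roughly 37 pF is an inference from that value, not a figure stated in this thread, and the function names are illustrative.

```python
import math

R_SERIES = 900e3     # added series resistor (ohms)
R_IN = 100e3         # RTX6001 input impedance quoted above (ohms)
C_SERIES = 4.1e-12   # suggested "ideal" compensation capacitor (farads)

def divider_ratio(r_series: float, r_in: float) -> float:
    """Low-frequency attenuation of the resistive divider."""
    return r_in / (r_series + r_in)

def implied_input_capacitance(r_series: float, r_in: float, c_series: float) -> float:
    """Input capacitance that c_series compensates exactly (R1*C1 = R2*C2)."""
    return c_series * r_series / r_in

def uncompensated_corner_hz(r_series: float, r_in: float, c_in: float) -> float:
    """-3 dB frequency without the compensation capacitor."""
    r_parallel = r_series * r_in / (r_series + r_in)
    return 1.0 / (2.0 * math.pi * r_parallel * c_in)

ratio = divider_ratio(R_SERIES, R_IN)
c_in = implied_input_capacitance(R_SERIES, R_IN, C_SERIES)   # assumption, see above
corner_hz = uncompensated_corner_hz(R_SERIES, R_IN, c_in)

print(f"divider ratio: {ratio:.2f}")
print(f"implied input capacitance: {c_in * 1e12:.1f} pF")
print(f"uncompensated -3 dB corner: {corner_hz / 1e3:.1f} kHz")
```

The corner comes out a little below 50 kHz, which matches the "around 3 dB at 50 kHz" estimate for the uncompensated divider.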

It's been very little for several months. In fact I haven't really worked on any spare time (audio) projects for some time, mainly because I moved to a new house recently.

I will take a new look at it and try to figure out a way to make it available, perhaps as a bare board solution.

any joy?
