38 years ago, Blade Runner predicted that by 2019 we'd be able to enhance images with great accuracy.

As of 2019, we have numerous ways to enhance images, and some of them work with startling accuracy.

Naturally, someone has applied this technology to audio:

Audio Super Resolution

"We train neural networks to impute new time-domain samples in an audio signal; this is similar to the image super-resolution problem, where individual audio samples are analogous to pixels.

For example, in the adjacent figure, we observe the blue audio samples, and we want to "fill-in" the white samples; both are from the same signal (dashed line).

To solve this underdefined problem, we teach our network how a typical recording "sounds like" and ask it to produce a plausible reconstruction."

It would be interesting to see whether this technology could be used to upsample old recordings to a higher resolution. I know most people assume that upsampling can't extract more detail from a recording, and that is logical: you can't retrieve information that isn't there.

But these technologies are different. They take the signal and use artificial intelligence to make a plausible guess at the missing data, rather than simply interpolating it.
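For contrast, here is the classical, non-learned way to fill in the missing samples: plain linear interpolation, where every new sample is computed only from its two observed neighbours. This is a minimal Python sketch for illustration; the function name `upsample_linear` and the integer upsampling factor are assumptions, not anything taken from the Audio Super Resolution project. Nothing new is invented here, which is exactly the limitation a trained network tries to go beyond.

```python
def upsample_linear(samples, factor):
    """Upsample by an integer factor, filling new samples by linear interpolation.

    Hypothetical helper for illustration. The observed samples are kept
    unchanged; each inserted sample lies on the straight line between its two
    observed neighbours, so no information beyond `samples` is added.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    if len(samples) < 2:
        return list(samples)
    out = []
    for a, b in zip(samples, samples[1:]):
        for i in range(factor):
            out.append(a + (b - a) * i / factor)
    out.append(samples[-1])  # keep the last observed sample
    return out
```

For example, `upsample_linear([0, 2, 4], 2)` gives `[0.0, 1.0, 2.0, 3.0, 4]`: the "new" samples are fully determined by the old ones.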

Check out the linked website; hearing is believing!


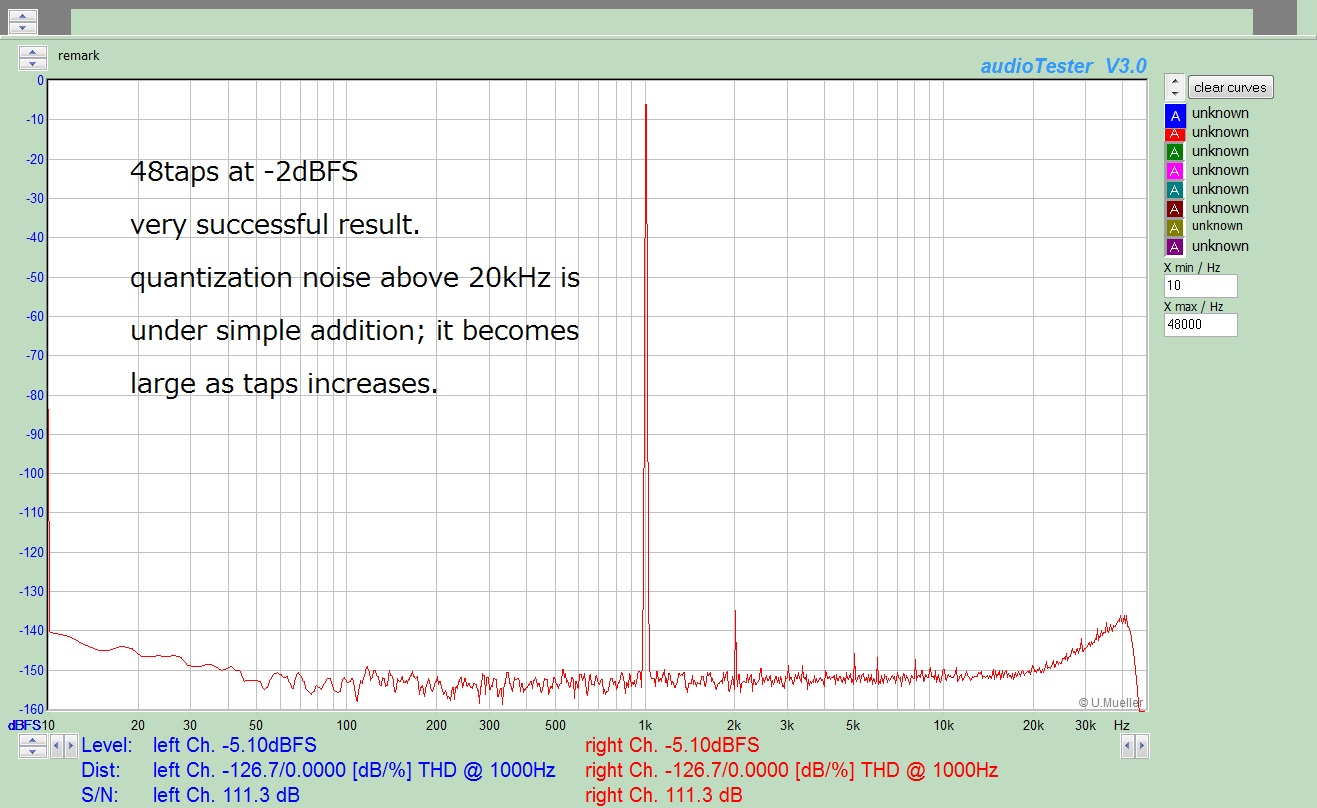

With digital audio, the bottom line is that sampling works, and it is theoretically a perfect process: you get back exactly what you put in. It provides a totally accurate and faithful means of conveying a complete audio signal from one place to another.

So the numbers get from one place to another, and it's all good, right? It must be, according to the paragraph above.

But there is more: we can easily pile on more of those numbers and make a copy that sounds much better than the original. Frequency response? Up to 100 kHz, easily. But there is (still)... more! Convert the bit depth to 32 bits... even more numbers. Dynamic range through the roof!

Now, who would say no to that? Everyone's excited, the crowd is clapping.

Audio Nirvana for all of us.
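The bit-depth half of the joke is easy to check. A minimal Python sketch, assuming signed integer PCM and the usual convention of scaling 16-bit samples by 2**16 to fill a 32-bit word (the function names are hypothetical): the low 16 bits of every converted sample are zero, and converting back returns the original data exactly, so the "extra" numbers carry no new information.

```python
def pcm16_to_pcm32(samples):
    """Scale signed 16-bit PCM values into signed 32-bit words (shift left by 16)."""
    for s in samples:
        if not -32768 <= s <= 32767:
            raise ValueError(f"not a 16-bit sample: {s}")
    return [s << 16 for s in samples]


def pcm32_to_pcm16(samples):
    """Drop the low 16 bits again (arithmetic shift right by 16)."""
    return [s >> 16 for s in samples]


original = [0, 1, -1, 12345, -32768, 32767]
widened = pcm16_to_pcm32(original)

# The added low-order bits are all zero: no new detail was created.
padding_is_empty = all(s & 0xFFFF == 0 for s in widened)
# The round trip is lossless, because nothing was added in the first place.
round_trip_ok = pcm32_to_pcm16(widened) == original
```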


Very interesting and relevant topic!

It's a hot topic as well; just look at the number of vacancies in this field.

I've been studying the numerous opportunities facilitated by FPGAs.

Most of us are aware of FPGA-based DACs (Chord, among others), as well as FPGAs used for DSP (Q-Sys, among others).

It doesn't end there, as Alessio Medda illustrates in this article:

"In recent years, the commercial audio world has seen an increasing number of applications of machine learning in commercial audio products. This should not be surprising, as the audio business was an early adopter of data-driven tools aimed at performing standard operations on audio tracks normally performed by audio engineers. Nowadays, companies like CEDAR Audio, iZotope, and Accusonus lead the way in the application of modern machine learning (ML) and artificial intelligence (AI) to audio products.

Traditional tasks usually performed by audio engineers are slowly being replaced by ML/AI tools using algorithms based on a combination of statistical models and neural networks. In 2014, the DNS One from CEDAR Audio, a multichannel dialog noise suppressor, was the first product in the company's lineup to explicitly use machine learning, employing the LEARN algorithm. LEARN is designed to compute estimates of the background noise level and determine suitable noise attenuations at each frequency for optimum suppression. In 2016, CEDAR included the evolution of the LEARN algorithm in its CEDAR Cambridge 10 product, with the new FERN algorithm. According to the company, “FERN is an automated noise reduction system for speech recordings suffering from poor signal to noise ratios, and is capable of performance that would have seemed impossible just a few years ago.”

Another company that uses ML/AI in many of its products is iZotope, whose products are aimed at musicians, producers, and audio engineers. At iZotope, ML is used to automatically identify instruments, to automatically detect song structures, and to improve waveform navigation. The company’s latest RX7 audio repair toolkit includes the De-rustle module, which uses a trained deep neural network to remove all varieties of rustle in recordings, and the Spectral DeNoiser, which leverages ML/AI to minimize disturbances in audio recorded in highly variable background noise situations like stadiums, thunderstorms, or public places. In addition, the company’s Neutron 3 plugin features a Mixing Assistant that uses ML to create a balanced starting point for the initial level mix, saving time and energy when making creative mix decisions.

Furthermore, another example is Accusonus, a company with its own patented ML/AI technology, which is applied to the ERA range of audio cleanup tools. These include algorithms for denoising and de-reverberation, de-essing and audio repair, voice leveling, and de-clipping.

Hopefully, future improvements will lead to more and more companies integrating ML/AI into their commercial products. This will also likely result in a shift in the music and audio industry, where bad audio does not have to be re-recorded but can be processed to become usable. It is difficult to identify precisely where the next innovation will be in a field advancing at such a rapid pace, but the next big innovation could be the use of generative audio models like WaveNet to replace audio that is missing or too corrupt to keep. Perhaps in 20 years people will look back and see this as the beginning of a new way to think about audio."
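The idea described for CEDAR's LEARN, estimating the background noise level and then choosing an attenuation for each frequency, can be illustrated with textbook power spectral subtraction. This is not CEDAR's (proprietary) algorithm, just a minimal Python sketch of the general principle. It assumes the audio has already been split into frames of per-frequency power values (for example by a short-time Fourier transform, not shown), that some frames are known to contain only noise, and that a hypothetical `floor` of 0.1 limits how far any bin is attenuated.

```python
def estimate_noise_power(noise_frames):
    """Average power per frequency bin over frames assumed to contain only noise."""
    n_frames = len(noise_frames)
    n_bins = len(noise_frames[0])
    return [sum(frame[k] for frame in noise_frames) / n_frames for k in range(n_bins)]


def suppression_gains(frame_power, noise_power, floor=0.1):
    """Per-bin amplitude gains from power spectral subtraction.

    The power gain is 1 - noise/signal, clamped to `floor` (a hypothetical
    tuning value) so that noise-dominated bins are attenuated rather than
    zeroed. The square root turns the power gain into a magnitude gain.
    """
    gains = []
    for p, n in zip(frame_power, noise_power):
        power_gain = 1.0 - n / p if p > 0 else 0.0
        gains.append(max(power_gain, floor) ** 0.5)
    return gains


noise = estimate_noise_power([[1.0, 1.0], [1.0, 1.0]])
gains = suppression_gains([4.0, 1.0], noise)
```

Here the first bin (signal well above the noise estimate) is barely touched, while the second bin (pure noise) is pulled down to the floor; each gain would then multiply the corresponding spectral magnitude before resynthesis.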



Machine learning would be an interesting way to do upmixing.

For instance, a lot of the current upmixers work like this:

Step 1: merge the left and right into a mono center channel.

Step 2: create a new left and right by taking the original left (and right) with a fraction of the center removed.
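The two steps above amount to a simple passive matrix. A minimal Python sketch, where the center is taken as the average of left and right, and `k` (the fraction of the center removed from each side) is a hypothetical tuning parameter; commercial upmixers add level-dependent steering on top of this, which is not shown.

```python
def passive_upmix(left, right, k=0.5):
    """Derive center, new left and new right from a stereo pair.

    Step 1: center is the mono mix (L + R) / 2.
    Step 2: each side keeps its original signal minus a fraction k of the center.
    `k` is an illustrative parameter, not a value from any specific product.
    """
    center = [(l + r) / 2 for l, r in zip(left, right)]
    new_left = [l - k * c for l, c in zip(left, center)]
    new_right = [r - k * c for r, c in zip(right, center)]
    return new_left, center, new_right


new_l, c, new_r = passive_upmix([1.0, 1.0], [1.0, -1.0], k=1.0)
```

With `k=1.0`, a sound that is identical in both channels (panned dead center) ends up entirely in the center channel, while material that differs between the channels stays on the sides. The matrix only sees level differences, not instruments, which is the limitation the ML idea below targets.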

With machine learning, it should be possible to write software that can detect what a drum sounds like and send it to the back, then detect what vocals sound like and send them to the center.

Instead of being based on simple subtraction and addition, an ML-based upmixer would upmix based on pattern recognition.
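Structurally, the ML version replaces the matrix with "recognise, then route". A minimal Python sketch of the routing half only: it assumes a hypothetical source-separation model has already split the track into mono stems labelled `vocals`, `drums`, and so on. The routing table and gains are illustrative choices, not any product's behaviour.

```python
# Hypothetical routing table: stem label -> {output channel: gain}.
ROUTING = {
    "vocals": {"C": 1.0},
    "drums": {"Ls": 0.5, "Rs": 0.5},
}
DEFAULT_ROUTE = {"L": 0.5, "R": 0.5}  # unrecognised stems stay up front
CHANNELS = ("L", "R", "C", "Ls", "Rs")


def route_stems(stems):
    """Mix labelled mono stems (label -> list of samples) into five output channels.

    The stems are assumed to come from a separation/classification step
    that is not implemented here.
    """
    length = max(len(samples) for samples in stems.values())
    out = {ch: [0.0] * length for ch in CHANNELS}
    for label, samples in stems.items():
        for channel, gain in ROUTING.get(label, DEFAULT_ROUTE).items():
            for i, s in enumerate(samples):
                out[channel][i] += gain * s
    return out


mix = route_stems({"vocals": [1.0, 0.5], "drums": [0.2, 0.2], "guitar": [0.4, 0.0]})
```

All of the "intelligence" lives in the (assumed) separation model; once stems are labelled, the upmix itself is just a lookup table and a mixer.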

This could be improved further with crowdsourcing. If you've ever seen a website that showed you nine pictures and asked which ones contained a truck, that's crowdsourcing: basically, the machine needed some help.

You could do something similar with audio: two samples would play, and a listener would choose which one sounds better.
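Pairwise A/B votes like these need to be turned into a ranking. One common, simple option, swapped in here for illustration since the post doesn't specify a method, is an Elo-style rating as used for chess players. A minimal Python sketch; the candidate names, the starting rating of 1500, and `k=32` are conventional but arbitrary choices.

```python
def elo_update(ratings, winner, loser, k=32.0):
    """Move `k` points (scaled by how surprising the result was) from loser to winner."""
    expected_win = 1.0 / (1.0 + 10 ** ((ratings[loser] - ratings[winner]) / 400.0))
    delta = k * (1.0 - expected_win)
    ratings[winner] += delta
    ratings[loser] -= delta


def rank_from_votes(candidates, votes, initial=1500.0, k=32.0):
    """Rank candidates from a list of (preferred, rejected) listener votes."""
    ratings = {c: initial for c in candidates}
    for winner, loser in votes:
        elo_update(ratings, winner, loser, k)
    ranking = sorted(candidates, key=lambda c: ratings[c], reverse=True)
    return ranking, ratings


ranking, ratings = rank_from_votes(["upmix_a", "upmix_b"], [("upmix_a", "upmix_b")])
```

After one vote between equally rated candidates, the winner gains 16 points and the loser drops 16; the resulting ratings could then serve as training labels for the upmixer.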


This is an example of a so-called audio blade, based on Intel's Arria 10 SoC FPGA.

One may wonder why an external (though built-in) audio codec is used when a superior converter can be built around an FPGA.
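For context on what "a converter built around an FPGA" commonly involves: FPGA-based DACs typically use some form of delta-sigma (noise-shaping) modulation, turning multi-bit samples into a fast, low-resolution pulse stream. Chord's actual pulse-array design is proprietary and far more elaborate; the Python sketch below shows only the textbook first-order, 1-bit case, assuming input samples normalised to [-1, 1].

```python
def delta_sigma_1bit(samples):
    """First-order 1-bit delta-sigma modulator (textbook form, not any product's design).

    Input samples are assumed to lie in [-1, 1]. The output is a stream of
    +1/-1 values whose running average tracks the input; the quantisation
    error is fed back through the integrator, pushing noise toward high
    frequencies where an analogue low-pass filter can remove it.
    """
    integrator = 0.0
    feedback = 0.0
    bits = []
    for x in samples:
        if not -1.0 <= x <= 1.0:
            raise ValueError("input must be normalised to [-1, 1]")
        integrator += x - feedback
        bit = 1.0 if integrator >= 0.0 else -1.0
        bits.append(bit)
        feedback = bit
    return bits


bits = delta_sigma_1bit([0.5] * 1000)
```

For a constant input of 0.5, the output settles into a repeating `+1, -1, +1, +1` pattern, whose average is 0.5.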


Greetings,

I don't see why, if you have a powerful computer and sampled recordings of voices and instruments, you couldn't rebuild the music from scratch... it's maths, zeros and ones. Computer synthesis of your own voice is already a reality, and it surely exists, for various reasons, in the non-civilian domain.

Now, about my favorite movie, which you mentioned: IMHO the picture scene isn't really only about resolution enhancement. We see the woman, who isn't entirely in the picture, in a reconstruction by the machine of a scene that doesn't exist in the picture... as if you could tour a flat from a single picture of the entrance hall. As far as I remember, the mirror doesn't explain the final result... but I admit I was high every time after the first Vangelis scene...

What I mean is that you would first need an ADC capture, not of the song, but of the voices and instruments one by one. Then the machine would analyse the song and rebuild it from scratch using high-resolution samples of those instruments, captured with a proper ADC. Since voices can't be copied exactly, they would be sampled from recordings and perhaps cleaned up...
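The rebuilding half of this idea is roughly what a sampler does. A minimal Python sketch, assuming the hard part (analysing the song into note events) has already been done by some hypothetical transcription step; `render_from_samples` only mixes pre-recorded instrument samples into an output buffer at each event's onset.

```python
def render_from_samples(events, sample_bank, length):
    """Mix pre-recorded instrument samples into an output buffer.

    events: list of (onset_index, instrument_name, gain) tuples, assumed to
            come from a hypothetical analysis/transcription step.
    sample_bank: dict mapping instrument_name -> list of float samples,
                 i.e. the instruments captured one by one with a good ADC.
    Samples that would extend past `length` are truncated.
    """
    out = [0.0] * length
    for onset, name, gain in events:
        for i, s in enumerate(sample_bank[name]):
            if 0 <= onset + i < length:
                out[onset + i] += gain * s
    return out


bank = {"kick": [1.0, 0.5]}
song = render_from_samples([(0, "kick", 1.0), (2, "kick", 0.5)], bank, 4)
```

The output can only ever be as good as the analysis that produced the events, which is where the real difficulty of this approach lies.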


Cleaning up audio to get rid of background noise

RTX Voice: Noise-destroying tech put to the test - BBC News

Brian


Regarding the upmixing idea above, this exists now: Five Channel Soundstage | Page 9 | DiyMobileAudio.com Car Stereo Forum

For a long time now there have been mic systems that correct voices on the fly during live events so they don't sound off-key... some famous singers use them because they are only average, mid-level singers, like Noel Gallagher for instance (I have no proven source for that, and it doesn't make the timbre any better).
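The core correction step in such systems is pulling the detected pitch toward the nearest note. A minimal Python sketch of that step only, assuming 12-tone equal temperament with A4 = 440 Hz and that a pitch detector (not shown) has already estimated the sung frequency; real pitch correctors also glide toward the target gradually, which is not modelled here.

```python
import math


def snap_to_semitone(freq_hz, a4_hz=440.0):
    """Return the equal-tempered note frequency nearest to `freq_hz`.

    Converts to a fractional MIDI note number (A4 = 69), rounds to the
    nearest integer note, and converts back to Hz.
    """
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    midi = 69 + 12 * math.log2(freq_hz / a4_hz)
    nearest = round(midi)
    return a4_hz * 2 ** ((nearest - 69) / 12)
```

For example, a slightly sharp 445 Hz is pulled back to 440 Hz (A4), and a flat 260 Hz is pushed up to about 261.63 Hz (middle C).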
