I can easily hear the difference between 16 bits and 18 bits. I also found that 12 bit floating point sounded just as good as 16 bit linear. My testing was done when I worked for Pioneer in the late 70s.

There are test conditions where everyone can easily hear the difference between 16 and 18 bits! But the problem here is you never defined what yours were, nor the test methodology. So...yeah, ok, and so what?

Talk about imprecise.

Also, those test conditions where the 16 vs 18 bit differences become obvious have nothing to do with actual use with real audio recorded at normal levels.

Isn't the problem that most 16 bit ADCs are nothing of the sort, especially when built into a real world circuit? When I was designing ADC boards, I found it incredibly hard to prevent digital interface noise coupling back into the input and creating spurious tones.

That was certainly true a while back, and the same issues now apply to 24 bit ADCs. We can now do clean 16 bits easily, actually up to 20 bits. Beyond that, nope. 24 bit ADCs produce 20 bits of actual audio and 4 bits of noise, but only in lab test conditions where the input signals are clean and quiet enough to discern that. Not a chance with signals from mics in studios.
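To put rough numbers on the "20 bits of audio, 4 bits of noise" point, here is a minimal sketch using the textbook figure for an ideal quantizer driven by a full-scale sine (SNR ≈ 6.02·N + 1.76 dB) and its inverse, the effective number of bits (ENOB). The 122 dB SINAD value is a made-up illustration, not a measurement of any real converter.

```python
def ideal_snr_db(bits: int) -> float:
    """Theoretical SNR of an ideal N-bit quantizer with a full-scale sine input."""
    return 6.02 * bits + 1.76


def enob(sinad_db: float) -> float:
    """Effective number of bits implied by a measured SINAD figure."""
    return (sinad_db - 1.76) / 6.02


for bits in (16, 18, 20, 24):
    print(f"{bits} bits: {ideal_snr_db(bits):.1f} dB ideal SNR")

# Hypothetical example: a converter sold as "24 bit" that measures 122 dB SINAD
print(f"ENOB at 122 dB SINAD: {enob(122.0):.1f} bits")
```

On these assumptions, a "24 bit" part would need roughly 146 dB of SINAD to deliver all 24 bits, which is why the lower bits end up as noise.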

The document "Sound Directions: Best Practices for Audio Preservation" by Mike Casey, Indiana University and Bruce Gordon, Harvard University, touches lightly on the two institutions' choices of ADCs and DACs. Under section 2.2.4.1 the specific devices are defined as the Prism AD2 ADC and DA2 DAC, and the Benchmark ADC1 and DAC1, chosen for the accuracy of their conversion. They state they are "very satisfied" with the results.

The digital format they've chosen for their archival work is 24/96, which is stated in several places in the document. However, there is no rationale given for the choice other than this, "This seems the best compromise among coding format, file size and audio fidelity. We say compromise because we realize that choosing a coding, a sample frequency and a word length is a limiting choice in an ever-changing landscape of audio formats and tools. At this time, the wide support for PCM audio at this resolution should ensure that our digital objects have a fairly long life before migration to the next form becomes necessary."

Their choice seems reasonable for the project only in that it was practical at the time (2007), and considered "safe" in that the bit depth allowed capture to be made without peaks being close to 0dBFS. No mention is made of any other necessity relating to captured signal dynamic range or frequency range.

It seems the choice may actually be one of "practical safety", where the writers were unable to completely analyze the real requirements, so they chose the safe route due to lack of full analysis.

The full document is here: http://www.dlib.indiana.edu/projects/sounddirections/papersPresent/sd_bp_07.pdf

I was working for Pioneer America. We had directly recorded sound, of course.

I built an 18 bit 50 kHz ADC from little parts. In 1977. Hand made .0003% resistors. It was the fastest, most precise ADC made at the time. I was especially proud of my sample and hold, made from 3906's and 2222's and switched at 3 GHz.

I learned my digital signal processing at Caltech. You don't get to just dismiss people 'cause you don't like their arguments. You ever watch The Big Bang Theory? I can match your arrogance all day every day.

I'm not challenging your credentials at all.

But what were the test conditions in which you could reliably distinguish 16 vs 18 bits?

What test conditions would satisfy you?

The test conditions he used.

Please understand, I'm not trying to debunk his test. As I said, there are conditions under which anyone could hear the difference. There are also many conditions under which no one could hear the difference between 16 and 18 bits.

When a statement is made like "I can tell the difference between X and Y", the implication to the average reader is that the difference is always obvious, when in reality, it may be highly conditional, somewhat conditional, or not conditional at all.

I'm only asking what his test conditions were so his statement of observation makes sense in context.

We had recorded some live music at 18 bits 50 kHz. I was not present for the recording. The music was stored on, as I recall (remember, this was 40 years ago), a PDP-10 where we could do all the DSP we wanted. The project was in Pasadena, everyone there was a Caltech grad or student. I was a senior. We were using, of course, all Pioneer equipment - their top of the line speakers and electronics, which were not so bad. We downsampled the music to various formats, including 16/50 and 12fp/50. None of the tests were double blind, we were investigating and convincing ourselves, not trying to prove anything. The 16 and 12fp sounded about the same. The 18 was slightly better.

I repeated the tests on my home stereo - Tympani IIIs, Ampzilla 500 / Marantz DC 300, home made preamp. The DC 300 was modified by me, the Marantz designers had made a beginner's mistake in the current amp section which I fixed, lowering the crossover distortion from slight to negligible. The preamp was 356s for the high end signal, 318/394 phono preamp. I would use different stuff today, but at the time it was as good as anything out there, and the phono preamp was better than anything else out there.
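For anyone who wants to see what reducing the word length does numerically, here is a minimal sketch that rounds a test sine to 16 and 18 bit linear codes and measures the resulting SNR. It is not the processing described above: the 997 Hz tone, the 0.9 amplitude, and the lack of dither are all assumptions for illustration, and the 12 bit floating point format is not modelled because its exponent/mantissa split isn't given.

```python
import math


def quantize(x: float, bits: int) -> float:
    """Round x (nominally -1..1) to the nearest step of a signed N-bit linear code."""
    steps = 2 ** (bits - 1)
    q = round(x * steps)
    q = max(-steps, min(steps - 1, q))  # clip to the representable range
    return q / steps


def snr_db(bits: int, fs: int = 50_000, freq: float = 997.0, amp: float = 0.9) -> float:
    """SNR in dB of a sine after undithered quantization to the given word length."""
    signal_power = 0.0
    error_power = 0.0
    for n in range(fs):  # one second of samples
        x = amp * math.sin(2 * math.pi * freq * n / fs)
        e = quantize(x, bits) - x
        signal_power += x * x
        error_power += e * e
    return 10 * math.log10(signal_power / error_power)


snr16 = snr_db(16)
snr18 = snr_db(18)
print(f"16 bit: {snr16:.1f} dB, 18 bit: {snr18:.1f} dB, difference: {snr18 - snr16:.1f} dB")
```

The two extra bits should buy about 12 dB of noise floor. Whether that is audible depends on playback level and program material, which is exactly the question being argued here.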

It's worth remembering how this format got started. 16 bits because that was the realistic limit for finite-dollar ADCs at the time. Actual CD players at the time frequently had 12 bit DACs and sounded horrible. 16 bits also because it was a computer word - 2 bytes. Recording formats - CDs - were chosen to hold just over an hour of music, 'cause they had to hold the 9th. This was the actual spec, CDs had to hold the 9th. 44.056 kHz 'cause that could be done and allow the CDs to play for 65 min. Doesn't it seem rather lucky that today, when we can easily do 24 bits at 200+ kHz, a 2 byte computer word at a sample frequency above 40 kHz that could fit 65 min on a CD would turn out to be exactly optimal? Not 15 bits, not 17 bits, but 16 exactly? Doesn't that seem a rather unlikely coincidence?

The best fit to the observed hydrogen / helium ratio of the universe is for 3.4 families of elementary particles. But you can't have .4 of an electron, so the actual universe has 3 families, electron, muon, tau. What's the actual optimal number of bits for audio? To my knowledge, that number has never been calculated. Audio engineers aren't typically that good at math, and it's doubtful the test data for human hearing is good enough to support the analysis. BTW, your human hearing charts looked just like that in the 70s, I question their accuracy. It would be nice if someone redid them with better equipment and better procedures. Unfortunately, as we all know, stereo is all but dead. It's all MP3 or YouTube or home theater now. I'm really quite stunned that so many speaker manufacturers have survived. But then we also note that Martin Logan is now pumping out cheap crap from Canada instead of electrostats from Kansas.

As you say, upconverting has solved many of the filtering problems of the 80s and 90s, but up and down converting include an implicit filter function. There's no free lunch. The upconverted signal does not sound identical to the base signal. This fact is central to DSP, both audio and visual - it's also understood at Pixar.

Your statement that we can make more nearly optimal filters today is incorrect. We're still using the math from the 30s and 40s: Butterworth, Bessel, Chebyshev, Elliptic. That math is optimal. It was optimal then, it's optimal now. But optimal is an engineering term. A perfect filter, as I presume you know, requires violating causality - you have to know the entire signal, past and future. If such a filter function existed, it would be proprietary property of Goldman Sachs or Warren Buffett and they would use it to all become billionaires. It would certainly not be available to squids like us.

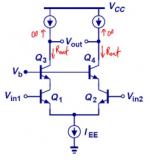

Cascode differential pair. Picture attached.

The lower two transistors are the 3906. They are the input. Their Ft is due to the parasitic capacitance between the collector and the base. Since they drive the upper two transistors, the 2222s, which are in common base mode, the collector of the 3906 basically doesn't change voltage so the capacitance is irrelevant. The 2222s are driven in common base, so their base-collector capacitance is also irrelevant. There's a frequency limit to this circuit, but it has almost nothing to do with Ft. So you get a world-class switch for a couple pennies.

This is engineering - anyone can get it done with cubic dollars, the good guys can get it done cheap.
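The usual textbook way to quantify the "collector doesn't change voltage" argument is the Miller approximation: collector-base capacitance looks, from the input, like C_cb multiplied by (1 + |voltage gain from base to collector|). A minimal sketch follows. The 4 pF capacitance and the gain of 100 are hypothetical round numbers, not datasheet values for the 3906 or 2222, and a gain of about 1 for the lower transistor is the idealized cascode case.

```python
def miller_input_capacitance(c_cb: float, voltage_gain: float) -> float:
    """Effective input capacitance due to collector-base capacitance c_cb
    for an inverting stage with the given base-to-collector gain magnitude."""
    return c_cb * (1.0 + abs(voltage_gain))


C_CB = 4e-12  # hypothetical 4 pF collector-base capacitance

# Plain common-emitter stage with a hypothetical voltage gain of 100
plain = miller_input_capacitance(C_CB, 100.0)

# Cascode: the common-base transistor holds the lower collector nearly still,
# so the lower transistor's own voltage gain is taken as about 1 (idealized)
cascode = miller_input_capacitance(C_CB, 1.0)

print(f"common emitter: {plain * 1e12:.0f} pF, cascode: {cascode * 1e12:.0f} pF")
```

With those assumed numbers the multiplied capacitance drops from about 404 pF to about 8 pF, which is the effect the post is describing.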

Mark, did you know James Boyk? I took my daughter to visit Caltech when she was interviewing colleges and got a very sad message on his answering machine that his position had been eliminated. I really wanted to meet him.

I didn't know him personally, but I heard him perform a couple times. He was superb.

Before that we had a poet in residence who wrote this:

At the California Institute of Technology

BY RICHARD BRAUTIGAN

I don’t care how God-damn smart

these guys are: I’m bored.

It’s been raining like hell all day long

and there’s nothing to do.

He has a special place for me: I took an incomplete in a humanities course and two years later read "In Watermelon Sugar" and sent a fairly incoherent assessment of it to the teacher, who had moved to SUNY. She sent in a grade so I could secure my escape from MIT.

Thanx to all. Very interesting info and viewpoints. I think I've found the best bang for the buck solution. Rega Fono Mini A2D ~$160. Great sound, specs, price and does 16-bit/48 kHz ADC using Audacity. I can't hear past 10 kHz and it's only getting harder as the years march on.

Oh... and my cats always get all the sweet spots

I'm not convinced recorded bandwidth is only to do with the limits of high-frequency hearing ability.

Thank you. As an engineer and scientist, I hope you can understand my point of view here.

From the above, I got:

1. The conclusion that 18 bits was "better" than 16 bits was the result of uncontrolled sighted testing.

2. The conclusion was stated anecdotally without reference to the average results of the total test group.

3. "slightly better" has not been defined. Care to elaborate?

4. No criteria mentioned re: recording level re: 0dBFS, specific content, playback SPL reference, system gain, input system gain and acoustic noise floor, etc.

Because of the above I find it a bit of a stretch to put an awful lot of stock in the claim of 18 bit superiority as being reliably discernible. More recent testing has not revealed consistent differentiation between 16 and 24 bits in controlled double-blind testing.
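As a rough illustration of what "reliably discernible" would mean in a controlled test, here is a minimal sketch of the usual significance check for a forced-choice trial such as ABX: the chance of scoring at least a given number correct by pure guessing. The ABX protocol and the trial counts are assumptions for the example; nothing in this thread specifies them.

```python
from math import comb


def p_value_guessing(correct: int, trials: int) -> float:
    """Probability of getting at least `correct` answers right out of `trials`
    when every answer is a 50/50 guess (one-sided binomial test)."""
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials


# Hypothetical scores from a 16-trial session
for score in (9, 12, 14):
    print(f"{score}/16 correct: p = {p_value_guessing(score, 16):.4f}")
```

Under these assumptions, 9 of 16 is entirely consistent with guessing, while 12 of 16 falls just under the conventional 0.05 threshold. Sighted, uncontrolled listening gives no comparable figure at all.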

And just a couple of comments:

1. CD sampling frequency is 44.1, not 44.056. The latter was at one time a possibility for the CD, but ultimately was rejected. It was chosen for the EIAJ consumer PCM digital recording system (Sony PCM-F1 and similar) that used video transports designed for NTSC color at 29.97 fps. The format was defined as 14 bits, but in Sony's products they dropped a bit of error code and added two more data bits per sample. The Pro gear of the early PCM/CD era (Sony PCM-1600 family used for CD mastering) ran at 44.1, used pro U-Matic decks set for "monochrome" at 30fps.

2. The CD maximum capacity re: Beethoven's 9th may have been a goal, but though the disc had sufficient capacity, the practical maximum was the 72 minute play time of a single U-Matic tape. Thus, the reference performance of that work couldn't be put on the original CD until U-Matic tape left the chain. The original reference performance was a 1951 mono recording by Wilhelm Furtwängler, noted to be the slowest performance of the piece. That recording would not be released on a single CD until 1997. The somewhat more famous Herbert von Karajan recording of the 9th symphony was played faster and fit on one disc. Von Karajan also participated in the early CD promotion.

(a good reference for the points 1 and 2 above is the paper Shannon, Beethoven, and the Compact Disc by Dr. Kees A. Schouhamer Immink of Philips)
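The arithmetic behind the two sample rates in point 1 can be sketched as below. The figures of 3 samples per video line and 245 usable lines per field are the commonly cited values for NTSC-based PCM adaptors. They are not stated in this thread, so treat them as assumptions brought in for the example.

```python
from fractions import Fraction

SAMPLES_PER_LINE = 3          # assumed: audio samples stored per video line
ACTIVE_LINES_PER_FIELD = 245  # assumed: usable lines per NTSC field

MONO_FIELD_RATE = Fraction(60)             # 30 fps "monochrome", 2 fields per frame
COLOR_FIELD_RATE = Fraction(60000, 1001)   # 29.97 fps NTSC color, 2 fields per frame


def pcm_sample_rate(field_rate: Fraction) -> Fraction:
    """Audio sample rate that fits the given video field rate."""
    return field_rate * ACTIVE_LINES_PER_FIELD * SAMPLES_PER_LINE


print(float(pcm_sample_rate(MONO_FIELD_RATE)))             # 44100.0
print(round(float(pcm_sample_rate(COLOR_FIELD_RATE)), 1))  # 44055.9
```

So 44.056 kHz is just 44.1 kHz scaled by 1000/1001, the same factor that separates 29.97 fps from 30 fps.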

3. The higher performance filters I mention relate to the common use today of digital over-sampling filters to improve performance over the initially all analog anti-aliasing filters of the early days. Yes, the math still has to work, but over-sampling changes a few things for the better, such as a relaxed requirement for the final analog filters, and the application of high-order FIR digital filters. I'm sure there's no need to explain this, but the result is a more nearly optimal filter as compared to high-order analog filters of several decades ago, both in the quality of the composite result and in the in-band phase performance. I doubt there's a single audio device in current production that still uses analog-only filtering today.

4. The "stereo is all but dead" comment seems a bit out of line with the facts. Yes, a lot of home systems have been sold that are capable of driving more than two speakers, but the very largest share of all recorded and released music today is still two-channel stereo regardless of the final container, a point not at all arguable. Two-channel stereo played on a multi-channel system most often defaults to two speaker channels only, with processing available that could extract more channels at the option of the user. Most users don't know the option exists. The brief flirtation the industry had with 5.1 music has become almost obscure. Car stereo is still two channel stereo, as is the massive headphone audience, arguably the largest segment of total music listeners.

5. I'm unaware of any CD players that used 12 bit DACs. 14 bit, yes, a few, but very few, like the Philips/Magnavox CDP-100. But that's perhaps just a result of my limited scope. The original CDP-101 that Sony brought in to us for the first CD broadcast in the US was a 16 bit. I think Philips tried to hang on to the 14 bit concept because they already had a 14 bit DAC chip ready to go.

The Philips players that used 14bit DACs (TDA1540) also used modest oversampling (SAA7030) to get better than 14bit performance (noise floor). In a similar way to DAC chips today using large oversampling ratios and noise shaping to give a 6bit DAC a 22bit noise floor. I've also never come across a 12bit DAC in a CD player.
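A minimal sketch of the oversampling part of that trade, assuming white quantization noise and no noise shaping: spreading the noise over a wider band and filtering the excess away gains 10·log10(OSR) dB in band, which works out to half a bit per doubling of the sample rate. The oversampling ratios below are illustrative inputs rather than specifications of any particular chip.

```python
import math


def oversampling_gain_bits(osr: float) -> float:
    """Extra in-band resolution, in bits, from plain oversampling by ratio `osr`
    (white quantization noise assumed, no noise shaping)."""
    return 0.5 * math.log2(osr)


for osr in (4, 64, 256):
    print(f"{osr}x oversampling: +{oversampling_gain_bits(osr):.1f} bits")
```

Plain oversampling alone gives only about one extra bit at 4x and four bits at 256x. The much larger gains mentioned above come from adding noise shaping, which pushes most of the quantization noise out of the audio band before it is filtered off.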

It appears you weren't satisfied with his test conditions after all.

I don't think we have all of the test conditions.

It's very hard to accept that any sort of research done at that level in academia would not have been conducted with the proper controls.
