Yes. IIRC the results were at some point described as "below audibility." I would like to know how that could be determined from DeltaWave.

...we could then look at the DeltaWave results to help understand the differences at those moments in time.

If you don't have a response to the above, thank you anyway. Your comments have been helpful.


Interesting. Multitones can produce rather complex waveforms in the time domain. Wasn't noise loading used for highly nonlinear systems, ones that are more than weakly non-LTI and in a different way at every frequency, or something like that?

...add noise loading (that is, a multiband-filtered white-noise spectrum, the noise equivalent of multitone testing) to the single-frequency sine-wave signals.
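To make the noise-loading idea concrete, here is a minimal Python sketch of one common form of it, the notched-noise or noise power ratio (NPR) test: band-limited white noise with one empty notch is applied, and whatever the device puts into the notch is compared with the loaded band. It uses a single notch rather than the multiband spectrum described above, and the two `system` functions are hypothetical stand-ins (ideal, and weakly cubic), not models of any DAC discussed here.

```python
import numpy as np


def noise_power_ratio_db(system, fs=48000, n=1 << 16,
                         band=(20.0, 20000.0), notch=(900.0, 1100.0), seed=0):
    """Notched-noise (NPR) test: how much does `system` fill an empty band?"""
    rng = np.random.default_rng(seed)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    in_notch = (freqs >= notch[0]) & (freqs <= notch[1])

    # Band-limited white noise with a notch carved out of its spectrum.
    spec = np.fft.rfft(rng.standard_normal(n))
    spec[~in_band | in_notch] = 0.0
    stimulus = np.fft.irfft(spec, n)
    stimulus /= np.max(np.abs(stimulus))  # peak-normalise to 1.0

    # Compare output power density in the loaded band with that in the notch.
    out_power = np.abs(np.fft.rfft(system(stimulus))) ** 2
    loaded = out_power[in_band & ~in_notch].mean()
    leaked = out_power[in_notch].mean() + 1e-300  # guard against log(0)
    return 10.0 * np.log10(loaded / leaked)


ideal = noise_power_ratio_db(lambda x: x)                        # roundoff only
weakly_cubic = noise_power_ratio_db(lambda x: x + 0.01 * x**3)   # hypothetical nonlinearity
print(f"ideal: {ideal:.0f} dB, weakly cubic: {weakly_cubic:.0f} dB")
```

A linear system leaves the notch empty apart from floating-point roundoff, so the ideal case returns a very large NPR, while intermodulation shows up as a finite figure. A real measurement would usually average over several noise records.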

@Hans Polak

The audio-frequency intermodulation products appear to be second-order products, at least the ones I looked at in detail in the report of post #2696. A perfectly balanced circuit cannot create second-order intermodulation products, and everything is perfectly balanced in simulation as long as you don't deliberately add imbalance or do Monte Carlo simulations with mismatch.

One way to cause imbalance is to look at a single-ended rather than differential output signal. Did you ever see anything that looked realistic when looking at a single-ended output signal?
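The cancellation argument above can be checked numerically. This is a minimal sketch assuming a hypothetical memoryless half-circuit y = v + a2·v² + a3·v³ (the coefficients are arbitrary): the f2 - f1 product of a 19 kHz + 20 kHz twin tone is computed at one single-ended output and at the differential output, with an optional mismatch in the second-order coefficient of the opposite half, roughly what a Monte Carlo mismatch run would introduce.

```python
import numpy as np


def im2_levels_db(mismatch=0.0, a2=0.01, a3=0.01, fs=48000, f1=19000, f2=20000):
    """Level (dB re 1.0) of the f2 - f1 product at a single-ended and a differential output."""
    n = fs  # one second of signal -> 1 Hz bins, so every product lands on a bin
    t = np.arange(n) / fs
    x = 0.5 * (np.sin(2 * np.pi * f1 * t) + np.sin(2 * np.pi * f2 * t))

    def half_circuit(v, k2):
        # Hypothetical memoryless half: linear + 2nd-order + 3rd-order terms.
        return v + k2 * v**2 + a3 * v**3

    single_ended = half_circuit(x, a2)
    # The opposite half sees -x; `mismatch` scales its 2nd-order coefficient.
    differential = single_ended - half_circuit(-x, a2 * (1.0 + mismatch))

    def level_at(y, f):
        amplitude = 2.0 * np.abs(np.fft.rfft(y))[f * n // fs] / n
        return 20.0 * np.log10(amplitude + 1e-30)

    return level_at(single_ended, f2 - f1), level_at(differential, f2 - f1)


for m in (0.0, 0.01):
    se, diff = im2_levels_db(mismatch=m)
    print(f"mismatch {m:.0%}: single-ended {se:.1f} dB, differential {diff:.1f} dB")
```

With perfect symmetry the differential f2 - f1 product vanishes to roundoff, while the single-ended output shows it at about -52 dB; a 1 % mismatch brings it back at about -92 dB, 40 dB below the single-ended level.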

No need to guess.

We are still guessing at what DeltaWave is doing. It's getting us nowhere. IMHO it's useless without proper documentation.

DW is well documented in the main thread at ASR, plus you can always ask Paul detailed questions about the internal workings and he will freely share the info.

DW is pretty much flawless in my book, but as with any sophisticated tool of this kind you really need to know what you are doing with it and how to set it up. I definitely suggest a training phase with analytic signals one has created on purpose with exactly known differences.
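As a starting point for such a training phase, here is a minimal Python sketch that generates a reference tone and a comparison with three exactly known differences: a gain offset, an integer-sample delay and an added second harmonic. The function names, default values and 24-bit output format are illustrative choices, not anything DW requires; only numpy and the standard-library `wave` module are used.

```python
import wave

import numpy as np


def make_training_pair(fs=44100, seconds=5.0, f0=1000.0,
                       gain_db=0.05, delay_samples=3, h2_db=-90.0):
    """Reference tone plus a comparison with exactly known, deliberate differences."""
    t = np.arange(int(round(fs * seconds))) / fs
    reference = 0.5 * np.sin(2 * np.pi * f0 * t)
    comparison = reference * 10 ** (gain_db / 20)  # known level offset
    # Known 2nd harmonic, h2_db relative to the fundamental.
    comparison += 0.5 * 10 ** (h2_db / 20) * np.sin(2 * np.pi * 2 * f0 * t)
    # Known integer delay: prepend zeros, keep the original length.
    comparison = np.concatenate([np.zeros(delay_samples), comparison])[: t.size]
    return reference, comparison


def write_wav24(path, x, fs):
    """Write a mono float signal in [-1, 1) as 24-bit little-endian PCM."""
    ints = np.clip(np.round(x * (2**23 - 1)), -2**23, 2**23 - 1).astype("<i4")
    raw = ints.view(np.uint8).reshape(-1, 4)[:, :3].tobytes()  # low 3 bytes of each sample
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(3)
        w.setframerate(fs)
        w.writeframes(raw)
```

After writing both signals with, for example, `write_wav24("train_ref.wav", ref, 44100)` (file names hypothetical) and loading them into DW, one can see whether it reports the 3-sample delay, the 0.05 dB gain offset and a residual dominated by the -90 dB harmonic, then shrink the differences step by step.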

@ThorstenL: I've spent many years and a lot of effort doing nulling in the analog domain... but it's so awkward, and the results with DW are orders of magnitude better and reproducible.

The biggest problem, as always, is clock sync. Without clock sync, analog nulling fails immediately, even with initially aligned start offsets. DW has some provisions to correct for average clock drift, which is often good enough for a comparison, unless the clocks really wobble like crazy.
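To see why clock sync matters so much, assume a constant frequency offset between the two clocks (i.e. linear drift) and no correction at all. The timing error then grows as dt = drift · t, and subtracting two copies of a tone of frequency f leaves a residual of amplitude 2·|sin(π·f·dt)| relative to the tone. A minimal Python sketch of that arithmetic:

```python
import math


def drift_null_db(freq_hz, drift_ppm, elapsed_s):
    """Null depth (dB re the tone) after `elapsed_s` of uncorrected linear clock drift."""
    dt = drift_ppm * 1e-6 * elapsed_s  # accumulated timing error in seconds
    residual = 2.0 * abs(math.sin(math.pi * freq_hz * dt))
    return 20.0 * math.log10(residual) if residual > 0 else float("-inf")


for seconds in (0.01, 1.0, 10.0):
    print(f"1 kHz, 10 ppm, after {seconds:>5} s: {drift_null_db(1000, 10, seconds):6.1f} dB")
```

So with a 10 ppm offset, a 1 kHz tone nulls to only about -24 dB after one second, and the null keeps getting worse from there. The real wobble of free-running clocks is not linear, so this is only a rough picture.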

-----:-----

Are we still talking about different modulator schemes we want to compare, so basically a software thing?

If so, we could process L and R channels with the two different modulators and then record the L and R channels in one go, and thus in perfect sync.

Then DW can do an excellent job, but analog nulling also has a chance to show something... Typically, linear differences (FR in magnitude and phase) quickly dominate the residual after "perfect" level matching (which must be restricted to the common flat-FR range). Linear channel differences of the DAC and ADC used can contribute to or skew the result, but that delta can be factored out (it takes a bit of effort, though).
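The point about linear differences dominating the residual can be put in numbers. For a single frequency component, subtracting a copy with gain error g and phase error φ leaves |1 - g·e^(jφ)| relative to that component's own level. This minimal Python sketch evaluates that expression; it describes one frequency at a time and says nothing about how DW weights the whole spectrum.

```python
import cmath
import math


def mismatch_null_db(gain_db=0.0, phase_deg=0.0):
    """Residual of one frequency component, in dB relative to that component,
    after subtracting a copy that differs by `gain_db` and `phase_deg`."""
    g = 10.0 ** (gain_db / 20.0)
    residual = abs(1.0 - g * cmath.exp(1j * math.radians(phase_deg)))
    return 20.0 * math.log10(residual) if residual > 0 else float("-inf")


print(round(mismatch_null_db(phase_deg=1.5), 1))  # phase error only
print(round(mismatch_null_db(gain_db=0.1), 1))    # level error only
print(round(mismatch_null_db(gain_db=0.1, phase_deg=1.5), 1))
```

For example, a 1.5 degree phase difference alone limits that component's null to about -32 dB, and a 0.1 dB level error alone to about -39 dB; the absolute residual level then depends on how loud that component is in the music.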

This was a test to see if there are differences between PCM2DSD v03 and v04, not to see if there are differences against the original.

IIRC the results were at some point described as "below audibility." I would like to know how that could be determined from DeltaWave.

Here are examples of things that can be found from DeltaWave:

Original vs. recordings

Spectrum of delta below -100dB in audio range. Phase delta below 1.5 degrees in audio range.

v03 vs. v04

Spectrum of delta below -110dB in audio range. Phase delta below 0.1 degrees in audio range.

Just as a reminder regarding audibility: so far nobody has reliably found anything audible between the original and the recordings, or between the recordings.

I wonder why you close your eyes to the plethora of pointless sighted listening tests on this site, e.g. the ones in this thread. Is this a bias you are holding?

NO, IT IS NOT BETTER to perform pointless tests that give at best a false confidence in the reality of the outcome.

The recordings I made were synchronized (i.e. the DAC uses the ADC clock). Between the two recordings the RMS null in DeltaWave is -100.57 dB (without clock drift correction or any non-linear EQ). I doubt recording both modulators in one go would change the results much.

If so, we could process L and R channels with the two different modulators and then record the L and R channels in one go, and thus in perfect sync.
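For reference, one plausible way to compute an RMS null figure like this is the residual RMS relative to the reference RMS, after alignment and level matching. We have not checked that DW uses exactly this definition, so treat the following Python sketch as an illustration of the arithmetic only.

```python
import numpy as np


def rms_null_db(reference, comparison):
    """Residual RMS relative to reference RMS, in dB (inputs already aligned)."""
    ref = np.asarray(reference, dtype=float)
    residual = np.asarray(comparison, dtype=float) - ref
    return 20.0 * np.log10(np.sqrt(np.mean(residual**2)) / np.sqrt(np.mean(ref**2)))


t = np.arange(48000) / 48000
ref = np.sin(2 * np.pi * 1000 * t)
print(rms_null_db(ref, ref * (1 - 1e-5)))  # a 1e-5 gain error alone gives about -100 dB
```

Under that definition, -100.57 dB would mean a residual RMS of about 9.4 millionths of the reference RMS.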

I think for h) it could be questionable whether it's better with or without dither. I don't doubt one could hear a difference... Is there a description of how all these were tested?

@mdsimon2

According to an AES paper presented by Paul Frindle, chief designer of the Sony Oxford digital mixing console, the following findings of audibility were reported:

View attachment 1310205

Please describe which of the above audible effects would be determined to be audible or inaudible by use of DeltaWave.

//

@Hans Polak

The audio-frequency intermodulation products appear to be second-order products, at least the ones I looked at in detail in the report of post #2696. A perfectly balanced circuit cannot create second-order intermodulation products, and everything is perfectly balanced in simulation as long as you don't deliberately add imbalance or do Monte Carlo simulations with mismatch.

One way to cause imbalance is to look at a single-ended rather than differential output signal. Did you ever see anything that looked realistic when looking at a single-ended output signal?

Marcel, that's certainly a valid point.

Most simulations were done while using the balanced output.

I can't remember the cases where it was done in SE.

The only problem is the noise level in my sims at -130dB, while Bohrok's spectra are also showing IM products below that level.
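One possibly relevant detail, assuming (the post doesn't say) that the -130 dB figure is the per-bin noise floor of an FFT of the simulation output: white noise spreads its power over all bins, so the per-bin floor drops by about 3 dB for every doubling of FFT length, while a steady, bin-centred tone stays at the same level. A minimal Python sketch checking that with numpy:

```python
import numpy as np


def per_bin_noise_db(total_noise_db, n_fft):
    """Expected per-bin level when white noise of total power `total_noise_db`
    (dB re 1.0) is spread evenly over the n_fft/2 bins of a one-sided FFT."""
    return total_noise_db - 10.0 * np.log10(n_fft / 2)


def simulated_per_bin_noise_db(total_noise_db, n_fft, seed=0):
    rng = np.random.default_rng(seed)
    x = 10.0 ** (total_noise_db / 20.0) * rng.standard_normal(n_fft)
    spectrum = np.fft.rfft(x)  # rectangular window
    # One-sided power per bin (DC and Nyquist excluded); these sum to ~mean(x**2).
    bin_power = 2.0 * np.abs(spectrum[1:-1]) ** 2 / n_fft**2
    return 10.0 * np.log10(bin_power.mean())


for n in (2**16, 2**20):
    print(n, round(per_bin_noise_db(-100.0, n), 1), round(simulated_per_bin_noise_db(-100.0, n), 1))
```

Going from 65,536 to 1,048,576 points lowers the per-bin floor by about 12 dB without changing the noise itself, so spectra made with different FFT lengths can show the same tone at different heights above the floor.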

But nevertheless, let me give it a fresh try in SE.

Hans

@ThorstenL: I've spent many years and a lot of effort doing nulling in the analog domain... but it's so awkward, and the results with DW are orders of magnitude better and reproducible.

Hmmm, I used other "audio file nulling" software tools before and found them rather useless.

Maybe DW is different from earlier attempts, but I find I do not trust the process, especially not with ADCs that are non-deterministic and sample-aligned.

There is just too much manipulation in the recording process.
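For readers wondering what alignment to a fraction of a sample involves, here is one common textbook approach, sketched in Python: cross-correlate the two recordings via zero-padded FFTs, take the peak, and fit a parabola through the peak and its two neighbours. This is an illustration only; DW's log lists a "Subsample Align" setting, but we have not confirmed which method it uses.

```python
import numpy as np


def estimate_offset(reference, comparison):
    """Delay of `comparison` relative to `reference`, in (fractional) samples."""
    n = len(reference) + len(comparison) - 1
    nfft = 1 << (n - 1).bit_length()  # next power of two >= n
    # Linear cross-correlation via zero-padded FFTs.
    xc = np.fft.irfft(np.fft.rfft(comparison, nfft) *
                      np.conj(np.fft.rfft(reference, nfft)), nfft)
    k = int(np.argmax(xc))
    # Fit a parabola through the peak and its neighbours (indices wrap around).
    y0, y1, y2 = xc[k - 1], xc[k], xc[(k + 1) % nfft]
    frac = 0.5 * (y0 - y2) / (y0 - 2.0 * y1 + y2)
    lag = k if k < nfft // 2 else k - nfft  # upper half of the array = negative lags
    return lag + frac
```

Parabolic interpolation is biased for fractional offsets when the signal has content close to Nyquist; upsampling first, or a band-limited interpolator, reduces that error.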

Are we still talking about different modulator schemes we want to compare, so basically a software thing?

It was never about the modulators as such, but about the modulators' interaction with the DAC.

If so, we could process L and R channels with the two different modulators and then record the L and R channels in one go, and thus in perfect sync.

The modulators are in FPGA, I have doubts this is possible.

Thor

I wonder why you close your eyes to the plethora of pointless sighted listening tests on this site.

I don't. They are exactly your philosophy though.

As in "We can do these tests, so let's do these tests!"

E.g. the ones in this thread. Is this a bias you are holding?

I suggested not doing pointless tests. I do not remember recommending pointless tests.

We have a member who uses listening tests and makes observations. These are questionable in terms of reliability, but I do not like to dismiss such things.

If we have observations of signal fidelity impairments AND reports of audible differences, I feel that it is valid to investigate further and see if there is any relation. Suggesting an ABX test in this context is not useful and, depending on the individual, may be seen as insulting.

I asked for reliable scientific evidence that Audio ABX is suitable for the reliable listening evaluation of subtle differences (which is what we are talking about here), so that I could revise my well-founded scientific view that ABX is a poor to useless tool for this.

Allow me to tell you a little story. In the ancient past (the late '70s) an author in an East German electronics magazine published a new amplifier design. It was truly remarkable, according to the author.

There was no need for quiescent current adjustment and no thermal runaway, so the amplifier could use smaller heatsinks and was absolutely reliable in replication. It was DC coupled, so the frequency response was ruler-flat "DC to ultrasonics", and measured distortion and noise were at record low levels, claimed to outperform the best available designs from the capitalist West at the time. The amplifier also offered more than double the power of the amplifiers common in East German production, which tended to top out at 25 W into 4 Ω.

The Author also recommended his design for urgent mass production by the state-owned industry instead of the horribly noisy, distorted and bandwidth-limited designs in production at the time.

Naturally, measurements were made using 1970s instrumentation: a needle meter for THD, a basic analogue oscilloscope and a low-distortion sine-wave generator.

In the following magazine issues we saw a number of letters. Some people at the RFZ (the technical section of the communist radio/TV system) had built and tested the amplifier design and confirmed the objective results, but stated that in their listening tests the amplifier failed to offer even the same level of audible fidelity as the previous studio reference. That is, despite apparently much improved SIGNAL FIDELITY, AUDIBLE FIDELITY was significantly worse than that of a traditional "warm bias" AC-coupled class AB amplifier.

Something that was frequently mentioned was that when playing music there seemed to be audible distortion with highly dynamic music, specifically from LP. Even more, there were observations that the same track played from FM radio lacked this distortion. Other letters observed that very quiet passages seemed audibly distorted. Naturally the Author robustly defended his design.

Eventually letters came in demonstrating that the unbiased class B pseudo-complementary output stage, which the author had used to avoid larger heatsinks and quiescent-current adjustment, caused crossover distortion that significantly altered small signals. Replacing the class B output stage with one that used standard bias, heatsinks and power supply voltages comparable to the common design was found to remove the low-level distortion.

Eventually the Author grudgingly published an update that introduced correct biasing, so by now the Amplifier had lost its advantages of smaller heatsinks, increased power and no need for adjustment. But at least there was still super low distortion.

Still, letters came citing the same problems with highly dynamic music from LP causing audible distortion. Some smart cookie who read English fluently, and frequently read Western and especially US electronics magazines at the library of the US embassy in Berlin, wrote in mentioning "Slew Rate Induced Distortion" and suggested a test method. Said individual did not mention the source (Otala, in the evil capitalist world), as this would have resulted in his letter not being published, though he mentioned that it was not his discovery.

The folks at the RFZ picked up on this, tested and confirmed that the design suffered from SID.

The reason was that the op-amp used for voltage gain (an East German uA709 copy with a bootstrapped rail) was overcompensated and the output stage lacked gain.

The folks at the RFZ (which by that time I frequented as a very junior assistant in R&D) also found in Russian literature an amplifier design that added a cascode transistor to the op-amp output (base to GND), which also forced the output into class A and almost stopped the op-amp output from moving. They experimented extensively with compensation schemes, ultimately wrapping the output stage into the output compensation. This eliminated SID in tests.

In listening tests the classic monitor amplifier was still judged as offering better fidelity than this multi-stage design. Eventually the RFZ produced a new design, developed in parallel, that was a very generic "Lin"-style amplifier with a CCS-tail differential pair, a CCS-loaded VAS and a quasi-complementary output stage, using only COMECON semiconductors, mostly East German.

In listening tests this design was finally judged equal to or better than the classic design or old tube amplifiers.

While they were in many ways structured and controlled, I am sure you would reject the kind of listening tests we did back then as "pointless sighted listening tests". Yet they readily highlighted audible fidelity impairments that were eventually traced to objective signal fidelity impairments, and they showed the need for extended and new tests of objective signal fidelity to ensure acceptable levels of audible fidelity.

Of course this is ancient history, and we no longer have the problems we struggled with back then. We improved our science and technology. But that should not make us arrogant and cocky enough to think that we know it all and that there is now no possibility of hidden fidelity impairments we simply do not pick up in our tests.

Thanks to anyone who actually read through the whole shaggy dog that I met in Saigon during the 'nam war decades ago story, you deserve a thank you.

Thor

As I said, this is not about science. Foobar ABX brings along the "blinding", which is an important control as it helps to reduce biases. There is no need to look at the ABX scores. If there is another simple tool that removes the sighted observation, that can be used instead.

I asked for reliable scientific evidence that Audio ABX is suitable for the reliable listening evaluation of subtle differences (which is what we are talking about here), so that I could revise my well-founded scientific view that ABX is a poor to useless tool for this.

Foobar ABX brings along the "blinding" which is an important control as it helps to reduce the biases.

Did you read my previously linked article from the Technical University Dresden, on how our expectations shape our hearing?

WE HEAR WHAT WE EXPECT TO HEAR

Simply blinding the source of A or B is not useful in this context if the subject is aware that A is one of two choices (s)he has an opinion on and B is another.

Instead, by blinding and creating a forced choice, you create additional stressors that make the subject less likely to consciously try to bypass the expectation bias built into our hearing. The idea that "ABX removes bias" is easily debunked: it removes only one aspect of bias.

In other words, if I take two items, ask people to compare them, and have them rate how much they like them and how they rate specific aspects of sound quality, I can then analyse whether there is any statistically significant pattern in the outcome that can be correlated with the preference score.

For example, in one case I had the whole office (around 20 people, mostly mainland Chinese) and a bunch of foreign friends test two identical devices, one in a red box and the other in a black box.

The mainland Chinese greatly preferred the red unit, finding it to sound much better, while the foreigners reported that the red unit sounded awful. HOWEVER, the individual sound quality scores were pretty much identical between the two groups and showed the items to "sound identical".

If you want to do blind testing, it only works if the subject is not biased in any way and has the perfectly balanced mind Charles Lutwidge Dodgson referred to in the preface to "The Hunting of the Snark":

For instance, take the two words "fuming" and "furious." Make up your mind that you will say both words, but leave it unsettled which you will say first. Now open your mouth and speak. If your thoughts incline ever so little towards "fuming," you will say "fuming-furious;" if they turn, by even a hair's breadth, towards "furious," you will say "furious-fuming;" but if you have the rarest of gifts, a perfectly balanced mind, you will say "frumious."

Supposing that, when Pistol uttered the well-known words--

"Under which king, Bezonian? Speak or die!"

Justice Shallow had felt certain that it was either William or Richard, but had not been able to settle which, so that he could not possibly say either name before the other, can it be doubted that, rather than die, he would have gasped out "Rilchiam!"

The only other option is to literally blind the subject to the nature of the difference (in this case, keeping A and B identified still removes the bias, provided no other bias results) and force the listener to rely on her or his hearing rather than on pre-biased neural pathways.

So I repeat: "Audio ABX" is useless and counterproductive in this context.

What might be useful is to make a standard questionnaire for listening tests, which must include things like level matching and allow listeners to listen any way they like (blind, sighted, ABX) and get them to give absolute scores out of ten (integer please, no fractions) for a lot of detailed sound quality aspects.

If you find that multiple listeners have similar scoring, you can use statistical analysis to determine whether there is a "clustering" of results and how likely it is that this clustering is down to randomness. In this case you not only answer the question "how likely is it that there is an audible difference" (though you answer it indirectly, by drawing conclusions from a much richer dataset, the way it is done in medical trials, for example).
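Thor doesn't name a specific analysis. For integer scores on one aspect, one simple option with few assumptions is an exact sign-flip permutation test: if A and B truly sound the same, each listener's A - B difference is equally likely to carry either sign. This minimal Python sketch enumerates all sign patterns, which is practical up to roughly 20 listeners; testing many aspects at once would also need a multiple-comparison correction.

```python
from itertools import product


def sign_flip_p_value(scores_a, scores_b):
    """Exact two-sided sign-flip test on paired scores (one pair per listener)."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs))
    hits = total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        if abs(sum(s * d for s, d in zip(signs, diffs))) >= observed:
            hits += 1
    return hits / total


# Hypothetical integer scores (out of 10) from five listeners for one aspect.
print(sign_flip_p_value([7, 8, 6, 9, 7], [6, 6, 5, 6, 5]))  # 0.0625
```

Here all five hypothetical listeners scored A higher, yet with only five people the two-sided p-value is still 0.0625.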

You also gain insight into which aspects are seen as lacking, and you may be able to use prior experience linking audible fidelity impairments to signal fidelity impairments to improve the outcome. And I believe that is our aim here.

One is perfectly entitled to question whether the results of a given sighted, blind or ABX listening test can be generalised, whether the author referring to them considers them generalisable, and why they should (or should not) be generalised.

One might suggest improvements in methodology, but to in effect say "go away and do an Audio ABX test and don't come back until you get a 9/10 result." is not constructive UNLESS one has reliable proof that this test is valid in context.

I think all that needs to be said has been said, I'll leave you the last word.

Thor

Unfortunately that does not work here. If the test participant has formed an opinion prior to taking the test, the answers will be based on confirmation bias, which is exactly what happened here. The only way to remove the confirmation bias is to use ABX or a similar protocol.

What might be useful is to make a standard questionnaire for listening tests, which must include things like level matching and allow listeners to listen any way they like (blind, sighted, ABX) and get them to give absolute scores out of ten (integer please, no fractions) for a lot of detailed sound quality aspects.

I tried again with Bohrok's -60dB file, but this time looked at one SE output only and used your latest filter design starting with 39nF.

@Hans Polak

The audio-frequency intermodulation products appear to be second-order products, at least the ones I looked at in detail in the report of post #2696. A perfectly balanced circuit cannot create second-order intermodulation products, and everything is perfectly balanced in simulation as long as you don't deliberately add imbalance or do Monte Carlo simulations with mismatch.

One way to cause imbalance is to look at a single-ended rather than differential output signal. Did you ever see anything that looked realistic when looking at a single-ended output signal?

Unfortunately the result is as before.

See both attachments: one spectrum up to 10 MHz and the second up to 20 kHz.

Hans

Attachments

On the subject of ABX:

If someone wants people to willingly use blind testing software, then I would suggest they need to write a better app for it than the poorly designed foobar2000 ABX plugin. I have used it before, and so have a number of other people. The complaints about it are right on, but they are generally ignored or denied by people who want to force other people to submit to it. More on that subject below.

Also, the people who demand that everyone submit to ABX at the drop of a hat don't seem to know or care how much work it is to prepare for it. Forum member Howie Hoyt described what you have to do to score well, and so did Paul Frindle, for that matter. Here is Howie's description:

...for better or worse I have been dragged into hundreds of A/B, ABX/ Random long selection repeats, etc. and in my experience Mark is right, they show excessive negative results, due to lack of training in hearing the difference, as well as the mental confusion of having things switch up. They do show decent correlation for a large number of people for gross differences. Also, IMHO none of these tests do much to eliminate pre-existing biases towards certain SQ contours (the "I'm used to my own speakers" thing).

The only way I have been able to get reliable results and consensus is by weeks of training with specific exaggerated SQ problems (missing bits, odd noise floor contour, mechanical noises on magnetic recordings, etc.) and then reducing them to near-inaudibility. Done this way people train their brains to identify specific sounds. In a similar way we hired and trained pre-press graphics people by placing cards in front of them with small color variations and asked them to pick out the outlier. Some show an ability immediately, but many can learn by repeated testing, resulting in a crew of artists who could pick out microscopic color variations I personally could not see.

https://www.diyaudio.com/community/threads/the-black-hole.349926/post-7310119
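The train-then-reduce procedure Howie describes resembles what psychoacoustics formalises as an adaptive staircase. The sketch below is our illustration of the common 2-down/1-up rule, not Howie's method: the impairment is made quieter after two consecutive correct answers and louder after each wrong one, so the level settles around the point giving roughly 70.7 % correct. The dB levels and step size are arbitrary assumptions.

```python
def two_down_one_up(responses, start_db=-40.0, step_db=2.0):
    """Return the impairment level presented on each trial of a 2-down/1-up staircase."""
    level, streak, track = start_db, 0, []
    for correct in responses:
        track.append(level)
        if correct:
            streak += 1
            if streak == 2:  # two in a row: make the impairment quieter
                level -= step_db
                streak = 0
        else:  # any miss: make it louder again
            level += step_db
            streak = 0
    return track


print(two_down_one_up([True, True, True, True, False, True]))
```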

I would also add to the above that I am strongly against "forced choice." The justification for using it is understood, but it brings problems too, such as stress. For me, if I don't know the answer at one moment in time, then my policy is to decline to vote at that time. If that choice on my part ends the test, then so be it. Best to fix the test if it is desired that people agree to use it.

--------------------------------------------

Long ago I also wrote about Foobar ABX and what needs to be fixed to make it more practically usable:

I have proposed a change to ABX as it currently exists in Foobar ABX that I think would move it in the direction of being more useful, maybe even all the way to definitely fair and useful. It would involve adding a loop checkbox, and single-button switching between samples with eyes closed, such as a hotkey on the keyboard. Those two things should help a lot. At that point I would want to test again. I'm not sure about how the answer choices are presented at the end; I don't remember if it's okay or not, since I haven't tried it for quite a while. A programmer here in the forum did contact me by PM at one time and offered to do it, so we would have something a little better than Foobar ABX, but he eventually decided it was more than he could take on.

With regard to 'if it doesn't sound different it's bad,' I don't know what that is supposed to mean. Some things are indistinguishable from one another, just not usually the things in PMA's listening tests, although those can be quite hard to use Foobar ABX on. My only complaint about it is that if I can reliably hear a difference blind using a different protocol myself, I would like to see us find an equally good protocol that we can agree on and that everybody here can use. The problem is that Foobar ABX can't be changed, and it is the only program with a validation test system (although it can be cheated). Like many things, it would appear to take funding to fix it, and nobody wants to pay. They only want to argue.

https://www.diyaudio.com/community/...rch-preamplifier-part-iii.318975/post-5603115

-------------------------------------------

Bottom line for me: if someone in this thread thinks I am going to suffer as Howie described, and do it over and over again for the pleasure of the person demanding it from me for every little listening observation, it's not going to happen. Either write a new A/B blind test app, or pay a programmer to do it. Then you will see more people willing to cooperate. Until that time I will continue to report listening observations as I see fit.

Mark


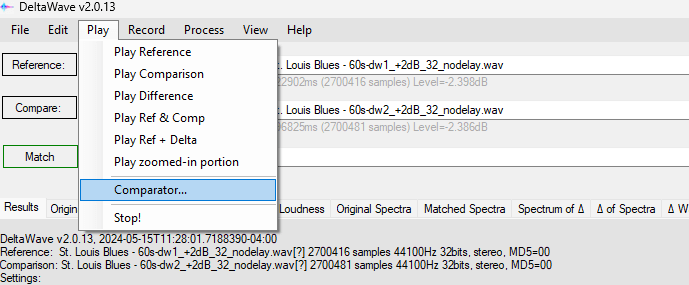

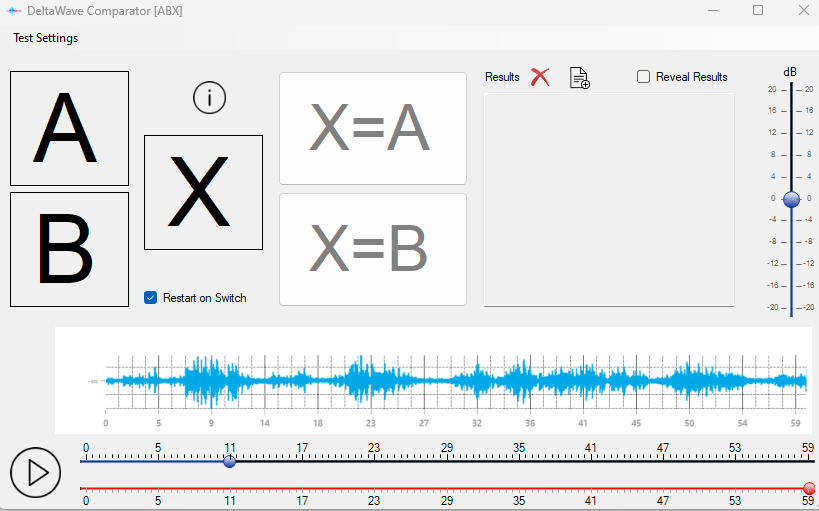

@Markw4 Can you please give the DeltaWave comparator a try? It is super easy to use, just load the files you want to compare and hit Match. Then go to Play -> Comparator.

You can start the comparison from any point in the track. You can also choose whether to restart from that point while switching or to have track keep playing. You can do as many comparisons as you like. It doesn't have hotkey switching but I bet we could get Paul to add it.

I gave it a try. Really thought I might have heard a difference on some of the percussive hits. The results are initially hidden so you don't know how you are doing until you hit reveal results. Turns out I did great in the beginning and then it all fell apart.

Michael

Code:

Trial 1, user: A actual: A 1/1
Trial 2, user: B actual: B 2/2
Trial 3, user: A actual: A 3/3
Trial 4, user: B actual: B 4/4
Trial 5, user: B actual: B 5/5
Trial 6, user: B actual: A 5/6
Trial 7, user: B actual: A 5/7
Trial 8, user: A actual: A 6/8
Trial 9, user: A actual: B 6/9
Trial 10, user: A actual: A 7/10
Trial 11, user: A actual: B 7/11
Trial 12, user: B actual: A 7/12
Trial 13, user: B actual: B 8/13
Trial 14, user: A actual: B 8/14
Trial 15, user: B actual: A 8/15
Probability of guessing: 50%
A=Reference,B=Comparison
Test type: ABX
-----------------
DeltaWave v2.0.13, 2024-05-15T11:45:09.2061618-04:00
Reference: St. Louis Blues - 60s-dw1_+2dB_32_nodelay.wav[?] 2641924 samples 44100Hz 32bits, ch=0, MD5=00
Comparison: St. Louis Blues - 60s-dw2_+2dB_32_nodelay.wav[?] 2641924 samples 44100Hz 32bits, ch=0, MD5=00
LastResult: Ref=C:\Users\mdsim\Downloads\Transfer\St. Louis Blues - 60s-dw1_+2dB_32_nodelay.wav:[?], Comp=C:\Users\mdsim\Downloads\Transfer\St. Louis Blues - 60s-dw2_+2dB_32_nodelay.wav:[?]
LastResult: FilterType=0, Bandwidth=0
LastResult: FilterType=0, Bandwidth=0
LastResult: Drift=0, Offset=1874.308787556 Phase Inverted=False
LastResult: Freq=44100
Settings:
Gain:True, Remove DC:True
Non-linear Gain EQ:False Non-linear Phase EQ: False
EQ FFT Size:65536, EQ Frequency Cut: 0Hz - 0Hz, EQ Threshold: -500dB
Correct Non-linearity: False
Correct Drift:False, Precision:30, Subsample Align:True
Non-Linear drift Correction:False
Upsample:False, Window:Kaiser
Spectrum Window:Kaiser, Spectrum Size:32768
Spectrogram Window:Hann, Spectrogram Size:4096, Spectrogram Steps:2048
Filter Type:FIR, window:Kaiser, taps:262144, minimum phase=False
Dither:False bits=0
Trim Silence:True
Enable Simple Waveform Measurement: False

Michael
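The running score in the ABX log above can be checked with a simple one-sided binomial test. This is an illustrative sketch, not something DeltaWave itself does: it assumes the trial lines keep the `Trial N, user: X actual: Y` format shown in the log, that trials are independent, and that a pure guess is right 50% of the time (the log's "Probability of guessing: 50%"). The names `tally` and `binomial_tail` are hypothetical.

```python
import math
import re

# Trial lines copied from the ABX log above.
ABX_LOG = """\
Trial 1, user: A actual: A 1/1
Trial 2, user: B actual: B 2/2
Trial 3, user: A actual: A 3/3
Trial 4, user: B actual: B 4/4
Trial 5, user: B actual: B 5/5
Trial 6, user: B actual: A 5/6
Trial 7, user: B actual: A 5/7
Trial 8, user: A actual: A 6/8
Trial 9, user: A actual: B 6/9
Trial 10, user: A actual: A 7/10
Trial 11, user: A actual: B 7/11
Trial 12, user: B actual: A 7/12
Trial 13, user: B actual: B 8/13
Trial 14, user: A actual: B 8/14
Trial 15, user: B actual: A 8/15
"""

# Captures the trial number, the listener's answer and the actual sample.
TRIAL_RE = re.compile(r"Trial (\d+), user: ([AB]) actual: ([AB])")


def tally(log_text):
    """Count correct answers and total trials in an ABX log."""
    correct = total = 0
    for match in TRIAL_RE.finditer(log_text):
        total += 1
        if match.group(2) == match.group(3):
            correct += 1
    return correct, total


def binomial_tail(correct, total, p_guess=0.5):
    """P(score >= correct) when every answer is a guess with success rate p_guess."""
    return sum(
        math.comb(total, k) * p_guess**k * (1 - p_guess) ** (total - k)
        for k in range(correct, total + 1)
    )


correct, total = tally(ABX_LOG)
print(f"{correct}/{total} correct, p = {binomial_tail(correct, total):.4f}")
```

For this log it prints `8/15 correct, p = 0.5000`: the final score is exactly what coin-flipping produces half the time. The 5/5 opening streak would have p = 1/32 on its own, but a stretch picked out after seeing the results cannot be tested as if it had been chosen in advance.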

This is a good point. So far only three people have offered opinions on the sound of the two FPGA versions. They are in full agreement on a few simple points. However, it's only three people, and most people who are willing to opine may not participate further if their contributions are not appreciated.

If you find that multiple listeners have similar scoring, you can use statistical analysis to determine whether there is a "clustering" of results and how likely it is that this clustering is down to randomness. In this case you still answer the question "how likely is it that there is an audible difference", though indirectly, by drawing conclusions from a much richer dataset (the way it is done in medical trials, for example).

So, we need more people with Marcel dacs who are willing to listen and to opine.
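One simple way to combine several listeners' ABX scores, in the spirit of the "richer dataset" point above, is to pool all their trials into a single binomial test. This sketch assumes every trial from every listener is independent and has the same 50% guessing rate; it is only one option (it ignores differences between listeners), the function names are hypothetical, and the scores in the example are made-up placeholders, not results from this thread.

```python
import math


def binomial_tail(correct, total, p_guess=0.5):
    """P(score >= correct) if every answer is a guess with success rate p_guess."""
    return sum(
        math.comb(total, k) * p_guess**k * (1 - p_guess) ** (total - k)
        for k in range(correct, total + 1)
    )


def pooled_p_value(scores, p_guess=0.5):
    """Pool (correct, total) pairs from several listeners into one binomial test."""
    correct = sum(c for c, _ in scores)
    total = sum(n for _, n in scores)
    return binomial_tail(correct, total, p_guess)


# Hypothetical scores for three listeners (placeholders, not real data).
example_scores = [(11, 16), (12, 16), (10, 16)]
print(f"pooled p = {pooled_p_value(example_scores):.4f}")
```

A pooled result can reach significance even when no single listener's score does on its own, but it cannot tell one consistently sharp listener apart from several marginal ones.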

On another subject: with the external IanCanada 45MHz clock, the Marcel dac here sounds the best it ever has. To the best of my recollection, that includes sounding better than the Acko version set up here previously (I may decide to do some tests later to see if I can verify that). The main problem now would seem to be finding an output stage that does the dac justice.


Hi Michael,

The first problem with using DeltaWave here is that I don't have an ADC of the same or better quality than my dac. I can't see a reason to compare two blurred and damaged recordings just because that's the best ADC I have. What am I trying to accomplish by that? It's obviously not what I want to compare, nor what I want to talk about as a difference in the dac sound. It's a difference in the ADC sound, which is something else again.
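A rough numeric sketch of the noise part of the ADC argument (ADC distortion is ignored here). It assumes each capture adds its own independent noise of the same RMS level, uncorrelated with the real DAC-to-DAC difference, so subtracting the two captures adds their powers and the noise appears twice. The levels and function names are hypothetical.

```python
import math


def db_to_power(level_db):
    """Convert a level in dB to a linear power ratio."""
    return 10 ** (level_db / 10)


def null_residual_db(dac_difference_db, adc_noise_db):
    """Residual level of (capture 1 - capture 2), in dB.

    Assumes the true DAC difference and the two captures' ADC noise are
    uncorrelated, so their powers add; the noise counts once per capture.
    """
    total = db_to_power(dac_difference_db) + 2 * db_to_power(adc_noise_db)
    return 10 * math.log10(total)


# Hypothetical levels in dBFS: a DAC difference well below the ADC noise floor.
print(f"{null_residual_db(-140, -110):.2f} dBFS")
```

With these assumed levels the residual sits about 3 dB above a single capture's ADC noise, and the -140 dBFS DAC difference moves it by well under 0.01 dB, so the null mostly measures the ADC.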

The most practical way I have found to validate or disprove my listening observations is to use multiple trained listeners, then compare their verbal descriptions after everyone has listened, one at a time, so as not to influence each other. It's just that getting a listening group together at the drop of a hat is not always practical. So far only Thor seems to understand the utility of that methodology. I don't know why it isn't obvious to others.

In the meantime, some preliminary listening observations are going to need later revision. That's the way it works when you have a noisy detector and want the latest news without a lot of integration time. There is going to be a little noise in the data, but it's not so noisy as to make it unusable or worthless. In principle that doesn't apply only to preliminary listening tests, either; it can be an issue in any area of technical endeavor.
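The noisy-detector analogy above can be made concrete: if each observation carries independent noise of standard deviation sigma, averaging n of them leaves roughly sigma / sqrt(n). The short simulation below assumes Gaussian noise, an arbitrary sigma and a fixed random seed; `spread_of_average` is a hypothetical helper that compares the measured spread of the average with that prediction.

```python
import math
import random
import statistics


def spread_of_average(sigma, n, repeats=4000, seed=1):
    """Standard deviation of the mean of n noisy readings, estimated by simulation."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n))
        for _ in range(repeats)
    ]
    return statistics.pstdev(means)


sigma = 1.0
for n in (1, 4, 16, 64):
    print(f"n={n:3d}  simulated={spread_of_average(sigma, n):.3f}  "
          f"predicted={sigma / math.sqrt(n):.3f}")
```

Quick preliminary impressions correspond to small n and a noisier answer; longer "integration time" tightens it, but only as fast as the square root of the number of independent observations.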

Mark


Have you tried comparing recordings against the original?
