Bob Cordell said: Bear in mind that zero PIM does not mean zero lagging phase shift from input to output, but rather the absence of change in that phase when open-loop gain changes.

Hi Bob,

I think you mean the change in phase as the output signal amplitude changes, right?

That's expressed in equation (25) on this page. Vo in that equation is the output signal amplitude. That equation covers intermediate phase shifts.

A number of substitutions are required to get actual numbers for the change in phase from that formula. I'll try to run two scenarios with the same GBW, one where the open-loop amp is an integrator and another with a wide open-loop bandwidth (maybe 10x the signal frequency), and look at input/output phase shift vs. signal level.
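Before grinding through the substitutions in equation (25), a rough numerical sketch of those two scenarios may help. It is a minimal sketch under stated assumptions: a plain single-pole open-loop model A(jf) = A0/(1 + jf/fp) with GBW = A0·fp, a feedback factor `beta`, and the signal-dependent part modeled as a fractional drop `delta` in input-stage transconductance, which scales A0 and GBW together while leaving fp fixed. The function names and all numbers are hypothetical illustrations, not taken from equation (25).

```python
import cmath
import math


def closed_loop_phase_deg(f, gbw, fp, beta):
    """Phase in degrees of H = A / (1 + A*beta) at frequency f (Hz).

    Assumed open-loop model: A(jf) = A0 / (1 + j*f/fp), with A0 = gbw / fp.
    fp == 0 is treated as an ideal integrator, A(jf) = gbw / (j*f).
    """
    if fp == 0:
        a = gbw / (1j * f)
    else:
        a0 = gbw / fp
        a = a0 / (1 + 1j * f / fp)
    h = a / (1 + a * beta)
    return math.degrees(cmath.phase(h))


def phase_change_deg(f, gbw, fp, beta, delta):
    """Change in closed-loop phase when open-loop gain drops by fraction delta.

    Assumption: the drop comes from input-stage gm, so A0 and GBW both
    scale by (1 - delta) while the open-loop pole fp stays put.
    """
    return (closed_loop_phase_deg(f, gbw * (1 - delta), fp, beta)
            - closed_loop_phase_deg(f, gbw, fp, beta))


if __name__ == "__main__":
    f_sig = 20e3    # signal frequency, Hz (assumed)
    gbw = 20e6      # same GBW for both cases, Hz (assumed)
    beta = 1 / 20   # closed-loop gain of about 20 (assumed)
    delta = 0.10    # 10 % open-loop gain drop at signal peaks (assumed)
    for label, fp in (("integrator", 0.0), ("fp = 10 x f_sig", 10 * f_sig)):
        print(f"{label:16s} phase {closed_loop_phase_deg(f_sig, gbw, fp, beta):7.3f} deg, "
              f"change {phase_change_deg(f_sig, gbw, fp, beta, delta):7.4f} deg")
```

In this model the closed-loop phase works out to -atan(f / (beta·GBW + fp)), so at equal GBW the wide-bandwidth case shows both a smaller static lag and a smaller phase change per unit gain drop; the trade-off is that its low-frequency loop gain A0·beta is far lower than the integrator's.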

Hi, Mr. Pass,

Yes, I cannot make a direct connection either.


Reading that, I came up with a very distant possibility (quite possibly wrong).

I know that anything with mass that flows cannot escape wave theory: it will have self-resonances and peak-and-dip responses.

I have always wondered why experienced designers like yourself and JC use a fixed resistor voltage divider to bias the cascode of a JFET input differential pair. It will have more harmonic distortion. A steady cascode reference, powered by a CCS with a resistor returned to the tail (common-source) junction of the differential pair, will have less distortion, since the JFETs' Vds then stays constant with the music signal. Yet neither of you uses this. I assume you chose the divider because it sounds better, not because it measures better.
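The Vds argument can be made concrete with a DC sketch. In a non-inverting amplifier both gates of the input pair follow the signal, so the common-source (tail) node moves with it. The sketch assumes an n-channel JFET pair with constant Vgs (fixed tail current), a cascode BJT with constant Vbe, and illustrative values for the divider reference and for the CCS and resistor that lift the cascode base above the tail node; the function names and numbers are hypothetical. It only shows whether Vds tracks the signal; it says nothing about which scheme measures or sounds better.

```python
VGS = -0.5   # JFET gate-source voltage, held constant by the tail CCS (assumed)
VBE = 0.65   # cascode transistor base-emitter drop (assumed)


def vds_fixed_divider(v_in, v_ref=10.0):
    """Vds when the cascode base sits on a fixed resistor divider at v_ref."""
    v_source = v_in - VGS      # source sits |Vgs| above the gate
    v_drain = v_ref - VBE      # cascode emitter is pinned by the divider
    return v_drain - v_source


def vds_tail_referenced(v_in, i_ccs=1e-3, r_ref=5.1e3):
    """Vds when a CCS through r_ref lifts the cascode base above the tail node."""
    v_source = v_in - VGS
    v_base = v_source + i_ccs * r_ref   # reference rides on the tail node
    v_drain = v_base - VBE
    return v_drain - v_source


if __name__ == "__main__":
    for v_in in (-1.0, 0.0, 1.0):
        print(f"v_in {v_in:+.1f} V: divider Vds {vds_fixed_divider(v_in):.2f} V, "
              f"tail-referenced Vds {vds_tail_referenced(v_in):.2f} V")
```

With the divider, Vds moves volt-for-volt with the common-mode signal; with the tail-referenced reference it stays at roughly I·R - Vbe.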

I had never read about the hearing system before, but from that website I learned that the mammalian (human) hearing system is not formed by a single diaphragm (like a microphone) but consists of 15,000-20,000 hair cells that receive sound energy. They are placed in a conical tunnel filled with fluid. ( http://hyperphysics.phy-astr.gsu.edu/hbase/sound/place.html#c1 )

The wide end of the cone receives high frequencies; the other end receives low frequencies.

The interesting part is the "sharpening":

http://hyperphysics.phy-astr.gsu.edu/hbase/sound/place.html#c2

and its behavior

http://hyperphysics.phy-astr.gsu.edu/hbase/sound/vowel.html#c1

http://hyperphysics.phy-astr.gsu.edu/hbase/sound/bekesy.html#c1

I know that humans like harmonics; otherwise all guitars, violins and pianos would be coated with thick tar to suppress their body resonances. An expensive acoustic guitar sounds different from a cheap one; maybe the expensive one has a harmonic composition that better suits the positions of the human hair cells.

I did not find that particularly enlightening. I recommend The Psychology of Music, Second Edition, by Diana Deutsch.

It is quite clear that hearing is far more complex and subtle than the approaches used to measure amplifiers.

Yes


If some hair cells become active while the ones before and after them are not very active, because their energy is being used by the active hair cells, then at some distance before and after those active hair cells the hair cells are normal (the energy is there). In a conical chamber, it makes sense to me that the whole arrangement can sense harmonics, or perhaps perceive the "right" harmonic composition, where the right-positioned hair cells receive the right energy.

Since it seems unlikely that the basic place theory for pitch perception can explain the extraordinary pitch resolution of the human ear, some sharpening mechanism must be operating. Several of the proposed mechanisms have the nature of lateral inhibition on the basilar membrane. One way to sharpen the pitch perception would be to bring the peak of the excitation pattern on the basilar membrane into greater relief by inhibiting the firing of those hair cells which are adjacent to the peak. Since nerve cells obey an "all-or-none" law, discharging when receiving the appropriate stimulus and then drawing energy from the metabolism to recharge before firing again, one form that lateral inhibition could take is the inhibition of the recharging process, since the cells at the peak of the response will be drawing energy from the surrounding fluid most rapidly. Inhibition of the lateral hair cells could also occur at the ganglia, with some kind of inhibitory gating which lets through only those pulses from the cells which are firing most rapidly. It is known that there are feedback signals from the brain to the hair cells, so the inhibition could occur by that means.
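The lateral-inhibition idea in that quoted passage can be illustrated with a toy model: a broad excitation peak across a row of hair cells, where each cell's output is reduced in proportion to the mean activity of its neighbors and then clipped at zero (a cell cannot fire negatively). The Gaussian profile, neighborhood radius, inhibition strength and function names below are arbitrary, hypothetical choices, not physiological values; the point is only that the peak comes out narrower.

```python
import math


def excitation(n_cells=201, center=100, sigma=20.0):
    """Broad, Gaussian-shaped excitation pattern along the membrane (assumed shape)."""
    return [math.exp(-0.5 * ((i - center) / sigma) ** 2) for i in range(n_cells)]


def lateral_inhibition(profile, radius=10, strength=0.9):
    """Subtract `strength` times the mean of the neighbors within `radius`, clip at 0."""
    out = []
    n = len(profile)
    for i, value in enumerate(profile):
        neighbors = [profile[j]
                     for j in range(max(0, i - radius), min(n, i + radius + 1))
                     if j != i]
        inhibited = value - strength * sum(neighbors) / len(neighbors)
        out.append(max(0.0, inhibited))
    return out


def width_at_half_max(profile):
    """Number of cells at or above half of the profile's peak value."""
    half = max(profile) / 2
    return sum(1 for value in profile if value >= half)


if __name__ == "__main__":
    before = excitation()
    after = lateral_inhibition(before)
    print("cells above half max, before:", width_at_half_max(before))
    print("cells above half max, after: ", width_at_half_max(after))
```

The inhibited pattern is much weaker in absolute terms, but relative to its own peak it is noticeably narrower, which is the "greater relief" the passage describes.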


lumanauw said: I have always wondered why experienced designers like yourself and JC use a fixed resistor voltage divider to bias the cascode of a JFET input differential pair. It will have more harmonic distortion. A steady cascode reference, powered by a CCS with a resistor returned to the tail (common-source) junction of the differential pair, will have less distortion, since the JFETs' Vds then stays constant with the music signal. Yet neither of you uses this. I assume you chose the divider because it sounds better, not because it measures better.

I had never read about the hearing system before, but from that website I learned that the mammalian (human) hearing system is not formed by a single diaphragm (like a microphone) but consists of 15,000-20,000 hair cells that receive sound energy. They are placed in a conical tunnel filled with fluid. The wide end of the cone receives high frequencies; the other end receives low frequencies.

If some hair cells become active while the ones before and after them are not very active, because their energy is being used by the active hair cells, then at some distance before and after those active hair cells the hair cells are normal (the energy is there). In a conical chamber, it makes sense to me that the whole arrangement can sense harmonics, or perhaps perceive the "right" harmonic composition, where the right-positioned hair cells receive the right energy.

I know that humans like harmonics; otherwise all guitars, violins and pianos would be coated with thick tar to suppress their body resonances. An expensive acoustic guitar sounds different from a cheap one; maybe the expensive one has a harmonic composition that better suits the positions of the human hair cells.

1) I assume that you have measured the differences in cascode bias systems and verified that a constant voltage on the cascode measures better. I have examples where it does not.

2) The human hearing system consists of the mechanical system described and a system of nearly unfathomable neural networks. Most of the mystery resides in the latter.

Nelson Pass said:

1) I assume that you have measured the differences in cascode bias systems and verified that a constant voltage on the cascode measures better. I have examples where it does not.

2) The human hearing system consists of the mechanical system described and a system of nearly unfathomable neural networks. Most of the mystery resides in the latter.

Hi Nelson,

I *was* just going (OT) to ask if your avatar is an image of one of the eyes of Colossus, from the movie 'Colossus: The Forbin Project'. [EDIT: Oh hell. Maybe it's Hal, from '2001...'.] But, then, reading your post, I remembered proposing, in the late 1970s, that eventually we might have medical-type scanners that could resolve individual neurons, and their interconnections, at which point a computer might be able to construct a working model of even an entire (individual's) brain (and senses, etc etc). (Now I'm realizing that setting 'the initial conditions' could be the 'bear', there; different scanner, maybe <grin>. But it might at least be cool for studying certain subsystems.) [Sorry if that's too 'off the wall'. I should have been asleep, hours ago. I actually only casually mentioned it, during a conversation with a grad student in an EE building hallway, in Dec 1978 I think. But, by the next semester, her professor had applied for a grant to study exactly that possibility.] I reckon it'll be 'a while', before that can be done. But, if and when it can be done well, the possibilities would be intriguing, to say the least.

gootee said:

Hi Nelson,

I *was* just going (OT) to ask if your avatar is an image of one of the eyes of Colossus, from the movie 'Colossus: The Forbin Project'. [EDIT: Oh hell. Maybe it's Hal, from '2001...'.] But, then, reading your post, I remembered proposing, in the late 1970s, that eventually we might have medical-type scanners that could resolve individual neurons, and their interconnections, at which point a computer might be able to construct a working model of even an entire (individual's) brain (and senses, etc etc). (Now I'm realizing that setting 'the initial conditions' could be the 'bear', there; different scanner, maybe <grin>. But it might at least be cool for studying certain subsystems.) [Sorry if that's too 'off the wall'. I should have been asleep, hours ago. I actually only casually mentioned it, during a conversation with a grad student in an EE building hallway, in Dec 1978 I think. But, by the next semester, her professor had applied for a grant to study exactly that possibility.] I reckon it'll be 'a while', before that can be done. But, if and when it can be done well, the possibilities would be intriguing, to say the least.

Tom,

The problem with that approach is that although brains have by and large similar organisations, the details can be quite different between individuals. And as if that is not enough, brains are very dynamic. They reconfigure and re-wire themselves constantly, depending on what you are doing and experiencing. Learning is a good example of how brains dynamically adapt to what you are doing. Another is after a stroke: within a few hours researchers can see shifting of activity in the brain when certain areas start to take over from damaged areas.

Suppose you have to debug an amplifier with a problem. You can identify the power supply, the output stage, the input stage and so on. But while you are probing, say, the output stage and reading your meters, the amplifier reacts by reconfiguring the VAS. So after checking the output stage, you go back to the VAS and all of a sudden your readings are different than before. What the ...! While you are trying to get a handle on that, the amp reconfigures... etc. You get the point. Now multiply this complexity by many orders of magnitude and you begin to glimpse the formidable task before us.

Jan Didden

janneman said:

Tom,

The problem with that approach is that although brains have by and large similar organisations, the details can be quite different between individuals. And as if that is not enough, brains are very dynamic. They reconfigure and re-wire themselves constantly, depending on what you are doing and experiencing. Learning is a good example of how brains dynamically adapt to what you are doing. Another is after a stroke: within a few hours researchers can see shifting of activity in the brain when certain areas start to take over from damaged areas.

Suppose you have to debug an amplifier with a problem. You can identify the power supply, the output stage, the input stage and so on. But while you are probing, say, the output stage and reading your meters, the amplifier reacts by reconfiguring the VAS. So after checking the output stage, you go back to the VAS and all of a sudden your readings are different than before. What the ...! While you are trying to get a handle on that, the amp reconfigures... etc. You get the point. Now multiply this complexity by many orders of magnitude and you begin to glimpse the formidable task before us.

Jan Didden

Thanks, Jan. I understand that much. I'm just glad you didn't think I was crazy for even thinking about it. ;-)

In some of the types of cases you mentioned, a 'proper' model, if attainable, might have the same behavior, e.g. learning, and re-wiring itself, et al. Today's relatively-simple 'neural network' models already do that (although, I realize that they are defined completely differently, and aren't really comparable, even in that sense).

The myriad details of a particular model would presumably come from the actual scan of an individual brain, and would not just have a 'similar organization'. It would be more-or-less an exact structural/neural model, from the time of the scan at least.

But, yes, I was only speaking 'off the cuff'. Certainly, for now at least, there are many seemingly-insurmountable problems, with this approach. Yet, it is still tantalizing food for thought.

Just now, I'm thinking that even doing it on a much smaller scale, maybe only for some of the 'dumber' subsystem areas of brains, even if the resulting models remained static, might produce some practical artificial systems of significant value, possibly with applications in things like sensor or control systems, or other areas. Of course, with similar and maybe only slightly less intractable problems, I'm afraid that even that will have to be in the unforeseeable future.

Yes, it is fascinating and certainly food for thought. And a lot of research is being done and progress is being made. We understand how the brain functions much better than even 10 years ago; the opinion that the brain is 'just like a computer, only more complex' is heard less and less.

But it seems it suffers from the onion syndrome: Whenever a skin is peeled back to take a look at the inside of the onion, what we find is - another onion.

Jan Didden


Droste effect


scott wurcer said:

Is this where "feedback goes 'round and 'round" came from? The veritable sonic Droste, indeed. Martin IIRC did some interesting stuff when he mounted miniature accelerometers on tone arms.

Hi Scott,

I too was puzzled by 'Droste'. I never thought that the fame of our Dutch cocoa nurse had crossed the ocean.

Cheers, Edmond.

PS

To all,

It's the open-loop gain-bandwidth product that matters, not just the (-3 dB) corner frequency. So loading the VAS output with a resistor, for example, is pointless: although it does increase the open-loop bandwidth, it only degrades the performance.
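That point can be checked with the single-pole approximation |A(f)| = A0/sqrt(1 + (f/fp)^2). For a Miller-compensated VAS the gain-bandwidth product GBW = A0·fp is set by the input-stage transconductance and the compensation capacitor, so a load resistor on the VAS lowers A0 and raises fp = GBW/A0 by the same factor. The 5 MHz GBW and the 100 dB vs 60 dB DC gains below are assumed values for illustration only.

```python
import math


def open_loop_gain(f, gbw, a0):
    """|A(jf)| of a single-pole amplifier with DC gain a0 and gain-bandwidth gbw (Hz)."""
    fp = gbw / a0                      # -3 dB corner moves up as a0 drops
    return a0 / math.sqrt(1 + (f / fp) ** 2)


def db(x):
    """Voltage ratio to decibels."""
    return 20 * math.log10(x)


if __name__ == "__main__":
    gbw = 5e6                          # Hz, unchanged by the VAS load (assumed)
    unloaded_a0, loaded_a0 = 1e5, 1e3  # 100 dB vs 60 dB DC gain (assumed)
    print(f"corner: {gbw / unloaded_a0:.0f} Hz unloaded, {gbw / loaded_a0:.0f} Hz loaded")
    for f in (20.0, 1e3, 20e3):
        print(f"{f:>7.0f} Hz: unloaded {db(open_loop_gain(f, gbw, unloaded_a0)):5.1f} dB, "
              f"loaded {db(open_loop_gain(f, gbw, loaded_a0)):5.1f} dB")
```

In this model, raising the corner frequency never adds gain at any frequency: it removes gain below the new corner and leaves the gain well above it nearly unchanged.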

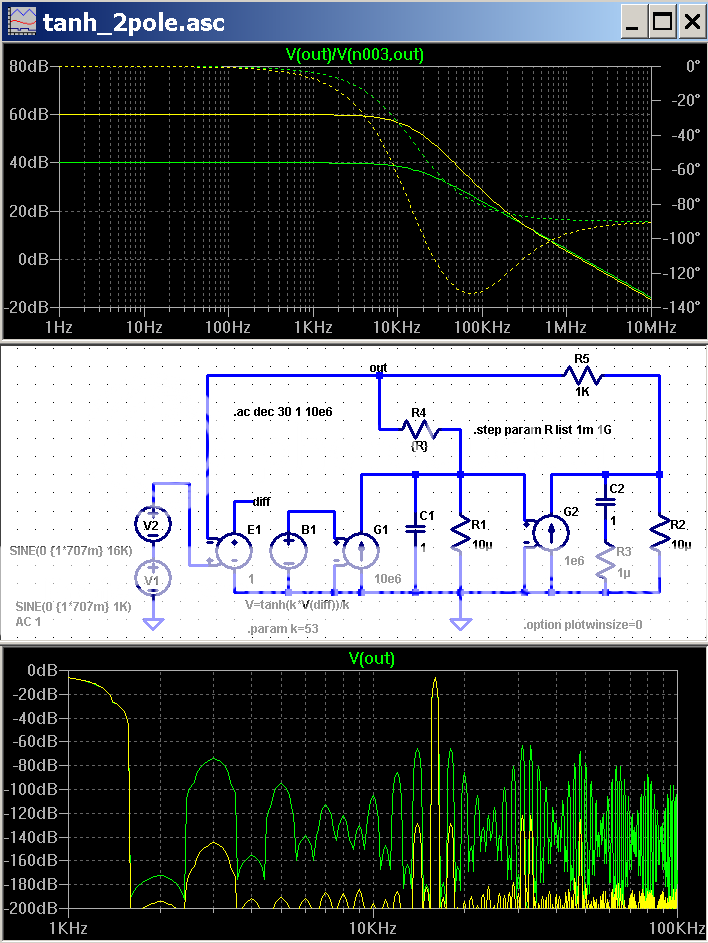

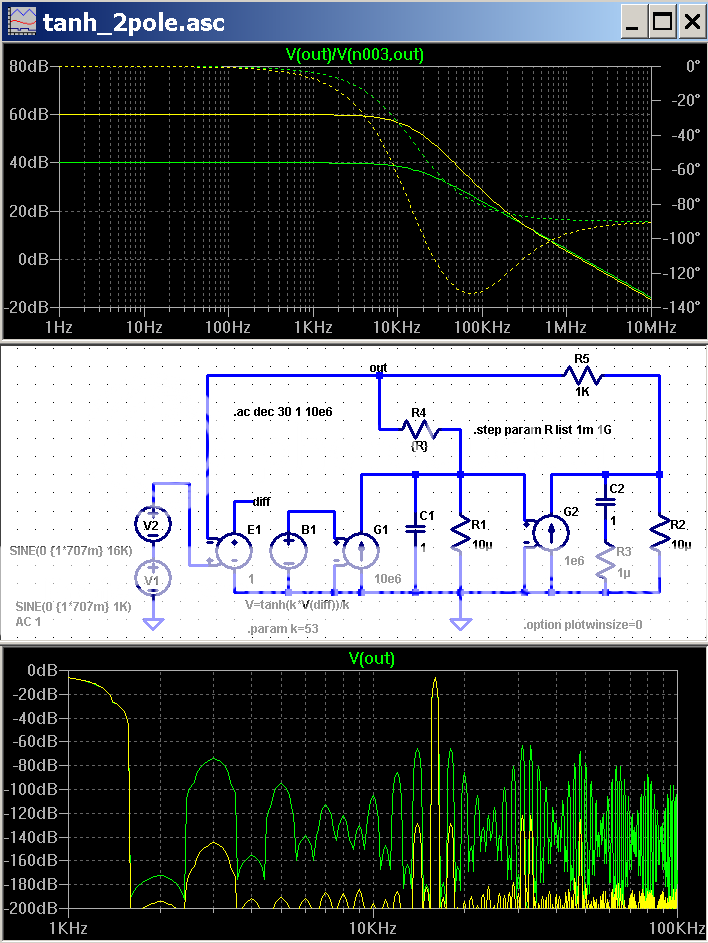

I think this sim is an OK "sandbox" for playing with tanh input distortion and loop gain (tanh = idealized BJT diff pair gm distortion).

G1 is a single-pole gain stage with a 10 Mrad/s unity-gain frequency, call it 1.6 MHz.

The loop gain of the G1 stage is 40 dB, giving a loop-gain corner of ~16 kHz.

The E1 VCVS takes the input and produces a single-ended difference signal, which is then distorted in the B1 source.

Parameter k adjusts the tanh scale factor while keeping the slope at 0 V constant at 1.

I hand-trimmed k to give -40 dB HD3 at 16 kHz with a 1.41 V input to the loop (0 dB in LTspice), with only the single-pole G1 loop gain.

Then IMD is probed with 1 kHz and 16 kHz tones at 1:1, summing to the same 1.41 V peak that gives 1% distortion with the single-pole gain.

R4/R5 select between the single-pole response of G1 and a two-pole response with the added 20 dB gain of G2.

The green traces are the single-pole results; the yellow traces are the two-pole results.

Top: loop gain.

Bottom: the huge distortion reduction that comes from simply adding 20 dB more gain.
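A stripped-down, memoryless version of that sandbox can be run without a simulator: the error signal passes through the unity-slope nonlinearity g(e) = tanh(k·e)/k and a flat gain A, with unity feedback, and HD3 is read from a single-period DFT. Unlike the LTspice model, this assumes frequency-independent gain (no integrator, no second pole), and the values of k, A and the drive level, as well as the function names, are hypothetical choices; it is only meant to show the same trend, that 20 dB more loop gain cuts the input-stage distortion sharply. The G1 corner arithmetic from the post is checked at the top.

```python
import math

# Corner arithmetic from the post: 10 Mrad/s unity gain, 40 dB loop gain.
F_UNITY = 10e6 / (2 * math.pi)          # about 1.59 MHz
F_CORNER = F_UNITY / 10 ** (40 / 20)    # about 15.9 kHz


def g(e, k):
    """tanh error nonlinearity scaled so its slope at e = 0 is exactly 1."""
    return math.tanh(k * e) / k


def solve_loop(x, gain, k, iterations=100):
    """Solve y = gain * g(x - y, k) by bisection (unity feedback, memoryless)."""
    lo, hi = min(0.0, x), max(0.0, x)   # the root always lies between 0 and x
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if mid - gain * g(x - mid, k) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def hd3(amplitude, gain, k, n=256):
    """Ratio of 3rd-harmonic to fundamental amplitude for a sine drive."""
    a1 = a3 = 0.0
    for i in range(n):
        phase = 2 * math.pi * i / n
        y = solve_loop(amplitude * math.sin(phase), gain, k)
        a1 += y * math.sin(phase)
        a3 += y * math.sin(3 * phase)
    return abs(a3) / abs(a1)            # the common 2/n DFT scale factor cancels


if __name__ == "__main__":
    print(f"G1 unity gain ~{F_UNITY / 1e6:.2f} MHz, loop-gain corner ~{F_CORNER / 1e3:.1f} kHz")
    k, amp = 50.0, 1.41                 # assumed nonlinearity scale and drive
    for gain in (100.0, 1000.0):        # 40 dB vs 60 dB (20 dB more)
        print(f"gain {20 * math.log10(gain):.0f} dB: HD3 {20 * math.log10(hd3(amp, gain, k)):.1f} dB")
```

Bisection is used because the loop equation is monotonic in y, so it converges without needing a derivative of the nonlinearity.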


In the future, artificial intelligences are likely to be organized in such a way that, as Gibson foretells it, they give great results but the details of their process and motivations will be obscure.

In the meantime, we seem to understand that the brain assembles a picture of audio reality from all the resources it has. The color of the front panel LED is one of those.


Nelson Pass said: In the future, artificial intelligences are likely to be organized in such a way that, as Gibson foretells it, they give great results but the details of their process and motivations will be obscure.

In the meantime, we seem to understand that the brain assembles a picture of audio reality from all the resources it has. The color of the front panel LED is one of those.

Amen.

Jan Didden

PMA said: That is why experienced listeners close their eyes when assessing a product. We do not need to hear the shiny surface and the color of the LEDs.

Most people, experienced or not, close their eyes when asked to concentrate on the sound. The subconscious automatically, as a reflex, reverts to blind testing, even while that stubborn ego is still resisting the idea.

Don't you love it when it all falls together....

Jan Didden

Bob, I have a feeling that your e-mail isn't working so well, as I've tried contacting you to ask some questions about your speaker designs. Perhaps the message will get through, but you should consider obfuscating the e-mail addresses on your website to keep people from harvesting valid e-mail addresses to send spam to...


Some time ago I had a conversation with a recording engineer. He talked about the difficulty with microphones (miking technique): microphones are not perfect yet. To get a good recording, each instrument gets its own microphone (a drum set will have many microphones).

In recording studios, the raw recording never sounds like the real instrument; the most difficult is a grand piano.

The piano sound we hear on CD/vinyl is not the direct sound from the original take; it has already passed through many processors so that the sound on the CD/vinyl is like what we are used to hearing, adjusted/processed to resemble the sound of the real instrument when we hear it directly.

Knowing this, what about pursuing true musical reproduction when the source (CD/vinyl) has a story like that? I mean, we can make a perfect reproduction of that CD/vinyl's actual content, but never the real live sound, since it is not on the CD/vinyl in the first place.


lumanauw said: Some time ago I had a conversation with a recording engineer. He talked about the difficulty with microphones (miking technique): microphones are not perfect yet. To get a good recording, each instrument gets its own microphone (a drum set will have many microphones).

In recording studios, the raw recording never sounds like the real instrument; the most difficult is a grand piano.

The piano sound we hear on CD/vinyl is not the direct sound from the original take; it has already passed through many processors so that the sound on the CD/vinyl is like what we are used to hearing, adjusted/processed to resemble the sound of the real instrument when we hear it directly.

Knowing this, what about pursuing true musical reproduction when the source (CD/vinyl) has a story like that? I mean, we can make a perfect reproduction of that CD/vinyl's actual content, but never the real live sound, since it is not on the CD/vinyl in the first place.

David,

Good point. As I argued before, we should stop telling each other that we try to 'emulate' a live concert with our systems. People who still tell us that we don't like their amplifiers or speakers because "you don't know what a live concert sounds like" haven't got it yet.

Jan Didden

janneman said:

....we should stop telling each other that we try to 'emulate' a live concert with our systems...

Jan, this is another example highlighting why there is little hope of consensus regarding amplifier (and reproduction chain, for that matter) performance.

As with any other manifestation of art, its appreciation hinges on individual preferences, and what is good for one may be hideous to another; nothing new, certainly.

Because of my musical tastes, I tend to prefer as much transparency as possible in the reproduction with reference to the live thing, something that does not even make sense with, for example, electroacoustic music.

So what I look for is not necessarily shared by others.

Rodolfo

ingrast said:

Jan, this is another example highlighting why there is little hope of consensus regarding amplifier (and reproduction chain, for that matter) performance.

As with any other manifestation of art, its appreciation hinges on individual preferences, and what is good for one may be hideous to another; nothing new, certainly.

Because of my musical tastes, I tend to prefer as much transparency as possible in the reproduction with reference to the live thing, something that does not even make sense with, for example, electroacoustic music.

So what I look for is not necessarily shared by others.

Rodolfo

Rodolfo, I'm not sure whether we agree or disagree, but I didn't mean that it is somehow wrong in itself to try to 'emulate' a live performance; my point was that it is futile even to try. In a sense, we can even do better.

Jan Didden

luvdunhill said: Bob, I have a feeling that your e-mail isn't working so well, as I've tried contacting you to ask some questions about your speaker designs. Perhaps the message will get through, but you should consider obfuscating the e-mail addresses on your website to keep people from harvesting valid e-mail addresses to send spam to...

Thanks for the heads-up. I think the email is OK now. If that one does not work, contact me at audiohead7 at yahoo dot com. I'll be glad to try to answer any questions that you have.

Cheers,

Bob

If you can reproduce the 'essence' of the live performance, then you have been successful. However, MP3 and many other sources usually just give the 'overview' of the performance. If you have the memory of real events that are similar, it might be possible to factor these into what you are hearing. Many professional musicians do this.
