The good news is that I seem to have most everything working as I had envisioned. I tested out the input mixing, multiple channel streaming (well, at least more than 2 channels!), the LADSPA DSP plugins, etc. on multiple hardware platforms including the TinkerBoard and a Pi 3 running Raspbian Stretch. These are generally running GStreamer version 1.10.4 or later. I will continue to do more testing and listening but so far it's looking very positive.

With that in the rear-view mirror I can move ahead with some ideas that would improve the flexibility of the program. Currently mixing is only done on the server side, and the LADSPA DSP crossover filtering is only done on the client side. It would be beneficial if all capabilities were available on both the server and client. Maybe you want to apply the DSP filtering on the server and then just stream the audio right to the client's DAC? Or perhaps you want to implement a DSP crossover on the "server" (where the audio source is located) without any streaming. I will figure out how to implement this during the next few days.

Check back here for additional updates and progress reports.

Quick update - have been making progress on merging channel mixing into the client side. After taking a hard look at the code and trying to figure out how to implement all the various ideas I came up with I decided to simplify things a little. To do this I have nixed processing of the audio on the server - instead audio inputs are streamed directly to clients "as-is". On the client side audio channels can be processed and routed using mixers, tees, and LADSPA-DSP.
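
To make the client-side routing idea concrete, here is a minimal sketch of how a script could assemble a gst-launch pipeline description from a source, a tee, and one filtered branch per driver. It is not the GSASysCon code. The function names and the `ladspa-example-so-*` element names are hypothetical placeholders (real LADSPA element names depend on the plugins installed, and their filter properties are omitted), and `audiotestsrc` stands in for the real stream receiver. It also assumes a single-channel signal; a stereo stream would additionally need channel splitting and recombining, which is left out here.

```shell
# Build one output branch: the queue decouples the branch from the tee,
# then the filter runs, then the audio goes to an ALSA device.
# $1 = filter element (placeholder name), $2 = ALSA device name
branch() (
    printf 'queue ! audioconvert ! %s ! audioconvert ! alsasink device=%s' "$1" "$2"
)

# Build the full pipeline description: one source feeding a tee,
# with one filtered branch per driver.
# $1 = source, $2/$3 = low-pass filter and device, $4/$5 = high-pass filter and device
client_pipeline() (
    printf '%s ! tee name=split split. ! %s split. ! %s' \
        "$1" "$(branch "$2" "$3")" "$(branch "$4" "$5")"
)

# Print an example description. The filter element names are hypothetical.
client_pipeline 'audiotestsrc' \
    'ladspa-example-so-lowpass' 'hw:1' \
    'ladspa-example-so-highpass' 'hw:2'
echo
```

The printed string is what would be handed to gst-launch-1.0. Keeping the string building in small functions makes it easy to check the generated pipeline before anything is actually played.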

I have the concept figured out and snippets of code written. I now will begin the process of integrating this code into the existing larger application followed by debugging and testing.

Charlie, when you started this it was a "bash script". Is that still the case, or is there lower-level code or kernel mods?

I never mod the kernel. It's still run via a 1300+ line bash script. It's a real, bona fide application.

The previous version is still available for download here:

GSASysCon - A bash-script-based streaming audio system controller

UPDATE - I see light at the end of the programming tunnel!

After a lot of planning, and coding, and debugging over the past week I am happy to say the code is finally coming together. The hurdle of getting the script to function and to produce the correct gstreamer pipeline now seems to be overcome. There is still some ground to cover while the code is further tested, audio output listened to, things are cleaned up, and so on. This will probably take another couple of weeks on account of some travel plans, but so far this is very encouraging.

This new upgraded version of GSASysCon will replace my go-to audio filtering/crossover/processing tool, Ecasound. It can also serve as a software-based replacement for hardware IIR DSPs like the miniDSP, but with additional features like streaming, multiple clients, and centralization of code and crossover commands on the server-side. Hopefully this will gain some interest from the DIY loudspeaker community. Spread the word!

... and just when I started using ecasound, you've obsoleted it.

What's providing the LADSPA host for the plugins? And can other plugins be used?

P.S. Thanks Charlie for the many previous troubleshooting posts on getting ecasound running. Too many to reference.

Well, ecasound isn't going to be obsolete. But gstreamer IS a ladspa host, so I can fold the ladspa stuff into the same application. Gstreamer has many features that ecasound does not, e.g. dithering, high quality sample rate and format conversion, etc.
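
As a rough illustration of the dithering and conversion features, the sketch below builds the tail end of a pipeline that resamples and then converts to a target format with dither. The function name is made up for this example. It relies on the `quality` property of `audioresample` (0 to 10) and the `dithering` property of `audioconvert`, and the settings chosen are just one plausible combination, not a recommendation from the author. The LADSPA elements available on a given machine can be listed with `gst-inspect-1.0 ladspa`.

```shell
# Build a conversion chain: resample at the highest quality setting, then
# convert the sample format with TPDF dither, and pin the result with a caps filter.
# $1 = target sample rate in Hz, $2 = target sample format (e.g. S16LE, S24LE)
convert_chain() (
    printf 'audioresample quality=10 ! audioconvert dithering=tpdf ! audio/x-raw,rate=%s,format=%s' "$1" "$2"
)

# Example: deliver 16-bit, 48 kHz audio to whatever element follows.
convert_chain 48000 S16LE
echo
```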

... more specifically, obsolete w.r.t your future developments as you've replaced it

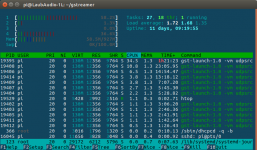

What's the CPU load and memory usage for this new approach? Do you have a htop snapshot?

I'm running a 2.1 system on a Pi 3 at the moment, as a streaming client, receiving 2 channels of 16/48 PCM audio. The crossover consists of 5 ACDf ladspa filters and output is into two stereo DACs (DACs are running synchronized). TOP shows 42% CPU and 1.3% memory usage. Most of this is gstreamer overhead, not ladspa CPU demand. You could use 50 ladspa filters if you wanted. Since gstreamer is multi-threaded (unlike ecasound) the application should be able to smoothly scale up to the full potential of all cores on the system.

The "server" system streaming the audio is a 4-core BayTrail (J1900) based fanless linux box. That is using 5.3% CPU and 0.4% memory. The server could simultaneously stream to many systems, stream higher sample rates, etc. because it is just loafing along.

What I like about the "software DSP" approach is that the choice of hardware on which to deploy the system can be scaled to your needs. A multiway loudspeaker crossover fed by a 16/48 stream can easily be done on a Pi 3. As you increase the stream bitrate and sample rate, at some point the USB bus on the Pi runs out of bandwidth (I am using both a USB WiFi dongle and two USB DACs on this system). If you want 16 channels of 24/192, the Pi is probably not going to cut it. But I have successfully run a system with 10 output channels of 32-bit audio at 48kHz (via USB) on a Pi! Amazing that works, but it does. So don't think you need any fancy "computer power" to do this stuff.
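
To put numbers on those three cases, the raw PCM payload rate is simply channels times bits per sample times sample rate. The helper below (a made-up name, just for illustration) does that arithmetic. It ignores USB and network protocol overhead, and it assumes samples are sent at their nominal width, so real bus traffic will be somewhat higher.

```shell
# Raw PCM payload rate in bits per second.
# $1 = channels, $2 = bits per sample, $3 = sample rate in Hz
pcm_bitrate() (
    echo $(( $1 * $2 * $3 ))
)

pcm_bitrate 2 16 48000      # 2 channels of 16/48:    1536000 bit/s
pcm_bitrate 10 32 48000     # 10 channels of 32/48:  15360000 bit/s
pcm_bitrate 16 24 192000    # 16 channels of 24/192: 73728000 bit/s
```

So the 16-channel 24/192 case carries roughly 48 times the payload of the 2-channel 16/48 stream, and about 5 times that of the 10-channel setup that did work.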

What's quite flexible is the choice of DAC or DACs. I can use multiple stereo DACs that are relatively inexpensive (e.g. $50) or I can use high end DACs ($2000 each) and the choice is up to me. I can use my onboard audio codec, a multichannel pro-audio recording interface or even ADAT with a USB adapter. I could start out with cheap hardware to get a feel for the system, and then upgrade to more expensive DACs later. Or even upgrade the computer for server or clients - the software is completely portable across Linux systems.

That must be 42% of a core, not the entire CPU. "Top" always confuses me with its irix mode ([shift]) that reports either total or core. I prefer "htop" and its semi-graphical display.

I'm currently using a DAC hat, to check the capacity of a rpi3B, running ecasound for eq and brutefir for phase correction. Next step may be an external DAC.

Since you mentioned BruteFIR... keep in mind that most every LADSPA plugin I have ever seen implements IIR filters. What piques my interest is that gstreamer has a native FIR filter element. In theory one could supply the kernel to it - you would then have the ability to implement both IIR and FIR under gstreamer, in the same pipeline, the same program. I assume (but would need to double check) that the gstreamer FIR element uses FFTW, so performance might be pretty good.

Unfortunately I tried to play around with the FIR filter element (called audiofirfilter) and I could not get it to work under the gstreamer version I was using (1.10). The problem is that you need to supply the kernel as an array. This is easy when writing a full-fledged gstreamer application in C++ or via the Python bindings, but not so easy via gst-launch, which is how I am invoking gstreamer. This is a capability that I would like to have as part of GSASysCon, so I will do some more testing again in the near future.

Perhaps looking at Python would make your project easier in the long run. Complicated bash scripts are difficult to maintain (workarounds due to the limited language, no debugging, etc.). Today I would not use bash for a project of this scale.

Hats off to what you have achieved.

OK thanks, that looks like about 40% of the overall CPU. I see 2 idle cores waiting for a convolution engine.

Splitting hairs here, but I see more like 25% of the total CPU judging by the bar graphs at the top. The first core is running at 58% and the last core at 37%. Taken together that's about 1 core out of 4, or 25% of total. But whether it's 25% or 40%, there are still plenty of untapped resources!
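
The arithmetic behind that estimate, as a small sketch: add up the per-core loads and divide by the number of cores. The function name is made up for illustration, and the two idle cores are assumed to contribute roughly zero, so only the busy cores are passed in.

```shell
# Share of the whole CPU, in percent (truncated to an integer).
# $1 = number of cores, remaining arguments = load of each busy core in percent
total_cpu_percent() (
    cores=$1
    shift
    sum=0
    for load in "$@"; do
        sum=$(( sum + load ))
    done
    echo $(( sum / cores ))
)

total_cpu_percent 4 58 37    # (58 + 37) / 4 = 23
```

That lands a little under the "about 25%" eyeballed from the bar graphs, and well below 40%.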

Thanks! I have to admit that the current scope of this project was never planned. One day I just started experimenting with bash and gstreamer - I was just trying to get something working to see what it could do. The code slowly evolved over time until it became what it is today (1500+ lines).

If I were to start over from scratch I would probably do it in C++. The problem is that you need to write about 10 times as much code compared to my shell script approach to accomplish the same thing! Also, working with bash makes interfacing with the O/S very easy, or at least that's how I see it. Maybe I just don't have enough experience writing C++ applications that do that kind of thing. Also, the application does not make use of any of gstreamer's dynamic pipeline capabilities, where you can turn on and off inputs, change element properties in real time, etc. Gst-launch is perfectly suited for static pipelines - the pipeline launches to stream/play audio and the only thing to do is to shut it down later.
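
The "launch it, then shut it down later" pattern for a static pipeline can be sketched in a few lines of shell. This is only an illustration of the idea, not how GSASysCon does it: the function names and the PID file approach are assumptions made for this example.

```shell
# Start a command in the background and remember its process ID.
# $1 = PID file, remaining arguments = the command to run
start_pipeline() {
    pidfile=$1
    shift
    "$@" &
    echo $! > "$pidfile"
}

# Stop a previously started command and remove its PID file.
# $1 = PID file
stop_pipeline() {
    pidfile=$1
    # Plain kill sends SIGTERM. SIGINT is avoided because background jobs
    # started from a non-interactive shell ignore it.
    kill "$(cat "$pidfile")" 2>/dev/null
    rm -f "$pidfile"
}

# Usage (commented out so the functions can be sourced without GStreamer installed):
#   start_pipeline /tmp/client.pid gst-launch-1.0 audiotestsrc ! fakesink
#   ...audio plays until...
#   stop_pipeline /tmp/client.pid
```

Because nothing in the pipeline changes while it runs, the controlling script only needs to track which process to stop, which is part of why a shell script is enough for this job.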
