I have been streaming audio throughout my house over my WiFi system, and I thought I would share my experiences on this topic: what I have and have not been able to do. Hopefully others will chime in with their own experiences. Much of this effort has been driven by my need for well-synchronized playback across multiple clients, with the clients within 500 microseconds of each other, and with the best fidelity possible over wireless home networking using standard, inexpensive hardware and free or open source software.

My goal has been to develop a system with which I can play back multiple types of audio sources, controlled using some portable interface, and directed to one of several loudspeaker systems. The loudspeaker system(s) may have one or more receiving "clients" (e.g. one in the right speaker, another in the left speaker, and possibly one or more subwoofers each with a client), and if there are multiple clients in a loudspeaker system these must all be playing "in sync".

I have been able to do pretty much all of that using VLC, the videoLAN player/software that is available for a wide range of operating systems. I have a small BayTrail powered PC that is used as the music server, running Ubuntu. Clients are typically Raspberry Pi Model 2 units. I use an Android tablet to control the playback. The tablet runs Xwindows (XDSL) which is used to host a VLC player window, the player running on the server. I can bring the tablet wherever in my home and control the source that is being played and the playback volume. The WiFi consists of a very inexpensive router and cheap (e.g. WiPi) wireless dongles on the clients. The music server, however, is connected to the router via ethernet cable.

The following summarizes what I can do with VLC:

- Play streams, local files, CDs, etc using the VLC GUI as a player via the Xwindows interface on the tablet.

- Direct the output of the "player" VLC instance to an ALSA loopback channel.

- Stream audio across my LAN using a command line version of VLC, accepting audio from the loopback.

- I have successfully streamed audio using the RTSP and HTTP protocols.

- When multiple synchronized clients will receive the stream I use RTSP.

- If I want to stream FLAC I use HTTP streaming. The playback is not synchronized across multiple clients, but this allows me to use the lossless codec.

- When I need to use RTSP, I use the AAC codec. This provides the most transparent compressed audio and can be streamed at high sample rates if desired.

- A VLC instance is used on each client to receive the streaming audio and direct it to a DAC or pass it on for other processing (e.g. software DSP crossover).

Until recently I was using MP3 encoding with RTSP (mp3lame encoder). After installing (separately) the AAC codec I was able to use it in VLC and I feel that it is superior (this is supported by measurements done by Stereophile a few years ago). I plan to stick with the AAC codec in the future, since it can be streamed under RTSP and this satisfies my need for synchronized streaming.

I am still experimenting with RTSP to maximize the synchrony of clients. For the large majority of the time playback is very well sync'd but I would like this to be 100% and not 99% of the time. I am still experimenting with some VLC settings that influence this somewhat, and I can post the details at a later date with more detailed info if others want to try and duplicate what I am doing.

For now I thought that this would be a good way to focus the streaming audio discussion in one thread that provides a forum on the topic.

Hi Charlie,

Is it possible to use SIP (too much compression?) instead of RTSP for distribution to multiple clients?

Possibly. SIP also uses RTP which contains the timestamps that are used to achieve synchronous playback. But I don't have a way to test SIP, and it seems similar to RTSP, which I am using now.

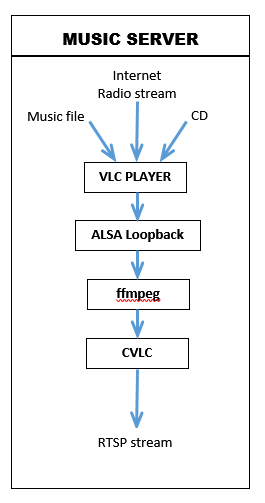

I've put together a graphical depiction of how my streaming audio system works (see attachments for reference). Here are some details...

PLAYING AUDIO AND STREAMING IT ON THE LAN

For all audio "sources" that I want to play I use an instance of VLC running on the server. This is shown as "VLC player" in the music server schematic. I use an x-windows application over WiFi to control this VLC instance from a 10" tablet, which is easy to bring to the listening location. The output of the VLC player is directed to a local ALSA loopback (the music server is running Ubuntu, any Linux distro will work in the same way). Any "music player" software can be used in place of VLC here, as long as it can output to the ALSA loopback.

FFmpeg accepts the other "end" of the loopback "pipe" as input. FFmpeg transcodes the audio stream to 320k AAC at 48kHz and sends it as an RTP stream to a local port on the computer. The local streaming is used just like the loopback and allows the RTP stream to be recovered by another local application.
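
As a rough illustration of this transcoding step, the sketch below assembles an ffmpeg command line that reads from an ALSA capture device, encodes to 320k AAC at 48kHz, and sends RTP to a port on the same machine. It is not the command actually used here: the device name `hw:Loopback,1,0` and the URL `rtp://127.0.0.1:5004` are placeholder assumptions that would need to match your own loopback and port.

```python
import shlex


def build_ffmpeg_rtp_command(alsa_device="hw:Loopback,1,0",
                             bitrate="320k",
                             sample_rate=48000,
                             rtp_url="rtp://127.0.0.1:5004"):
    """Return an ffmpeg argument list: ALSA capture -> AAC -> local RTP.

    The default device name and RTP URL are hypothetical placeholders.
    """
    return [
        "ffmpeg",
        "-f", "alsa", "-i", alsa_device,  # read the capture end of the loopback
        "-c:a", "aac", "-b:a", bitrate,   # encode to AAC at the requested bitrate
        "-ar", str(sample_rate),          # resample to one fixed output rate
        "-f", "rtp", rtp_url,             # send as RTP to a port on this machine
    ]


if __name__ == "__main__":
    # Print the command so it can be inspected before running it by hand.
    print(shlex.join(build_ffmpeg_rtp_command()))
```

Building the command as a list makes it easy to pass to `subprocess` later without shell quoting problems.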

A command line version of VLC, "cvlc", gets the RTP stream as input from the local port and re-streams it across my LAN in the RTSP protocol. After trying other ways, I have found that RTSP is well suited for playback by multiple clients and seems to work better than anything else in that regard. I still need to look into multicast RTP, which might be a better alternative.

RECEIVING STREAMING AUDIO OVER THE LAN, PROCESSING USING DSP, AND MULTICHANNEL PLAYBACK

The loudspeaker system comprises multiple playback clients (each a Raspberry Pi 2). These independently receive the streaming audio from the LAN via WiFi (802.11b/g/n adapters). Onboard, VLC (actually cvlc, since the client is headless) receives the stream and passes it on to the ecasound program via an ALSA loopback. I use ecasound as a software DSP crossover using the LADSPA plugins I wrote for this purpose. N-channel audio output is produced by ecasound, one for each driver, via USB DACs. The analog audio output from the DACs is connected to amplifiers located in the loudspeaker.

The playback from all clients must be closely synchronized. This is done by a combination of VLC software parameters for buffering and tight clock synchronization on all clients using NTP, with the music server's clock acting as the reference "time server" clock. I have found that this NTP setup works best when the music server clock is left free-running (or you could set it once per day, at 3am for instance, using sntp). The local clients query the music server clock about once a minute and adjust their clocks accordingly. With this scheme I am able to get everything synchronized to <500 microseconds (and usually <250 microseconds). By optimizing the parameters in VLC and keeping the clocks tightly sync'd, the playback is very much like a wire, except it's done using inexpensive WiFi hardware over a wide area.
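
For readers wondering how a client derives its clock correction from a single query, here is a minimal sketch of the standard NTP on-wire calculation (as described in RFC 5905). The NTP daemon does this internally; the function name and the timestamps in the example are made up for illustration.

```python
def ntp_offset_and_delay(t0, t1, t2, t3):
    """Estimate clock offset and round-trip delay from one NTP exchange.

    t0: client clock when the request was sent
    t1: server clock when the request arrived
    t2: server clock when the reply was sent
    t3: client clock when the reply arrived
    All values are in seconds.
    """
    # Positive offset means the client clock is behind the server clock.
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    # Round-trip network delay with the server's processing time removed.
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange: client 200 us behind, 1 ms each way on the network.
    offset, delay = ntp_offset_and_delay(10.0, 10.0012, 10.0013, 10.0021)
    print(f"offset = {offset * 1e6:.0f} us, delay = {delay * 1e3:.1f} ms")
```

The offset estimate assumes the outbound and return paths take equal time. Any asymmetry shows up as an error of up to half the round-trip delay, which is one reason a quiet, lightly loaded WiFi network helps keep the clients tightly aligned.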

This setup is flexible in many ways, and can work with any playback software that can direct its output to a specific ALSA device (e.g. the ALSA loopback). Anyone can set up this type of streaming audio system, and it uses inexpensive hardware and free software. Even if you do not need to serve multiple clients but just want to stream audio across your home, this will work. There is some latency involved due to the buffering used, but this is only around 750 msec, which I find tolerable.

Some quick remarks:

1. NTP isn't critical here. What matters most is a monotonic, linear clock source on the streaming PC server. See RFC 3550.

2. If timing matters, a wired Ethernet network seems the more reasonable solution, at the price of your convenience.

3. Use multicast, if your L2/L3 devices support it. Deploy QoS if that's not an isolated network.

4. RTP can stream raw audio. See RFC 3190. You can try it readily using the GStreamer plugins rtpL24pay/rtpL24depay.

Synchronized multi-device playback is not my top priority, and I'm more interested in the GStreamer world. That's why I've chosen GStreamer Data Protocol buffers instead of RTP (though I may switch to the latter some day) and wrote my own client/server apps. Otherwise your setup is similar to mine. I also use snd-aloop.
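
To illustrate remark 1: per RFC 3550 the RTP timestamp advances with the media clock (for audio, normally the sampling rate), so a receiver can recover media time from timestamps alone as long as the sender's clock is monotonic and linear. The sketch below assumes a 48kHz clock rate and handles the 32-bit wraparound; the function name is hypothetical.

```python
RTP_TS_MODULUS = 2 ** 32  # RTP timestamps are unsigned 32-bit counters


def rtp_elapsed_seconds(first_ts, current_ts, clock_rate=48000):
    """Media time elapsed between two RTP timestamps.

    clock_rate is the RTP clock rate in Hz (assumed here to be 48000).
    The subtraction is done modulo 2**32 so a counter wrap is handled.
    """
    ticks = (current_ts - first_ts) % RTP_TS_MODULUS
    return ticks / clock_rate


if __name__ == "__main__":
    # One second of 48 kHz audio, with the counter wrapping in between.
    print(rtp_elapsed_seconds(RTP_TS_MODULUS - 24000, 24000))
```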

I tried some of your suggestions. I changed over to RTP multicast streaming; this is only over my local 192.168.x.x network using WiFi. An Ethernet (wired) connection is not possible for my application. I turned off NTP and waited for an hour. The playback was stuttering and badly de-synchronized (by 20 milliseconds or more). I confirmed this by restarting NTP and checking the time difference with the audio server's clock. I then waited another hour to give the client clocks some time to settle. Synchrony was much improved, but the playback was constantly re-buffering (every few seconds). My guess is that some buffer sizes are not set correctly, or that the PTS values are being set by ffmpeg and by the time the packets get to the clients they have almost expired. This is much, much worse than what I have been able to achieve with RTSP directly: combined with NTP and some carefully chosen values for the DTS/PTS and buffer sizes, RTSP gives me clean synchronized streaming with no problems.

I would prefer to use RTP multicast because it is only a single outgoing stream, but I have never been able to get it to work without the types of problems I just described. Any help in this regard would be greatly appreciated.

A little clarification and update on the above post:

I'm actually doing UNICAST RTP streaming. Currently I'm only using RTSP to distribute the SDP file for the AAC-encoded stream, which the clients need in order to know where to look for the stream and what it consists of. When I used MP3 encoding this was all in the header, but now that I am using AAC I need to specify this explicitly.

On the music server, cvlc is run in a shell script with several command line options to tell it what to do, where to send the stream, etc. This includes destination options, e.g. dst=IP, port=xxxx. When the IP is set to my music server, everything works fine - this is UNICAST streaming from the server. However, if I specify a MULTICAST IP address, e.g. 239.255.1.1, port 5004, the clients experience regular rebuffering and the synchronicity of the audio is poor.

I'm not really sure why this is the case. Could the router be at fault? Does multicast streaming quality vary with hardware or is it only a case of possible/not-possible?

Any ideas on what I can try? I honestly would prefer to use multicast streaming, but not if these issues can't be eliminated.

Adding to the above thoughts... I just read that multicast RTP "doesn't work well over WiFi". Since WiFi is a must for me, perhaps this is the problem with the multicast streams that is solved when I use unicasted RTP?

Did you try powerline Ethernet adaptors? They are really cheap these days and sold in pairs! You would keep WiFi for the tablet only!

An odd idea: is it possible to extend the buffer size on the Pi clients' network receiver and have all the Pis play back with a delay set up in Ubuntu, to get rid of the 1% problem you have with WiFi? Or maybe the bigger buffer would introduce this delay by itself, if you don't care about the lag behind the server as long as all the clients play together. So no QoS, but a "mechanical time" patch to play asynchronously from the source. I don't know whether a network delay between your different client sockets could be audible (i.e. some buffers filled before others).

QoS should not be a problem on a small local home network for music (btw it's not ATM, and in a house distances are short and congestion is low)? Really just my two cents; maybe I don't understand the real problem behind the 1% you described!

Hi Charlie,

Since wireless lan is shared PHY and not capable of being switched like wired lan, IP Multicast RTP ends up as a broadcast on wireless lan and causes packet flooding.

Using multi-unicast is perfectly fine for applications where the number of endpoints isn't too large.

Regards,

Tim

Hi Tim,

That's definitely good news for me! When you say "the number of endpoints is not too large", what order of magnitude are you talking about? 2, 5, 10, 20, or more? If you or anyone has practical experience in this regard, please share your thoughts.

Hi Charlie,

Number of multi-unicast RTP streams that a well engineered WLAN could sustain is probably something like:

( WLAN total bandwidth / ( stream bandwidth X num streams ) ) / throughput_fudge_factor
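
One way to read the expression above is as a headroom ratio: a result of 1 or more means the given number of streams should fit. Rearranged, it also gives a rough upper bound on the stream count. The sketch below shows both. The 20 Mbit/s usable WLAN throughput and the fudge factor of 2 are illustrative assumptions, not measurements.

```python
import math


def headroom_ratio(wlan_bps, stream_bps, num_streams, fudge_factor):
    """Evaluate the rule of thumb as written; >= 1 suggests the streams fit."""
    return (wlan_bps / (stream_bps * num_streams)) / fudge_factor


def max_streams(wlan_bps, stream_bps, fudge_factor):
    """Largest stream count for which the headroom ratio stays >= 1."""
    return math.floor(wlan_bps / (stream_bps * fudge_factor))


if __name__ == "__main__":
    wlan = 20_000_000  # assumed usable WLAN throughput, bits per second
    aac = 320_000      # 320k AAC stream, bits per second
    print(headroom_ratio(wlan, aac, 6, 2.0))
    print(max_streams(wlan, aac, 2.0))
```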

WLAN latency is also important when the streams need to arrive at the endpoints in sync with one another. The latency has to be consistently low enough that variations in RTP/UDP packet delivery times won't be detected at the ear. This is less important when using software such as shairport-sync that can detect and correct for timing variances, but IME time correction is not really necessary if you are using UDP and the WLAN is solid.

Packet loss can also be an issue, since RTP is encapsulated in UDP, which has low overhead but does not guarantee delivery, unlike the relatively high-overhead TCP protocol, which does. If the WLAN or any of the endpoints have issues that result in significant packet loss, audible glitches will result.
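
Because UDP gives no delivery guarantee, a receiver can only notice loss from gaps in the 16-bit RTP sequence number carried in every packet header (RFC 3550). The sketch below pulls the sequence number out of a raw packet and counts missing packets across a capture. It is a simplified illustration with hypothetical function names: late or duplicate packets are ignored rather than subtracted from the loss count.

```python
import struct


def rtp_sequence_number(packet: bytes) -> int:
    """Extract the 16-bit sequence number from a raw RTP packet."""
    if len(packet) < 12:
        raise ValueError("an RTP header is at least 12 bytes long")
    # Byte 0: version/padding/extension/CSRC count, byte 1: marker/payload type,
    # bytes 2-3: sequence number in network byte order.
    _flags, _marker_pt, seq = struct.unpack("!BBH", packet[:4])
    return seq


def count_lost_packets(sequence_numbers):
    """Count packets missing from a stream of received sequence numbers."""
    lost = 0
    previous = None
    for seq in sequence_numbers:
        if previous is None:
            previous = seq
            continue
        gap = (seq - previous) % 65536  # modulo handles the 16-bit wraparound
        if gap == 0 or gap >= 32768:
            continue  # duplicate or late packet: ignore in this sketch
        lost += gap - 1  # a forward jump of N means N - 1 packets never arrived
        previous = seq
    return lost


if __name__ == "__main__":
    print(count_lost_packets([65534, 65535, 0, 1, 4]))
```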

Finally, don't turn on any magnetrons when streaming audio over WLAN 🙂

I've used VLC in the past for to-the-ear/eye sync of music and HDTV w/5.1 audio using multi-unicast RTP on both LAN and WLAN. As I recall, 6 endpoints for CD audio on 802.11N 5GHz band resulted in a few percent avg bandwidth utilization. Something like that.

Regards,

Tim

Hi Tim,

Thanks for the thoughts and the practical example at the end. Very much appreciated.

WiFi seems to work well for the most part. Recently we had a holiday party at our home with about 40-50 people attending. The system was experiencing some dropouts during the party, and at the time I was wondering if this was because there were suddenly 40+ mobile phones nearby. Not a magnetron, but vastly increased EMF. I didn't confirm that this was the source of the problem, but it seems plausible.

Hi Charlie,

Could be. The phones probably had their WiFi transceivers turned on, so they would have been sending broadcast probes for available SSIDs.

Regards,

Tim

Charlie,

If you are willing to test a new approach, I could prepare for you a simple client-server environment that can be characterized as follows:

1. pure rtp (no session management)

2. network broadcast used for data transmission

3. raw audio data transmitted (whatever supported by your alsa devices)

4. data grabbed from alsa loop to be compatible with your player choice

Python and Gstreamer 1.x will be required.

Let me know.

That is a very kind offer! How can I say no to that?

I checked and I have Python 2.7.10 installed, and I believe that I just installed Gstreamer 1.4 on my audio server (not sure how to confirm this without writing some code, however). I used this apt repository (but note that I am running Ubuntu 15.10):

https://launchpad.net/~ddalex/+archive/ubuntu/gstreamer

I think that the only drawback is that there is no transcoding to a fixed sample rate. The DACs I am currently using can only handle up to 48kHz audio. I have been trying to transcode on my server so that the clients don't have to, and to reduce transmission bandwidth (along with using AAC compression). We will have to see if my WiFi system can handle 16/44.1 raw audio streams. If this is not a feature, that is no problem, I will just have to enable transcoding in the clients. It would likely be, in general, more flexible without any transcoding.
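
To put the bandwidth question in numbers, here is a small sketch comparing the payload bitrate of raw 16/44.1 stereo PCM with the 320k AAC stream. It counts audio payload only; RTP, UDP and IP headers add some overhead on top.

```python
def pcm_bitrate_bps(sample_rate_hz, bits_per_sample, channels):
    """Payload bitrate of uncompressed PCM audio in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


if __name__ == "__main__":
    raw = pcm_bitrate_bps(44100, 16, 2)  # CD-quality stereo
    aac = 320_000                        # the AAC bitrate used so far
    print(f"raw PCM: {raw} bit/s, about {raw / aac:.2f}x the AAC stream")
```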

I will check back here for instructions. Thanks!

-Charlie

I wanted to give some background about why I stream audio using this combination of VLC and ffmpeg. When you look at the VLC interface, it would appear that VLC could do all the transcoding and streaming itself. So why am I using ffmpeg? Unfortunately it turns out that VLC has several bugs and flaws related to transcoding the input stream into the desired output stream. While VLC seems to include the "Secret Rabbit Code" (SRC) resampler, in practice it defaults to the "ugly_resampler". This is either a bug, or stems from a conversion to integer format (s16) before sample rate conversion, in which case it seems that the SRC converter cannot be used because it only works for float inputs. The ugly resampler results in very obvious ringing or aliasing of the audio, and should be avoided. Also, VLC only allows transcoding to 48kHz (max) rate, even with codecs like AAC and FLAC that can support higher rates.

The solution to these problems is to use ffmpeg to transcode. I have not experienced any issues with ffmpeg and the resulting audio quality is exactly what is expected from whatever codec I choose to use. On the other hand, ffmpeg cannot stream audio to my LAN - it can only output audio locally.

In order to stream the audio, I again use VLC (cvlc). This simply accepts the output from ffmpeg as input, and then restreams it to my LAN using RTP unicast, with the SDP information sent via RTSP. Streaming this way works very well and I am pretty happy with the result.

The remaining challenges have to do with how to start up ffmpeg (for transcoding) and cvlc so that they don't bonk. For example, currently I must use a certain startup order: ffmpeg MUST be launched first. This is required for two reasons: (a) cvlc experiences problems when it tries to read from the local port and ffmpeg is not streaming to it, and (b) if the (VLC) music player is already playing audio before ffmpeg is started, ffmpeg sometimes complains that it needs to know the audio format and then exits. When ffmpeg is started first, everything works as advertised. I can then start up the music player and cvlc, and everything works seamlessly thereafter.

For now this setup is working for me and seems sufficiently flexible for my needs. I am interested in exploring new ways to transcode and stream audio that don't require any commercial software or hardware. I will post my thoughts and experiences with any alternative methods in this thread.


Main premises of this solution:

1. RTP clients of comparable performance/load

2. no world clock or similar overhead

3. LAN-restricted.

4. one server, numbers of clients limited by the size of the LAN

5. Linux only (could be ported easily, but that's not my concern)

1. Install dependencies

Python and Gstreamer 1.x (1.6 tested) are needed. Details for Debian-like distros can be found at [1]. These packages need to be installed on the server and on the clients. I also recommend installing the screen utility, which makes it easier to manage persistent terminal sessions; I didn't bother to daemonize the scripts or the like. Alsa-utils also comes in handy.

2. Check system variables

2.1 Identify LAN broadcast address to be used. On the server issue the following:

$ ip r s t 255|grep '^broad.*255'|grep -v '127.255.255.255'|cut -d ' ' -f 2

One of the displayed addresses is your broadcast address.
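If you want to cross-check the result, the broadcast address can also be derived from an interface address and prefix length. This is only a sketch using Python's standard ipaddress module; the address 192.168.10.105 and the /24 prefix are example values, not something the scripts require.

```python
import ipaddress


def broadcast_address(cidr):
    """Return the broadcast address of the network containing `cidr`."""
    interface = ipaddress.ip_interface(cidr)
    return str(interface.network.broadcast_address)


# Example values only: substitute your server's address and prefix length.
print(broadcast_address("192.168.10.105/24"))  # 192.168.10.255
```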

2.2 On every client issue the following:

# for i in /proc/sys/net/core/rmem_*; do echo "${i##*/}: $(cat $i)"; done

If both values are at least 32-64KB, you should be OK. For higher sample rates or streams with more than 2 channels these values should be increased. E.g. to set both the default and the maximum receive buffer size to 96KB issue the following:

# for i in /proc/sys/net/core/rmem_*; do echo 98304 > $i; done

To make changes persistent: man sysctl.conf
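To get a feel for these numbers, the sketch below estimates how much audio a receive buffer of a given size can hold. It assumes the stream carries uncompressed PCM and ignores RTP/UDP headers and the kernel's own per-packet accounting overhead, so treat the result as an upper bound. The function names are invented for this example.

```python
def pcm_bytes_per_second(rate_hz, channels, bytes_per_sample):
    """Raw PCM data rate in bytes per second."""
    return rate_hz * channels * bytes_per_sample


def buffer_milliseconds(buffer_bytes, rate_hz, channels, bytes_per_sample):
    """Upper bound on the audio duration (ms) that fits in buffer_bytes."""
    rate = pcm_bytes_per_second(rate_hz, channels, bytes_per_sample)
    return 1000.0 * buffer_bytes / rate


# CD-format stereo (44.1kHz, 16-bit) with a 96KB buffer.
print(round(buffer_milliseconds(98304, 44100, 2, 2), 1))
# 96kHz stereo with 32-bit samples and the same 96KB buffer.
print(buffer_milliseconds(98304, 96000, 2, 4))
```

The same buffer holds far less audio at the higher rate and sample width, which is why the values should grow with the sample rate or the channel count.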

2.3 Identify ALSA loopback capture device on the server. Should be something like "hw:x,1" or "hw:x,1,y"

2.4 For every client identify a DAC as seen by ALSA. Should be something like "hw:x,0".

2.5 You may stop NTP daemons. No need for them running.

3. Grab software [2] and customize:

In file mserver-udp.py set DEFAULT_BROADCAST to the address found in step 2.1 and DEFAULT_ALSASRC to the device found in step 2.3.

In file mclient-udp.py set DEFAULT_ALSASINK to the device found in step 2.4. You may also change the sample rate mandated by the RTP clients with DEFAULT_RATE. More on this limitation later.

Copy mserver-udp.py to the server and mclient-udp.py to clients.

Ensure client ingress firewall policies permit UDP traffic at DEFAULT_PORT.

4. Launch software

A digression first. Launching the server-side script can be tricky because of how the ALSA loopback works and because this software does not do any explicit resampling (my design decision). The ALSA loopback does not resample either. More importantly, the audio stream parameters are defined by the first application that connects to the loopback device; they then remain constant and cannot be changed until all ends of the loopback substream are closed [3]. So either a single loopback substream handles only tracks with one designated sample rate, or the player that connects to the ALSA loopback handles resampling, or ALSA does it implicitly (if you define the ALSA device in your player config using the plughw plugin), or your player simply returns an error.

This also shows that, if you plan to use the ALSA loopback, the explicit RTP channel definition found in mclient-udp.py does not restrict functionality any further. You can overcome this easily with separate infrastructures, e.g. one for a 44.1kHz 2-channel stream and another for a stream with a different sample rate. To accomplish this you need two substreams of a single ALSA loop device (instead of one), two instances of mserver-udp.py (with the same broadcast address but different UDP ports and ALSA sources), and two instances of mclient-udp.py (with different UDP ports and the same ALSA sink). The instances of mclient-udp.py cannot run simultaneously on a given host; some form of management is required, not necessarily RTSP, but that is beyond the scope of this lengthy post, so I will stop here.
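To see which parameters a loopback substream is currently locked to, you can read its hw_params file under /proc/asound. The sketch below parses that file; the helper names are made up for this example, the card, device and substream numbers are assumptions to adjust for your system, and the sample text only illustrates the usual layout of the file.

```python
def parse_hw_params(text):
    """Parse the text of an ALSA hw_params proc file.

    Returns None when the substream is closed, else a dict of the fields.
    """
    text = text.strip()
    if text == "closed":
        return None
    params = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            params[key.strip()] = value.strip()
    return params


def locked_rate(params):
    """Extract the integer sample rate from a parsed hw_params dict."""
    return int(params["rate"].split()[0])


def read_hw_params(card=0, device=0, direction="p", substream=0):
    """Read hw_params for e.g. card 0, device 0, playback ("p") side."""
    path = (f"/proc/asound/card{card}/pcm{device}{direction}"
            f"/sub{substream}/hw_params")
    with open(path) as handle:
        return parse_hw_params(handle.read())


# Illustrative sample of an open substream.
sample = "access: RW_INTERLEAVED\nformat: S16_LE\nchannels: 2\nrate: 44100 (44100/1)\n"
print(locked_rate(parse_hw_params(sample)))
```

A result of None from read_hw_params means nothing holds the substream open, so the next application to connect will decide the parameters.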

4.1 Server side

Ensure that the ALSA loopback substream is configured with the same parameters as the RTP clients.

Launch a player first or issue something like this (assuming hw:1,0 is playback end of the ALSA loopback):

$ gst-launch-1.0 filesrc location=path.to.some.flac ! flacparse ! flacdec ! audioconvert ! alsasink device=hw:1,0

Here path.to.some.flac is a track in standard CD audio format (44.1kHz, 16-bit, 2-channel).

On a new screen terminal session:

$ /path/to/script/mserver-udp.py

Detach the screen session. Once the Python script is running, you may stop the gst-launch-1.0 process or the player.

4.2 Client side

On a new screen terminal session:

$ /path/to/script/mclient-udp.py

Detach the screen session. Then issue the following (this is optional, but it helps if the client is under load):

# chrt -arp 60 $(pgrep -f mclient-udp.py)

[1] https://wiki.ubuntu.com/Novacut/GStreamer1.0#Installing_GStreamer_1.0_packages

[2] https://github.com/fortaa/tmp/archive/master.zip

[3] Matrix:Module-aloop - AlsaProject


@Forta: Wow, that seems... quite complicated! For example, I am currently able to stream using a piped combination of ffmpeg and vlc, like this:

Code:

#!/bin/sh

ffmpeg -fflags nobuffer -f alsa -acodec pcm_s16le -ar 44100 -i hw:0,1 \
    -af aresample=resampler=soxr -acodec aac -strict -2 -ar 48000 -b:a 320k -f mpegts - \
  | vlc -vvv --intf dummy --no-media-library --network-caching 0 --sout-rtp-caching 0 - \
    ':sout=#rtp{dst=192.168.10.105,port=16384,sdp=rtsp://@:5004/stream}'

There are only a few small changes I make in the VLC preferences in addition to the above. Otherwise I just open a player and go. The above script accepts the audio stream from the ALSA loopback and streams the audio as RTP on the LAN, with the SDP for that stream sent via RTSP. The clients just open the RTSP feed and are directed to pick up the RTP stream. If the clients need to, they simply re-open the stream. For instance, I can shut down the clients at night, and in the morning when they start up again they pick up the stream (which has been running continuously the whole time) and the audio begins. This is automated with a couple of scripts on the clients to make it easy, but you could do it manually as well.

After reading through your post about Gstreamer I noticed that you mentioned this about the ALSA loopback:

"Launching server side script might be tricky, because of how ALSA loopback works and because this software won't do any resampling explicitly (my design decision). ALSA loopback won't resample either, but what's more important audio stream parameters are defined by the first application that connects to loopback device, remain constant and cannot be changed unless all ends of the loopback substream are closed [3]. E.g. either single loopback substream will handle only tracks with one designated sample rate or player that connects to ALSA loopback will handle resampling, or ALSA will do that implicitly (if you define ALSA device in your player config using plughw plugin), or simply your player returns an error."

I hadn't heard about the rule that the first application to connect to the loopback sets the rate, format, etc. I did some testing on this today and I can see some effects, but not in the "upstream" direction. When I connect the output of the loopback to ffmpeg I seem to be able to set the rate and it doesn't complain, but when I subsequently connect the player to the loopback input it doesn't pick up this rate change.

One of the most vexing problems I am having is that the loopback always seems to be set to a 44.1k rate and S16_LE data. I would prefer 48k and F32_LE, but after searching and searching for a way to set the rate for the loopback I haven't found anything. It seems that I should be able to put some ALSA configuration lines into my .asoundrc file (it is now empty) to do this, but I haven't been able to make those changes.

The result, I believe, is that the player is resampling its output (when different) to 44.1k to match the loopback, and then ffmpeg is resampling this to 48kHz before passing it on to vlc to stream.

I am very happy with the audio quality I get with the AAC codec. The only issue is an occasional wandering of the synchronization between the L and R speakers (the two clients) of a few msec, which only lasts 10 or 20 seconds before it's back in sync. It's not as perfect as a cable but it's hardly anything to complain about. I will try to gather up all of the scripts that I use and other info and post them here.

In the meantime, I am still considering using Gstreamer, but am still deciding whether it will bring me much more than I have now in terms of performance.
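For anyone who would rather drive the ffmpeg-to-VLC pipe from a script than from a shell one-liner, here is a minimal Python sketch that assembles the same two command lines. The options are copied from my shell script; the function names and parameters (build_pipeline, run_pipeline, capture_device, dest_ip and so on) are invented for this example, and I have not run my setup this way.

```python
import subprocess


def build_pipeline(capture_device="hw:0,1", dest_ip="192.168.10.105",
                   rtp_port=16384, rtsp_port=5004):
    """Return (ffmpeg_argv, vlc_argv) mirroring the shell script's options."""
    ffmpeg_argv = [
        "ffmpeg", "-fflags", "nobuffer",
        "-f", "alsa", "-acodec", "pcm_s16le", "-ar", "44100",
        "-i", capture_device,
        "-af", "aresample=resampler=soxr",
        "-acodec", "aac", "-strict", "-2", "-ar", "48000", "-b:a", "320k",
        "-f", "mpegts", "-",
    ]
    # Doubled braces produce the literal { } that VLC's sout syntax needs.
    sout = (f":sout=#rtp{{dst={dest_ip},port={rtp_port},"
            f"sdp=rtsp://@:{rtsp_port}/stream}}")
    vlc_argv = [
        "vlc", "-vvv", "--intf", "dummy", "--no-media-library",
        "--network-caching", "0", "--sout-rtp-caching", "0", "-", sout,
    ]
    return ffmpeg_argv, vlc_argv


def run_pipeline(ffmpeg_argv, vlc_argv):
    """Start ffmpeg first and connect its stdout to vlc's stdin."""
    ffmpeg = subprocess.Popen(ffmpeg_argv, stdout=subprocess.PIPE)
    vlc = subprocess.Popen(vlc_argv, stdin=ffmpeg.stdout)
    ffmpeg.stdout.close()  # lets ffmpeg see a broken pipe if vlc exits
    return ffmpeg, vlc
```

Calling run_pipeline(*build_pipeline()) would start both processes with the default values taken from the script.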

Finally, a small breakthrough with ALSA! I am now able to get my VLC player to resample the audio stream to a 48kHz rate using the SRC converter at "best quality" settings.

To pull this off, I followed an example for writing a plugin that creates a "new" alsa device that acts as a wrapper for an existing device, within which you can set one or more parameters of the existing device. Here is the alsa code from my .asoundrc file:

Code:

pcm_slave.sl3 {
    pcm "hw:1,0"
    format FLOAT_LE
    channels 2
    rate 48000
}

pcm.loop48 {
    type plug
    slave sl3
}

The above code creates a new alsa device called "loop48". It has as its slave alsa hw:0,0 (card 0, device 0), which is how my loopback device is enumerated using aplay -l. Within the definition of the slave, the format (32 bit floating point) and rate (48kHz) parameters for hw:0,0 are fixed, and whatever is providing input to loop48 must match these values. Other parameters can be fixed using this method as well (like buffering related parameters). I plan to explore this more in the near future.

Interestingly enough, loop48 doesn't show up in the list of available alsa audio playback devices in the GUI for VLC, but I can force VLC to use it by invoking it like this:

Code:

vlc -vvv --aout alsa --alsa-audio-device=loop48

In the stream of info that is produced (because I used the vvv option) I can see that vlc inspects all the available alsa devices and loop48 is listed.

Now when I fire everything up and take a look at the output from vlc and ffmpeg I can see that all is well! One of the advantages of this setup is that the rate and format are known a priori for both ends of the loopback "pipe". Therefore the startup order for what is putting audio data into the loopback (VLC player) and what is taking it out (ffmpeg) should no longer matter.
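If you want several such wrapper devices (say one per sample rate), the two stanzas can be generated rather than typed by hand. The sketch below only renders text in the same layout as my loop48 definition; the function name and the "<name>_slave" naming scheme are invented for this example, and the output still has to be pasted into .asoundrc by hand.

```python
def render_plug_wrapper(name, slave_pcm, sample_format, channels, rate):
    """Render a pcm_slave stanza plus a plug-type PCM that uses it."""
    slave_name = f"{name}_slave"  # naming scheme made up for this sketch
    lines = [
        f"pcm_slave.{slave_name} {{",
        f'    pcm "{slave_pcm}"',
        f"    format {sample_format}",
        f"    channels {channels}",
        f"    rate {rate}",
        "}",
        "",
        f"pcm.{name} {{",
        "    type plug",
        f"    slave {slave_name}",
        "}",
    ]
    return "\n".join(lines) + "\n"


# Same parameter values as the loop48 definition above.
print(render_plug_wrapper("loop48", "hw:1,0", "FLOAT_LE", 2, 48000))
```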
