Latency is directly dependent on tap count and, in the case of partitioned convolution, on partition count. Apparent latency with minimum-phase filters is good, but once time reversal is invoked for phase correction this advantage disappears.
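To put rough numbers on the partition-count point, here is a minimal Python sketch. It assumes a uniformly partitioned convolver in which one whole partition of input (taps / partitions samples) must be buffered before the first output block can be computed. The function name and the 65536-tap example are illustrative only, and the filter's own delay (where its peak sits) comes on top.

```python
def partitioned_latency_ms(taps: int, partitions: int, fs: float) -> float:
    """Input-buffering latency of a uniformly partitioned FFT convolver.

    Assumption: each partition holds taps / partitions samples, and one full
    partition of input must be collected before its FFT can run. The delay
    built into the impulse itself (e.g. a linear-phase peak) is not included.
    """
    if taps % partitions:
        raise ValueError("taps must divide evenly into partitions")
    block = taps // partitions            # samples per partition
    return 1000.0 * block / fs            # milliseconds


# Same 65536-tap filter at 48 kHz, split into more and more partitions.
for p in (1, 4, 16, 64):
    print(f"{p:3d} partitions: {partitioned_latency_ms(65536, p, 48000.0):8.2f} ms")
```

More partitions means shorter blocks and less buffering, at the cost of more bookkeeping per sample.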

If direct convolution were faster, PC setups would use it.
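A rough multiply count shows why PC software favours FFT convolution for long filters. This sketch uses textbook radix-2 assumptions: unpartitioned overlap-add, FFT size twice the filter length, 4 real multiplies per complex multiply, the filter spectrum precomputed once, and no real-input optimizations. Treat the numbers as order-of-magnitude only. A DSP with single-cycle MAC shifts the balance.

```python
import math


def direct_mults_per_sample(taps: int) -> float:
    """Direct-form FIR: one multiply-accumulate per tap per output sample."""
    return float(taps)


def fft_mults_per_sample(taps: int) -> float:
    """Rough real-multiply count per output sample for FFT convolution.

    Assumptions: unpartitioned overlap-add with block length B = taps and
    FFT size M = 2 * taps (a power of two); a radix-2 FFT costs (M / 2) * log2(M)
    butterflies with one complex multiply (4 real multiplies) each; one forward
    FFT of the input block and one inverse FFT per block (the filter spectrum is
    precomputed once), plus M complex multiplies for the spectral product.
    Real-input FFT tricks (roughly half the cost) are ignored.
    """
    m = 2 * taps
    if m & (m - 1):
        raise ValueError("2 * taps must be a power of two")
    butterflies = (m // 2) * int(math.log2(m))
    per_block = 4 * (2 * butterflies + m)   # two FFTs + spectral product
    return per_block / taps                 # B = taps new samples per block


for n in (64, 1024, 8192):
    print(f"{n:5d} taps: direct {direct_mults_per_sample(n):7.0f}, "
          f"FFT ~{fft_mults_per_sample(n):5.0f} multiplies per sample")
```

Under these assumptions the two approaches break even around 64 taps. At 8192 taps the FFT route needs on the order of 120 multiplies per sample against 8192 for direct convolution.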

VSTconvolver uses the FFTW 3.1.2 library.

I trust this way to convolve (it is also used in medical, military, imaging...).

From FFTW.org:

...

The FFTW package was developed at MIT by Matteo Frigo and Steven G. Johnson.

Our benchmarks, performed on a variety of platforms, show that FFTW's performance is typically superior to that of other publicly available FFT software, and is even competitive with vendor-tuned codes. In contrast to vendor-tuned codes, however, FFTW's performance is portable: the same program will perform well on most architectures without modification. Hence the name, "FFTW," which stands for the somewhat whimsical title of "Fastest Fourier Transform in the West."

...

Awards

FFTW received the 1999 J. H. Wilkinson Prize for Numerical Software, which is awarded every four years to the software that "best addresses all phases of the preparation of high quality numerical software." Wilkinson was a seminal figure in modern numerical analysis as well as a key proponent of the notion of reusable, common libraries for scientific computing, and we are especially honored to receive this award in his memory.

I would only find latency an issue if I were using the system with video, or in a recording-studio situation. For strictly purist hi-fi audio I'm happy to add up to a second of latency if that's what it takes.

Re destroying the speakers if the system goes wrong: I have my amps set to a level (using pots) that is a bit too high for a typical loud piece of music when the software volume is at max, and no more. The tweeter has a series cap to protect it. I have a 'boost' button in my software for quiet stuff (classical often doesn't reach full scale, I find) and a latching clipping indicator, so I'll know next time not to use it if the piece gets too loud. I did, at one point, have a software meltdown where a coding error caused the FIR filters to be calculated way too loud when a certain combination of settings was selected. The resulting cacophony was unbelievable, but no damage was done.

Thierry, I am talking about real elapsed time (delay), not CPU time.

In direct convolution with a hardware solution like the openDRC and a DAC, you can expect only a few ms of delay in addition to the "normal" delay implied by the impulse (depending on the position of the peak within the impulse).

A PC would already add some buffering delay for the soundcard, and some more if FFT is used...

I missed this message.

OK, I misunderstood you.

Is it really that important if no feedback is used? Only the video needs to be delayed to sync with the sound.

About the miniSHARC/miniDSP openDRC: the message you posted says that FFT convolution will be implemented.

Once the coding is done, they will probably switch all direct convolution to FFT.

Not sure they will (or can, because of memory constraints) implement FFT convolution.

If they do, I hope they will leave the final choice to the user, in the plugin configuration.

With downsampling I don't see the need for such "complication" in an active filtering situation.

Time will tell, but I think hardware convolution will soon become commonplace, and optimization techniques like FFT convolution or downsampling will probably rapidly become obsolete, exactly like paletted color modes in PC graphics cards...

Convolution will soon become the de facto standard for filtering and EQ, with the cursor set anywhere between fully linear phase and minimum phase to accommodate any situation (i.e. allowed delay).

I2S in all communication with either sources or targets.

What sort of source is that? As I understood it, I2S is for linking together ICs on the one PCB, or in the same box at least.

Time will tell, but I think hardware convolution will soon become commonplace, and optimization techniques like FFT convolution or downsampling will probably rapidly become obsolete, exactly like paletted color modes in PC graphics cards...

I'm not so sure. I have a feeling that the future will be very energy-conscious compared to now, whether that's literally because of a shortage of fuel, or because everything will be judged on how much it drains the batteries of portable devices. Direct convolution is hideously expensive in terms of energy and yet for most purposes is identical in its result to the FFT-ed version, whereas paletted colour modes were not identical to true RGB.
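The "identical in its result" point is easy to check. This pure-Python sketch (a textbook recursive radix-2 FFT, not FFTW) convolves the same random signal both ways. With zero-padding to avoid circular wrap-around, the two outputs agree to floating-point rounding.

```python
import cmath
import random


def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def ifft(X):
    """Inverse FFT via conjugation: ifft(X) = conj(fft(conj(X))) / n."""
    n = len(X)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in X])]


def direct_convolve(x, h):
    """Textbook direct convolution: len(x) * len(h) multiply-adds."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def fft_convolve(x, h):
    """Linear convolution through the frequency domain."""
    n_out = len(x) + len(h) - 1
    m = 1
    while m < n_out:                 # pad so the circular result equals the linear one
        m *= 2
    X = fft([complex(v) for v in x] + [0j] * (m - len(x)))
    H = fft([complex(v) for v in h] + [0j] * (m - len(h)))
    y = ifft([a * b for a, b in zip(X, H)])
    return [v.real for v in y[:n_out]]


random.seed(0)
x = [random.uniform(-1, 1) for _ in range(300)]
h = [random.uniform(-1, 1) for _ in range(64)]
err = max(abs(a - b) for a, b in zip(direct_convolve(x, h), fft_convolve(x, h)))
print(f"max difference: {err:.2e}")
```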

The entire openDRC (miniSHARC + IO card + infrared-compatible volume control and selector) is powered by a 5 V 600 mA power supply, so it cannot consume more than 3 W!

And future evolutions of such cards are likely to consume even less power for even more capabilities.

I don't think you could build a PC with that kind of power consumption.

In fact, it could already be possible, without problem, to do a complete 8-way linear-phase crossover with only one miniSHARC and direct convolution, if they implemented tap distribution and downsampling.

(Look at what Four Audio manage to do with the limited power of the HD2)

So why bother with a PC? Having a single media center is not what I would like.

I want to be able to connect any device to my speakers, even a TV (which means 0 delay *should* be an option, with only minimum-phase correction in this case of course), and leave the whole thing on all day long if I want, or turn it off and on whenever I want without having to wait XX seconds to get it up and running.

0 delay is impossible with FFT convolution, even with a minimal phase impulse, so if it can be avoided let it be!
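For the "0 delay" case, the point is that direct convolution can run sample-in, sample-out. Below is a minimal sketch with a hypothetical `StreamingFIR` class (illustration only, not openDRC or miniSHARC code). Each input sample produces an output immediately, so the only delay is what the impulse itself contains. Real hardware still adds converter and I/O latency on top.

```python
from collections import deque


class StreamingFIR:
    """Hypothetical direct-form FIR emitting one output per input sample.

    No input block is collected first, so no buffering latency is added
    beyond what the impulse response itself contains.
    """

    def __init__(self, taps):
        self.taps = list(taps)
        # history[k] holds x[n - k]; the oldest sample drops off the right.
        self.history = deque([0.0] * len(self.taps), maxlen=len(self.taps))

    def process(self, sample):
        self.history.appendleft(sample)
        return sum(h * x for h, x in zip(self.taps, self.history))


fir = StreamingFIR([0.6, 0.3, 0.1])   # toy minimum-phase impulse
out = [fir.process(s) for s in [1.0, 0.0, 0.0, 0.0]]
print(out)
```

The toy impulse `[0.6, 0.3, 0.1]` is minimum phase (its zeros lie inside the unit circle). A unit impulse at the input comes straight back as `[0.6, 0.3, 0.1, 0.0]`, starting on the very first sample.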

And it can, today already, with energy-conscious, compact and affordable hardware. What's not to like?

You should add a short FIR to do a brickwall LP at Nyquist nonetheless, to avoid any problem.

Which problems, specifically? I'm familiar with the motivation for apodizing filters, but my experience is that subjective audio quality tends to be dominated by the impulse ringing introduced by the filter. Since the Fourier relation mandates that an abrupt transition in the frequency domain is a slow one in the time domain, brickwall is often a worst-case choice in this regard; in my experience a more even tradeoff of frequency and time domain behavior sounds better. Upsampling from 44.1 kHz mitigates the issue but requires caution, as most sample rate conversion defaults to brickwall antialiasing (miniDSP is unlikely to document their implementation on the miniSHARC, so someone will have to get one and measure it, but Analog's ASRC implementations are all brickwall, so I'd expect miniDSP's to be brickwall too), as do the majority of DACs. So adding a third brickwall in one's own filtering is an interesting choice.

How are you going to link your audio source to the DSP card? Can you avoid opening a whole new can of worms, worrying about real or imaginary jitter, resampling etc.?

Probably the simplest option is asynchronous audio, where whatever device has the master clock tells the data source how fast to send data. This is used in the ASIO and USB Audio Device Class 2.0 specifications, for example, and avoids the jitter and lock concerns of PLLs or the additive impulse ringing of ASRC. If you do a search you'll find oceans of discussion about ASRC and PLL methods here on DIYA and elsewhere. But I guess my main remark would be that DIYers are fond of elaborate solutions to possible problems and not necessarily so focused on determining what is and is not audible in ABX testing (and finding ways to address what is audible). My personal ABX results have favored PLLs over ASRC in most cases (though if one does the ASRC right it can be almost as good). Also, TI makes several jitter cleaners that one can apply to PLL-recovered clocks if one's really worried about jitter; I don't know of any ABX results on this, but the jitter cleaners outperform the phase noise of what are widely considered reference-quality crystals by an order of magnitude or more. ESS's approach of combining the ASRC and DAC antialiasing is an elegant one, particularly as their slow rolloff is the slowest linear-phase rolloff in the industry that I know of, and it can be attractive if one wants simple clock management (hence my feature requests earlier in this thread to enable rePhase to synthesize antialiasing filters for ESS DACs). However, choosing a PLL with good jitter rejection and cleanly routing its recovered clock to a DAC is really not that hard.

The happy medium: using a basic laptop with a USB sound card (7.1 + S/PDIF in + line in).

You have:

-the power

-low consumption

-remote control

-multiple sources

-a keyboard + screen to type your message on DIY Audio while listening

-(and a lot of flexibility to load/create impulse responses and measure)

Total consumption with 3 stereo IRs (8000 + 4000 + 1000 taps): about 15 W (estimated).

What a basic laptop is able to convolve (91% CPU load on 1 core): 18 stereo convolutions of 8192 taps (FFT, of course).

I understand the fear of PC-based crossovers (I've had the problem), but the Behringer? I never had one output what it was not supposed to, ever. You can set it wrong, but you can do that with a DSP board.

What's your experience?

I had a Behringer CX 3400 Pro. It broke down the first night I used it (MTBF 6 hours). Possibly a heatsink broke loose because of the vibrations, and square or triangular waves were output. It was in a rack next to my 4530, at party sound levels.

What sort of source is that? As I understood it, I2S is for linking together ICs on the one PCB, or in the same box at least.

It could be a USB interface like miniStreamer or an ADC.

The important thing here is that I2S is a proper protocol with a clock, not a Mickey Mouse protocol like spdif where you need to extract the clock from the signal.

I will put all the cards in the same box.

Which problems, specifically? I'm familiar with the motivation for apodizing filters, but my experience is that subjective audio quality tends to be dominated by the impulse ringing introduced by the filter. Since the Fourier relation mandates that an abrupt transition in the frequency domain is a slow one in the time domain, brickwall is often a worst-case choice in this regard; in my experience a more even tradeoff of frequency and time domain behavior sounds better. Upsampling from 44.1 kHz mitigates the issue but requires caution, as most sample rate conversion defaults to brickwall antialiasing (miniDSP is unlikely to document their implementation on the miniSHARC, so someone will have to get one and measure it, but Analog's ASRC implementations are all brickwall, so I'd expect miniDSP's to be brickwall too), as do the majority of DACs. So adding a third brickwall in one's own filtering is an interesting choice.

You would agree that to do the downsampling, for example from 48 kHz to 16 kHz, you first have to remove any frequency above the Nyquist frequency of the target sampling rate.

So you first have to apply an LP filter that rejects anything above 8 kHz well enough (let's say -100 dB), and then keep 1 sample out of 3.
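The filter-then-decimate step can be sketched in a few lines of Python. The design is an assumption chosen for illustration: a 128-tap Blackman-windowed sinc with a 6 kHz cutoff. It is not one of the rePhase filters below and reaches only roughly 74 dB of stopband rejection, not -100 dB. A 1 kHz tone passes, while a 10 kHz tone, which would otherwise alias to 6 kHz at the 16 kHz rate, is strongly attenuated.

```python
import math


def blackman_sinc_lowpass(num_taps, cutoff_hz, fs):
    """Linear-phase low-pass FIR: ideal sinc truncated by a Blackman window."""
    fc = cutoff_hz / fs                                  # cycles per sample
    mid = (num_taps - 1) / 2
    h = []
    for n in range(num_taps):
        t = n - mid
        ideal = 2 * fc if t == 0 else math.sin(2 * math.pi * fc * t) / (math.pi * t)
        w = (0.42 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
             + 0.08 * math.cos(4 * math.pi * n / (num_taps - 1)))
        h.append(ideal * w)
    dc = sum(h)
    return [v / dc for v in h]                           # unity gain at DC


def fir(x, h):
    """Direct convolution, output truncated to len(x)."""
    return [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
            for n in range(len(x))]


def decimate(x, h, factor):
    """Low-pass at the input rate, then keep 1 sample out of `factor`."""
    return fir(x, h)[::factor]


def rms(v):
    return math.sqrt(sum(s * s for s in v) / len(v))


def tone(freq, fs, n):
    return [math.sin(2 * math.pi * freq * i / fs) for i in range(n)]


FS = 48000
h = blackman_sinc_lowpass(128, 6000, FS)                   # assumed design
# Skip the start-up transient; 1536 output samples are whole periods of both tones.
low = decimate(tone(1000, FS, 4800), h, 3)[48:48 + 1536]   # in band at 16 kHz
high = decimate(tone(10000, FS, 4800), h, 3)[48:48 + 1536]  # would alias to 6 kHz
print(f"1 kHz RMS: {rms(low):.3f}, 10 kHz RMS: {rms(high):.2e}")
```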

I said "brickwall", but of course with the shortest possible impulse it ends up much more like a gentle filter.

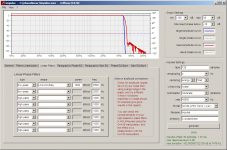

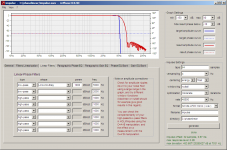

Here are two examples of such filters, generated with rePhase for a 48 kHz initial sampling frequency, targeting -100 dB rejection at 8 kHz.

They were generated by trial and error.

The first example is a 128-tap LP filter, and you get a usable range up to 6 kHz.

Good enough if you are planning to use a 48 dB/oct LP at 2000 Hz, for example, as the response only ends up "brickwall" (and possibly ringing), or at least steeper than expected, about -80 dB down the target slope (albeit this could also be taken into account with EQ...).

Now with a 64-tap LP filter, the slope is gentler and you end up with a usable band up to 5 kHz.

With the same -80 dB target you can do a 48 dB/oct LP at ~1500 Hz.
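As a sanity check on the -80 dB figure: with the straight-line approximation, a 48 dB/oct slope reaches -80 dB at the corner frequency times 2^(80/48), about 3.17. That gives roughly 6.35 kHz for a 2000 Hz LP and 4.76 kHz for a 1500 Hz LP, close to the 6 kHz and 5 kHz usable limits above. This uses asymptotic slopes only; real LR or Butterworth curves differ near the corner.

```python
def slope_reaches_db(corner_hz, slope_db_per_oct, level_db):
    """Frequency where an asymptotic slope is level_db below the corner.

    Straight-line approximation: attenuation grows by slope_db_per_oct for
    every doubling of frequency above the corner.
    """
    return corner_hz * 2 ** (level_db / slope_db_per_oct)


for corner in (2000, 1500):
    f = slope_reaches_db(corner, 48, 80)
    print(f"48 dB/oct LP at {corner} Hz is -80 dB at about {f:.0f} Hz")
```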

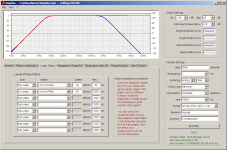

Now with the first example (128 taps), let's calculate the available power that would get us. Let's pretend we have 1024 taps available at 48 kHz, and just count naively for the sake of an example.

After that first 128-tap convolution we might have 896 taps "left".

After the filter you keep 1 sample out of 3 and do the convolution on that.

So for each remaining sample you have 3 times the processing time: 896 × 3 = 2688 taps.

And then we are applying the convolution to a 16 kHz signal, so we have basically the same filtering capabilities as if we had 3 times the taps at 48 kHz (8064 taps, that is!).
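The naive tap budget above, as a tiny function. It just restates the post's arithmetic (1024 taps available at 48 kHz, a 128-tap prefilter, decimation by 3) and ignores any real DSP overhead. The function name is hypothetical.

```python
def decimated_tap_budget(total_taps, prefilter_taps, factor):
    """Naive tap budget when the main filter runs after decimation by `factor`.

    The prefilter uses part of the full-rate budget; the remainder is spent
    at 1/factor of the rate, so each low-rate sample gets `factor` times the
    processing time. Each low-rate tap spans `factor` full-rate samples.
    DSP overheads are ignored.
    """
    left = total_taps - prefilter_taps               # taps left at the full rate
    low_rate_taps = left * factor                    # 3x the time per kept sample
    full_rate_equivalent = low_rate_taps * factor    # same impulse length in time
    return left, low_rate_taps, full_rate_equivalent


print(decimated_tap_budget(1024, 128, 3))
```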

That means that we can, for example, do a 48 dB/oct HP filter at 80 Hz without a stretch.

The final implied delay with direct convolution will be 1.33 ms for the initial 128-tap filter + 84 ms for the 2688-tap linear-phase filter at 16 kHz.

So the overhead is only 1.33 ms (and half that with the 64-tap scenario), nothing compared to FFT-based convolution...
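The delay figures follow from the fact that a linear-phase (symmetric) FIR delays everything by its centre, (taps - 1) / 2 samples, which the post rounds to taps / 2. A minimal check, which comes to about 1.32 ms + 83.97 ms, and about 0.66 ms for the 64-tap prefilter:

```python
def linear_phase_delay_ms(taps, fs):
    """Delay of a symmetric FIR: its centre, (taps - 1) / 2 samples, in ms."""
    return 1000.0 * (taps - 1) / 2 / fs


pre = linear_phase_delay_ms(128, 48000)        # anti-alias prefilter at 48 kHz
main = linear_phase_delay_ms(2688, 16000)      # main filter after decimation
short_pre = linear_phase_delay_ms(64, 48000)   # the 64-tap alternative
print(f"{pre:.2f} ms + {main:.2f} ms = {pre + main:.2f} ms "
      f"(64-tap prefilter: {short_pre:.2f} ms)")
```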

If you add the possibility of freely assigning the taps across channels, I think any real-world 8-way crossover could be generated with a single miniSHARC and direct convolution...

You would agree that to do the downsampling, for example from 48 kHz to 16 kHz, you first have to remove any frequency above the Nyquist frequency of the target sampling rate.

Thanks for clarifying. It's not actually necessary to antialias before downsampling. In particular, polyphase filters filter after decimation and are commonly used in multirate filtering like the decimated mid/woofer/subwoofer/whatever structure we're discussing here. I've not coded polyphase myself, but in the examples I'm aware of it saves on multiplies but not adds compared to a conventional FIR. Useful for minimizing ASIC die area or fitting more filter into an FPGA but, I suspect, probably not so useful for getting more out of a processor whose instruction set includes single-cycle MAC. Could be worth a look, though.
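To make the polyphase idea concrete, here is a sketch of an M-branch polyphase decimator next to a plain filter-then-keep-every-Mth-sample reference. Each branch uses every Mth tap and runs at the low rate, yet the output matches the reference sample for sample (to rounding). The same filter is applied, only reorganised so the arithmetic happens at the low rate. Function names are illustrative, and this says nothing about how miniDSP or any particular library implements it.

```python
import random


def filter_then_decimate(x, h, m):
    """Reference: FIR at the full rate, then keep every m-th output."""
    y = [sum(h[k] * x[n - k] for k in range(len(h)) if n - k >= 0)
         for n in range(len(x))]
    return y[::m]


def polyphase_decimate(x, h, m):
    """Same output computed by m sub-filters running at the low rate.

    Branch p uses taps h[p], h[p + m], ... on the low-rate stream
    x_p[j] = x[j*m - p], so no full-rate output is ever computed.
    """
    n_out = (len(x) + m - 1) // m
    y = [0.0] * n_out
    for p in range(m):
        hp = h[p::m]                                  # p-th polyphase component
        xp = [x[j * m - p] if j * m - p >= 0 else 0.0 for j in range(n_out)]
        for j in range(n_out):
            y[j] += sum(hp[i] * xp[j - i] for i in range(len(hp)) if j - i >= 0)
    return y


random.seed(1)
x = [random.uniform(-1, 1) for _ in range(200)]
h = [random.uniform(-1, 1) for _ in range(31)]
ref = filter_then_decimate(x, h, 3)
poly = polyphase_decimate(x, h, 3)
print(len(ref), max(abs(a - b) for a, b in zip(ref, poly)))
```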

Another multirate implementation that has long looked interesting to me is an IIR crossover followed by decimation. This is mainly an LR6 or LR8 kind of thing (or B7 or higher order) to get good image rejection, but in a typical three-way crossed around 200 Hz and 2 kHz it allows downsampling by 2 on the mid and by 20 or so on the sub. Like FIR, this could benefit from polyphase IIR to move the filtering after decimation, but IIR is pretty cheap already.
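The rejection available at the new Nyquist frequency can be estimated from the Linkwitz-Riley magnitude. An LR filter of order 2N is a squared order-N Butterworth, so |H(f)| = 1 / (1 + (f/fc)^(2N)). Assuming a 48 kHz input rate, decimating the 2 kHz mid by 2 and the 200 Hz sub by 20 both put the new Nyquist at 6 × fc. That gives about -93 dB for LR6 and about -124.5 dB for LR8. Since the LR low-pass is monotonic, this is the worst case for the band that folds back.

```python
import math


def lr_lowpass_db(f, fc, order):
    """Magnitude in dB of a Linkwitz-Riley low-pass of even `order` (8 = LR8).

    LR of order 2N is a squared order-N Butterworth: |H| = 1 / (1 + (f/fc)^(2N)).
    """
    return -20 * math.log10(1 + (f / fc) ** order)


def rejection_at_new_nyquist(fs, fc, factor, order):
    """Attenuation at fs / (2 * factor), the edge of the band that folds back."""
    return lr_lowpass_db(fs / (2 * factor), fc, order)


FS = 48000  # assumed input sampling rate
for name, fc, factor in (("mid", 2000, 2), ("sub", 200, 20)):
    for order in (6, 8):
        db = rejection_at_new_nyquist(FS, fc, factor, order)
        print(f"{name}: LR{order} at {fc} Hz, decimate by {factor}: {db:.1f} dB")
```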

Unfortunately multirate doesn't play nicely with multichannel DACs, and most audio DACs that are interesting for hi-fi use aren't specified for sampling rates below 32 kHz. It's probably fine, but I would characterize the DACs one intends to use at low sampling rates before investing heavily in multirate. (There are other, more minor, issues too; clock generation becomes more involved, and so can cable routing.)

It could be a USB interface like miniStreamer or an ADC.

The important thing here is that I2S is a proper protocol with a clock, not a Mickey Mouse protocol like spdif where you need to extract the clock from the signal.

I will put all the cards in the same box.

Am I correct in thinking that Ministreamer cannot do asynchronous, however? (there may be products that can do it..?)

Forum | miniDSP

And is there a significant difference between I2S and SPDIF anyway, except for the clock being on a separate wire from the data? Once you introduce a less-than-perfect interconnect you have the same jitter problem regardless of which you use. Short PCB traces between chips introduce one level of jitter, and cables and connectors presumably are a little worse. The problem may not be significant, but it's another imaginary worry.

Can't you get your DSP board to do the asynchronous transfer from the source itself via USB or some such?

(but it's these sorts of headaches that I had in mind when being smug about the PC earlier)

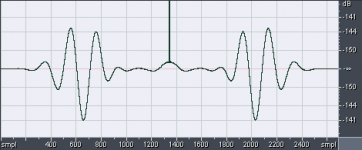

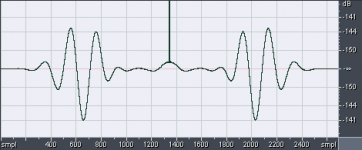

I use rePhase to create linear-phase Linkwitz-Riley 48 dB/oct 80 Hz high/low-pass filters for a crossover, with a 16 kHz sample rate and 2688 taps. I import these into Cool Edit for inspection. Summation of the filters produces ripple in the tails:

This is completely avoidable if high pass filters are all derived from low pass counterpart via single sample inversion (subtractive filter).
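A minimal sketch of the subtractive approach: the high-pass is a unit impulse at the low-pass centre tap minus the low-pass, so LP + HP is exactly a pure delay and the summed tails cannot ripple. This assumes an odd tap count so the centre falls on a sample. With an even length such as 2688, the centre lies between two samples and a single-sample inversion does not give an exact complement. The 5-tap low-pass below is a toy example.

```python
def complementary_highpass(lowpass):
    """High-pass as a unit impulse at the centre tap minus the low-pass.

    Assumes a symmetric (linear-phase) low-pass with an odd tap count, so the
    centre, and therefore the delay of both filters, falls exactly on a sample.
    """
    n = len(lowpass)
    if n % 2 == 0:
        raise ValueError("use an odd tap count so the centre is a whole sample")
    centre = n // 2
    hp = [-v for v in lowpass]
    hp[centre] += 1.0                     # the single-sample "inversion"
    return hp


lp = [0.1, 0.2, 0.4, 0.2, 0.1]            # toy symmetric low-pass, DC gain 1
hp = complementary_highpass(lp)
print(hp, [a + b for a, b in zip(lp, hp)])  # LP + HP: a pure 2-sample delay
```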

Am I correct in thinking that Ministreamer cannot do asynchronous, however? (there may be products that can do it..?)

You are correct. Thanks for finding that info.

WaveIO Asynchronous USB-to-I2S interface is a better choice.

Of course there is a risk in interconnecting equipment that doesn't use differential signalling. Grounding will be a major headache. The picture of the Wave device says "isolated I2S outputs", very clever marketing. I will be looking for DACs marked "isolated I2S inputs".

I like your persistence CopperTop! I could do an Arch Linux based filter using BruteFIR for example. Using an ASUS Xonar D2X for input/output. It will be fun doing it. The Intel® Xeon® Processor E3-1220LV2 has only 17W TDP, it can be passively cooled. The E3-1265LV2 is 45W. It will need a slow fan.

Their USBStreamer is async and multi-channel.

http://www.minidsp.com/images/documents/Product%20Brief%20-%20USBstreamer.pdf

I2S format for input/outputs: 24 bits I2S master

Supported sample rate: 44.1/48/88.2/96/176.4/ 192kHz

You cannot run it as slave though.
