By the way, a week before the fire I updated my W10 Pro 1511 build to the latest one, the Creators Update (1703, I think). The sound was much worse! I reinstalled the 1511 copy.

W10 Pro 1511 has better sound than Linux Mint 18.2 Sonya, and LM has better sound than W10 Pro 1703 (and yes, 1703 was optimized for multimedia playback just like 1511).

All with my beloved, cheap, tweaked ODAC (USB DAC).

So you do not have a valid ABX protocol.

Mooly, listening on speakers, seems to have got a positive.

No, 6/8 is not enough, as I have shown when I got the same result just by clicking the button, without listening.

Test your ears in my new ABX test

Especially if there were about 5 other unsuccessful trials. The probability of guessing must be much lower, like 1%, not 15%.
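For anyone wanting to check these numbers, here is a minimal Python sketch. It assumes every trial is an independent 50/50 guess and that the quoted "15%" is the chance of scoring k or more out of n by guessing alone. The function names are made up for illustration.

```python
from math import comb

def p_guess(k: int, n: int) -> float:
    """One-sided binomial tail: chance of k or more correct out of n by pure guessing (p = 0.5)."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n

def p_any_session(k: int, n: int, sessions: int) -> float:
    """Chance that at least one of `sessions` independent guessing runs reaches k/n."""
    return 1 - (1 - p_guess(k, n)) ** sessions

print(f"6/8 once:            {p_guess(6, 8):.1%}")
print(f"7/8 once:            {p_guess(7, 8):.1%}")
print(f"6/8 in 1 of 6 tries: {p_any_session(6, 8, 6):.1%}")
```

On these assumptions 6/8 comes out at 37/256 (about 14.5%) and 7/8 at 9/256 (about 3.5%). If the same person runs six 8-trial sessions (one plus about five unsuccessful ones), the chance that at least one of them reaches 6/8 by pure guessing is about 61%.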

foobar2000: Components Repository - ABX Comparator

I have installed the ABX component but I cannot see the function in the DSP manager!

W10 Pro 1511 and foobar2000 1.3.6

Yes, well, you remember my test re-recording through fruit, veggies and mud. I find it truly remarkable that a ripped WAV file and a CD-DAC-power opamp chain driving a real load seem to give identical results subjectively.

Only the noise floor gave it away, and even then not to many people.

The 7/8 was not certain enough?

Sorry, I have overlooked this one. 7/8 should be enough.

Excellent point about these unmonitored web tests. What's to prevent someone doing 20 trials and submitting only one?

What's to prevent someone from taking the digital output from foobar and running it into an analysis program? Measure a difference, then vote.
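A sketch of the crudest version of that analysis, a null test: subtract one signal from the other and measure what is left relative to the original. It assumes the two captures are already loaded as float samples, sample-aligned and level-matched (the hard part in practice, not shown here). `null_residual_db` is a hypothetical helper, not a foobar2000 feature.

```python
import math

def null_residual_db(reference: list[float], candidate: list[float]) -> float:
    """Level of (candidate - reference) relative to reference, in dB.

    Assumes both signals are already sample-aligned and gain-matched;
    a real null test has to do that alignment first.
    """
    if len(reference) != len(candidate) or not reference:
        raise ValueError("signals must be non-empty and the same length")
    ref_power = sum(x * x for x in reference) / len(reference)
    diff_power = sum((c - r) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if diff_power == 0:
        return float("-inf")  # perfect null
    return 10 * math.log10(diff_power / ref_power)

# Toy example: one second of a 1 kHz sine at 44.1 kHz, and a copy with a tiny 3 kHz tone added.
fs = 44_100
ref = [math.sin(2 * math.pi * 1000 * i / fs) for i in range(fs)]
cand = [x + 0.001 * math.sin(2 * math.pi * 3000 * i / fs) for i, x in enumerate(ref)]
print(f"residual: {null_residual_db(ref, cand):.1f} dB")
```

The added tone is 1/1000 of the sine's amplitude, so the residual lands at about -60 dB. Spotting that in a residual is trivial for software, whether or not anyone can hear it.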

Fact is, it's very hard to prevent all possible cheating with home testing, if that is a major concern. Home testing might be more useful to help identify potential candidates for later proctored testing. Maybe hold proctored sessions at AES, Burning Amp, NAMM, etc., places where possibly interested folks might congregate. Bring lots of DAC-3's, of course. Did I mention it might be costly?

What's to prevent someone from...

Hard to prevent all cheats, as you say.

But the main problem is likely to be self-selection: often not deliberate fraud, just a tendency to report "successes" and leave out "failures".

Without full data the tests are practically useless.
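A quick simulation makes the self-selection point concrete. All assumptions here are hypothetical: every listener is purely guessing, runs 8-trial sessions until scoring 6/8 or better or gives up after six sessions, and posts only the success.

```python
import random

def simulate_selective_reporting(listeners: int, max_sessions: int, k: int, n: int,
                                 seed: int = 1) -> tuple[float, float]:
    """Hypothetical guessers run n-trial sessions until they score k or more
    (then stop and post it) or until max_sessions, then give up silently.
    Returns (share of listeners with a posted 'success',
             share of ALL sessions that reached k, i.e. the full record)."""
    rng = random.Random(seed)
    sessions = successes = 0
    for _ in range(listeners):
        for _ in range(max_sessions):
            score = sum(rng.random() < 0.5 for _ in range(n))  # pure guessing
            sessions += 1
            if score >= k:
                successes += 1
                break  # stop while ahead and post the result
    return successes / listeners, successes / sessions

posted_share, full_record_rate = simulate_selective_reporting(10_000, 6, 6, 8)
print(f"listeners posting a 'positive':     {posted_share:.1%}")
print(f"sessions reaching 6/8, full record: {full_record_rate:.1%}")
```

With these settings roughly 61% of the guessers end up with a "positive" to post, while the pooled full record still shows the chance rate of about 14.5% of sessions reaching 6/8. Only the full record reveals that nobody heard anything.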

The test process could be administered from a central site that only allows a repeat test if the previous test result is reported.

This could even be done manually, but it would be awfully tedious; I doubt Pavel is that keen.

There is still a risk of self-selection when people decide to quit after a bad result, whether after the first test or later.
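The central-site idea could look something like this minimal sketch: a hypothetical in-memory class, not an existing service. A new test token is issued only after the previous one has been reported, so failures stay in the pooled totals. It cannot stop someone quitting after a bad result, but every completed session up to that point is on record.

```python
import secrets
from dataclasses import dataclass, field

@dataclass
class AbxRegistry:
    """Hypothetical central server: a participant gets a new test only after
    reporting the result of the previous one."""
    open_tokens: dict[str, str] = field(default_factory=dict)  # participant -> outstanding token
    results: dict[str, list[tuple[int, int]]] = field(default_factory=dict)

    def issue_test(self, participant: str) -> str:
        if participant in self.open_tokens:
            raise PermissionError("report the previous test before starting another")
        token = secrets.token_hex(8)
        self.open_tokens[participant] = token
        return token

    def report(self, participant: str, token: str, correct: int, trials: int) -> None:
        if self.open_tokens.get(participant) != token:
            raise PermissionError("unknown or already-used test token")
        del self.open_tokens[participant]
        self.results.setdefault(participant, []).append((correct, trials))

    def totals(self, participant: str) -> tuple[int, int]:
        """All sessions pooled, failures included: (total correct, total trials)."""
        sessions = self.results.get(participant, [])
        return sum(c for c, _ in sessions), sum(t for _, t in sessions)

reg = AbxRegistry()
t1 = reg.issue_test("alice")
reg.report("alice", t1, 3, 8)   # the "failure" is recorded too
t2 = reg.issue_test("alice")
reg.report("alice", t2, 7, 8)
print(reg.totals("alice"))      # (10, 16)
```

The pooled 10/16 is what gets judged, not the 7/8 alone.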

This sort of stuff is a serious problem even in pharmaceutical tests, and they have better statistics nous than most DIY audio buffs, to say the least.

It is also a problem in physics experiments in high-energy particle accelerators and the like.

Their solution is to demand an extremely small probability of a chance result as evidence (the "five sigma" convention in particle physics).

That is fairly simple, but unlikely to make much headway with an audio crowd that doesn't even understand the importance of unbiased ("blind") tests.

Best wishes

David

Yeah, we have to have some amount of good faith.

I'm only on my work computer (travel), so I don't have foobar on this system. Is it possible to change the number of trials per ABX test from 8 to something like 20? 6/8 is very, very different from 15/20 (even if you're just picking the best-ofs).

Edit: yes, there's a huge amount of effort put into having your statistics set up BEFORE running the experiment and not peeking until you get the final report, largely to avoid just what you mention.
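To put 6/8 against 15/20, and to fix the pass mark before testing rather than after, here is a small sketch under the same simple model (independent 50/50 guesses, one-sided tail probability). The 1% level is an example choice, not a standard.

```python
from math import comb

def p_guess(k: int, n: int) -> float:
    """Chance of k or more correct out of n trials by pure 50/50 guessing."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n

def min_score(n: int, alpha: float) -> int:
    """Smallest score whose guessing probability is at most alpha (chosen before testing)."""
    for k in range(n + 1):
        if p_guess(k, n) <= alpha:
            return k
    return n + 1  # alpha not reachable with this many trials

print(f"6/8:   {p_guess(6, 8):.3f}")
print(f"15/20: {p_guess(15, 20):.3f}")
for n in (8, 16, 20):
    print(n, "trials -> need", min_score(n, 0.01))
```

On this model 6/8 is about 0.145 and 15/20 about 0.021. At a 1% level fixed in advance, 8 trials need 8/8, 16 trials need 14/16 and 20 trials need 16/20.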

Self-selection could be one probable issue, agreed.

Even if that were not an issue, if there were no positive result (files shown to be audibly different), it still might not be clear why not. Is it because the files are not audibly different to humans, because of masking by low-quality reproduction systems, because ABX has some of the weaknesses described by its critics, etc.?

Have to agree 100% there, and that is exactly what I am doing: listening for some fleeting 'something' to pick up on.

I find it truly remarkable that a ripped WAV file and a CD-DAC-power opamp chain driving a real load seem to give identical results subjectively. Oh, and not forgetting the A-to-D process to get it back again.

That is remarkable to me.

The same as I have done.

For me it's incredible how little difference, if any, there is between the tracks, taking into account all the parts involved in the "processed" track.

Yes, this is what I expected, but the values look very...suspect, to be polite.

The ABX creator may know software but seems not to understand statistical inference.

The values are wrong, I would agree.

If trials are random, guessing should give 50% correct answers, so 4/8 should show as the maximum likelihood of guessing.

Similarly, getting zero correct answers by guessing should be just as unlikely as all correct answers by guessing.
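To see the two quantities side by side, the sketch below prints, for 8 trials of pure guessing, the probability of exactly k correct and of k or more correct. Whether the ABX tool reports the second ("k or more") figure is an assumption here; the thread doesn't say how it is computed.

```python
from math import comb

n = 8
pmf = {k: comb(n, k) / 2 ** n for k in range(n + 1)}          # P(exactly k correct)
tail = {k: sum(pmf[i] for i in range(k, n + 1)) for k in pmf}  # P(k or more correct)

for k in range(n + 1):
    print(f"{k}/8  exactly: {pmf[k]:.3f}   k-or-more: {tail[k]:.3f}")
```

The "exactly" column peaks at 4/8 (about 0.273) and is identical for 0/8 and 8/8 (1/256 each). The "k or more" column instead falls steadily from 1.000 at 0/8, so a tool reporting it would show a 0/8 run as 100% "probability of guessing".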

Also interesting: in the past people have reported getting improbably low scores, that is to say, consistently lower than 4/8. Perhaps this suggests their brains are reacting to semi-audible differences without them being fully aware of what the differences are.

The difference is very noticeable: one file has less life and the other has a lot of highs.

Maty, it's not polite to call people deaf. If you want to be taken seriously you need to find a better way to talk to people. You may actually have very good ears, so to speak, but nobody will give you credit for it if you are rude about it.

However, for casual listening on my laptop I get the same type of impression about a difference as you, but to a lesser degree. I haven't moved the files to my audio processing computer yet for a closer listen.

Mark made a very good point the other day on the focus effect. Many times people even admit to listening for a single hit of a drum rim or cymbal to catch a fleeting anomaly. To me this is not listening to music.

Sometimes I don't listen to music if I am listening for distortion or something else. To me, ABX testing is not especially listening to music either.

What about music majors who are learning transcription skills? It can take some very focused listening at first, and some people never get as good at it as others. Are they listening to music?

Also, there is a difference between listening to music in the car or at work and sitting in the living room without any distractions. Are all situations equally "listening to music?" I wouldn't say they are.

Lastly, I don't know whether listening to music, or to distortion, or to whatever else, is an important distinction. I do know that the mere act of thinking about something makes it seem more important than it really is: Edge.org

... improbably low scores, that is to say, consistently lower than 4/8. Perhaps...

Possible to concoct an explanation of some subconscious, perverse desire to answer incorrectly.

But it is much more likely to be just random statistical variation.

Humans tend to see patterns, especially causal ones, even in noise.

Elvis faces in peanut butter smears, Jesus in a cloud swirl, and most of all, motivation and intent.

I am sure there are evolutionary reasons for this but it means humans are poor at evaluation of randomness.

That's why statistics was created, of course.

And these "improbably low scores" put the "correct" ones in perspective.
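A simulated crowd of pure guessers shows that perspective in numbers. Assumptions: 100,000 hypothetical listeners, 8 trials each, every answer a coin flip; the seed is arbitrary.

```python
import random

rng = random.Random(42)
n, listeners = 8, 100_000
scores = [sum(rng.random() < 0.5 for _ in range(n)) for _ in range(listeners)]

high = sum(s >= 6 for s in scores) / listeners  # "successes": 6/8 or better
low = sum(s <= 2 for s in scores) / listeners   # "improbably low": 2/8 or worse
print(f"scored >= 6/8: {high:.1%}   scored <= 2/8: {low:.1%}")
```

Both tails come out near 14.5% (the exact figure is 37/256 for each), so every handful of 6/8 "successes" from guessers is matched by about as many 2/8 "failures".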

Best wishes

David

Yeah, we have to have some amount of good faith.

I'm only on my work computer (travel) so don't have foobar on this system -- is it possible to change the number of trials per ABX test from 8 to something like 20? 6/8 is very very different (even if you're just picking the best-of's) from 15/20.

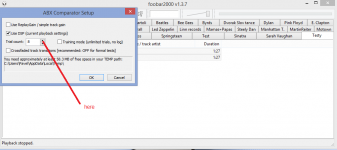

Sure, right at the start, when you select the files and call the ABX utility from the menu. Please see the image.

This test was intended to show that even relatively "high" levels of crossover distortion (0.01-0.1%) are still too low to be audible. It is rather the plot that looks bad; our ears will tolerate almost anything that is 60-70 dB below the main program. I wanted to perform the test on a real amplifier, and the OPA549 was a perfect candidate. It has low distortion when unloaded, and all the artifacts rise only under load. Inaudible artifacts. And the magnificent 7th harmonic as well. End of the myth.
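For converting between the two ways of quoting these levels: distortion is an amplitude ratio, so dB = 20·log10(ratio). A minimal sketch:

```python
import math

def percent_to_db(percent: float) -> float:
    """Distortion given as % of the fundamental -> level in dB relative to it."""
    return 20 * math.log10(percent / 100)

def db_to_percent(db: float) -> float:
    """Level in dB relative to the fundamental -> % of the fundamental."""
    return 100 * 10 ** (db / 20)

for pct in (0.1, 0.01):
    print(f"{pct}% -> {percent_to_db(pct):.0f} dB")
for db in (-60, -70):
    print(f"{db} dB -> {db_to_percent(db):.3f}%")
```

0.1% is -60 dB and 0.01% is -80 dB relative to the fundamental, while -70 dB is about 0.032%.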

Edit: the amp module shown was built in 2006.

If the results of being able to discern differences in this test are accepted as statistically valid, how can we be sure the difference was down to crossover distortion when the two files have been through totally different processes (ripped original for one, a replay and record chain for the other)?

It is one thing saying there is a difference, quite another to say it is down to one specific artefact.

Would a better test for crossover distortion not be a single amplifier with adjustable bias? For instance, optimum bias at 100 mA versus suboptimal bias at 10 mA.
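A crude way to see what such a bias sweep would change: model crossover as a static dead zone of width d around zero and look at the harmonics of a sine passed through it. This is a hypothetical textbook model, not the OPA549 or any real output stage; d only stands in for "less bias", and the mapping from bias current to d is not modelled.

```python
import math

def dead_zone(x: float, d: float) -> float:
    """Crude class-B crossover model: output stays at 0 until |x| exceeds d.
    d is a hypothetical stand-in for how under-biased the output stage is."""
    if abs(x) <= d:
        return 0.0
    return x - d if x > 0 else x + d

def harmonics(d: float, count: int = 9, samples: int = 4096) -> list[float]:
    """Amplitudes of harmonics 1..count of a unit sine passed through dead_zone,
    found by correlating one period against sin and cos of k*w*t."""
    ys = [dead_zone(math.sin(2 * math.pi * i / samples), d) for i in range(samples)]
    amps = []
    for k in range(1, count + 1):
        s = sum(y * math.sin(2 * math.pi * k * i / samples) for i, y in enumerate(ys))
        c = sum(y * math.cos(2 * math.pi * k * i / samples) for i, y in enumerate(ys))
        amps.append(2 * math.hypot(s, c) / samples)
    return amps

def thd_percent(d: float) -> float:
    """THD over harmonics 2..9, as % of the fundamental."""
    a = harmonics(d)
    return 100 * math.sqrt(sum(h * h for h in a[1:])) / a[0]

for d in (0.0, 0.001, 0.01):
    a = harmonics(d)
    print(f"d={d}: THD(2-9) {thd_percent(d):.3f}%   7th/fundamental {a[6] / a[0]:.2e}")
```

In this model only odd harmonics appear (the 7th included), and the harmonic content rises with d. A single amplifier with adjustable bias would vary just that one thing, which is the appeal of the proposal.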
