And then let us run a file compare to sort the samples. According to their sound, of course.

This was my concern when I first saw the test: for the test to work (with the files being available to the participants for examination) they DO need to be different from each other in some way, i.e. they can't all be exact copies of either A or B, or it is a trivial matter to sort them via software means.

I was wondering how you could get around this. I think what Mooly has is actually quite an elegant solution to that problem. As I said before, provided the sonic signature of the caps is more significant than any other subtle difference imparted by playback and recording, the fact that there are other differences present should not matter. I guess therein lies the rub (we don't know which is more significant). Damned if you do, damned if you don't.

I don't see that anyone has anything to lose by trying though. If you sort them and get them right then that is a very statistically significant result. If it's a null result you don't lose any face, as there is always the fact that there were other differences which may have clouded the ability to pick up the specific sonic signature of the caps.

So I don't see that anyone has anything to lose (except some time). Personally myself I don't see any point in me trying until I can pick a difference in the ABX because if I can't do that, I've got no hope with the sorting!

I'm actually wondering what it means statistically if I consistently pick wrong (ie 0/10)

Tony.
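On the 0/10 question, here is a minimal sketch of the arithmetic. It assumes each trial is an independent 50/50 guess, which is an assumption about the ABX setup rather than something stated in the thread. The function names are made up for illustration.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float = 0.5) -> float:
    """Probability of exactly k correct answers in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def two_sided_p(k: int, n: int) -> float:
    """Chance, under pure guessing, of a score at least as far from n/2 as k."""
    distance = abs(k - n / 2)
    return sum(binom_pmf(i, n) for i in range(n + 1) if abs(i - n / 2) >= distance)


print(binom_pmf(0, 10))    # 0.0009765625, i.e. 1/1024, same as for 10/10
print(two_sided_p(0, 10))  # 0.001953125, i.e. 2/1024
```

Under that assumption, 0/10 is exactly as unlikely by chance as 10/10. A consistent 0/10 would suggest the listener hears a difference but has the labels reversed.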

That is from what I recall from a day ago, and that was a pretty quick session to get a first appraisal... with more listening I could come up with extra descriptors.

Thanks Dan, results like this are what the test is all about. Listening. That's quite a list.

I am only concerned with listening tests in this context.

Unfortunately, copy and paste renders the test statistically unreliable. Once a difference is identified by file analysis, it's easy to match all the identical files to it. A 100% perfect result is assured. That's not what we want here. What we want is to see whether the sonic differences between film and electro (if there are any) carry through and can be identified on sonics alone.
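To illustrate why exact copies would defeat the test, here is a rough sketch of how byte-identical files could be grouped without any listening. The file names and contents are hypothetical stand-ins, and hashing is just one possible approach, not something anyone in the thread is known to have used.

```python
import hashlib
from collections import defaultdict


def group_identical(files: dict[str, bytes]) -> list[list[str]]:
    """Group file names whose contents are byte-for-byte identical."""
    groups = defaultdict(list)
    for name, data in files.items():
        # Identical content always yields the same SHA-256 digest.
        groups[hashlib.sha256(data).hexdigest()].append(name)
    return sorted(sorted(names) for names in groups.values())


# Hypothetical stand-ins for audio data; real files would be read with open(path, "rb").
demo = {
    "A.wav": b"film",
    "B.wav": b"electro",
    "Test01.wav": b"film",
    "Test02.wav": b"electro",
    "Test03.wav": b"film",
}
print(group_identical(demo))
# [['A.wav', 'Test01.wav', 'Test03.wav'], ['B.wav', 'Test02.wav']]
```

With independently recorded takes every file hashes differently, so this shortcut gives no information.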

Analysis of the files (by Pavel) has shown that we are dealing with 14 different files!

I mean that there should be, say, 5 exact copies of A and 7 exact copies of B distributed through the list according to the random generator.

A test result returning 100% correlation with the list would solidly confirm audibility of differences between A and B, despite the pb/rec chain perhaps not really being up to the task.

Pavel states that the magnitudes of the differences between A, B and Nr4 are similar... IOW at least three different recordings are captured.

IMHO, this invalidates this test, and because of the extra (12?) variables added, the test becomes too difficult, and can never be of any proper statistical value.

If you had stated that there are 14 different recordings, then with perseverance one could come up with an accurate list/result, but the difficulty becomes extreme.

Much better to run multiple exact copies of A and B.

Dan.

Opposite, no filter, thus step-like output and -3.92dB amplitude decrease at Fs/2. This is the very basis of D/A conversion, and the most important is written in English in the image. Just click on the image
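The -3.92 dB figure can be checked against the standard zero-order-hold response, |sin(πf/Fs) / (πf/Fs)|. The sketch below assumes an ideal hold lasting one full sample period. The function name is made up for illustration.

```python
import math


def zoh_droop_db(f_over_fs: float) -> float:
    """Amplitude droop in dB of an ideal zero-order hold at frequency f/Fs."""
    x = math.pi * f_over_fs
    if x == 0:
        return 0.0  # no droop at DC
    return 20 * math.log10(abs(math.sin(x) / x))


print(round(zoh_droop_db(0.5), 2))  # -3.92 (at Fs/2)
print(zoh_droop_db(20_000 / 48_000))  # droop at 20 kHz for Fs = 48 kHz
```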

You're confusing antialiasing (a recording function) with anti-imaging (reconstruction, a playback function).

See, for example, the first page of this paper:

http://www.nanophon.com/audio/antialia.pdf

Also see the Kester paper that your figure is taken from.

Oversampling and digital filtering eases the requirements on the antialiasing filter which precedes an ADC...

(emphasis mine) Also see Figure 2 from that paper, which shows the block diagram explicitly.

It's important to understand the difference between these filters and where they're used.

Dan, sorting 12 files by ear absolutely gives valid statistics. Of course the files are different; they were recorded independently, a necessary condition for controls. If you (or Pavel or anyone else) record the same thing 12 times, there will be some differences. You will be unlikely to hear the differences due to the recording, though of course your mind will perceive differences on repeated listening due to well-known factors.

The basic point is that if a factor is claimed to be audible, it will also involve at least two listenings, right? The only difference here is that you have to determine the difference by ear, if it can indeed be determined. If someone wants to use the excuse that the equipment isn't good enough to show the difference between a string of electrolytics and a good film cap, they are welcome to demonstrate otherwise, using actual controls appropriate to the experiment.

The same trained engineers who would sneer at a sensory scientist trying to measure distortion using an analog oscilloscope to display input and output traces, not seeing any difference, then concluding that the system has zero distortion, will blithely make the same error when they get outside their own expertise, set up an uncontrolled test, then claim that there's some validity. Mooly is smart and open-minded enough to institute real controls; the excuse-making has been highly amusing and sadly predictable.

Mooly, this is an interesting thread which I have been enjoying fully. However, as some are questioning the integrity of the recordings, I would like to question the integrity of people's memory. As one grows older, one's short-term memory gets worse, to the point where remembering a phone number or a name for more than a few minutes becomes a daunting task.

Can anyone partaking in this thread state that they can remember more than, say, 38 million samples of a data stream and accurately cross-correlate it with another 38 million samples of another data stream with some fractional differences, after some time has passed? In my opinion, people are dreaming.

I agree with Wally. I would agree on different takes only in case that the replay/record chain was 'good enough', which is not the case here.

I disagree with Pavel. The only differences are the two audio streams, the rest is a constant. The equipment has no effect on what you perceive as being different in the audio streams because both are affected equally by the equipment.

His point is that if the recording process is so flawed that it obscures the differences under test, that invalidates any null result. And I think it's a valid point, assuming the "if" to be true. I am agnostic as to whether that's actually true here or not (though I suspect not), but if he thinks the recordings can be done better, he can run his own test but using real controls appropriate to the test instead of the uncontrolled stuff he's done previously.

From the standpoint of appropriate controls for this kind of audibility test, Mooly has done a superb job.

Hi Nico. I was going to type something then but have forgotten what it was

An interesting angle you have there. If any statistical preference emerges, could those listeners then return after a short while and just listen and say, yes that's the electros or yes, that's the film? That would be a tall order, and yet if someone came in the night and swapped your CD player for another, or your amp, would you notice? Maybe not at first, but after a while "something" wouldn't sound right... hopefully. We are pretty good at knowing what we like long term. Maybe that's a bit different to carrying a musical or sonic signature in your head.

One thought I just had: if we took one output from a source, split it and then digitised it on two PCs at the same time, would the two files stand comparison and show as "identical"? I suspect not, for all the reasons that have been pointed out to me. If we then listened to those files, would they sound the same? I think to a listener they would, even if file analysis showed a difference.

Sorry, that's all a bit rambly. Hope it makes sense.

For anyone interested in how the difference between A and B "sounds", here you are. The file is not long; it covers only about the first 30 seconds.

https://www.dropbox.com/s/e8t7dn75fhq8i5c/Bs-As.wav

The constant chirp between 2 - 7kHz is the method error. Compare it, in audibility, with the musical sound difference.

I would like to repeat that:

1) I have passed the ABX test between A and B files with 8/10 score

2) Mooly sent me another set of recordings of the same thing and I got 8/9

3) I have described to Mooly, in a PM, the sound difference and paired which is which, in (1) and (2)

4) I have tried Test04 and paired it to one of A or B in a PM

I will NOT try another 11 files.

To me, the test is technically flawed. Regardless of the test method (algorithm) used, it must be technically perfect. To me, this is amateurish, so I will leave the thread and not bother you with my comments.
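For context on the 8/10 and 8/9 scores quoted above, here is a minimal sketch of the usual one-sided ABX calculation. It assumes each trial is an independent 50/50 guess under the null hypothesis. The function name is made up for illustration.

```python
from math import comb


def abx_p_value(correct: int, trials: int) -> float:
    """Chance of scoring at least `correct` out of `trials` by guessing alone."""
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2**trials


print(abx_p_value(8, 10))  # 0.0546875  (56/1024)
print(abx_p_value(8, 9))   # 0.01953125 (10/512)
```

On this assumption the 8/10 run sits just above the conventional 5% threshold and the 8/9 run falls below it. Whether the two runs may be pooled depends on how they were conducted, which is not stated here.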

To me, this is amateurish, so I will leave the thread and not bother you with my comments.

To me, you produced only an excuse to avoid the test.

A and B may be compared by ABX and found to be statistically different. The test subject may even find a subjective description of the difference, and a preference.

Keeping A as master reference and doing ABX with files 1-12 leads to possible results:

Slam dunk result is that each comparison leads to high confidence difference for each of 1-12 and a perfect sort of recording conditions results.

A much higher probability exists that the sort will result in a partially correct sort, and the test would need to be repeated to find if statistical significance exists. A lot of work, multiplied by number of subjects.

The systems under test are modulated by the test signal, in this case a music track. The modulation depth and bandwidth are constrained by that of the system under test and that of the test signal. In this case the test signal is limited by bandwidth of musical instruments, recording chain used to capture the music, and additional filters potentially used in rendering the recording.

Clearly the music signal is not capable of stimulating the DUT units to level where the modeled high pass functions may be resolved. Likewise the temporal distribution of signal components, and harmonic content of the signal components mask possible analysis of DUT contribution of temporal and harmonic aberration.

Pavel's use of difference file technique resolves temporal aberration. Under better controlled conditions it may reveal underlying transfer function characteristic. Here it primarily reveals only aberrations of original recording and the recording's rendering process. This is partially responsible for lumpy mess around 4kHz seen in his posted difference file spectrum. Lack of perfect temporal alignment is also big factor. This picture also shows lack of content below 60Hz returning what is effectively a noise result that precludes resolving the high pass aspect of the devices under test, and of the D/A and A/D units in the test chains.

Difference file technique is a direct form of correlation. Difference results need only be long enough for spectral analysis. Pavel's window is 65536 samples, quite adequate for audio spectrum of 48kHz sample rate. Movement of window across different regions of result file yields very stable result.

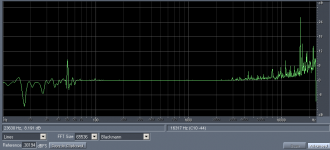

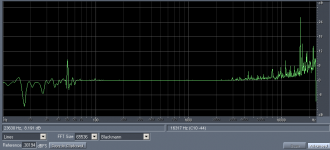

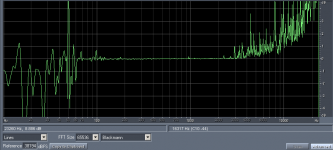

Nico mentions cross correlation. Here too complete files need not be analyzed, bringing solution easily within realm of PC. Division of spectra from same sample region returns flattened spectrum for the stimulated bandwidth. With B/A the result looks like this:

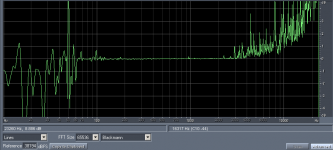

And zoomed in:

The above two pics tell essentially same story as Pavel's pic.

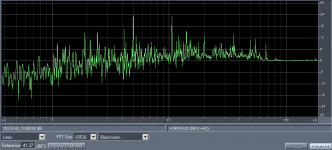

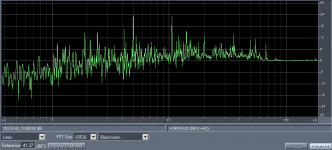

Sample rate conversion to fs 375Hz allows 65k spectrum to look at low frequencies:

Smoothing could be applied to the above and would reveal some manner of low frequency high pass filter, but it would not just be that of differences of devices under test. It would include convolved response of both D/A and A/D converters.
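As a rough check on the numbers quoted above, the sketch below works out the FFT bin spacing and window duration for a 65536-sample window at 48 kHz and at 375 Hz. That 375 Hz comes from dividing 48 kHz by 128 is an inference from the arithmetic, not something stated in the post. The function names are made up for illustration.

```python
def fft_bin_spacing_hz(fs_hz: float, n_samples: int) -> float:
    """Frequency spacing between adjacent FFT bins."""
    return fs_hz / n_samples


def window_seconds(fs_hz: float, n_samples: int) -> float:
    """Length of audio covered by one analysis window."""
    return n_samples / fs_hz


N = 65536  # window length quoted in the post
for fs in (48_000, 375):
    print(
        f"fs={fs} Hz: bin spacing {fft_bin_spacing_hz(fs, N):.5f} Hz, "
        f"window {window_seconds(fs, N):.2f} s, Nyquist {fs / 2} Hz"
    )
```

At 375 Hz the same window length spans almost three minutes of audio, which is what buys the finer low-frequency resolution.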

Even if testing in this thread results in statistically relevant results, nothing new is learned. It would only raise the question of why differences exist.

Measurements with controlled bandwidth and high signal to noise are only useful path to real answers.

Working with music files only answers if listeners can hear differences. This may be useful in identifying least expensive, or smallest part that may be used in a design. Design exclusively by such methods is hack work.

"The systems under test are modulated by the test signal, in this case a music track. The modulation depth and bandwidth are constrained by that of the system under test and that of the test signal. In this case the test signal is limited by bandwidth of musical instruments, recording chain used to capture the music, and additional filters potentially used in rendering the recording.

Clearly the music signal is not capable of stimulating the DUT units to level where the modeled high pass functions may be resolved. Likewise the temporal distribution of signal components, and harmonic content of the signal components mask possible analysis of DUT contribution of temporal and harmonic aberration."

But apart from all that, whatever it means, can you HEAR a difference between a "good" plastic cap and a chain of grot elkos?

I bet you think you can ..... Night & Day etc etc

I'm really sorry you feel that way Pavel, but I'm puzzled. You mentioned more than once the differences were clearly audible and to that end you have produced files and images that may or may not show the real differences (and I'm just not qualified to say on that, as to whether the difference files and images have flaws in the same way my files may have ... or may not have ... I just don't know). And now you post a file that lets us hear the difference as you "see" it, and where you relate that to the audible sound of the files.

Have to ask... can you be so sure that your processes, however better the equipment may or may not be, produce flawless results. I have to question that.

Ultimately, whatever methods you are using, ears, analysis, both, intuition, whatever it is... if you can do all that you have, then you have cracked the key... you can identify which is which.

The time taken to debate it could have got you the result

I'm calling it a night... you all enjoy the weekend.

The fallout from this test is certainly very interesting ...

Part of the equation is the playback environment, it 'taints' what is heard, because of all the usual factors. Plus, the experience, and perspective of the listener - so many variables ... !!

My assessment of the A and B samples is quite different from what has already been mentioned, but for the sake of not adding more 'confusion' to the mix I won't say for the moment - I have "learnt" to key into certain aspects of the A and B that are particularly important to me, and using these criteria found it straightforward to sort half the samples into 2 bins. Later today I'll do the rest of the samples, and then double check some of the earlier choices, to see if a consistency is still there.
