You cannot step into the same river twice.

Karl, I wrote this for the reason you are asking. I do not suspect the CD player that much (it always plays the same data); I suspect rather the sound card used (sampling is not simultaneous across different recordings, so the samples occur at different time points, there is some jitter, etc.). That's also the reason why I asked for 24-bit recordings. We should also always have a set of distortion measurements of the devices used (both D/A and A/D) to predict whether they are sufficient for the task we want to investigate.
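To put a rough number on the non-simultaneous sampling point: if two otherwise identical recordings of a pure tone differ only by a small fixed time offset dt, subtracting one from the other leaves a residual with relative amplitude 2*|sin(pi*f*dt)|. The short sketch below evaluates that in dB. The function name and the offset of one tenth of a 44.1 kHz sample period are illustrative assumptions, not values measured in this test, and the model ignores noise, random jitter and clock drift.

```python
import math

def offset_residual_db(freq_hz: float, offset_s: float) -> float:
    """Level of x - y relative to x, in dB, where y is the tone x delayed by offset_s.

    Uses sin(a) - sin(a - b) = 2*sin(b/2)*cos(a - b/2) with b = 2*pi*f*dt,
    so the residual amplitude is 2*|sin(pi*f*dt)| times the tone amplitude.
    A zero offset gives no residual at all (log of zero), so offset_s must be nonzero.
    """
    ratio = 2.0 * abs(math.sin(math.pi * freq_hz * offset_s))
    return 20.0 * math.log10(ratio)

fs = 44_100.0
dt = 0.1 / fs  # hypothetical offset: one tenth of a sample period (about 2.3 us)
for f in (100.0, 1_000.0, 10_000.0):
    print(f"{f:>6.0f} Hz: residual {offset_residual_db(f, dt):6.1f} dB")
```

Even this small fixed offset leaves a residual that grows with frequency, which is one way a file comparison can report differences unrelated to the component being swapped.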

The sampling aspect makes sense on a theoretical level; I assume the same processes are at work when the files are analysed, too.

I can't help feeling we have ended up with two "separate" tests running here: those who are listening and evaluating differences, and those using software to pick out minute differences between files. Don't misunderstand me, both are valid approaches, but what we are interested in here is purely listening impressions. Would minute differences such as you observe disappear with 24-bit depth? I haven't the expertise or experience to say one way or the other on that.
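For reference on the 24-bit question: the textbook limit for an ideal N-bit converter reproducing a full-scale sine is SNR = 6.02*N + 1.76 dB. The sketch below computes it directly (the function name is just for illustration). It describes quantization noise only; real converters fall short of this ideal, and it says nothing about timing differences between separate recordings.

```python
import math

def ideal_sine_snr_db(bits: int) -> float:
    """Ideal SNR of a full-scale sine against uniform quantization noise (6.02*N + 1.76 dB)."""
    # Sine RMS = A/sqrt(2); quantization noise RMS = q/sqrt(12) with q = 2A / 2**bits,
    # so the ratio of the two is 2**bits * sqrt(3/2).
    return 20.0 * math.log10(2 ** bits * math.sqrt(1.5))

for n in (16, 24):
    print(f"{n}-bit: {ideal_sine_snr_db(n):.2f} dB")
```

That works out to about 98 dB for 16 bits and about 146 dB for 24 bits.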

And as Tony goes on to say,

If all the files could be determined to be identical to either A or B via software analysis, then we would not have a blind test!!

What is being tested here is the ability to pick up on a sonic signature imparted by particular components. If this sonic signature is large (and detectable), then regardless of other very minor differences (perhaps due to jitter or low-level noise, etc.) it should be possible to sort the files.

So whilst there may be some other differences between files, what is being tested is the ability to pick up a particular sonic difference which should be consistent.

It is of course possible that differentiation between A and B (and successful ABX testing) could be due to differences other than the one being tested, in which case the sorting may fail (as what is being heard as a difference is not the thing being tested).

One would hope, however, that the sonic signature being tested for would swamp any random differences in playback/recording.

Tony.

That to me sums it up nicely. What you are listening for is the character of each component combination. If the files were all the same (if I had just copied the same one over and over), then those using software analysis would be able to match all the files easily... job done.

Tony's last paragraph "One would hope that....." is the crux of the matter.

An analogy for those of you who like vinyl: imagine perfect sampling of your favourite record being played. You repeat the process a few times so that you have a few files. You could guarantee that each would be "different" due to the mechanical nature of the process, yet each time you listen you "hear" the characteristic sound of your record-playing setup, even though it is actually different every time. The characteristic sound of your setup swamps those differences every time, and that is what shines through.

Good observation/analysis, Tony. Did you know that this is a strawman test?

Quite the opposite, I'm pretty sure it isn't. However, I do think it is interesting just how hard it is to discern any difference (at least so far for me, with the equipment I have tried).

I have read SY's "Testing 1 2 3" article from Linear Audio, which details tests like this. It is well worth reading. I think anyone who reads it will realise that tests like this are not "set-ups" but a tool. The important thing is to keep an open mind, and to learn something in the process, whatever the outcome turns out to be!

I did quite well on Pano's tests with the different interconnects, but this one has me scratching my head so far.

Tony.

I did quite well on Pano's tests with the different interconnects, but this one has me scratching my head so far.

That's the point. You can set up an objective question and get the right process to get to the right answer. Or you can set up a conclusion and get the suitable process to get to the conclusion.


Karl, I am not sure about this. I was the first to post a positive 8/10 ABX screenshot proving I had discerned the "A" and "B" files by listening, without any previous analysis of these files. The result says, to me, that there is an audible difference between the files. Is the difference caused by the capacitor exchange? I do not know. Maybe yes, maybe no; the only conclusion for me is that the files are different and that I can hear it and prove it by ABX.

Then I was asked to pair A and B with 12 other files. Huh! That would be a very difficult job. So I was curious what the difference between the files was and whether it was worth the time. First, I checked the A and B files and found differences. OK, the two files differ, it was audible, and I appreciated your work. Then I downloaded "Test04" and compared it by analysis to A and B, respectively. You know why: it should resemble one of them. But which? Test04 differs from A, differs from B, and the 'quantity' of the difference is similar. So how do I 'pair' it? I started to suspect the technical quality of the method used and came to the conclusion that the differences caused by the method itself might be larger (at least in my opinion) than the difference caused by the capacitors. After that, I cannot 'pair' files to A and B; I do not want to pair impairments of the method.

This is my personal conclusion. But I do not agree that I was doing analysis prior to listening. I discerned A vs. B purely by listening, and started to analyse only when I was asked to listen to another 12 files, which would be a very tiring job resulting in hearing fatigue and loss of concentration and resolution, so I wanted to know whether it was worth doing. To me, it is not. Of course, anyone can draw his own conclusion, different from mine.
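Pavel does not say which tool he used for the comparison. A common way to quantify how different two captures are is a null test: align them, subtract, and express the residual RMS relative to the reference. The sketch below is a generic, standard-library-only version assuming mono float samples at the same rate and length; the function names are hypothetical, and it aligns only by whole samples (no sub-sample or clock-drift correction), so captures offset by a fraction of a sample will still leave a residual.

```python
import math

def rms(xs):
    """Root-mean-square of a sequence of samples."""
    return math.sqrt(sum(x * x for x in xs) / len(xs))

def best_lag(ref, other, max_lag):
    """Whole-sample lag of `other` that best lines it up with `ref` (max cross-correlation)."""
    def corr(lag):
        a = ref[max_lag:len(ref) - max_lag]
        b = other[max_lag + lag:len(other) - max_lag + lag]
        return sum(x * y for x, y in zip(a, b))
    return max(range(-max_lag, max_lag + 1), key=corr)

def null_depth_db(ref, other, max_lag=0):
    """Residual of (ref - aligned other) relative to ref, in dB; more negative means more alike."""
    lag = best_lag(ref, other, max_lag) if max_lag else 0
    a = ref[max_lag:len(ref) - max_lag]
    b = other[max_lag + lag:len(other) - max_lag + lag]
    residual = [x - y for x, y in zip(a, b)]
    r = rms(residual)
    return -math.inf if r == 0 else 20.0 * math.log10(r / rms(a))

# Synthetic stand-ins for real captures (44.1 kHz, 1 kHz tone, 0.1 s).
fs = 44_100
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(fs // 10)]
quieter = [0.99 * x for x in tone]   # 1 % lower level
delayed = [0.0] * 3 + tone[:-3]      # same tone, 3 samples late
print(null_depth_db(tone, tone))                # bit-identical: -inf
print(null_depth_db(tone, quieter))             # about -40 dB
print(null_depth_db(tone, delayed))             # unaligned: large residual
print(null_depth_db(tone, delayed, max_lag=5))  # aligned by 3 samples: -inf
```

Comparing Test04 against A and against B this way, and getting two similar residual levels, is the situation Pavel describes.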


Hi Pavel,

The time factor is the killer on a test like this; that goes without saying, I suppose, but it is necessary for the outcome to be statistically valid.

If the difference you hear is down to the cap, then that same difference should be apparent in all the files. That is what I was hoping for. As you know without my even saying, the files can't be matched by examination alone; they are all unique. Perhaps my "record player" analogy above is similar.

Can I ask... is it a clear difference you hear between A and B?


Hi Karl,

If method error masked possible distinctions caused by the parts, then the method would not be useful.

I would not call the difference between "A" and "B" significant. But I can describe it. Compared to "A", "B" sounded more "rounded" and less dynamic to me. Something in the mids/highs was less defined in "B". Not a very big difference, though. If the difference were really big, I would have got 10/10 instead of 8/10.
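The arithmetic behind an ABX score is short enough to show. Assuming each trial is an independent 50/50 guess when nothing is audible, the chance of scoring at least k out of n is the upper binomial tail. The sketch below (function name hypothetical) evaluates it for the scores mentioned here and, under the same assumption, for sorting all 12 extra files correctly by luck.

```python
from math import comb

def p_at_least(correct: int, trials: int) -> float:
    """Chance of getting at least `correct` of `trials` right by guessing (p = 0.5 per trial)."""
    return sum(comb(trials, k) for k in range(correct, trials + 1)) / 2 ** trials

for score in (8, 9, 10):
    print(f"ABX {score}/10 by chance: p = {p_at_least(score, 10):.4f}")
# Sorting all 12 extra files correctly by luck alone, under the same assumption:
print(f"12/12 by chance: p = {p_at_least(12, 12):.6f}")
```

Under the common 5 % criterion, 8/10 (p of about 0.055) sits just above the line, while 9/10 or better would clear it.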


Thanks... appreciate you trying to describe what you hear.

Karl, may I ask you a question? What DAC did you use for these tests?

It is like this one: a Philips TDA1540.

http://www.diyaudio.com/forums/ever...test-part-2-active-circuitry.html#post3800034

So, it has no anti-aliasing output filter?

I thought anti-aliasing was before the A/D, not at the output. Most D/As I'm familiar with use a reconstruction or anti-imaging filter.

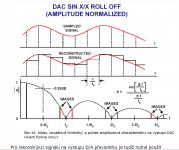

Yes, but early Philips chips had a step-like output waveform. You need output anti-aliasing, otherwise you get a sin(x)/x envelope.

Is it this TDA1540? 14-bit converter?

http://www.dutchaudioclassics.nl/philips_tda1540/


Hi Pavel, hi Karl.

My take on the sounds is that A sounds quite clear, with a 'politeness' compared with B, which to my ear has a subtle lack of clean highs, a slight hardness/harshness in the mids, slightly lumpy bass, and a 'false' sense of dynamics.

A is listenable long term, but B is not....in comparison.

Dan.

Yes, but early Philips chips had a step-like output waveform. You need output anti-aliasing, otherwise you get a sin(x)/x envelope.

I can't read the Czech, but that sure looks like an anti-imaging/reconstruction filter.


Opposite: there is no filter, thus a step-like output and a -3.92 dB amplitude decrease at Fs/2. This is the very basis of D/A conversion, and the most important part is written in English in the image. Just click on the image.
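The -3.92 dB figure follows from the sin(x)/x (zero-order hold) response of a step-like output, |H(f)| = |sin(pi*f/Fs) / (pi*f/Fs)|, which at f = Fs/2 equals 2/pi. The sketch below evaluates it at a few frequencies and at the first image of a 20 kHz tone (Fs - 20 kHz), which is what a reconstruction/anti-imaging filter has to remove. Fs = 44.1 kHz is an assumption here: if the converter is fed from an oversampling digital filter, Fs in the formula is the converter's update rate instead. The function name is illustrative.

```python
import math

def zoh_gain_db(freq_hz: float, fs_hz: float) -> float:
    """Zero-order-hold (step output) magnitude at freq_hz relative to DC, in dB."""
    x = math.pi * freq_hz / fs_hz
    if x == 0:
        return 0.0
    return 20.0 * math.log10(abs(math.sin(x) / x))

fs = 44_100.0  # assumed update rate of the converter
for f in (1_000.0, 10_000.0, 20_000.0, fs / 2):
    print(f"{f:>7.0f} Hz: {zoh_gain_db(f, fs):6.2f} dB")
# At fs/2 the result is 20*log10(2/pi), about -3.92 dB.
# A 20 kHz tone also produces an image at fs - 20 kHz; without a reconstruction
# filter that image is only slightly weaker than the tone itself.
print(f"image at {fs - 20_000.0:.0f} Hz: {zoh_gain_db(fs - 20_000.0, fs):6.2f} dB")
```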

Gold Star For Trying.....

Pavel, thank you for doing your analysis, and showing that there are extra (unintended) variables in this test.

So sorry, Karl, in this case your experiment is greyed, and not quite the test that it could be.

Your method of swapping the series cap networks in and out according to your random generator list is adding extra variables, unfortunately.

Perhaps you might have been better to record A and B once only, and then copied/pasted and renamed according to that random generated list.

For bandwidth reasons, I have not downloaded the extra 12 (24) files yet.

Dan.

Perhaps you might have been better to record A and B once only, and then copied/pasted and renamed according to that random generated list.

And then let us run a file compare to sort the samples. According to their sound, of course.

So, it has no anti-aliasing output filter?

Yes, but early Philips chips had a step-like output waveform. You need output anti-aliasing, otherwise you get a sin(x)/x envelope.


Perhaps it's a terminology issue (like calling any suction cleaner a hoover, no matter what make it is). I would have said anti-aliasing without giving it a thought... if you said reconstruction filter, I wouldn't question it. It needs the low-pass active filter to remove the final traces of the reconstruction (there we go... reconstruction) process.

Whatever, it is the same for both test chains.


Thanks, Dan; results like this are what the test is all about. Listening. That's quite a list.


Unfortunately, copy and paste renders the test statistically unreliable. Once a difference is identified by file analysis, it's easy to match all the identical files to it, and a 100% perfect result is assured. That's not what we want here. What we want is to see whether the sonic differences between film and electrolytic capacitors (if there are any) carry through and can be identified on sonics alone.
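The copy-and-paste objection can be shown in a few lines. The sketch below assumes the suggested procedure (one recording each of A and B, copied and renamed according to a random list) and then groups the resulting files by SHA-256 digest: bit-identical copies collapse into two groups with no listening at all, whereas separately recorded takes each hash differently. The function names, file names like Test01 and the balanced 6/6 split are hypothetical choices for illustration, and the in-memory byte strings stand in for real WAV files.

```python
import hashlib
import random
from collections import defaultdict

def make_blind_copies(a_data: bytes, b_data: bytes, n: int, seed: int) -> tuple:
    """Copy one A take and one B take into n renamed files.

    Returns (files, key): files maps a hypothetical name like 'Test01' to its bytes,
    key maps the same name to 'A' or 'B' (the hidden answer sheet).
    """
    rng = random.Random(seed)
    labels = ["A"] * (n // 2) + ["B"] * (n - n // 2)  # balanced split (an assumption)
    rng.shuffle(labels)
    files, key = {}, {}
    for i, label in enumerate(labels, start=1):
        name = f"Test{i:02d}"
        files[name] = a_data if label == "A" else b_data
        key[name] = label
    return files, key

def group_identical(files: dict) -> list:
    """Group names whose contents are bit-identical, using SHA-256 digests."""
    groups = defaultdict(list)
    for name, data in files.items():
        groups[hashlib.sha256(data).hexdigest()].append(name)
    return sorted(sorted(names) for names in groups.values())

# Stand-in byte strings; a real check would hash the bytes of each WAV file.
files, key = make_blind_copies(b"take of A", b"take of B", n=12, seed=1)
groups = group_identical(files)
print(len(groups))  # two groups: every copy of A together, every copy of B together

# Twelve separate recordings: every file differs, so hashing cannot sort them.
separate = {f"Test{i:02d}": f"take {i}".encode() for i in range(1, 13)}
print(len(group_identical(separate)))  # 12
```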

And then let us run a file compare to sort the samples. According to their sound, of course.

Of course


Listening Test Part 1. Passives.