Cadstar is one of the cheaper options, though Altium is good value at the moment.

Yes, the higher-end software is not cheap, and for a lot of designs it is overkill, but when you do a 12-24 layer design with, say, 10,000+ pins you appreciate it.

Been thinking how I would do a digital design, well, more an integrated design, but it would be by the numbers!!! First thing I would do is use a one-box solution; then you only have one protective earth connection, thus avoiding any earth loops in one go.

More later, I'm supposed to be working!!

I have posted the following reasoning in this thread ("NOS DAC idea", started by Barrows and sponsored by Twisted Pear Audio):

It seems that multi-bit raw output from sigma delta ADC's (usually 6 bits with samples as high as the master clock frequency allows) is usually processed by the IC itself, which delivers at its interface a 16-bit, 24-bit or DSD stream. TI PCM4222 is one exception.

Q.1 - What is the bottleneck in the audio chain preventing the use of such raw output as "distribution file"? Is it storage? Is it Internet bandwidth? Is it USB bandwidth?

Q.2 - Would a "discrete, 6-bit, R-2R ladder, with voltage output using foil resistors" also suffer from noise modulation? Would it also require the large amounts of negative feedback that sigma-delta DAC ICs use?

Q.3 - Does converting and downsampling a 1-bit, 2.8224 MHz DSD file to a 6-bit, 2.048 MHz file require any mathematical estimation? Or is it just a matter of transposition?
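For what it's worth, a quick check of the two rates quoted in Q.3 suggests it is probably not a pure transposition. The sketch below only reduces the ratio of the two sample rates to a fraction; the function name is made up for illustration. Because the ratio is not a whole number, the output sample instants do not line up with the input ones, so some interpolation or filtering step seems unavoidable.

```python
from fractions import Fraction

DSD64_RATE_HZ = 2_822_400   # 1-bit DSD rate quoted in Q.3
TARGET_RATE_HZ = 2_048_000  # 6-bit rate proposed in the post

def rate_ratio(source_hz: int, target_hz: int) -> Fraction:
    """Return source/target as a reduced fraction (hypothetical helper)."""
    return Fraction(source_hz, target_hz)

ratio = rate_ratio(DSD64_RATE_HZ, TARGET_RATE_HZ)
print(ratio)                   # 441/320
print(ratio.denominator == 1)  # False: the two rates are not integer-related
```

With a 441/320 ratio, 441 input samples span the same time as 320 output samples, so only one output sample in every 320 coincides with an input sample.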

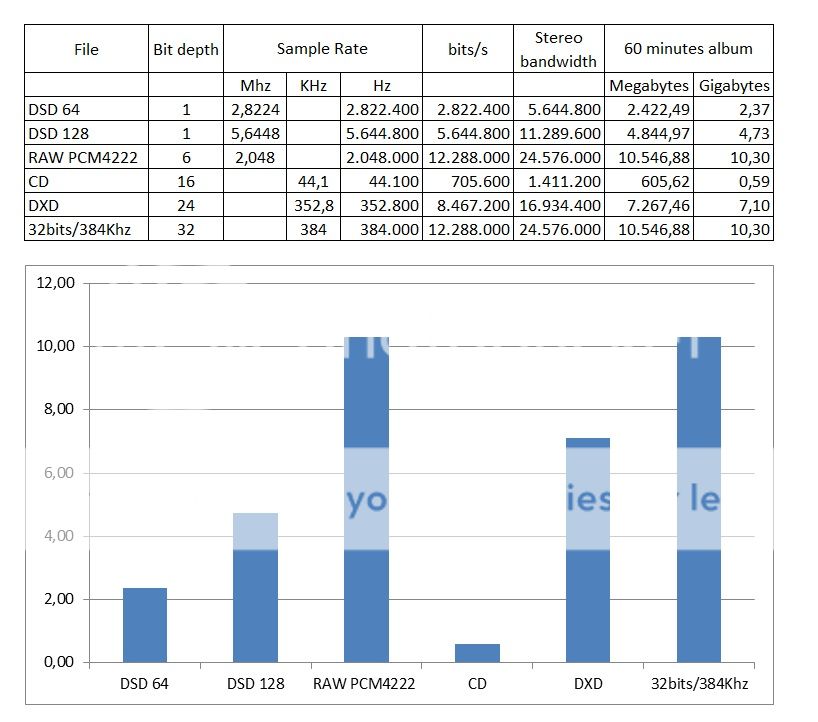

I see bit depth and sample frequency as axes of the same component (resolution or mbytes/s).

If I were allowed to use a gross analogy, it would be the relativity of time and space. Time and space are axes of the same component, which is the speed of light. Time and space are relative, but you cannot change the speed of light.

So you can reduce bit depth (space) and increase sample frequency (time) and vice-versa, and you will always obtain the same "resolution", "dynamic range" or "mbytes/s" (the speed of light...) .

But the "resolution", the "dynamic range" or "mbytes/s" of your master recording file must remain the same as that of your distribution file.
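To put numbers on the trade-off, here is a minimal sketch that holds the per-channel bit rate fixed at the 6-bit, 2.048 MHz figure and asks what sample rate each bit depth could afford. The helper name and the list of bit depths are just illustrative choices. Note that an equal bit rate does not by itself guarantee an equal dynamic range in the audio band, since noise shaping also comes into it.

```python
BUDGET_BITS_PER_S = 6 * 2_048_000  # per-channel bit rate of the proposed format

def sample_rate_for(bits: int, budget: int = BUDGET_BITS_PER_S) -> float:
    """Sample rate in Hz that spends the whole bit budget at a given bit depth."""
    return budget / bits

for bits in (1, 6, 16, 24):
    print(f"{bits:2d} bit -> {sample_rate_for(bits) / 1e3:9.1f} kHz")
```

So the same budget buys 1 bit at 12.288 MHz, 16 bits at 768 kHz or 24 bits at 512 kHz.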

Otherwise, you will need digital filters. There is nothing wrong with using them to convert your raw ADC output to lower resolution files. But digital filters seem to be more complicated when used at the playback side, which usually has a distribution file with lower resolution than the digital filter output. That means such digital filters must rely on estimation algorithms.

Even if master recording files and distribution files have the same resolution, but different bit depths and sampling rates, I suppose there are side effects of translating bit depth and sampling rate.

A raw output from a 1-bit sigma delta that is sampled with a high-jitter clock may result in a 6-bit file with wrong voltage levels, since the sample was taken at the wrong moment in time, when the wave energy was higher or lower. The same thing happens with a multi-bit sigma-delta raw output that is also sampled with a high-jitter clock. Such a raw output file will be translated into a 24-bit file that also has wrong quantization levels. But at least having only 2^6 different voltage levels in the raw output reduces the magnitude of such quantization error.
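As a rough yardstick for the size of this effect, the sketch below uses the usual small-error approximation for a sine wave: the worst-case amplitude error is the slope at the zero crossing times the timing error, i.e. 2πf·Δt as a fraction of peak amplitude. The 20 kHz tone and the 1 ns timing error are assumed example values, not measurements, and comparing against a single quantization step ignores noise shaping.

```python
import math

def worst_case_jitter_error(freq_hz: float, jitter_s: float) -> float:
    """Peak amplitude error, as a fraction of peak amplitude, for a sine
    sampled jitter_s too early or too late at its steepest point."""
    return 2 * math.pi * freq_hz * jitter_s

def lsb_fraction(bits: int) -> float:
    """One quantization step as a fraction of peak amplitude (full range is 2x peak)."""
    return 2 / (2 ** bits)

err = worst_case_jitter_error(20_000, 1e-9)  # assumed: 20 kHz tone, 1 ns timing error
print(f"jitter error: {err:.2e} of peak")
print(f"6-bit step  : {lsb_fraction(6):.2e} of peak")
print(f"24-bit step : {lsb_fraction(24):.2e} of peak")
```

Under these assumptions the timing error is far smaller than one 6-bit step but much larger than one 24-bit step.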

So I believe that not only the resolution of your master recording file must remain the same as that of your distribution file, but also its bit depth and its sample rate.

It is just a matter of choosing a viable standard for the benefit of the whole industry and consumers.

I understand that reducing the bit depth of your master recording has its virtues (storage, bandwidth) and compromises (quantization noise). The same goes for increasing the sample rate of your master recording, which has virtues (easing analog filters) and compromises (storage, bandwidth).

IMHO, and it is just an opinion, since I have no background to support the idea, the audio chain that I described in that post is a good compromise.

If a master recording file with enough resolution (mbytes/s) is also used as distribution file, there is no need for estimation oversampling, in other words, digital filters.

Sure, it would not be "the best" playback hardware for the vast majority of distribution files available nowadays (16 bit or 24 bit, with moderate sampling rate). Converting such files to the 6 bit, 2.048 MHz format supported by such a discrete DAC would still require a digital filter with an estimation algorithm (at least computer software can convert the files asynchronously, with all the CPU power available and in several iterations), and they would also have the quantization inaccuracies related to the jitter at the raw ADC output.

But it will be the best for everybody if the distribution file sticks to 6 bits and ~= 2.048 MHz:

Some people give up digital filters, which increases the burden on analog filters.

Some people give up analog filters, but then they need digital filters with estimation algorithms, or they leave the playback chain under the influence of high frequency energy (images from 16 bit or 24 bit files or quantization noise from DSD).

Q.4 - Why not reduce the resolution of the raw output of a widely disseminated sigma delta ADC, using it as a master recording and distribution file without any estimation filter?

The resolution of the raw output must be low enough to allow its use as distribution file and high enough to avoid playback digital filters, easing the work of playback analog filters.

I think the 6 bit, 2.048 MHz file would be a good compromise to be adopted as standard for all the audio chain (raw ADC output, master recording files and distribution files).

Someone would argue instead: "so why not use 16 bit or 24 bit files as the standard and then just increase the sample rate?"

It seems expensive to manufacture IC ADCs and DACs with large R-2R ladders. 6 bit is definitely the best compromise on both sides (ADC and DAC). It allows mass-produced ICs, but also discrete designs with Z-foils.

I had no response in that thread.

Q.5 - Did I say something really wrong?

Forgive the long post.

Thread resurrection...

Suppose there is no need to stick to a certain media.

Then the audio chain starts with a multi-bit output ADC like the Texas Instruments PCM4222. It has a 6-bit modulator output.

There is no fixed media, but there is still internet bandwidth. So the PCM4222 works at its minimum master clock input: 2.048 MHz.

What is the bandwidth of such file in Mbit per second?

So our code has 6 bits of quantization and a 2.048 MHz sampling rate. Not a novel idea, as it seems like DSD-Wide.
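The bandwidth question can be answered with simple arithmetic: raw bit rate is bits per sample times sample rate times channels. A minimal sketch, assuming tightly packed samples with no framing or padding overhead (the function name is just for illustration):

```python
def bit_rate_mbit_s(bits: int, sample_rate_hz: int, channels: int = 1) -> float:
    """Raw, uncompressed bit rate in Mbit/s, ignoring any container overhead."""
    return bits * sample_rate_hz * channels / 1e6

print(bit_rate_mbit_s(6, 2_048_000))      # 12.288 (one channel)
print(bit_rate_mbit_s(6, 2_048_000, 2))   # 24.576 (stereo)
print(bit_rate_mbit_s(1, 2_822_400, 2))   # 5.6448 (stereo 1-bit DSD at 2.8224 MHz)
print(bit_rate_mbit_s(16, 44_100, 2))     # 1.4112 (stereo 16 bit / 44.1 kHz)
```

So the proposed format needs roughly 12.3 Mbit/s per channel, a bit over four times the rate of 1-bit DSD at 2.8224 MHz.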

That means quantization noise at higher frequencies than 1-bit DSD and Nyquist sampling images way beyond DXD (352.8 kHz), easing the analog filter requirements at both sides (ADC and DAC).
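To see how far up the images move, here is a small sketch that computes the Nyquist frequency and the lower edge of the first image band (sample rate minus the top of the audio band) for three sample rates. The 20 kHz band edge is an assumption, and the helper names are hypothetical.

```python
AUDIO_BAND_HZ = 20_000  # assumed upper edge of the audio band

def nyquist_hz(sample_rate_hz: int) -> float:
    """Half the sample rate."""
    return sample_rate_hz / 2

def first_image_start_hz(sample_rate_hz: int, band_hz: int = AUDIO_BAND_HZ) -> int:
    """Lowest frequency of the first image band, which is centred on the sample rate."""
    return sample_rate_hz - band_hz

for label, fs in (("44.1 kHz", 44_100), ("DXD 352.8 kHz", 352_800), ("2.048 MHz", 2_048_000)):
    print(f"{label:14s} Nyquist {nyquist_hz(fs) / 1e3:8.2f} kHz, "
          f"first image from {first_image_start_hz(fs) / 1e3:8.1f} kHz")
```

At 2.048 MHz the first image of a 20 kHz band starts at about 2.028 MHz, which leaves a very wide transition region for a gentle analog filter.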

The low bit quantization (6-bit) and 2.048 MHz frequency also allow a discrete non-oversampling DAC. I suppose it is possible to use switches with low crosstalk in the 2 MHz region and a lower number of Z-foil resistors in a logarithmic R-2R ladder.

This discrete DAC also allows a voltage output. In other words, no I/V converter or op-amps. Then we need a direct coupled buffer to keep the audio output impedance as low as possible.
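For reference, the ideal transfer function of such a voltage-output ladder is easy to sketch. This assumes a plain linear, binary-weighted, unloaded R-2R ladder with perfect resistors and switches, which is a simplification of the idea above; the function name and the 1 V reference are illustrative only.

```python
def ladder_output_v(code: int, v_ref: float = 1.0, bits: int = 6) -> float:
    """Ideal unloaded output of a voltage-mode R-2R ladder: v_ref * code / 2**bits."""
    if not 0 <= code < 2 ** bits:
        raise ValueError(f"code must be in 0..{2 ** bits - 1}")
    return v_ref * code / 2 ** bits

print(ladder_output_v(1))    # 0.015625 (one step)
print(ladder_output_v(32))   # 0.5      (mid scale)
print(ladder_output_v(63))   # 0.984375 (full scale, one step below v_ref)
```

Any resistor mismatch or loading by the buffer would move the real output away from these values.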

Is this a good idea? Is it feasible? Is it affordable?

If the Z-Foil resistor ladder makes such discrete DAC too expensive, is it possible to use ES9018 with a lower 2.048MHz synchronous master clock (from TPA USB transport) and no oversampling filter?

Is it possible to transmit such 6-bit, 2.048MHz audio code through USB or Firewire?
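On raw numbers alone, a rough comparison with nominal link rates looks like this. The figures in the table are nominal signalling rates (12 Mbit/s for USB full speed, 480 Mbit/s for USB 2.0 high speed, about 400 Mbit/s for FireWire 400); usable throughput is lower in practice, and whether any existing audio class or driver accepts 6-bit samples is a separate question that this sketch does not address.

```python
# Stereo payload of the proposed format, with no framing overhead (assumption).
REQUIRED_MBIT_S = 6 * 2_048_000 * 2 / 1e6

# Nominal signalling rates in Mbit/s; real-world throughput is lower.
NOMINAL_LINK_MBIT_S = {
    "USB full speed": 12,
    "USB 2.0 high speed": 480,
    "FireWire 400": 400,
}

def fits(link_mbit_s: float, required_mbit_s: float = REQUIRED_MBIT_S) -> bool:
    """True if the nominal link rate exceeds the raw payload rate."""
    return link_mbit_s > required_mbit_s

for name, rate in NOMINAL_LINK_MBIT_S.items():
    print(f"{name:20s} {rate:4d} Mbit/s -> {'ok' if fits(rate) else 'too slow'}")
```

On that basis a full-speed USB link would be too slow for stereo, while high-speed USB or FireWire 400 would have plenty of headroom.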

Is it possible to implement a digital volume control using such 6-bit files? Does anyone have any idea?
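One way to see the difficulty: if the volume control simply scales each 6-bit code and rounds back to 6 bits, the number of distinct output levels shrinks quickly. The sketch below is a naive illustration of that, assuming signed codes from -32 to 31; it is not how a real multi-bit modulator would handle it, where one would presumably scale at a longer word length and then re-modulate with noise shaping.

```python
def attenuate_6bit(code: int, gain: float) -> int:
    """Scale a signed 6-bit code (-32..31) and round back to the 6-bit range."""
    scaled = round(code * gain)
    return max(-32, min(31, scaled))

def distinct_levels(gain: float) -> int:
    """How many different output codes survive a given gain."""
    return len({attenuate_6bit(code, gain) for code in range(-32, 32)})

for gain in (1.0, 0.5, 0.1):
    print(f"gain {gain:4.1f}: {distinct_levels(gain)} distinct levels")
```

At a gain of 0.1 (20 dB of attenuation) only 7 levels are left, so a plain multiply-and-round control throws away most of the already small word length.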

Last but not least, is there any computer software to convert DSD and DXD to a 6-bit, 2.048 MHz file while on-line providers do not adopt such format? If they ever adopt such format...

p.s.: I prefer a voltage DAC since I would like to use an electrostatic transducer... That means the whole chain works at voltage mode...

Charles has a valid point about resolution - why are S-D DACs marketed with the high resolutions that they claim for them? Since they all suffer from noise modulation - meaning the noise varies with the signal level - they cannot have a constant resolution. Resolution means the ability to resolve, tell the difference, between two things. But with an S-D this ability to resolve two different input codes depends on signal level. At lower signals, the resolution is higher than at higher levels.

The opamp non-linearity would be a different thing - it produces IMD products which are fairly well characterised in terms of their correlation with signal. S-D noise modulation is not, and the datasheets don't give this information in any form, apart from ESS who indicate how SNR changes with DC offset if I recall correctly. S-D incorporates feedback too - around a much more serious non-linearity than any I've seen in an opamp.

Not really, my comment simply related to the fact that just about every single piece of music will have been sampled with S-D already, so what can a non-S-D DAC do about this problem of which you speak to make these distinct samples again? It's a red herring.

Re the DAC, I know that, but it's still supposed to be R2R according to the claims. I guessed it at the 45 straight after it was released. Not much can operate on such tiny voltage and tiny current without large complexity, which is impossible in a little portable.

Anyway, how about we leave it; nothing is going to change my mind here. My stance is the same as going in, just now it's all been confirmed from the horse's mouth.

So there is a compromise between analog filters, digital filters (with estimation algorithms, at least on the DAC side) and the imbalance between the master recording and the distribution file in terms of resolution, bit depth and sample rate.

What will be interesting is when multibit DSD becomes more available, as in available anywhere apart from a couple of insane hobbyists programming FPGAs.

On a second thought, storage may still be the bottleneck.
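To get a feel for the storage side, here is a minimal sketch of uncompressed stereo file size per minute. It assumes the 6-bit samples are packed tightly (no padding to 8 bits) and ignores any container overhead or lossless compression; the function name is hypothetical.

```python
def megabytes_per_minute(bits: int, sample_rate_hz: int, channels: int = 2) -> float:
    """Uncompressed size of one minute of audio in MB (10**6 bytes)."""
    return bits * sample_rate_hz * channels * 60 / 8 / 1e6

six_bit = megabytes_per_minute(6, 2_048_000)
dsd = megabytes_per_minute(1, 2_822_400)
red_book = megabytes_per_minute(16, 44_100)

print(f"6 bit / 2.048 MHz : {six_bit:7.2f} MB/min")
print(f"1 bit / 2.8224 MHz: {dsd:7.2f} MB/min")
print(f"16 bit / 44.1 kHz : {red_book:7.2f} MB/min")
```

That works out to roughly 184 MB per minute for the 6-bit format, against about 42 MB for 1-bit DSD and about 11 MB for 16/44.1, before any compression.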

On the other hand, PCM formats compress pretty well using lossless compression - have no idea about the compression properties of DSD.

Well, this might sound bizarre, but the tiny Cowon music player of a friend of mine, using a Wolfson DAC and running on battery power, easily outdid a highly tweaked low-cost TT and a reasonably tweaked Quad CD player.

Frank

Thank you for your comment. I think the iphone and some ipods also use a Wolfson DAC. I suspect there are a few factors at play here.

Battery power, reading the data from flash memory, and short track lengths, I think are contributing factors for good sound.

erin:

In response to your surprise at my comment I was going by what had been said in this thread. I had no other information to go on. The information you now draw my attention to would not have changed anything.

The original poster is an engineer. To what level of expertise, no one here will know, but the proof would be in the listening.

I appreciate his observation that there are many people who find even the best engineered delta sigma designs to have a sound quality which annoys or offends.

I unfortunately fall into this category of people, and this is why I was interested in this thread.

A person who has never had any problems with digital sound might have difficulty understanding the problem that "digititis" causes some people.

But if we can trust that this is an issue for some people, and then find out exactly what the cause of it is, and correct the issue, then I think this would make a vast improvement to digital audio for everybody.

Thanks for the suggestion. It looks pretty. I doubt I will ever get to hear one.

Is there anything more affordable that comes even close?

At a simplistic level the problems of delta sigma appear to lie in 2 key areas: they need long conditioning times, of the order of hours before properly coming on song, when started from cold; and, they are very susceptible to interference.

This has been my experience, with units some years old. The latest offerings may be better, perhaps ...

Frank

and your explanation for that is what ??

Trevor, at least in the case of the ES9018/2 this is completely legit, particularly if running a very low DPLL bandwidth setting: they take some time to warm up... weird, I know, but it's true. It happens across commercial and DIY DACs. Some will set the DPLL bandwidth to 'best' to avoid this (this is the default), and in this setting it will simply seek the lowest bandwidth needed to get a stable lock.

Using a lower bandwidth than 'best' is preferred, and during this 'warm up' time it seems affected by ripple on ground, fridges starting up etc., and will drop lock, pause etc. I normally just let it warm up before use if I'm running in asynchronous mode; I haven't tested to see if there are other more subtle audible issues. Running synchronously it's not an issue.

I do listen to equipment for a couple of hours before making a judgement.

My experience is that the Burr Brown PCM58 and PCM1702 both need about 30 minutes to sound good.

The TDA1541 sounds very good straight away, but will improve subtly over a few hours.

Qusp

Is this problem with the ES9018 prevalent on every design incorporating this chip? Do we know the change in temperature etc.?

It sounds like quite an interesting phenomenon, and one (involving integrated circuits) that I haven't heard about for many, many years; I am going back to the mid/late 80's and work that the firm I was with then did in conjunction with Daresbury.

Most circuitry will, if designed properly, reach its operating temperature within a few to tens of minutes and then be stable (depending on ambient temp), but as this is commercial, the temperature difference between homes isn't that much, as we humans like to live within a nice comfy range of temps.

It may not be temp at all; using the term 'warm up' is just habit. I live in QLD, Australia, so it doesn't usually have to warm up, haha. I haven't tried actively heating it. There are lots of factors that seem to contribute, but it seems tied to the grounding most of all. It may just take a little while for the loop to settle down, since jitter at these levels is a pretty long term/low frequency effect.

If you leave it on the default, as many commercial DACs will, it's not an issue unless you've done something silly with your I2S connections, or have a VERY high jitter source and bad system grounding. Some commercial DACs do indeed have the problem. It's only really when you manually set/force the DPLL bandwidth to the lower settings (best jitter rejection). It's worst if you have a direct ground path to the DAC from outside, like with a non-isolated USB->I2S (single ended), or with an MCU/I2C connection, and ripple on ground gets into the system.

glt has done extensive testing and recorded the results.

I repeat, the issue is with us DIYers or manufacturers pushing the boundaries with DPLL bandwidth settings; use the default 'best' setting and it doesn't happen.

My best results when using the lowest DPLL BW have been gained by using Ian's FIFO and connecting the FIFO clock power supply and ground, with a thick wire, to the same star point the onboard clock used.

Mainly I'm using synchronous clocking now though.

Phoenix, I prefer synchronous mode, but it's more different than better compared with a very well done async mode build. I'm sorry, I don't really do describing the sound quality of such things when you and I have different ears, and I can guarantee different gear, probably different taste and different music.

Unless you are going to do it well, with a very low jitter source clock and proper interconnections, I think you are better off staying with async.

I wonder if it's PSU start-up then, or supplies settling.

We did a job, as I said, back in the dim and distant past, and using commercial chips we had similar problems. We solved it by buying military grade ADCs and DACs (they were available and expensive in those days), lovely ceramic packages with gold plated caps etc. Ended up with tubes of the things, cos no one else wanted them. Threw them away about 3/4 years ago when we moved, only to find out later they had a decent scrap value because of the gold...SOB!

I think it's probably more a case of Dustin pushing the boundaries with the DPLL BW settings he made available. When he designed the 9018, coming from the 9008, he made the default DPLL 128x narrower and also allowed manual setting of even more extreme narrow BW.

It could indeed have something to do with the voltage the clock swings around and the voltage the digital core logic swings around settling into equilibrium, made worse if the I2S BCK driver/buffer is external on another PCB and using another ground/voltage again, which was part of my reasoning for connecting the grounds from the single point with thick wire.

If you use the SPDIF inputs you don't get it either, because their 'lowest' is equal to 'best' with I2S.

It's exacerbated by the fact this DAC runs at 1.5MHz internally and we are all pushing the BW down and pushing up the sample rates.

Bitch about the gold; yeah, even with copper these days you almost need to think twice about throwing things away.

Hello qusp,

It's my intention to use a low jitter clock and to do it well.

Just picked up, on another forum, the latest in a discussion thread which essentially bashes Redbook against 128xDSD. This is, from my POV, a nonsense; it's all about the quality of the playback electronics, that's what determines the quality of the heard sound ...

I would like to do an experiment of taking a highest quality, direct to 128xDSD recording, and then, using the best software around, downsample that to RB, then resample that 16/44.1 back up to 128xDSD. Do this exercise 100 times, so we're talking about 100 generations of resampling. Take the last generation copy of the DSD, and then see how many people can differentiate that from the original DSD ...

Why I say this is that I've done a number of experiments of upsampling nominally poor, low bit rate recordings, and every time this markedly improves the perceived quality, on a very ordinary playback setup. This is not because something has been "added" to the sound, but because less high speed processing has to be done to "digest" the digital info on playback, IMO ...

Frank

Well, as just one data point, something like a year ago I did run a blind listening test on another forum with a number of different versions of the same track - resampled at different sample rates, truncated from 24 to 16 bits, and one version even encoded using (high bit rate) mp3. The only consistent result was that the one version that had been amplified by 1 dB was the clear winner - the rest was practically random.
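For scale, a 1 dB level difference is small in amplitude terms, which is why level matching matters in this kind of test. A minimal sketch of the standard decibel conversions (the function names are just illustrative):

```python
def db_to_amplitude_ratio(db: float) -> float:
    """Voltage (amplitude) ratio corresponding to a level change in dB."""
    return 10 ** (db / 20)

def db_to_power_ratio(db: float) -> float:
    """Power ratio corresponding to a level change in dB."""
    return 10 ** (db / 10)

print(f"{db_to_amplitude_ratio(1.0):.3f}")  # 1.122
print(f"{db_to_power_ratio(1.0):.3f}")      # 1.259
```

So the "winning" version was only about 12% higher in amplitude than the others.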

Listener fatigue is the unknown factor, in listening tests.

This is why I prefer AB (two points of comparison) tests rather than ABCDEFG tests. Anyone can become muddled when listening to too many tracks, or equipment. But it is usually easy to declare a preference when comparing only one thing to another.
