Tetrachromacy - Wikipedia, the free encyclopedia

One study suggested that 2–3% of the world's women might have the kind of fourth cone that lies between the standard red and green cones, giving, theoretically, a significant increase in color differentiation.[10] Another study suggests that as many as 50% of women and 8% of men may have four photopigments.[9]

Further studies will need to be conducted...

I was actually expecting you to argue that light doesn't exist

Exist? How would you get there from what I said about sounds? Do show your reasoning.

unless someone actually sees it, just as you argue that there is no sound unless someone hears it.

The corollary of no sound unless someone hears it is that there's no colour unless someone sees it, not that light doesn't exist. I can only conclude that you're not really paying attention.

If a man says something in a forest and a woman's not there to hear it, is he still wrong?

First of all, I trust my own ears more than I trust others. That's a bias. Guilty and unrepentant. Usually means I get the book thrown at me, if the movies are any indication.

Anyway, I'm sure everyone here means "double-blind". A merely "blind" (single-blind) test is biased.
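The distinction matters because in a single-blind test only the listener doesn't know what is playing, while in a double-blind test the person running the session doesn't know either, so they can't give anything away. One way to get there is to let a program draw the hidden assignments and reveal the key only after all answers are in. A minimal Python sketch, assuming an ABX-style test; the function names are hypothetical and not taken from any particular ABX tool:

```python
import secrets


def make_abx_key(n_trials: int) -> list[str]:
    """Draw, for each trial, whether the unknown X is A or B.

    secrets.choice uses the operating system's cryptographic RNG, so
    neither the listener nor the operator can predict the sequence.
    """
    return [secrets.choice("AB") for _ in range(n_trials)]


def score_abx(key: list[str], answers: list[str]) -> int:
    """Count correct identifications; call only after all answers are locked in."""
    if len(key) != len(answers):
        raise ValueError("one answer per trial is required")
    return sum(k == a for k, a in zip(key, answers))
```

In use, the playback software would read the key to decide what X is on each trial, and nobody looks at it until `score_abx` is called at the end. The session length is up to whoever designs the test.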

I'm not sure what the "Scientific Method" has to do with most of the audio testing I've come across. For the most part it's at best a very limited version of the Scientific Method, which is another way of saying "Not The Scientific Method". Plus you have those pesky humans and self-reporting involved, which has been proven unreliable via the Scientific Method. Oh well, we carry on.

Lastly, I don't often find audio tests published in the peer-reviewed literature, although certainly the professional Audio Societies do publish some of them.

But being published in a Peer-Reviewed Journal is no guarantee of accuracy or validity.

The press is fond of sending the most junior, most scientifically illiterate journalist to create a story, usually just a rehash of a press release, trumpeting every little advance in health care that gets a Journal entry. They then throw the report out to the masses, generating some "I told you so"s, "I don't care, I'm not changing my diet"s, and "Why do the [insert evil conspirator] keep these drugs off the market?"s.

Then we look at the reproducibility. In order for a study to be taken seriously, it has to be reproducible and get the same results. Call me crazy, but I don't see much of this in the double-blind audio world. Maybe I'm just not paying attention, but I suspect not.

As for the medical journals alluded to earlier, between 70 and 95% of all Cancer research studies published in Peer Reviewed Journals are not reproducible and therefore invalid. The public still remembers them and grows suspicious, and the conspiracy theories arise. But there's nothing to them; the results are bunk. I suppose I could suspend my disbelief and just go along with the premise that audio component double-blind testing is done with the same rigour as Cancer research.

But I just can't go there. Sorry.

I do find the few scientific studies on audio that I read interesting, as I do opinions found in forums such as this, and the verbiage from the usual cast of equipment reviewers (who are useless until you get to know the individual reviewer's tastes and biases, but remarkably useful after you do).

Plus there are a few people whose audio judgement I trust amongst my circle of friends, acquaintances, and people who work in the business in some role dealing with sound and music, not equipment design. Oh, and I listen to a stupid amount of live amplified and unamplified music.

It's an imperfect system, I know. But it's an imperfect art as well, so they go together somehow in a way that works.


As she wasn't in the forest, she'd better be in the kitchen making me a sandwich and getting me a beer.

Yup.

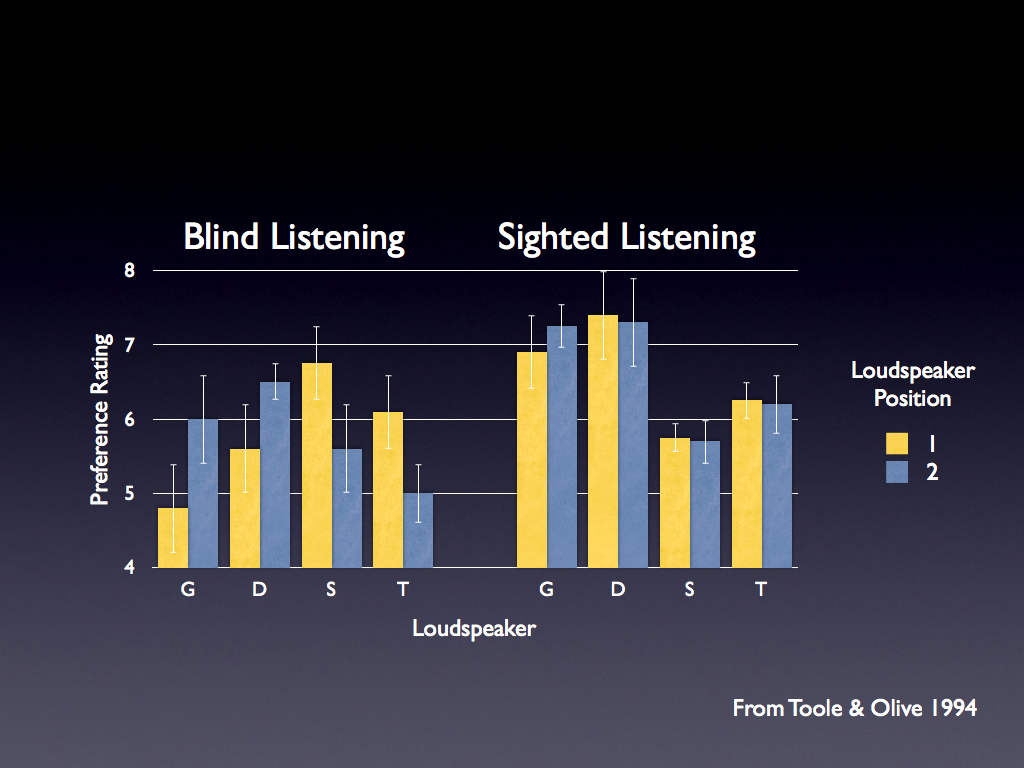

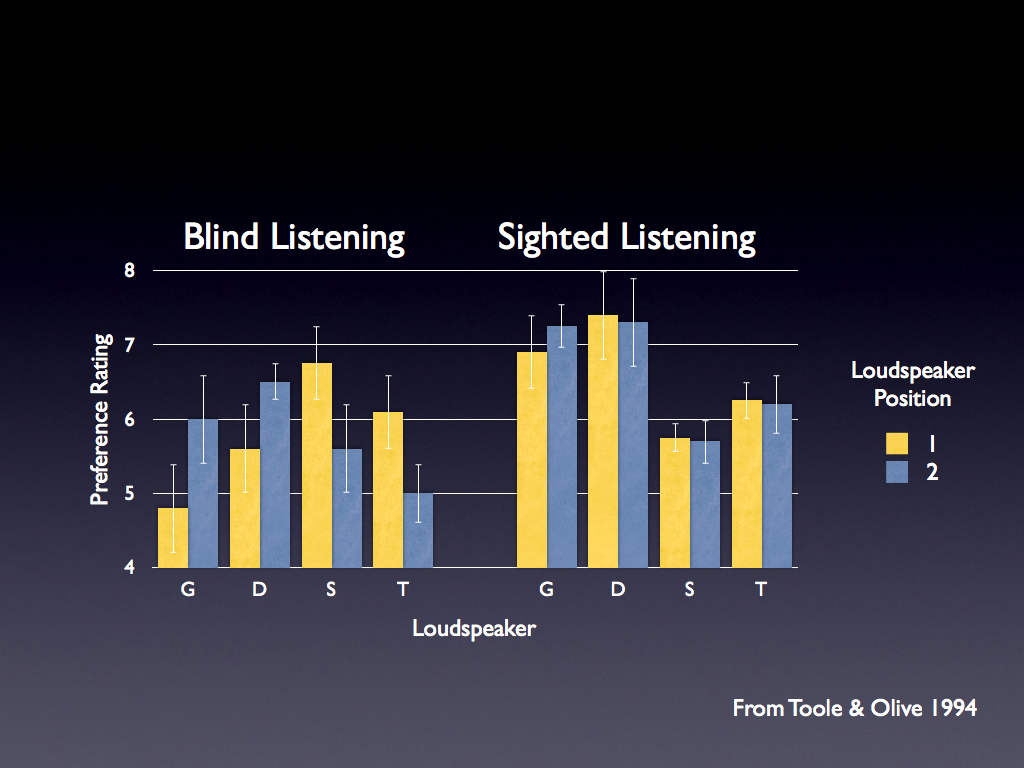

Sean Olive on sighted listening tests


If you had honest and unbiased listeners with highly gifted hearing, then non-blind testing would be just fine.

Dr. Sean Olive conducted a test to specifically disprove your statement above: A Blind Versus Sighted Loudspeaker Experiment

This question was tested in 1994, shortly after I joined Harman International as Manager of Subjective Evaluation [1]. My mission was to introduce formalized, double-blind product testing at Harman. To my surprise, this mandate met rather strong opposition from some of the more entrenched marketing, sales and engineering staff who felt that, as trained audio professionals, they were immune from the influence of sighted biases. Unfortunately, at that time there were no published scientific studies in the audio literature to either support or refute their claims, so a listening experiment was designed to directly test this hypothesis.

...

The scientific method needs just ONE repeatable fact to prove or disprove a theory. I can tell the difference, and I was amazed that my wife can tell it too (she really was a blind tester; she doesn't have a clue what SACD is).

Was this a *double-blind* test? It's difficult, but important, to refrain from drawing conclusions based on uncontrolled tests. Rather, one should keep an open mind to the influence of outside factors (e.g., level matching, different masters, your apparent enthusiasm, or even repressed cues indicating that the SACD was better).
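Level matching is probably the easiest of those factors to get wrong, since a slightly louder version is widely reported to sound "better" rather than just louder. A minimal Python sketch of checking and correcting it, assuming both versions are available as time-aligned lists of float samples at the same sample rate. Plain RMS is the simplest measure; a loudness measure such as ITU-R BS.1770 is a common alternative for music. The function names are hypothetical:

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square value of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def level_difference_db(a: list[float], b: list[float]) -> float:
    """How much louder a is than b, in dB, based on RMS level."""
    return 20 * math.log10(rms(a) / rms(b))


def match_level(a: list[float], b: list[float]) -> list[float]:
    """Return a copy of a scaled so that its RMS level equals that of b."""
    gain = rms(b) / rms(a)
    return [s * gain for s in a]
```

For example, a sine at amplitude 1.0 measured against the same sine at 0.5 comes out about 6.02 dB louder, and `match_level` brings it back to the same RMS before any comparison is made.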

Science is not a "probability", because that "probability" and "average value" just hide our inability to analyze all the possible causes (causality).

Relying blindly on probability is like having one hand on a stove and the other in ice-cold water: on "average", we feel good.

Sampling a population that is half deaf to establish whether CD is better than SACD, and reporting that 50% didn't hear differences, is meaningless from the point of view of the scientific method. ONE person who can consistently prove that they can identify the difference is enough.

As you put it, the 50% that didn't hear any difference could not reliably discern between the figurative ice cold water and the hot stove. But that isn't what was reported in the AES study:

AES Study Abstract said: The tests were conducted for over a year using different systems and a variety of subjects. The systems included expensive professional monitors and one high-end system with electrostatic loudspeakers and expensive components and cables. The subjects included professional recording engineers, students in a university recording program, and dedicated audiophiles. The test results show that the CD-quality A/D/A loop was undetectable at normal-to-loud listening levels, by any of the subjects, on any of the playback systems. The noise of the CD-quality loop was audible only at very elevated levels.
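Some rough arithmetic behind the abstract's last sentence: for an ideal N-bit quantizer and a full-scale sine, the signal-to-quantization-noise ratio is 20·log10(2^N) + 10·log10(1.5), roughly 6.02N + 1.76 dB, which is about 98 dB for CD's 16 bits. A small Python sketch of that calculation; it ignores dither (TPDF dither adds roughly 4.8 dB of noise) and the fact that the noise is spread over the whole audio band, so it only indicates an order of magnitude. The playback level in the example is an assumed figure, not one from the study:

```python
import math


def ideal_sqnr_db(bits: int) -> float:
    """SQNR of an ideal, undithered N-bit quantizer for a full-scale sine.

    Signal power (2**(N-1) * step)**2 / 2 divided by noise power step**2 / 12
    gives 1.5 * 2**(2N), i.e. 20*log10(2**N) + 10*log10(1.5) dB.
    """
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


def noise_floor_spl(full_scale_spl: float, bits: int) -> float:
    """Total quantization-noise level if a full-scale sine plays at full_scale_spl dB SPL."""
    return full_scale_spl - ideal_sqnr_db(bits)
```

With a full-scale sine assumed to play at 110 dB SPL, the total undithered noise sits at about 12 dB SPL, which fits the picture of the noise only becoming audible once the gain is turned well past normal listening levels.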

Others have pointed out that your 'one person' would be an outlier. I, for one, would totally be interested in any single person who could reliably distinguish between CD and SACD, various kinds of cables, etc. But more in a Guinness Book of Records sort of way, not a "we should develop technology to suit this person's needs" kind of way.
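How reliable is "reliably"? For one listener in a forced-choice test (ABX, for instance) where guessing gives a 50% chance per trial, the usual yardstick is the one-sided binomial probability of scoring at least that well by luck alone. A Python sketch using only the standard library; the function names are hypothetical and the 5% threshold is a conventional choice, not something taken from the AES study:

```python
from math import comb


def p_by_guessing(correct: int, trials: int) -> float:
    """One-sided binomial p-value: chance of >= correct hits in trials at p = 0.5."""
    hits = sum(comb(trials, k) for k in range(correct, trials + 1))
    return hits / 2 ** trials


def min_correct(trials: int, alpha: float = 0.05) -> int:
    """Smallest score whose probability under pure guessing is at most alpha."""
    for correct in range(trials + 1):
        if p_by_guessing(correct, trials) <= alpha:
            return correct
    return trials + 1  # no achievable score is significant at this alpha
```

Over 16 trials, `min_correct(16)` is 12, and a score of 14/16 has about a 0.2% chance of arising from guessing. The same arithmetic shows why per-listener analysis matters for the "one person" argument: one listener scoring 16/16 among nineteen others guessing at 8/16 gives a pooled 168/320, whose guessing probability is about 0.2, while that one listener's own score has a probability of 1/65536.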

Valid test methodologies (ITU recommendations)


Back to the subject of the original post, which solicited advice on the development of valid subjective test methodologies!

The ITU has published several recommendations on this subject. Most applicable to DACs is ITU-R BS.1116-3 Methods for the subjective assessment of small impairments in audio systems (PDF).

ITU-R BS.1116-3 said: Contents

...

1. General

2. Experimental design

3. Selection of listening panels

4. Test method

5. Attributes

6. Programme material

7. Reproduction devices

8. Listening conditions

9. Statistical analysis

10. Presentation of the results of the statistical analyses

11. Contents of test reports.

Also included are Attachments containing guidance on the selection of expert listeners and an example of the instructions given to the test subjects.

This test methodology was developed for comparing perceptual audio codecs (lossy formats) against a reference (the original lossless file). In the case of DACs, one could compare the original lossless file (the reference) with copies that have passed through an additional DA-AD conversion (the conditions under evaluation). The ADC should be as transparent as possible, ideally performing an order of magnitude better than the DACs under test.
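BS.1116 uses a "double-blind triple-stimulus with hidden reference" method: on each trial the listener hears the known reference plus two stimuli, A and B, one of which is the reference again (hidden) and the other the condition under test, assigned at random. A Python sketch of how a trial list for the DA-AD comparison could be laid out; the item and condition names are placeholders, and this particular randomization scheme is an assumption rather than text from the Recommendation:

```python
import random
from dataclasses import dataclass

REFERENCE = "reference"  # the original lossless file


@dataclass(frozen=True)
class Trial:
    item: str       # programme excerpt
    condition: str  # e.g. which DAC's DA-AD copy is under test
    a: str          # file played as stimulus A
    b: str          # file played as stimulus B


def build_trials(items, conditions, seed=None):
    """One trial per (item, condition); the hidden reference lands on A or B at random.

    seed is only for reproducible testing; leave it as None in a real session.
    """
    rng = random.Random(seed)
    trials = []
    for item in items:
        for condition in conditions:
            pair = [REFERENCE, condition]
            rng.shuffle(pair)
            trials.append(Trial(item, condition, pair[0], pair[1]))
    rng.shuffle(trials)  # randomize presentation order as well
    return trials
```

For example, `build_trials(["piano", "vocal"], ["dac_1", "dac_2", "dac_3"])` yields six trials, each pairing one DAC's loop recording with a hidden copy of the original, in random order.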

As others have noted, an objection could be made that the ADC is masking the differences between DACs. The inclusion of the original file as reference addresses this concern.
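One way to see how the reference helps: BS.1116 listeners grade both A and B on a continuous 1.0 to 5.0 impairment scale (5.0 meaning imperceptible), and the analysis works with the "diffgrade", the grade given to the condition minus the grade given to the hidden reference. An ADC that was masking things would be expected to show its own impairment as a negative diffgrade shared by every DAC loop; if each loop's diffgrade is indistinguishable from zero, none of the chains, ADC included, was heard as different from the original. A small Python sketch of that summary; the data format is hypothetical, and the 1.96 factor is a normal approximation (a Student t quantile suits small panels better):

```python
import math
import statistics
from collections import defaultdict


def diffgrade_summary(ratings):
    """Mean diffgrade and an approximate 95% interval per condition.

    ratings: iterable of (condition, grade_given_to_condition, grade_given_to_hidden_ref),
    grades on the BS.1116 continuous 1.0-5.0 impairment scale.
    """
    diffs_by_condition = defaultdict(list)
    for condition, grade_cond, grade_ref in ratings:
        diffs_by_condition[condition].append(grade_cond - grade_ref)

    summary = {}
    for condition, diffs in diffs_by_condition.items():
        mean = statistics.fmean(diffs)
        if len(diffs) > 1:
            # Normal approximation to the 95% confidence interval of the mean.
            half_width = 1.96 * statistics.stdev(diffs) / math.sqrt(len(diffs))
        else:
            half_width = math.inf  # a single rating says nothing about spread
        summary[condition] = (mean, mean - half_width, mean + half_width)
    return summary
```

A condition whose whole interval lies below zero was graded worse than the hidden reference; one whose interval straddles zero was not reliably told apart from the original.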
