Noise floor, not signal-to-noise. The integration is important!

Yes, for measurements of static test tones.

To be clear, although he made a fundamental mistake in interpretation, Kunchur's experimental results are solid and have not, to my knowledge, been refuted.

Yes, Kunchur measured experimentally the timing resolution of human hearing. This is the good part of the paper.

Saying that Red Book audio does not reach that timing resolution was wrong.
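Here is a minimal sketch of why the sample spacing does not set the timing limit. It assumes an idealized steady sine and plain 16-bit rounding, with no dither and no filters. A tone sampled at 44.1 kHz is delayed by 5 µs, about a fifth of one 22.7 µs sample period, and the delay is then recovered from the sample values alone. The 4410 Hz tone, the 0.1 s window and the 5 µs shift are illustrative choices, not values from the thread. `sampled_tone` and `estimate_delay` are hypothetical helper names.

```python
import math

FS = 44100      # Red Book sample rate, Hz
F_TONE = 4410   # illustrative test tone; a whole number of cycles fits the window
N = 4410        # 0.1 s window = 441 complete cycles
BITS = 16

def sampled_tone(delay_s, quantize=True):
    """Sample a half-scale sine delayed by delay_s seconds, optionally rounded to 16 bits."""
    full_scale = 2 ** (BITS - 1) - 1
    out = []
    for n in range(N):
        t = n / FS
        x = 0.5 * math.sin(2 * math.pi * F_TONE * (t - delay_s))
        if quantize:
            x = round(x * full_scale) / full_scale  # plain rounding, no dither (assumption)
        out.append(x)
    return out

def estimate_delay(samples):
    """Estimate the tone's delay from its phase by correlating with sin/cos at F_TONE."""
    w = 2 * math.pi * F_TONE
    s = sum(x * math.sin(w * n / FS) for n, x in enumerate(samples))
    c = sum(x * math.cos(w * n / FS) for n, x in enumerate(samples))
    # sin(w t - phi) = sin(w t)cos(phi) - cos(w t)sin(phi), so phi = atan2(-c, s)
    phi = math.atan2(-c, s)
    return phi / w

delay = 5e-6  # 5 microseconds, roughly 0.22 of one sample period
est = estimate_delay(sampled_tone(delay))
print(f"sample period: {1e6 / FS:.2f} us, recovered delay: {est * 1e6:.4f} us")
```

A pure tone is the easiest case; real music is not a steady sine. The point it illustrates is narrower: a shift much smaller than the sample period changes the sample amplitudes, so it is encoded in the data rather than lost between samples.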

There is a lot of wishful thinking among those who feel the need to defend this long obsolete gramophone technology developed in the middle of the 19th century.

There is a lot of wishful thinking among those who feel the need to defend this long obsolete CD technology developed in the late seventies.

It's fine when people prefer vinyl playback.

But saying that vinyl comes closer than digital to the electrical signal coming out of the mastering studio is wrong.

There is a lot of wishful thinking among those who feel the need to defend this long obsolete gramophone technology developed in the middle of the 19th century.

Well, "gramophone technology" has evolved a bit from the middle of the 19th century until now. CD is bound to 16/44 forever.

It's fine when people prefer vinyl playback.

But saying that vinyl comes closer than digital to the electrical signal coming out of the mastering studio is wrong.

Depends on your criteria. Hard bandwidth limit at 20 kHz? Pre-ringing of digital filters (which has no analogy in the real world)?

Increasing distortion with decreasing amplitude (which has no analogy in the real world)? Etc.

Fortunately, nowadays we really can get what comes "out of the mastering studio" (24-bit, 96 kHz or more).

Of course, the high priests of the church of DBT will say there is no difference from CD...


Kunchur was incorrect, and many people with actual training and background in DSP have corrected him in the intervening years; that's the nature of science and its greatest strength.

References? Many people who? What did they do? What did they use? A bare statement cannot refute a published (peer-reviewed) article.

Kunchur was incorrect, and many people with actual training and background in DSP have corrected him in the intervening years...

Yes please bring on the "data".

Quote-mining is a poor substitute for understanding, but perhaps useful in propagandizing. Fortunately, the engineers who designed the CAT scanner that saved my life were more interested in getting things correct than rationalizing nostalgia.

Your quoting is poor as well, because you give no proof! A CAT scanner is not a human being; that is a FUNDAMENTAL mistake!!!


See post 12.

If you do not believe in proper scientific listening tests and draw your conclusions from them, then your mind is free to believe anything it wants...

Yes, "proper" and "scientific" are the key. Unfortunately, such listening tests are quite rare.

Here's 2 from 1984, showing that a consumer level ad/da chain has no audible influence on a "relatively high end" LP playback system.

Boston Audio Society - ABX Testing article

Or do you think Stanley Lipshitz is not a proper scientist and doesn't know what he's doing?

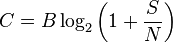

The maximum amount of information in any signal (including vinyl) is given by the Shannon-Hartley theorem:

https://en.wikipedia.org/wiki/Shannon–Hartley_theorem

Timing resolution is a form of information, so this applies here.

If you run the numbers, then the CD format can handle more information than the best vinyl reproduction systems.
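Here is a sketch of "running the numbers" under stated assumptions. It uses the theorem's additive white Gaussian noise model and the textbook 6.02·N + 1.76 dB SNR for an ideal N-bit quantiser driven by a full-scale sine. The vinyl figures (30 kHz bandwidth, 70 dB SNR) are placeholder assumptions, not measurements from this thread. Swap in your own values; the comparison is only as good as those inputs, and it is sensitive to the vinyl numbers you assume.

```python
import math

def capacity_bits_per_s(bandwidth_hz, snr_db):
    """Shannon-Hartley: C = B * log2(1 + S/N), with S/N given in dB as a power ratio."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)

# CD, per channel: bandwidth up to Nyquist, SNR from the ideal 16-bit quantiser formula.
cd = capacity_bits_per_s(44100 / 2, 6.02 * 16 + 1.76)

# Vinyl, per channel: both figures are ILLUSTRATIVE ASSUMPTIONS, not measured values.
ASSUMED_VINYL_BANDWIDTH_HZ = 30_000
ASSUMED_VINYL_SNR_DB = 70
vinyl = capacity_bits_per_s(ASSUMED_VINYL_BANDWIDTH_HZ, ASSUMED_VINYL_SNR_DB)

print(f"CD:    {cd / 1000:.0f} kbit/s per channel")
print(f"vinyl: {vinyl / 1000:.0f} kbit/s per channel (assumed figures)")
```

Note that the logarithm makes SNR count heavily: every extra ~6 dB of SNR adds about one bit per sample's worth of capacity at a given bandwidth.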

I do not agree because you are assuming from the start that a REAL system follows the theorem perfectly. I don't think so. So your conclusion is arbitrary.

Here's 2 from 1984, showing that a consumer level ad/da chain has no audible influence on a "relatively high end" LP playback system.

...

What do you want to "prove" with that?

First, it shows that under the conditions of ABX testing no differences were detected by this (very small) listening panel; deducing from this that "a consumer level ad/da chain has no audible influence on a "relatively high end" LP playback system" is highly unscientific. Actually, this conclusion was not drawn by those conducting the test. It appears that this was merely a test of whether one person (Ivor Tiefenbrun) could reliably identify the presence of the ad/da chain when playing back LPs.

Second, this has nothing to do with differences between 16- and 24-bit resolution and higher sample rates.

...Or do you think Stanley Lipshitz is not a proper scientist and doesn't know what he's doing?...

I would appreciate it if you did not attribute to me sentences I did not say.

To be clear, although he made a fundamental mistake in interpretation, Kunchur's experimental results are solid and have not, to my knowledge, been refuted.

Oh yes! This is a very scientific conclusion.

There is a lot of wishful thinking among those who feel the need to defend this long obsolete gramophone technology developed in the middle of the 19th century.

It's fine when people prefer vinyl playback.

But saying that vinyl comes closer than digital to the electrical signal coming out of the mastering studio is wrong.

You are wrong again, because it's not about defending analog vs digital but just about wishing for a digital source that is EFFECTIVELY better than vinyl. It's quite a different concept! The CD is not better. It is worse.

You might consider that the typical stereo systems people have can make a lot of systematic errors in reproduction, starting from the speakers and their "integration" in the room. If there are such big errors, one might find no difference, or even the contrary, but the result is INconclusive, because one still doesn't know how much is missing relative to the best possible reproduction available.

Yamaha apparently has different people writing different pages: this most recent bout of quote-mining is more about latency than about actual time or phase resolution. The page linked in the closed thread correctly states that the temporal resolution for a recorded and played-back signal using a 48 kHz sampling rate (standard in studios) is on the order of a nanosecond, worst case, and explicitly says that this is inaudible (being a thousand times lower than any experimentally determined human threshold). And, of course, even the very best mechanical recording and playback systems are far worse than this, as anyone who has ever done a spectral analysis of tape or turntables can readily see.
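One rough way to see where nanosecond-and-below figures come from is a back-of-envelope heuristic; this is not the Yamaha page's own derivation. Ask how small a time shift of a full-scale sine still moves its steepest sample by one quantisation step. The sketch assumes a full-scale tone and no dither. Dither lets sub-LSB shifts show up statistically, which makes the real figure finer, while quieter tones give proportionally coarser numbers. `crude_time_step` is a hypothetical name.

```python
import math

def crude_time_step(freq_hz, bits):
    """Time shift that moves a full-scale sine's steepest point by one LSB (no dither).

    LSB q = 2A / 2**bits, steepest slope = 2*pi*f*A, so dt = q / slope = 1 / (pi * f * 2**bits).
    """
    return 1.0 / (math.pi * freq_hz * 2**bits)

for bits in (16, 24):
    for f in (1_000, 20_000):
        print(f"{bits}-bit, {f} Hz full-scale sine: {crude_time_step(f, bits) * 1e12:.1f} ps")
```

Note that under this heuristic the figure depends on bit depth and signal frequency, not on the sample rate.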

The temporal resolution of the clock is not, by definition, the temporal resolution you get in the signal. Several operations are performed in the process, and you certainly cannot take the best case! The temporal resolution has to do with the time evolution of the signal, which instant by instant is composed, in a more or less complicated way, of RELATIVE amplitudes. If you change the relative amplitudes even over a very short interval, as jitter does, this does not mean that you have a signal with that time resolution for a human being! That's why the CAT scanner comparison is a fundamental mistake. You have to see how the relative amplitudes are affected. To make a very basic example, if you change the initial slope of a single instrumental note just a bit and listen to it, the sonic sensation will be different. It will have a different expression! If you then change a more complex signal (i.e. music) a bit, you cannot assume that the result is the same or better. In principle it is worse, because a listener associates a more complex meaning with music than with simple notes. Otherwise it would not be music! You cannot measure this.

FWIW, my digital gear is all 24/96 capable. Some of it will get up to 24/192 on a good day. Unfortunately almost all the music I like happens to be CD quality, the material available at high bit rates is all audiophile waffle.

We trust that the changes made to the waveform by the digital sampling and reconstruction process are the kind that aren't audible. One example is the "pre-ringing" of linear phase digital filters. It freaks out old-school analog people but I don't know of any evidence that it is audibly bothersome. It actually leads to a tighter group delay spec than an equivalent analog ("minimum-phase") filter.
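Here is a minimal sketch of what pre-ringing looks like. It assumes a linear-phase lowpass built as a Hann-windowed sinc with 63 taps and a 20 kHz cutoff at 44.1 kHz; these are illustrative choices, and real DAC oversampling filters are longer and designed differently. Because the taps are symmetric, the impulse response rings as much before its main peak as after it. The same symmetry gives every frequency the same delay of (N − 1)/2 samples, i.e. constant group delay. `windowed_sinc_lowpass` is a hypothetical helper.

```python
import math

def windowed_sinc_lowpass(num_taps, cutoff_hz, fs):
    """Linear-phase FIR lowpass: Hann-windowed sinc, odd length, symmetric taps."""
    assert num_taps % 2 == 1
    mid = (num_taps - 1) // 2
    fc = cutoff_hz / fs  # normalised cutoff, cycles per sample
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        hann = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * hann)
    gain = sum(taps)
    return [t / gain for t in taps]  # normalise to unity gain at DC

taps = windowed_sinc_lowpass(63, 20000, 44100)
mid = len(taps) // 2
symmetric = all(math.isclose(taps[i], taps[-1 - i], abs_tol=1e-12) for i in range(mid))
pre_energy = sum(t * t for t in taps[:mid])      # everything before the main peak
total_energy = sum(t * t for t in taps)
print(f"symmetric taps: {symmetric}, group delay: {mid} samples ({mid / 44100 * 1e6:.0f} us)")
print(f"fraction of impulse energy before the main peak: {pre_energy / total_energy:.3f}")
```

A minimum-phase filter with the same magnitude response would move that energy after the peak, at the cost of a group delay that varies with frequency.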

Some changes are audible, others aren't. There have been some studies done. Also, we can look at the known limits of human hearing in the frequency domain and apply time-frequency duality to get an idea of what the corresponding limits look like in the time domain. The ear drops off sharply at 20 kHz and has a simultaneous dynamic range of about 30 dB, so we know its timing resolution can't be all that great.
