Since there is a lot of confusion on this subject, and I have had to read up on it carefully myself, let's start from what qualified people say about it:

5.9 Temporal resolution | 5) Sampling issues | Audio Quality in Networked Systems | Self Training | Training & Support | Yamaha

"cut....with the human auditory system being capable of detecting changes down to 6 microseconds."

Comment: This is only the smallest value that has been possible to measure so far. It is not a definitive limit!

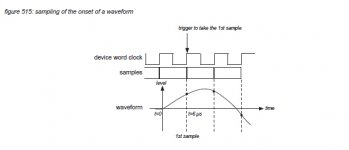

To also accurately reproduce changes in a signal’s frequency spectrum with a temporal resolution down to 6 microseconds, the sampling rate of a digital audio system must operate at a minimum of the reciprocal of 6 microseconds = 166 kHz. Figure 515 presents the sampling of an audio signal that starts at t = 0, and reaches a detectable level at t = 6 microseconds. To capture the onset of the waveform, the sample time must be at least 6 microseconds....cut"

Comment: the attached picture is Figure 515 in the text.

No such issue in the analog domain!

So, confusing temporal resolution with jitter is already a mistake?

In multiple clinical tests, the perception threshold of jitter has been reported to lie between 10 nanoseconds (*5U) for sinusoidal jitter and 250 nanoseconds (*5V) for noise shaped jitter.

Yes, I would say so!

http://www.yamahaproaudio.com/europ...elftraining/audio_quality/chapter5/10_jitter/

"However, apart from the temporal resolution of a digital part of an audio system, the temporal characteristics of the electro-acoustic components of a system also have to be considered. In general, only very high quality speaker systems specially designed for use in a music studio are capable of reproducing temporal resolutions down to 6 microseconds assumed that the listener is situated on-axis of the loudspeakers (the sweet spot). For the average high quality studio speaker systems, a temporal resolution of 10 microseconds might be the maximum possible. "

Comment: Oops! This recalls the point about treating the room as an integral part of the system, and trying to separate its contribution from the direct signal as far as possible, rather than equalizing, which just generates masking (i.e. loss of information, despite apparent boosts where it applies)!

And now something related to recording and live music monitored by means of digital equipment:

Where dynamic range and frequency range of digital audio systems have developed to span almost outside the reach of the human auditory system, time performance is a bottleneck that can not be solved.

5.4 Timing issues | 5) Sampling issues | Audio Quality in Networked Systems | Self Training | Training & Support | Yamaha

Comment: unfortunately music is a "story in the space-time domain!"

Very disappointing from Yamaha; the wording is very poor. Based on what they show, is it your belief that, because CD uses a sampling rate of 44100 samples per second, with each sample 1/44100 seconds = 22.68 microseconds apart, this is the smallest delay that can be represented between two channels?

No, I don't think so. This is not strictly related to delays used for the stereo imaging, although they fall in the few milliseconds range.

There is a paper I posted elsewhere that is more accurate, being the actual experiment, in which headphones are used:

http://www.physics.sc.edu/kunchur/temporal.pdf

There's quite a difference between inter-channel timing resolution in a multichannel system and the timing resolution that can be stored within one channel. In the inter-channel case, sample rate (or channel bandwidth) has nothing to do with it; analog or digital, inter-channel timing resolution is limited by the noise floor of the system. In the one channel case, it is also limited by the bandwidth of the channel, analog or digital.

Here's a simple derivation of the inter-channel case. Assume you have a 1 kHz sine wave and you feed it to two identical converters running at 44.1 kHz. The sample train that will come out of each converter will be substantially the same, plus or minus the noise in each converter. Now, assume we add ~600 feet of cable in series with one of the converters and leave the other alone. This will delay the signal to one converter by about 1 microsecond using typical cable. The sample train coming from the two converters will thus be different, with the delayed samples lagging by about 0.36 degrees (or 0.006 radians). They will both contain a sampled waveform of a sine wave of the same frequency, but there will be a 1 microsecond delay added to one set of samples relative to the other set.

How could you verify this? Well, you could look at the amplitudes of the two samples, and determine the timing difference that would cause the observed voltage difference between the two sets of samples. What's the limit to your resolution here? The S/N ratio of the sampled waveform; if one used a converter with less noise, a finer amplitude difference could be accurately resolved. If the converter noise was too high, it would swamp the small amplitude difference that you'd need to measure in order to identify the inter-channel timing difference.

So, as you notice here, the 44.1 kHz sample rate (or 22.7 microsecond sample period) is irrelevant when determining the timing difference between the two channels - it is solely a function of the resolution limit of the channel, i.e. the channel's signal to noise ratio. In the analog domain, this is limited by the channel's noise floor, and in the digital domain, this is limited by the bit depth of the channel.
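The derivation above can be tried numerically. What follows is only a rough sketch under my own assumptions, not anything from the posts: a 1 kHz tone at 90% of full scale, 16-bit rounding with TPDF dither, and a phase estimate obtained by projecting each channel onto sine and cosine at the tone frequency. All names are made up for the illustration, and it covers the pure-tone case only.

```python
import math
import random

FS = 44_100.0        # sample rate in Hz (CD)
F0 = 1_000.0         # test tone in Hz
DELAY = 1e-6         # inter-channel delay in seconds (the cable in the example)
N = 4410             # exactly 100 cycles of F0 at FS
FULL_SCALE = 32767   # 16-bit signed peak value

def capture(delay, rng):
    """Sample a delayed sine at FS and round to 16-bit integers with TPDF dither."""
    out = []
    for n in range(N):
        t = n / FS - delay
        x = 0.9 * FULL_SCALE * math.sin(2 * math.pi * F0 * t)
        dither = rng.random() - rng.random()   # triangular, +/- 1 LSB (assumed)
        out.append(int(round(x + dither)))
    return out

def phase(samples):
    """Phase of the F0 component, from projections onto sin and cos."""
    w = 2 * math.pi * F0 / FS
    s = sum(v * math.sin(w * n) for n, v in enumerate(samples))
    c = sum(v * math.cos(w * n) for n, v in enumerate(samples))
    return math.atan2(c, s)

def estimate_delay(seed=0):
    """Recover the inter-channel delay from the two quantised sample trains."""
    rng = random.Random(seed)
    reference = capture(0.0, rng)
    delayed = capture(DELAY, rng)
    return (phase(reference) - phase(delayed)) / (2 * math.pi * F0)

print(f"sample period:   {1e6 / FS:.2f} us")
print(f"estimated delay: {estimate_delay() * 1e6:.4f} us")
```

The estimate should land close to 1 microsecond even though the sample period is about 22.7 microseconds. Raising the noise (or lowering the bit depth) in `capture` is what makes it degrade, not the sample rate.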

Input signal to converter does not determine sampling time of converter.

16bits is noise floor about -130dB of full scale.

The samples of delayed channel will all have amplitudes based on the delayed signal. Peak amplitudes of 1kHz signals leaving the DA converters when signal is reconstructed will have same amplitudes, with peaks delayed 1 microsecond. All zero crossing of reconstructed waveforms will be separated by 1 microsecond as well. Noise floor at zero crossing of reconstructed signals determines how fuzzy the signal is and sets range of error.
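To put a rough number on that fuzziness: near a zero crossing a sine of peak amplitude A has slope 2πfA, so a noise level σ turns into a timing spread of about σ / (2πfA). The sketch below assumes a full-scale sine and the usual uniform quantisation noise model (RMS of one LSB divided by √12); both are my assumptions for illustration, and the function name is made up.

```python
import math

def zero_crossing_uncertainty(freq_hz, bits):
    """RMS timing spread of one zero crossing of a full-scale sine, in seconds."""
    amplitude = 2 ** (bits - 1)                  # full-scale peak, in LSB
    noise_rms = 1 / math.sqrt(12)                # uniform quantisation noise, in LSB
    slope = 2 * math.pi * freq_hz * amplitude    # LSB per second at the crossing
    return noise_rms / slope

for bits in (16, 24):
    dt = zero_crossing_uncertainty(1_000, bits)
    print(f"{bits}-bit, 1 kHz: about {dt * 1e9:.3g} ns per crossing")
```

For 16 bits and 1 kHz this comes out on the order of a nanosecond for a single crossing. Averaging over many crossings narrows it further.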

Storing analog signals in analog system is typically done with magnetic tape; hiss of tape is readily captured by 16bit digital system. Fuzziness from tape noise results in wider range of error. Very good records capture some of tape hiss too, but add rumble noise that is a determinant of error, even if signals are sent directly to cutter head instead of tape head.

Jitter due to noise in clock generation of common converters is on order of nanoseconds for cheap converters, and picoseconds for the really good ones. So not much contribution here to timing errors.

Instead of 1kHz sine, pink noise or white noise bandpass limited to within Nyquist frequency for sampling rate can be delayed by 1 microsecond between two channels and all peaks and zero crossings will be shifted by 1 microsecond in reconstructed waveforms.

Estimating timing acuity of hearing is very simple. Physically small broadband source (such as rubbing thumb and fingers together) located directly in front of head has identical path length and HRTF for both ears. Small shift on order of 2 degrees changes path length to each ear, and does very little in terms of change to HRTF of each ear. Motion and shift are easily detected.
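For a sense of scale, the path-length change can be turned into a time difference. This is a crude sketch: it assumes ears 0.18 m apart, sound at 343 m/s, a distant source and no diffraction around the head, none of which comes from the post.

```python
import math

SPEED_OF_SOUND = 343.0   # m/s, assumed room-temperature air
EAR_SPACING = 0.18       # m, assumed distance between the ears

def interaural_delay(angle_deg):
    """Arrival-time difference between the ears for a source angle_deg off centre."""
    return EAR_SPACING * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND

for angle in (1, 2, 10):
    print(f"{angle:>2} deg off centre -> about {interaural_delay(angle) * 1e6:.1f} us")
```

With these assumed figures a 2 degree shift corresponds to roughly 18 microseconds between the ears.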

Ignoring HRTF and using only delay with recorded signals and using headphones or earbuds produces shift in perception. Most loudspeakers produce diffraction, panel radiation and sound reflections from inside from panels, driver motors, and baskets that come back through driver cones and otherwise influence radiation in ways that smear the signals. Add to this superposition of two speaker signals and HRTF of both signals at each ear which need to work out as well so that variation in sound pressure at each eardrum mimics single source and then resolving a phantom image to less than 10 degrees gets really hard.

It is amazing that intensity based stereo works as well as it does.

Multi-way speakers with drivers more than 1/4 wavelength apart at crossover to tweeter are problematic in presenting to both ears as small source, further degrading imaging.

How could you verify this? Well, you could look at the amplitudes of the two samples, and determine the timing difference that would cause the observed voltage difference between the two sets of samples. What's the limit to your resolution here? The S/N ratio of the sampled waveform

The problem I have with this argument is that it only works for sine waves. Sine waves are single frequencies, therefore they extend infinitely in time. They can't ever begin or end, because these are discontinuities that would imply other frequency components.

So this kind of "time resolution" is really just phase resolution, only good for resolving the phase difference between two sine waves. Other studies have shown that allpass filters aren't audible, suggesting that the ear doesn't care too much about these phase differences.

I still believe the time resolution of CD quality digital audio is adequate for the purpose, I just don't think the Shannon-Nyquist type arguments constitute proof. I think the real issue is the tradeoff between aliasing, ringing in high-order anti-alias filters, and high frequency extension. You have to choose a sample rate high enough that none of these effects is audible.

Kunchur and Yamaha are wrong in saying that timing resolution depends on the sample rate. Their fault lies in the fact that they think digital signals are not band limited and that the staircase wave you see in a sample editor is the actual sound wave that the D/A converter outputs.

A very simple demonstration clearly debunks this myth. It's done in this video at 20min 50sec.

D/A and A/D | Digital Show and Tell (Monty Montgomery @ xiph.org) - YouTube
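A similar experiment can be sketched in a few lines. This is not a reproduction of the video, only an illustration under my own assumptions: a Gaussian pulse 100 microseconds wide stands in for a band-limited transient (its energy near 22.05 kHz is negligible), the samples are rounded to 16-bit integers, and the pulse position is estimated as the amplitude-weighted mean time of the samples.

```python
import math

FS = 44_100.0          # sample rate in Hz
SIGMA = 100e-6         # pulse width in seconds (assumed, keeps energy far below FS/2)
PEAK = 0.9 * 32767     # pulse height in 16-bit counts

def sample_pulse(center, n_samples=2048):
    """Sample a Gaussian pulse centred at `center` seconds, rounded to integers."""
    return [int(round(PEAK * math.exp(-0.5 * ((n / FS - center) / SIGMA) ** 2)))
            for n in range(n_samples)]

def centroid(samples):
    """Amplitude-weighted mean time of the samples, in seconds."""
    total = sum(samples)
    return sum(n * v for n, v in enumerate(samples)) / total / FS

base = 1024 / FS                   # a time that falls exactly on a sample
for shift in (0.0, 1e-6, 6e-6):    # all far smaller than the 22.7 us sample period
    est = centroid(sample_pulse(base + shift)) - base
    print(f"pulse moved {shift * 1e6:.1f} us -> estimated {est * 1e6:.3f} us")
```

The point of the sketch is that moving the pulse by a small fraction of a sample period changes the sample values, and the shift can be read back from them. It says nothing about what is audible.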

Kunchur and Yamaha are wrong in saying that timing resolution depends on the sample rate. Their fault lies in the fact that they think digital signals are not band limited

I don't think so.

The maximum amount of information in any signal (including vinyl) is given by the Shannon-Hartley theorem:

https://en.wikipedia.org/wiki/Shannon–Hartley_theorem

Timing resolution is a form of information, so this applies here.

If you run the numbers, then the CD format can handle more information than the best vinyl reproduction systems.
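Running the numbers could look like the sketch below, which applies C = B · log2(1 + S/N). The CD inputs follow from the format (22.05 kHz bandwidth, 16 bits). The vinyl inputs are placeholders I picked purely for illustration, not measurements, so substitute figures you trust.

```python
import math

def capacity_bits_per_s(bandwidth_hz, snr_db):
    """Shannon-Hartley channel capacity C = B * log2(1 + S/N)."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_hz * math.log2(1 + snr_linear)

# CD: bandwidth is half the 44.1 kHz sample rate; SNR taken as 20*log10(2**16).
cd = capacity_bits_per_s(22_050, 20 * math.log10(2 ** 16))

# ASSUMED vinyl figures, for illustration only; replace with measured values.
vinyl = capacity_bits_per_s(20_000, 65)

print(f"CD, per channel:    {cd / 1000:.1f} kbit/s")
print(f"vinyl, per channel: {vinyl / 1000:.1f} kbit/s (assumed inputs)")
```

With these CD inputs the result is essentially 16 bits × 44100 samples per second, i.e. about 705.6 kbit/s per channel.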

Kunchur and Yamaha are wrong in saying that timing resolution depends on the sample rate.

Kunchur was incorrect, and many people with actual training and background in DSP have corrected him in the intervening years; that's the nature of science and its greatest strength.

Yamaha apparently has different people writing different pages; this most recent bout of quote-mining is more about latency than actual time or phase resolution. The page linked in the closed thread correctly states that the temporal resolution for a recorded and played back signal using a 48 kHz sampling rate (standard in studios) is on the order of magnitude of a nanosecond, worst case, and explicitly says that this is inaudible (being a thousand times lower than any experimentally determined human threshold). And, of course, even the very best mechanical recording and playback systems are far worse than this, as anyone who has ever done a spectral analysis of tape or turntables can readily see.

Quote-mining is a poor substitute for understanding, but perhaps useful in propagandizing. Fortunately, the engineers who designed the CAT scanner that saved my life were more interested in getting things correct than rationalizing nostalgia.

16bits is noise floor about -130dB of full scale.

...

? 20*LOG(2^16) = 96.33 dB
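The same arithmetic as a quick check, together with the usual textbook figure for a full-scale sine against uniform quantisation noise (6.02 · bits + 1.76 dB). The function names are just for this sketch.

```python
import math

def step_ratio_db(bits):
    """Ratio of full scale to one quantisation step, in dB: 20*log10(2**bits)."""
    return 20 * math.log10(2 ** bits)

def sine_sqnr_db(bits):
    """Textbook SNR of a full-scale sine against uniform quantisation noise."""
    return 6.02 * bits + 1.76

for bits in (16, 24):
    print(f"{bits} bits: {step_ratio_db(bits):.2f} dB step ratio, "
          f"{sine_sqnr_db(bits):.2f} dB sine SQNR")
```

Either way, 16 bits lands in the high 90s of dB, not at 130 dB.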

Thanks for correcting me.

The Kunchur paper and some Yamaha papers have been used over and over again to attack the "poor timing of redbook". I just did not read the links.
