The latest -what I call a BRIDGE computer- is here now from Intel... it's called Sandy Bridge! or i7 Extreme Edition:

6 cores, 12 threads. Well, it's a start... as a bridge.

Dell will put together a system for you [the Dell Precision T7600]. How much? .... if you have to ask.... [CPU chip is $1K]. Sigh... someday. Thx-RNMarsh

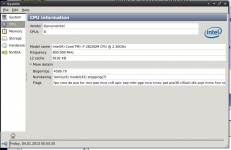

Rather 2 CPUs / 4 threads ..?..

No, I paid for and see 8 threads under Xubuntu

Oops, it has switched down to 2.3 GHz. Does not wait fast enough.

Gerhard

Current x86 CPUs do not resemble their ancestors. x86 code is no longer executed directly; it is translated on the fly into simple RISC-like instructions.

Moreover, the old x86/x87 instruction sets are there only for backward compatibility. Most of the instructions in use are at most ten years old, starting with SSE2 and going up through SSE3/SSSE3/SSE4.1/SSE4.2 and, more recently, AVX. Most are dedicated to math computation, particularly the recent FMA3/FMA4 (available on AMD CPUs, and starting next year on Intel), which perform a floating-point multiply+add in a single operation with no intermediate rounding. That intermediate rounding is what makes a sequentially executed multiply followed by an add less precise.
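
The single-rounding point about FMA can be checked without FMA hardware. The following is only a sketch: `fused_mul_add` is a hypothetical helper that emulates the fused operation by computing `a*b + c` exactly with Python's `fractions` module and rounding once, and the input values are chosen purely to make the difference visible.

```python
from fractions import Fraction

def mul_then_add(a, b, c):
    # Two roundings: the product is rounded to a double, then the sum is.
    return a * b + c

def fused_mul_add(a, b, c):
    # Emulated FMA (not a hardware instruction): form a*b + c exactly in
    # rational arithmetic, then round once when converting back to a double.
    return float(Fraction(a) * Fraction(b) + Fraction(c))

# Illustrative inputs: the exact product is 1 - 2**-60, which is too close
# to 1.0 to be represented as a double.
a = 1.0 + 2.0 ** -30
b = 1.0 - 2.0 ** -30
c = -1.0

print(mul_then_add(a, b, c))   # the product rounds to 1.0 before the add
print(fused_mul_add(a, b, c))  # the single rounding keeps the small term
```

With these inputs the separate multiply and add lose the result entirely, while the emulated fused version keeps it.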

Current CPUs have more than 1000 instructions, compared with the 200 or so of twenty years ago.

Quad-core CPUs and beyond can process more than 100 gigaflops in double precision, and this will be vastly increased in the next few years thanks to GPUs being able to offload massively parallelizable floating-point tasks from the CPU, so I don't think that current PCs are inadequate for scientific calculations.
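
As a rough check on the "more than 100 gigaflops" figure, theoretical peak throughput is just cores times clock times floating-point operations per cycle. The numbers below are assumptions for illustration and are not from the post: a quad-core at 3.4 GHz, with each core issuing one 4-wide AVX add and one 4-wide AVX multiply per cycle.

```python
def peak_gflops(cores, clock_ghz, flops_per_cycle):
    """Theoretical peak = cores x clock (GHz) x FLOPs per cycle per core."""
    return cores * clock_ghz * flops_per_cycle

# Assumed example figures (hypothetical, for illustration only):
# 4 cores, 3.4 GHz, 8 double-precision FLOPs per cycle per core.
quad_core_avx = peak_gflops(cores=4, clock_ghz=3.4, flops_per_cycle=8)
print(f"{quad_core_avx:.1f} GFLOPS")
```

Real code rarely gets near the theoretical peak, so treat this as an upper bound.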

Thanks Wahab, I no longer have any idea on this stuff. The last time I played with this was during a brief stint as a grad student in the 90's.

The FFTW team at MIT claims that some of the instruction set is unpublished and only accessible to Intel's $$$ compilers. Is this true?

I get to buy via corporate discount; the price I got was ~$1500, probably not maxed out on memory (512 GB).

I guess this is a nice fill in conversation, but I still miss the point. There has probably not been a single circuit posted on DIY audio that would have any significant latency for a transient analysis on my somewhat dated laptop let alone my Linux workstation.

At some level the speed makes engineers lazy. I could envision a giant matrix of virtual decade boxes, with thoughtless tweaking replacing thinking. You need breaks to think. Design is not a video game; making it one will destroy the future (people can be weak-willed).

Threading

Micro-Cap 10 uses threading for significant speed improvement where multiple CPU cores are available. When multiple independent analyses are requested, Micro-Cap uses threading technology to speed up the overall analyses by allocating each sub-analysis to a different CPU core. This is beneficial when stepping temperature or parameters, in Monte Carlo Analysis, and with harmonic and intermodulation distortion analysis.

Introducing Micro-Cap 10 - Summer 2010

Test:

I did a transient analysis with 7 steps: time used 29 sec.

When I turned off threading running the same analysis: time used 125 sec.

I'm using an i7 960 CPU @ 3.20 GHz

4 cores, 8 logical processors

I'm using 7 threads for simulation and 1 thread for plotting.

Cheers

Stein
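
The idea of handing each independent stepped run to its own worker, and the speedup from the timings above, can be sketched as follows. This is not Micro-Cap's implementation: `simulate_step` is a hypothetical toy (an RC low-pass driven by a 1 V step, integrated with forward Euler) standing in for one transient run, and the resistor values are made up.

```python
from concurrent.futures import ThreadPoolExecutor

def simulate_step(r_ohms, c_farads=1e-6, t_end=1e-3, dt=1e-6):
    """Toy stand-in for one stepped transient run (hypothetical RC circuit)."""
    v = 0.0
    for _ in range(int(round(t_end / dt))):
        v += dt * (1.0 - v) / (r_ohms * c_farads)  # forward Euler update
    return v

def run_stepped(values, workers):
    # Each parameter value is an independent analysis, so the runs can be
    # handed to separate workers; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(simulate_step, values))

resistances = [100.0 * k for k in range(1, 8)]  # 7 steps, as in the test above
parallel = run_stepped(resistances, workers=7)
serial = [simulate_step(r) for r in resistances]

speedup = 125 / 29        # timings reported above: about 4.3x
efficiency = speedup / 7  # fraction of ideal scaling over 7 threads
print(f"speedup {speedup:.2f}x, efficiency {efficiency:.0%}")
```

In CPython, threads share one interpreter lock, so a CPU-bound toy like this only shows the structure. Swapping in `concurrent.futures.ProcessPoolExecutor` is the usual way to spread such work over real cores. Note that 4.3x on 4 physical cores suggests the extra logical processors are contributing a little.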

Us engineers and scientists always want more accuracy, and thus even though computation power increases over time, so does the need for more accuracy, and thus larger matrices, and of course now we want 3D. I work with Ansys Maxwell in 3D, and I am waiting and waiting and waiting and going home, and in the morning there are some results.

Just take any amplifier on the forum and decrease the time step and you will end up waiting minutes. Necessary? Sometimes.

Or do like I do: THD sweeps where I sweep both frequency and input amplitude. Add to that some temperature sweeps for all the other sweeps and the CPU is starting to get busy.

Or do some fancy Monte Carlo sims where you vary a few parameters for every BJT in an op-amp and want high-accuracy distortion values in a noisy circuit, which needs large FFTs to get the noise down.

/S.
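
To put rough numbers on the sweeps and FFT sizes mentioned above, here is a small sketch. The sweep sizes are hypothetical, and the `10*log10(N/2)` processing-gain rule assumes white noise and a real-valued input signal.

```python
import math

def total_runs(n_freq, n_amp, n_temp):
    # Nested sweeps multiply: one transient run per combination.
    return n_freq * n_amp * n_temp

def fft_processing_gain_db(n_points):
    # For white noise, the per-bin noise floor of an N-point FFT sits about
    # 10*log10(N/2) dB below the total noise power.
    return 10.0 * math.log10(n_points / 2)

runs = total_runs(n_freq=10, n_amp=10, n_temp=5)  # hypothetical sweep sizes
gain_64k = fft_processing_gain_db(65536)
gain_1m = fft_processing_gain_db(1048576)
print(runs, round(gain_64k, 1), round(gain_1m, 1))
```

Going from a 64k-point to a 1M-point FFT lowers the per-bin noise floor by about 12 dB, at 16 times the simulated data per run.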

I know.

I also use MC10 Pro. Excellent software!

But running only one TRAN (no stepping) on one circuit is where I want more speed, and I do not see any speed improvement from using many cores. Scott's Linux system has that speed increase.

Intel sells some parallel computing math libraries. Not sure if MC10 uses the Intel compiler, though.

I don't think this is about the instruction sets, which are public, but rather about discriminatory instruction selection within the Intel compiler.

When compiling code, a compiler can create several subroutines that provide different paths for given parts of the code, since only the most recent CPUs have the most recent instructions; the others are fed older instructions.

Now, it is well documented that the Intel compiler in some instances creates subroutines that select the instructions for a given task not from the CPUID feature flags, which would allow use of the most efficient instructions available in a CPU, but by checking the CPU manufacturer.

If the CPU reports GenuineIntel, it is given a more efficient code path than a CPU from another vendor. That is, the code won't use the most recent instructions on a non-Intel CPU even if that processor supports those instructions, and I think this is what the MIT guys are talking about.

Any software compiled with ICC will contain those subroutines, although it has been demonstrated that ICC produces relatively good code for AMD CPUs, though undoubtedly not the most efficient, even if only by a few % in final execution speed.
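
The difference between the two dispatch strategies can be sketched like this. It is only an illustration, not Intel's actual dispatcher: the function names, the `PATHS` list and the `"generic"` fallback are hypothetical, and the CPU description is parsed from Linux `/proc/cpuinfo`-style text rather than read with the CPUID instruction.

```python
def cpu_info(cpuinfo_text):
    """Parse the vendor string and feature flags from /proc/cpuinfo-style text."""
    vendor, flags = "", set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        key = key.strip()
        if key == "vendor_id" and not vendor:
            vendor = value.strip()
        elif key == "flags" and not flags:
            flags = set(value.split())
    return vendor, flags

PATHS = ["avx", "sse4_2", "sse2"]  # hypothetical code paths, fastest first

def dispatch_by_features(vendor, flags):
    # Fair dispatch: pick the fastest path the CPU says it supports.
    for path in PATHS:
        if path in flags:
            return path
    return "generic"

def dispatch_by_vendor(vendor, flags):
    # Vendor-gated dispatch: non-Intel CPUs get the fallback regardless of flags.
    if vendor != "GenuineIntel":
        return "generic"
    return dispatch_by_features(vendor, flags)

sample = "vendor_id\t: AuthenticAMD\nflags\t\t: fpu sse sse2 sse4_2 avx\n"
vendor, flags = cpu_info(sample)
print(dispatch_by_features(vendor, flags), dispatch_by_vendor(vendor, flags))
```

On the sample AMD description, the feature-based dispatcher picks the AVX path while the vendor-gated one falls back, even though the flags are identical.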

Maybe a better observation: I spent 95-99% of my time examining the output of sims in data post-processing tools. This is DIY, not our day jobs.

Intel have had legal trouble over this. Not exactly what you'd call sportsmanship.

Agner's CPU blog - Intel's "cripple AMD" function

Intel C++ Compiler - Gentoo Linux Wiki

Yes, emphatically agree. Thinking is key, being with a problem. And it is maddening to mediocre technical managers not to be able to somehow see that an employee is working, that progress is being made. My father got exasperated at me when I was working on a design because I sat at a desk and drew schematics, but sometimes just sat there and thought about things. He was very partial to circuit compendia like those doorstop collections edited by Graf and others. And at the bench he did love decade boxes.

I've thought that one of the most curious aberrations in the direction of supposedly eliminating thought was "genetic" programming. I'm not sure how well it's doing lately, but it was heralded as the next big thing for a while. I can still recall the picture of one of the proponents, sitting at his desk with a grin that spoke volumes about his smug self-satisfaction.

The self-satisfied guy at the desk was Koza. I remembered the name when I read the wiki on GP.

One of the "triumphs" I recall claimed was a high-order filter. In the wiki, electronic designs are mentioned but with no clickable links.

There is this creative-commons free reference: A Field Guide to Genetic Programming

Yes, Thinking is key, being with a problem.

I totally agree.

Personally I always use paper and pencil (and calculator) when I'm working with a new circuit, before I simulate the circuit.

Stein

I used to have an office where I could put my feet up on the desk when I was working (thinking). I did this frequently... leaning back in the chair with my feet on the desk. That picture apparently wasn't a good one for those passing by the door. I soon guessed (with help) that you had to "look" like you were working..... thus came the Look Busy phenomenon that has taken over. You know --- God's coming! Look Busy! -RNM

Nothing will drive a mediocre technical manager to suicide faster than doing a lot of thinking at the beginning of a large project. A large project which has a funding time limit... a drop-dead date, when the project must be completed and working properly. There you are doing 'nothing' (but planning, solving problems and getting organized, writing sole-source procurement reasons, prioritizing, contracts, etc.). Meanwhile time is passing by and that manager is going to get on you to take some 'action.' But when your project does really launch... it goes fast and smooth and you look like a miracle worker in that poor manager's eyes. And he looks good, too. -RNMarsh

I need to get more exercise in the winter.

Does Running Late count as an exercise?

A joke, I get it!

I know I am cryptic at times (OK, a lot of the time). I mean, if we could wean the average Joe citizen off Microsoft Windows and over to Linux, we would stand a chance of doing better by allowing for a new cpu archit... one which would be more like a mini-computer. Just how do you turn the big Windows/Intel uPC ship around? Or are there alternatives (mini-cpu) that are affordable, PNP, for personal use that I don't know about? What cpu do you use -- and what software?

I used to have -in my home- an LSI-11 (RT11 OS) with maxed-out memory and drives of the time..... math coprocessor and the works... efficient code and less operating overhead made it do FFTs very fast. I used DADiSP software at home ... still available for PC too. Look it up. Superfast -- I could change part values on the schematic and see the Bode plot etc. change in real time in the other window. In fact I could have a dozen windows open on the display screen and see the effect in each window's plot of whatever change was made in one window. You don't need a tonne of memory to do this, just efficient code and a cpu designed for number crunching (e.g. 'scientific'). And it didn't use Windows or Linux... all machine code.

BTW - these number-crunching machines are misleading when you compare the memory size needed to get work done with today's x86-derivative machines. The many layers of code and the switching of data in and out of memory are the best reason to change over to SSD. You'll think you just got hooked up to a mini-cpu machine. Really. Thx-RNMarsh

There is no reason that you cannot run machine code on an X86 box

Some of the engineering software used on the PC uses only the GUI, and under it all a separate OS is running the show, or it is in machine language. Such as DADiSP, which I mentioned. Originally, DADiSP ran under DOS while the front end looked like whatever Windows the machine came with. Seems the more you can get rid of the Windows OS et al., the faster everything becomes.

Hardware and software improvements will optimize what we have to work with. Then add SSD.

Thx-RNMarsh
