^^^ This is IMO what a good stereo is capable of at best. ^^^

Agree

Audio engineers: Enemies of Our Ears

While I'm near the last to credit the current crop of audio engineers with safeguarding this generation's musical heritage, "The majority of audio engineers are deaf or nearly deaf in the most sensitive region of human hearing, the frequency band between 1.5 kHz and 5 kHz" is the dumbest comment on the subject I've read in a very long time. Granted, I got no further into the article.

I do have a question, which is: when the students are listening to the instruments and comparing them to the speaker, although they are hearing the same variation (difference), are they all hearing the same things?

Take this analogy: a group of students is comparing a painting to a photographic reproduction. They all have different vision without artificial correction. Although they can all spot the same difference, they are actually all comparing different artworks to different photographs (the difference being the same degree for both). This is my only concern.

I doubt it; and I doubt the students are hearing the same thing as the old codgers, either, by training or physiology. I'm not a psychologist, but have read some of the literature on babies learning not to hear, and nerve stimuli to sounds not used in a particular language (such as the theta in English, which gets a certain level of reaction in English or Spanish speakers, but not in French or German). The nerves from the inner ear are actually carrying lower level impulses, not merely (merely!) the brain filtering them out.

But as long as the students heard no difference, it doesn't matter about what exactly they are experiencing, what counts is the identicalness (the perfectly good word "identity" seems to have been hijacked to another meaning).

The test wasn't perfect; people off to the edges of the group were getting different results to those in the centre, demonstrating that directionality has its part to play in the affair, but I spent my entire afternoon in the room, ignoring other conferences which I had intended to attend, finally having to be ejected when they wanted to lock up (and even then going to the bar with the hard core).

Almost as interesting were the demonstrations of 16 bit, 24 bit, 48, 96 and 192 kHz (for the person earlier on in the thread insisting that the "k" for "kilo" should be upper case, it isn't. "M" for mega is (to distinguish it from "milli" - or µ for micro) but not k), analog 30 ips (76 cm/sec), delta mod and PCM, where the musicians joined us when they weren't required as references, and the difference in the way they experienced sound. Actually, it is a wonder that a violinist, with that density of high harmonics perched on a shoulder, can hear any highs in that ear at all.
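For anyone wanting numbers to go with the formats named above, here is a minimal sketch of the textbook figures, not anything measured at that demonstration. It assumes an ideal quantiser driven by a full-scale sine (the usual 6.02 N + 1.76 dB rule) and ignores dither, converter noise and filter behaviour; the function names are made up for illustration.

```python
import math

def pcm_dynamic_range_db(bits: int) -> float:
    """Theoretical SNR of an ideal N-bit quantiser with a full-scale sine.

    Equivalent to the familiar 6.02 * N + 1.76 dB rule of thumb.
    """
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

def nyquist_khz(sample_rate_khz: float) -> float:
    """Highest frequency representable at a given sample rate (half the rate)."""
    return sample_rate_khz / 2

def ips_to_cm_per_s(ips: float) -> float:
    """Convert tape speed from inches per second to centimetres per second."""
    return ips * 2.54

if __name__ == "__main__":
    for bits in (16, 24):
        print(f"{bits} bit: about {pcm_dynamic_range_db(bits):.1f} dB theoretical range")
    for fs in (48, 96, 192):
        print(f"{fs} kHz sampling: content up to {nyquist_khz(fs):.0f} kHz")
    print(f"30 ips = {ips_to_cm_per_s(30):.1f} cm/s")
```

Real converters and real tape fall short of these ideal figures, so treat them as upper bounds rather than as what was audible in the room.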

But what I am claiming is that, even if I, who could once point out bats, can no longer detect anything above 15 kHz without emulsifying the colloids in my brain, and a fifteen year old who's never been to a heavy metal concert, let alone mixed monitors for it, might hear very different things, there is a reference, an absolute reality which might not yet be entirely measurable, but isn't totally imaginary either.

Hearing is the first topic covered in any professional or academic sound engineering course.

Possibly – I date before professional courses, back to apprenticeship days. But when I'm an expert in the AES Exams here, I don't try to judge anyone's hearing, just the results, and knowledge of the techniques to obtain those results. And the written exams might include Fletcher/Munson curves but are more likely to contain jitter or quantisation error. Listening can be learnt, but I'm not certain it can be taught.

No, they won't. The reproduction is accurate if the audio waveform is isomorphic with the electrical signal from the source. What the listener may hear has nothing to do with it.

If you consider the reproduction chain to be: source/amp(electric) -> speaker(mechanical), then I fully agree with you. It is accurate.

Like I said earlier for me the reproduction chain is recording (mechanical to electrical) -> playback (electrical to mechanical) -> hearing/measuring (mechanical to electrical). In this chain "an audio waveform isomorphic to the electrical from the source" only increases precision.

While I'm near the last to credit the current crop of audio engineers with safeguarding this generation's musical heritage, "The majority of audio engineers are deaf or nearly deaf in the most sensitive region of human hearing, the frequency band between 1.5 kHz and 5 kHz" is the dumbest comment on the subject I've read in a very long time. Granted, I got no further into the article.

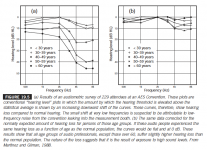

From Toole, "Sound reproduction":

High Fidelity is a very loose term

I understand it as a term for accurate and precise transformation of mechanical and electrical energy.

A "live" trombone has a whole array of different sounds. It has one sound on a theater stage, one sound in a closet, one sound in a cave, etc. The sound of it also differs depending on your location relative to the instrument.

Objectively speaking, unless the microphones used to record the instrument were right next to your ears at the actual recording location, you can't really judge objectively whether the reproduction is true to the live sound. What you can do is compare the sound of the reproduction with a similar one heard in a different scenario. Again, this addresses precision, and the topic is accuracy.

I agree that our brain is a vastly powerful processor and I do not really know much about it. Perhaps it has very powerful precision and accuracy correction processors.

Perhaps leaving more for the brain to process is better than using analog/digital methods - but if we truly believe that, why are we even messing with speaker design?

You missed the point! A proper recording sounds like the instrument. You're changing that sound, so the recording will not match the real instrument. If you never listen to live music you don't have to worry.

(for the person earlier on in the thread insisting that the "k" for "kilo" should be upper case, it isn't. "M" for mega is (to distinguish it from "milli" - or µ for micro) but not k)

No, I wasn't insisting; it just occurred to my mind at that moment, and I wasn't even so sure, so...gotcha !!

Just some respect for the 'founders' of this highly techy discipline...

Bell, Ohm and so on...

From Toole, "Sound reproduction":

Even with such extreme HF loss, they still use the same ears to hear real sounds in everyday life, and thus still have the same reference as young people with undamaged hearing.

I can't remember the last time I heard a lawnmower next door, or a garage door slam, and thought "someone needs to turn up the treble".

Hmm! Anybody use tone-controls anymore?

Yes! Audyssey Dynamic EQ.

Even with such extreme HF loss, they still use the same ears to hear real sounds in everyday life, and thus still have the same reference as young people with undamaged hearing.

Yes and no. Our hearing is in constant training mode, so it calibrates itself to changes in low-level hearing processes. But if that were the whole story, why do hearing aids exist (and work)?

Even with such extreme HF loss, they still use the same ears to hear real sounds in everyday life, and thus still have the same reference as young people with undamaged hearing.

I can't remember the last time I heard a lawnmower next door, or a garage door slam, and thought "someone needs to turn up the treble".

I agree wholeheartedly. That said, I can also see some merit to the argument. If an old surgeon had shaky hands, maybe he should get it fixed (if possible) or just retire. An old recording engineer may want to get hearing aids if he wants to continue working.

It seems like much ado about nothing. Interesting topic though.

From Toole, "Sound reproduction"

Are you confusing the two types of 'audio engineers'? AES isn't the typical venue for music mixers. Nor are they typically 50+. Nor do those graphs meet the definition of functionally deaf between 1.5 and 5 kHz. Other than that.....

Other than that.....

...it is objective data whilst you and the other guy simply speculate.

You missed the point! A proper recording sounds like the instrument. You're changing that sound, so the recording will not match the real instrument. If you never listen to live music you don't have to worry.

I propose this scenario:

During a recording: The singer has an emotional moment and the upper midrange and high frequencies of her voice are slightly amplified. The cymbal player feels this and begins to play his instrument differently. He begins to play it lighter, thus changing the frequency curve of his instrument.

The sound engineer does not adopt the philosophy of accounting for his individual hearing, but he has a 20 dB hearing loss at 8 kHz, getting worse above that. In fact, he doesn't even measure his hearing, so he doesn't know. From his "hearing and experience", he feels the cymbal is not as clear; he believes this may be caused by cancellation or muddying from the trumpet. He starts to tweak the volume of the cymbal track for increased clarity.

A music lover buys the music and plays it back on his system. This music lover has only a slight loss in the high frequencies but a large dip in the midrange from years of listening to loud jazz vocals. He listens to the album and loves it! IT SOUNDS GREAT! The instruments are so clear and just the way he remembers them at his local jazz bar. The voice of the female vocalist is so clean and "steady" it sends shivers down his spine.

What is wrong with this picture? ABSOLUTELY NOTHING!! This is the kind of moment I enjoy all the time! However, outside of pure enjoyment, what do I feel:

The music lover does not know about his hearing loss in the midrange, nor do his speakers account for it; therefore he never got a chance to experience the slight rise in upper midrange that is a result of passion. In fact he hasn't experienced this for YEARS due to his hearing, so he feels this is the accurate representation. As for the cymbal, due to the sound engineer, the music lover does not have a chance to experience that harmony between the vocalist and percussion player. He believes what he heard sounds "live" when compared to the sounds of the instruments in the local jazz bar playing the same song, and indeed it does! But unfortunately what he hears on that day is not an accurate representation (my own subjectivity) of the "live" that occurred on that particular recording.

As for the cymbal, due to the sound engineer, the music lover does not have a chance to experience that harmony between the vocalist and percussion player. He believes what he heard sounds "live" when compared to the sounds of the instruments in the local jazz bar playing the same song, and indeed it does! But unfortunately what he hears on that day is not an accurate representation (my own subjectivity) of the "live" that occurred on that particular recording.

I worked with Prince, who also did much of his own engineering before and after he became known as the symbol.

He liked to hear the ride cymbal in the stage right side fill, and the hi-hat and crash cymbals in the stage left side fill.

Now that most of the high end performers use in-ear monitors, only the artist and their monitor engineer know what they are hearing.

A lot of what performers want to hear tends to rip the cilia right off the inner ear. Of course, with in ear monitors, feedback is not a problem, so performers can turn it up louder and louder to compensate for the hearing damage done.

Conversely, in ear monitors allow as much as 30 dB of attenuation of external noise, and can allow for listening at a low level in a loud environment.

That seldom seems to happen...

Art
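To put the decibel figures in this exchange in perspective (the 30 dB of in-ear attenuation above, and the 20 dB hearing loss in the earlier scenario), here is a small sketch of the standard decibel conversions. It says nothing about perceived loudness, which does not scale linearly with either ratio; the function names are only illustrative.

```python
def db_to_pressure_ratio(db: float) -> float:
    """Sound-pressure (amplitude) ratio for a level difference in dB."""
    return 10 ** (db / 20)

def db_to_power_ratio(db: float) -> float:
    """Power (intensity) ratio for a level difference in dB."""
    return 10 ** (db / 10)

if __name__ == "__main__":
    for db in (20, 30):
        print(
            f"{db} dB: pressure x{db_to_pressure_ratio(db):.1f}, "
            f"power x{db_to_power_ratio(db):.0f}"
        )
```

So 30 dB of isolation means the outside sound reaches the ear at roughly one thirty-second of the pressure, which is what makes low-level monitoring possible in principle.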

rdf said: Are you confusing the two types of 'audio engineers'? AES isn't the typical venue for music mixers. Nor are they typically 50+.

While I was PAing touring bands I was a member of the AES. Previous to that, while working for Tannoy, I was already a member, and I continued while retreating into the studio and mixing music there, frequently at higher levels than are customary in halls.

Most of the music mixers I come in contact with, it is through the AES, either at local meets or conventions.

Marcus, are the 30, 40, 50 etc. years the age of the engineer, or the number of years he has been in the business? (I sort of vaguely remember having done that test.)

dangwei; yes, it is unfortunately possible that I have used my own judgement on a recording and dropped the piano during a whispered vocal line, or something similar, spoiling the dynamic of a performance. (It is unlikely I would ride a cymbal, but a brushed snare?). Certain that I've reduced the dynamic range of performances, bringing up the quiet passages and dropping the crescendi, not respecting the original performance, sometimes for the recording medium, others for the probable reproduction; if the recording is to be played back in a cardboard-walled apartment you can't use a 45 dB dynamic range, however impressive it might have been in the original venue (for the people at the front, anyway. The ones round the walls, less so). To a certain extent the engineer becomes a member of the band during the period of the recording, and like any of the musicians he can make a mistake. But the musicians' hearing is compromised, too; unless the percussionist only plays with that vocalist, he's spending hours surrounded by resonating metal; that doesn't do any good for high frequency perception, either. The magic does not require perfection, it transcends perfection, for that instant. Sorry he boosted the highs on that cymbal, but the fridge motor cutting in and starting all the rivets sizzling in the china type is much more obvious, and just as irrelevant if the performance had what it takes.

chrispenycate and weltersys: Thanks for the real-life insight again! I am very glad to have made a post and to hear the expertise of the many knowledgeable minds that exist on this forum.

Hypothesis: Perhaps this is one of the reasons why my friends and I find "flat frequency response in the high range" speakers very annoying and difficult to listen to for extended periods. Our hearing is not as damaged in the higher frequencies as that of musicians, so what is comfortable to the artist is actually a bit "too loud" for us in the higher frequencies. This may be one of the reasons why many prefer a roll-off in the treble of their speakers and why I can enjoy the emit-r tweeter in the kappas.

This leads to an interesting dilemma:

If a slightly green color blind artist attempts to paint a scene of a Parisian sunset for realism, does he adjust his colors to account for his green color blindness, or does he paint for realism as his own eyes see it? If he corrects for his green color blindness, how can he know whether the result is more or less realistic?

At the same time, if an art collector/lover/appreciator with red color blindness looks at a painting, does he use a color correcting lens to see the original representation? If he does, then the sunset in the painting does not look like the sunset he sees every day without the lens.

The beauty of DIY is that you can make the choice (in the second half).

...it is objective data whilst you and the other guy simply speculate.

While you find comfort measuring the wrong thing.
